AMAZON multi-meters discounts AMAZON oscilloscope discounts

Although the DFT is the most straightforward mathematical procedure for determining the frequency content of a time-domain sequence, it's terribly in efficient. As the number of points in the DFT is increased to hundreds, or thousands, the amount of necessary number crunching becomes excessive. In 1965 a paper was published by Cooley and Tukey describing a very efficient algorithm to implement the DFT [1]. That algorithm is now known as the fast Fourier transform (FFT). Before the advent of the FFT, thousand-point DFTs took so long to perform that their use was restricted to the larger research and university computer centers. Thanks to Cooley, Tukey, and the semiconductor industry, 1024-point DFTs can now be performed in a few seconds on home computers.

Volumes have been written about the FFT, and, like no other innovation, the development of this algorithm transformed the discipline of digital signal processing by making the power of Fourier analysis affordable. In this section, we'll show why the most popular FFT algorithm (called the radix-2 FFT) is superior to the classical DFT algorithm, present a series of recommendations to enhance our use of the FFT in practice, and provide a list of sources for FFT routines in various software languages. We conclude this section, for those readers wanting to know the internal details, with a derivation of the radix-2 FFT and introduce several different ways in which this FFT is implemented.

[Actually, the FFT has an interesting history. While analyzing X-ray scattering data, a couple of physicists in the 1940s were taking advantage of the symmetries of sines and cosines using a mathematical method based on a technique published in the early 1900s. Remarkably, over 20 years passed before the FFT was (re)discovered. Reference RI tells the full story. ]

1. RELATIONSHIP OF THE FFT TO THE DFT

Although many different FFT algorithms have been developed, in this section we'll see why the radix-2 FFT algorithm is so popular and learn how it's related to the classical DFT algorithm. The radix-2 FFT algorithm is a very efficient process for performing DFTs under the constraint that the DFT size be an integral power of two. (That is, the number of points in the transform is N = 2k, where k is some positive integer.) Let's see just why the radix-2 FFT is the favorite spectral analysis technique used by signal-processing practitioners.

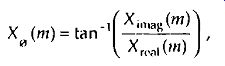

Recall that our DFT Example 1 in Section 3.1 illustrated the number of redundant arithmetic operations necessary for a simple 8-point DFT. (For ex ample, we ended up calculating the product of 1.0607 • 0.707 four separate times.) On the other hand, the radix-2 FFT eliminates these redundancies and greatly reduces the number of necessary arithmetic operations. To appreciate the FFT's efficiency, let's consider the number of complex multiplications necessary for our old friend, the expression for an N-point DFT,

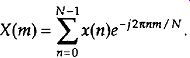

(eqn. 1)

For an 8-point DFT, Eq. (eqn. 1) tells us that we'd have to perform N2 or 64 complex multiplications. (That's because, for each of the eight X(m)s, we have to sum eight complex products as n goes from 0 to 7.) As we'll verify in later sections of this section, the number of complex multiplications, for an N-point FFT, is approximately

- N/2 log 2 N 2 (eqn. 2)

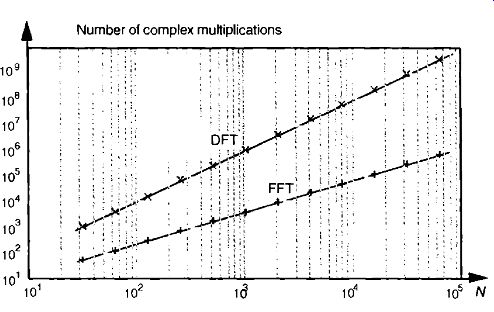

FIG. 1 Number of complex multiplications in the DFT and the radix-2 FFT

as a function of N.

(We say approximately because some multiplications turn out to be multiplications by +1 or -1, which amount to mere sign changes.) Well, this (N/2)log2 N value is a significant reduction from the N2 complex multiplications required by Eq. (eqn. 1), particularly for large N. To show just how significant, FIG. 1 compares the number of complex multiplications required by DFTs and radix-2 FFTs as a function of the number of input data points N. When N = 512, for example, the DFT requires 200 times more complex multiplications than those needed by the FFT. When N = 8192, the DFT must calculate 1000 complex multiplications for each complex multiplication in the FFT! Here's my favorite example of the efficiency of the radix-2 FFT. Say you perform a two million-point FFT (N = 2,097,152) on your desktop computer and it takes 10 seconds. A two million-point DFT on the other hand, using your computer, will take more than three weeks! The publication and dissemination of the radix-2 FFT algorithm was, arguably, the most important event in digital signal processing.

It's appropriate now to make clear that the FFT is not an approximation of the DFT. It's exactly equal to the DFT; it is the DFT. Moreover, all of the performance characteristics of the DFT described in the previous section, output symmetry, linearity, output magnitudes, leakage, scalloping loss, etc., also de scribe the behavior of the HT.

2. HINTS ON USING FFTS IN PRACTICE

Based on how useful FFTs are, here's a list of practical pointers, or tips, on acquiring input data samples and using the radix-2 HT to analyze real-world signals or data.

2.1 Sample Fast Enough and Long Enough

When digitizing continuous signals with an A/D converter, for example, we know, from Section 2, that our sampling rate must be greater than twice the bandwidth of the continuous A /D input signal to prevent frequency domain aliasing. Depending on the application, practitioners typically sample at 2.5 to four times the signal bandwidth. If we know that the bandwidth of the continuous signal is not too large relative to the maximum sample rate of our A/D converter, it's easy to avoid aliasing. If we don't know the continuous A/D input signal's bandwidth, how do we tell if we're having aliasing problems? Well, we should mistrust any FFT results that have significant spectral components at frequencies near half the sample rate. Ideally, we'd like to work with signals whose spectral amplitudes decrease with increasing frequency. Be very suspicious of aliasing if there are any spectral components whose frequencies appear to depend on the sample rate. If we suspect that aliasing is occurring or that the continuous signal contains broadband noise, we'll have to use an analog low-pass filter prior to A/D conversion. The cutoff frequency of the low-pass filter must, of course, be greater than the frequency band of interest but less than half the sample rate.

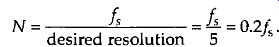

Although we know that an N-point radix-2 FFT requires N = 2k input samples, just how many samples must we collect before we perform our FFT? The answer is that the data collection time interval must be long enough to satisfy our desired FFT frequency resolution for the given sample rate 4. The data collection time interval is the reciprocal of the desired FFT frequency resolution, and the longer we sample at a fixed f, sample rate, the finer our frequency resolution will be; that is, the total data collection time interval is N/f, seconds, and our N-point FFT bin-to-bin frequency resolution is fs / N Hz. So, for example, if we need a spectral resolution of 5 Hz, then, 4/ N = 5 Hz, and

(eqn. 3)

In this case, if fs is, say, 10 kHz, then N must be at least 2,000, and we'd choose N equal to 2048 because this number is a power of 2.

2.2 Manipulating the Time Data Prior to Transformation

When using the radix-2 FFT, if we don't have control over the length of our time-domain data sequence, and that sequence length is not an integral power of two, we have two options. We could discard enough data samples so that the remaining FFT input sequence length is some integral power of two. This scheme is not recommended because ignoring data samples de grades our resultant frequency-domain resolution. (The larger N, the better our frequency resolution, right?) A better approach is to append enough zero valued samples to the end of the time data sequence to match the number of points of the next largest radix-2 FFT. For example, if we have 1000 time samples to transform, rather than analyzing only 512 of them with a 512-point FFT, we should add 24 trailing zero-valued samples to the original sequence and use a 1024-point FFT. (This zero-padding technique is discussed in more detail in Section 3.11.) FFTs suffer the same ill effects of spectral leakage that we discussed for the DFT in Section 3.8. We can multiply the time data by a window function to alleviate this leakage problem. Be prepared, though, for the frequency resolution degradation inherent when windows are used. By the way, if appending zeros is necessary to extend a time sequence, we have to make sure that we append the zeros after multiplying the original time data sequence by a window function. Applying a window function to the appended zeros will distort the resultant window and worsen our FFT leakage problems.

Although windowing will reduce leakage problems, it will not eliminate them altogether. Even when windowing is employed, high-level spectral components can obscure nearby low-level spectral components. This is especially evident when the original time data has a nonzero average, i.e., it's riding on a DC bias. When the FFI' is performed in this case, a large-amplitude DC spectral component at 0 Hz will overshadow its spectral neighbors. We can eliminate this problem by calculating the average of the time sequence and subtract that average value from each sample in the original sequence. (The averaging and subtraction process must be performed before windowing.) This technique makes the new time sequence's average (mean) value equal to zero and eliminates any high-level, 0-Hz component in the FFT results.

2.3 Enhancing FFT Results

If we're using the FFT to detect signal energy in the presence of noise and enough time-domain data is available, we can improve the sensitivity of our processing by averaging multiple FFTs. This technique, discussed in Section 11.3, can be implemented to detect signal energy that's actually below the average noise level; that is, given enough time-domain data, we can detect signal components that have negative signal-to-noise ratios.

if our original time-domain data is real-valued only, we can take advantage of the 2N-Point Real EFT technique in Section 13.5 to speed up our processing; that is, a 2N-point real sequence can be transformed with a single N-point complex radix-2 HI Thus we can get the frequency resolution of a 2N-point FFT for just about the computational price of performing a standard N-point FFT. Another FFT speed enhancement is the possible use of the frequency-domain windowing technique discussed in Section 13.3. If we need the FFT of unwindowed time-domain data and, at the same time, we also want the FFT of that same time data with a window function applied, we don't have to perform two separate FFTs. We can perform the EFT of the un windowed data, and then we can perform frequency-domain windowing to reduce spectral leakage on any, or all, of the FFT bin outputs.

2.4 Interpreting FFT Results

The first step in interpreting FFT results is to compute the absolute frequency of the individual FFT bin centers. Like the DFT, the FIT bin spacing is the ratio of the sampling rate (f ) over the number of points in the EFT, or fs /N. With our FFT output designated by X(m), where m = 0, 1, 2, 3, ..., N-1, the absolute frequency of the mth bin center is mfs /N. If the FFT's input time samples are real, only the X(m) outputs from ni = 0 to m = N/2 are independent.

So, in this case, we need determine only the absolute FFT bin frequencies for m over the range of 0 N/2. If the FFT input samples are complex, all N of the FFT outputs are independent, and we should compute the absolute FFT bin frequencies for ni over the full range of 0 N-1.

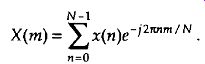

If necessary, we can determine the true amplitude of time-domain signals from their FFT spectral results. To do so, we have to keep in mind that radix-2 FFT outputs are complex and of the form

(eqn. 4)

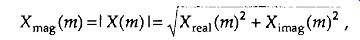

Also, the FFT output magnitude samples,

(eqn. 5)

are all inherently multiplied by the factor N/2, as described in Section 3.4, when the input samples are real. If the FFT input samples are complex, the scaling factor is N. So to determine the correct amplitudes of the time-domain sinusoidal components, we'd have to divide the FFT magnitudes by the appropriate scale factor, N/2 for real inputs and N for complex inputs.

If a window function was used on the original time-domain data, some of the FFT input samples will be attenuated. This reduces the resultant FFT output magnitudes from their true unwindowed values. To calculate the correct amplitudes of various time-domain sinusoidal components, then, we'd have to further divide the FFT magnitudes by the appropriate processing loss factor associated with the window function used. Processing loss factors for the most popular window functions are listed in Reference [3]. Should we want to determine the power spectrum X15 (m) of an FFT result, we'd calculate the magnitude-squared values using

(eqn. 6)

Doing so would allow us to compute the power spectrum in decibels with XdB (ln)= 10 .log io ( I X(m) I 2 ) dB.

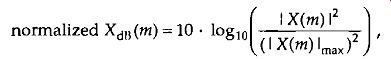

The normalized power spectrum in decibels can be calculated using normalized XdB

(eqn. 7)

(eqn. 8)

or

(eqn. 9)

In Eqs. (eqn. 8) and (eqn. 9), the term 1 X(m) I ma), is the largest FFT output magnitude sample. In practice, we find that plotting X du(m) is very informative be cause of the enhanced low-magnitude resolution afforded by the logarithmic decibel scale, as described in Section E. If either Eq. (eqn. 8) or Eq. (eqn. 9) is used, no compensation need be performed for the above-mentioned N or N/2 FR' scale or window processing loss factors. Normalization through division by (I X(m) I or or I X(m) I max eliminates the effect of any absolute FFT or window scale ; factors.

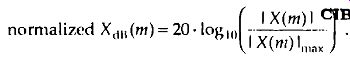

Knowing that the phase angles X0 (m) of the individual FFT outputs are given by

(eqn. 10)

it's important to watch out for X rcal(m) values that are equal to zero. That would invalidate our phase angle calculations in Eq. (eqn. 1.0) due to division by a zero condition. In practice, we want to make sure that our calculations (or software compiler) detect occurrences of = 0 and set the corresponding Xo(m) to 90° if X_imag( M) is positive, set Xo(m) to 00 if X_imag (m) is zero, and set Xo(m) to -90 if X_imag(m) is negative. While we're on the Aject of FFT output phase angles, be aware that FFT outputs containing significant noise components can cause large fluctuations in the computed X0 (m) phase angles. This means that the X „,(ni) samples are only meaningful when the corresponding I X(m) I is well above the aver age FFT output noise level.

3. FFT SOFTWARE PROGRAMS

For readers seeking actual FFT software routines without having to buy those high-priced signal processing software packages, public domain radix-2 FFT routines are readily available. References [4-7] provide standard FFT pro gram listings using the FORTRAN language. Reference [8] presents an efficient FFT program written in FORTRAN for real-only input data sequences.

Reference 191 provides a standard FFT program written in FII) BASIC", and reference 1 101 presents an FFT routine written in Applesoft BASIC. Readers interested in the Ada language can find FFT-related subroutines in reference [11].

4. DERIVATION OF THE RADIX-2 FFT ALGORITHM

This section and those that follow provide a detailed description of the internal data structures and operations of the radix-2 FFT for those readers interested in developing software FFT routines or designing FFT hardware. To see just exactly how the FFT evolved from the DFT, we return to the equation for an N-point DFT,

(eqn. 11)

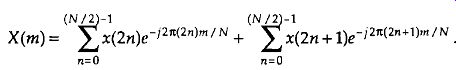

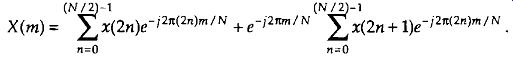

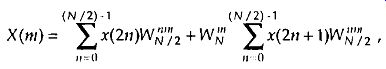

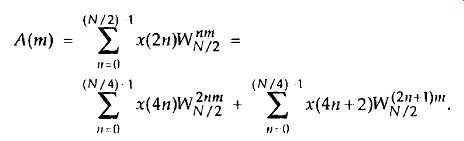

A straightforward derivation of the FFT proceeds with the separation of the input data sequence x(n) into two parts. When x(n) is segmented into its even and odd indexed elements, we can, then, break Eq. (eqn. 11) into two parts as

(eqn. 12)

Pulling the constant phase angle outside the second summation,

(eqn. 13)

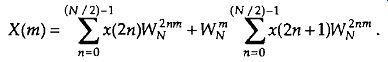

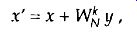

Well, here the equations get so long and drawn out that we'll use the standard notation to simplify things. We'll define INN = C121" to represent the complex phase angle factor that is constant with N. So Eq. (eqn. 13) becomes

(eqn. 14)

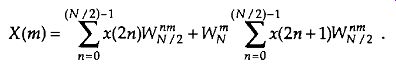

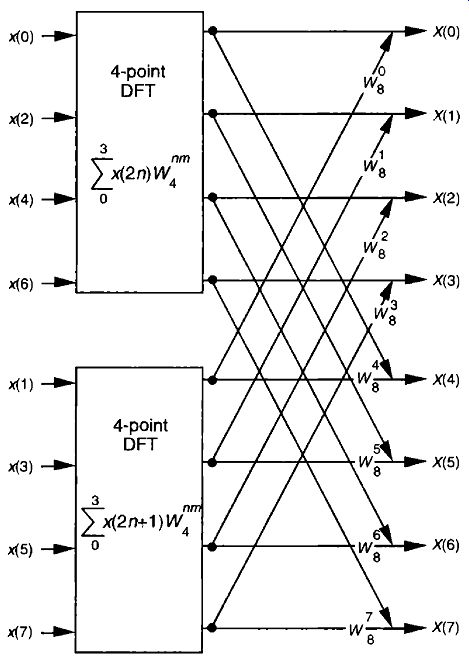

Because W N^2 = e^-j2n/(N) = e^-j2n/(N/2), we can substitute W N/ 2 for WN^2 in Eq. (eqn. 14),

(eqn. 15)

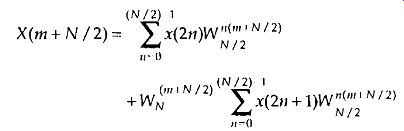

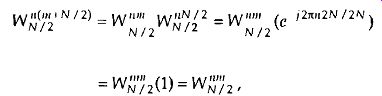

So we now have two N / 2 summations whose results can be combined to give us the N-point DFT. We've reduced some of the necessary number crunching in Eq. (eqn. 15) relative to Eq. (eqn. 11) because the W terms in the two summations of Eq. (eqn. 15) are identical. There's a further benefit in breaking the N-point DFT into two parts because the upper half of the DFT outputs is easy to calculate. Consider the X(m+N /2) output. If we plug m+N/2 in for m in Eq. (eqn. 15), then

(eqn. 16)

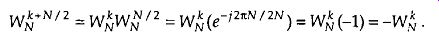

It looks like we're complicating things, right? Well, just hang in there for a moment. We can now simplify the phase angle terms inside the summations because

(eqn. 17)

for any integer n.

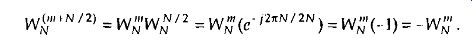

Looking at the so-called twiddle factor in front of the second summation in Eq. (eqn. 16), we can simplify it as

(eqn. 18)

OK, using Eqs. (eqn. 17) and (eqn. 18), we represent Eq. (eqn. 16)

(eqn. 19)

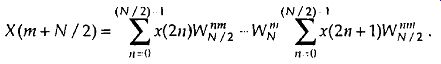

Now, let's repeat Eqs. (eqn. 15) and (eqn. 19) to see the similarity; and

(eqn. 20)

(eqn. 20')

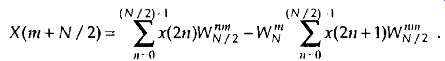

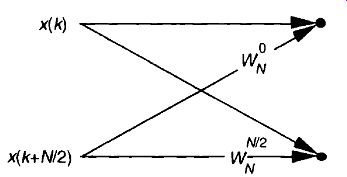

So here we are. We need not perform any sine or cosine multiplications to get X(m+N /2). We just change the sign of the twiddle factor 1/1P l; and use the results of the two summations from X(m) to get X(m+N/2). Of course, nt goes from 0 to (N / 2)-1 in Eq. (eqn. 20) which means, for an N-point DFT, we perform an N/2-point DFT to get the first N / 2 outputs and use those to get the last N/2 outputs. For N = 8, Eqs. (eqn. 20) and (eqn. 20') are implemented as shown in FIG. 2.

FIG. 2 FFT implementation of an 8-point DFT using two 4-point DFTs.

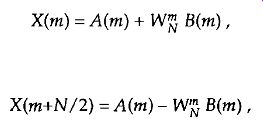

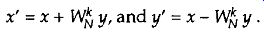

If we simplify Eqs. (eqn. 20) and (eqn. 20') to the form

(eqn. 21)

and

(eqn. 21')

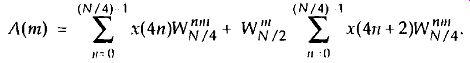

we can go further and think about breaking the two 4-point DFTs into four 2-point DFTs. Let's see how we can subdivide the upper 4-point DFT in FIG. -2 whose four outputs are A(m) in Eqs. (eqn. 21) and (eqn. 21'). We segment the inputs to the upper 4-point DFT into their odd and even components

(eqn. 22)

(eqn. 23)

Notice the similarity between Eq. (eqn. 23) and Eq. (eqn. 20). This capability to subdivide an N/2-point DFT into two N/4-point DFTS gives the FFT its capacity to greatly reduce the number of necessary multiplications to implement DFTs. (We're going to demonstrate this shortly.) Following the same steps we used to obtained A(m), we can show that Eq.(eqn. 21)'s 13(m) is

(eqn. 24)

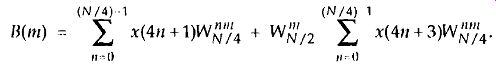

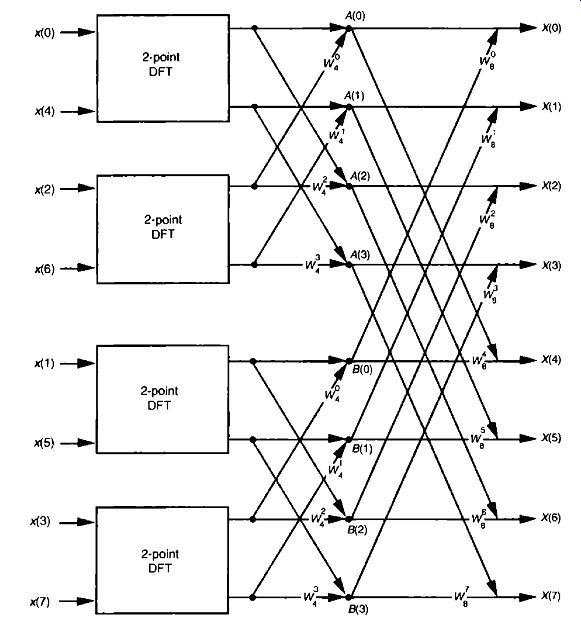

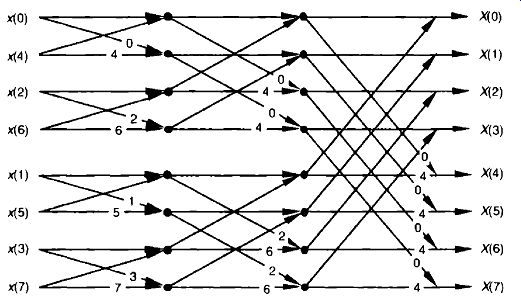

For our N 8 example, Eqs. (eqn. 23) and (eqn. 24) are implemented as shown in FIG. 3. The FFT's well-known butterfly pattern of signal flows is certainly evident, and we see the further shuffling of the input data in FIG. 3. The twiddle factor Wi t? \; 12 in Eqs. (eqn. 23) and (eqn. 24), for our N = 8 example, ranges from W( , ) 4 to 1/1/ 4 because the it index, for A(m) and B(in), goes from 0 to 3. For any N-point DFT, we can break each of the N/2-point DFTs into two N/4-point DFTs to further reduce the number of sine and cosine multiplications. Eventually, we would arrive at an array of 2-point DFTs where no further computational savings could be realized. This is why the number of points in our FFTs are constrained to be some power of 2 and why this ITT algorithm is referred to as the radix-2 FFT.

Moving right along, let's go one step further, and then we'll be finished with our N = 8 point FFT derivation. The 2-point DFT functions in FIG. 3 cannot be partitioned into smaller parts--we've reached the end of our DFT reduction process arriving at the butterfly of a single 2-point DFT as shown in FIG. 4. From the definition of WN, 1/0 PRO / N 1 and 0./1 1V = e 122'' =1.

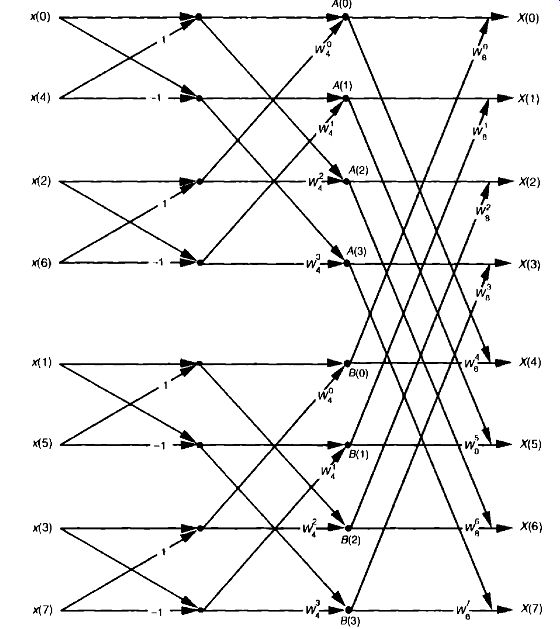

So the 2-point DFT blocks in FIG. 3 can be replaced by the butterfly in FIG. 4 to give us a full 8-point FFT implementation of the DFT as shown in FIG. 5.

FIG. 3 FFT implementation of an 8-point DFT as two 4-point DFTs and four

2-pont DFTs.

OK, we've gone through a fair amount of algebraic foot shuffling here. To verify that the derivation of the FFT is valid, we can apply the 8-point data sequence of Section 3's DFT Example 1 to the 8-point FFT represented by FIG. 5. The data sequence representing x(n) = sin(2 pi 1000nt5 ) + 0.5sin(2 pi 2000nt s +3n/ 4) is

(eqn. 25)

FIG. 4 Single 2-point DFT butterfly.

FIG. 5 Full decimation-in-time FFT implementation of an 8-point DFT.

FIG. 6 8-point FFT of Example 1 from Section 3.1.

We begin grinding through this example by applying the input values from Eq. (eqn. 25) to FIG. 5, giving the data values shown on left side of FIG. 6. The outputs of the second stage of the FFT are:

A(0) = 0.707 + 10 4 (-0.707) = 0.707 + (1 + j0)(-0.707) = 0 + j0,

A(1) = 0.0 + WI (1.999) = O. 0+ (0 - j1)(1.999) = 0 - j1.999,

A(2) = 0.707 + (-0.707) = 0.707 + (-1 + j0)(-0.707) = 1.414 +j0,

A(3) = 0.0 + WI (1.999) = 0 .0 + (0 + j1)(1.999) = 0 + j1.999,

B(0) = 0.707 + TM (0.707) = -0.707 + (1 + j0)(0.707) = 0 + j0,

B(1) = 1.414 + WI 4 (1.414) = 1.414 + (0 - j1)(1.414) = 1.414 - j1.414,

Calculating the outputs of the third stage of the FFT to arrive at our final answer

So, happily, the EFT gives us the correct results, and again we remind the reader that the FFT is not an approximation to a DFT; it is the DFT with a reduced number of necessary arithmetic operations. You've seen from the above example that the 8-point FFT example required less effort than the 8-point DFT Example 1 in Section 3.1. Some authors like to explain this arithmetic reduction by the redundancies inherent in the twiddle factors W. They illustrate this with the starburst pattern in FIG. 7 showing the equivalencies of some of the twiddle factors in an 8-point DFT. FIG. 7 Cyclic redundancies in the twiddle factors of an 8-point FFT.

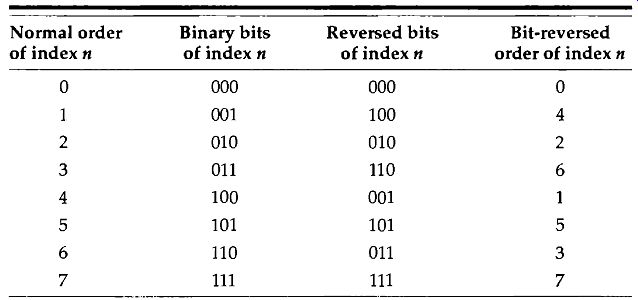

Table 1 Input Index Bit Reversal for an 8-point FFT

5. FFT INPUT/OUTPUT DATA INDEX BIT REVERSAL

OK, let's look into some of the special properties of the FFT that are important to FFT software developers and FFT hardware designers. Notice that FIG. 5 was titled "Full decimation-in-time FFT implementation of an 8-point DFT." The decimation-in-time phrase refers to how we broke the DFT input samples into odd and even parts in the derivation of Eqs. (eqn. 20), (eqn. 23), and (eqn. 24). This time decimation leads to the scrambled order of the input data's index n in FIG. 5. The pattern of this shuffled order cart be understood with the help of Table 1. The shuffling of the input data is known as bit reversal because the scrambled order of the input data index can be obtained by reversing the bits of the binary representation of the normal input data index order. Sounds confusing, but it's really not-Table 1 illustrates the input index bit reversal for our 8-point FFT example. Notice the normal index order in the left column of Table 4-1 and the scrambled order in the right column that corresponds to the final decimated input index order in FIG. 5.

We've transposed the original binary bits representing the normal index order by reversing their positions. The most significant bit becomes the least significant bit and the least significant bit becomes the most significant bit, the next to the most significant bit becomes the next to the least significant bit, and the next to the least significant bit becomes the next to the most significant bit, and so on.

6 RADIX-2 FFT BUTTERFLY STRUCTURES

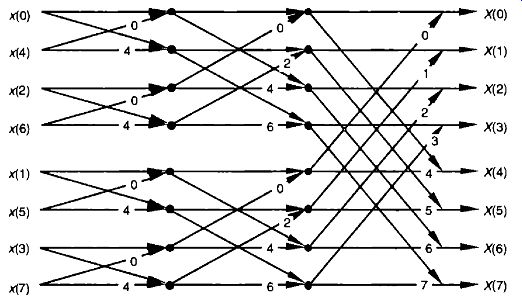

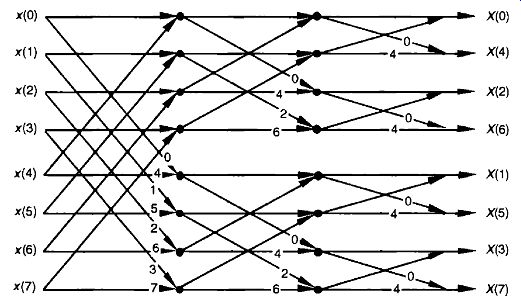

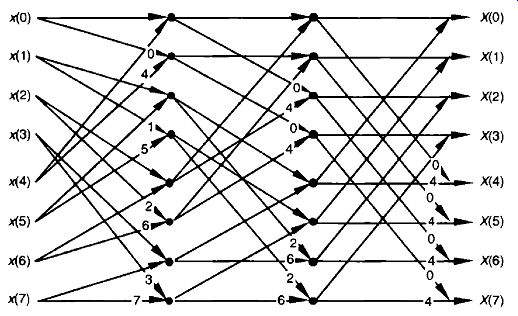

Let's explore the butterfly signal flows of the decimation-in-time FFT a bit further. To simplify the signal flows, let's replace the twiddle factors in FIG. 5 with their equivalent values referenced to In, where N = 8. We can show just the exponents m of W_N^m, to get the FFT structure shown in FIG. 8.

That is, W1 from FIG. 5 is equal to W4 and is shown as a 2 in FIG. 8, W1 from FIG. 5 is equal to W4 and is shown as a 4 in FIG. 8, etc. The 1s and -1s in the first stage of FIG. 5 are replaced in FIG. 8 by Os and 4s, respectively. Other than the twiddle factor notation, FIG. 8 is identical to FIG. 5. We can shift around the signal nodes in FIG. 5 and arrive at an 8-point decimation-in-time FFT as shown in FIG. 9. Notice that the input data in FIG. 9 is in its normal order and the output data indices are bit-reversed. In this case, a bit-reversal operation needs to be performed at the output of the FFT to unscramble the frequency-domain results.

FIG. 10 shows an FFT signal-flow structure that avoids the bit reversal problem altogether, and the graceful weave of the traditional FFT butterflies is replaced with a tangled, but effective, configuration.

Not too long ago, hardware implementations of the FFT spent most of their time (clock cycles) performing multiplications, and the bit-reversal process necessary to access data in memory wasn't a significant portion of the overall FFT computational problem. Now that high-speed multiplier/accumulator integrated circuits can multiply two numbers in a single clock cycle, FFT data multiplexing and memory addressing have become much more important. This has led to the development of efficient algorithms to perform bit reversal.

FIG. 8, 8-point decimation-in-time FFT with bit-reversed inputs.

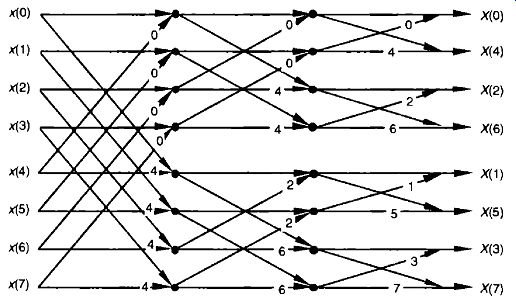

FIG. 9, 8-point decimation-in-time FFT with bit-reversed outputs.

There's another derivation for the FFT that leads to butterfly structures looking like those we've already covered, but the twiddle factors in the butter flies are different. This alternate FFT technique is known as the decimation-in frequency algorithm. Where the decimation-in-time FFT algorithm is based on subdividing the input data into its odd and even components, the decimation in-frequency FFT algorithm is founded upon calculating the odd and even output frequency samples separately. The derivation of the decimation-in frequency algorithm is straightforward and included in many tutorial papers and textbooks, so we won't go through the derivation here. We will, however, illustrate decimation-in-frequency butterfly structures (analogous to the structures in Figures 8 through 10) in Figures 11 though 13.

FIG. 10, 8-point decimation-in-time FFT with inputs and outputs in normal

order.

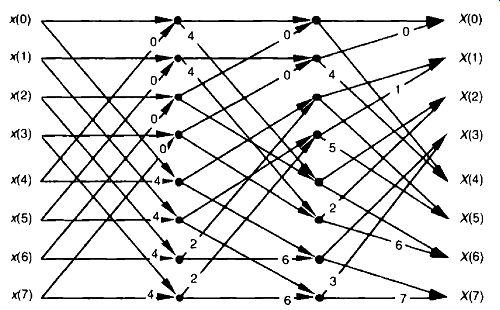

FIG. 11, 8-point decimation-in-frequency FFT with bit-reversed inputs.

FIG. 12, 8-point decimation-in-frequency FFT with bit-reversed outputs.

FIG. 13, 8-point decimation-in-frequency FFT with inputs and outputs in

nor mal order.

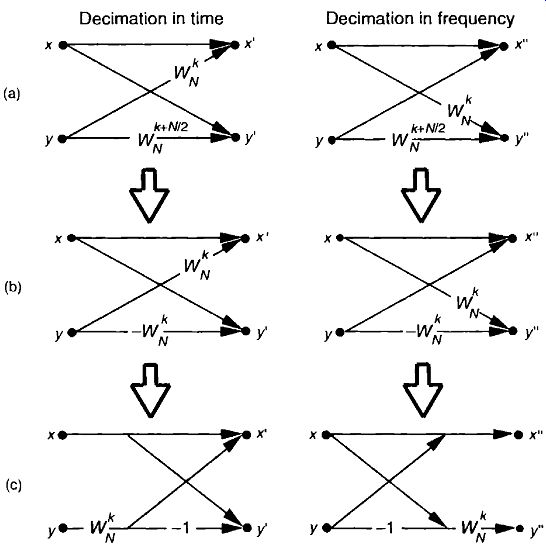

FIG. 14, Decimation-in-time and decimation-in-frequency butterfly structures:

(a) original form; (b) simplified form; (c) optimized form.

So an equivalent decimation-in-frequency FFT structure exists for each decimation-in-time FFT structure. It's important to note that the number of necessary multiplications to implement the decimation-in-frequency FFT algorithms is the same as the number necessary for the decimation-in-time FFT algorithms. There are so many different FFT butterfly structures described in the literature, that it's easy to become confused about which structures are decimation-in-time and which are decimation-in-frequency. Depending on how the material is presented, it's easy for a beginner to fall into the trap of believing that decimation-in-time FFTs always have their inputs bit-reversed and decimation-in-frequency FFTs always have their outputs bit-reversed.

This is not true, as the above figures show. Decimation--in-time or --frequency is determined by whether the DFT inputs or outputs are partitioned when de riving a particular FFT butterfly structure from the DFT equations.

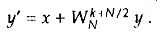

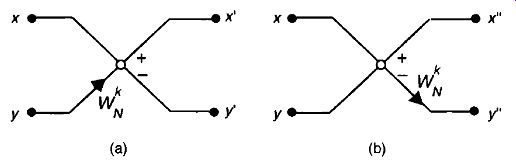

Let's take one more look at a single butterfly. The FFT butterfly structures in Figures 8, 9, 11, and 12 are the direct result of the derivations of the decimation-in-time and decimation-in-frequency algorithms. Although it's not very obvious at first, the twiddle factor exponents shown in these structures do have a consistent pattern. Notice how they always take the general forms shown in FIG. 14(a).t To implement the decimation-in-time butterfly of FIG. 14(a), we'd have to perform two complex multiplications and two complex additions. Well, there's a better way. Consider the decimation-in-time butterfly in FIG. 14(a). If the top input is x and the bottom input is y, the top butterfly output would be

(eqn. 26)

and the bottom butterfly output would be

(eqn. 27)

Remember, for simplicity the butterfly structures in Figures 4-8 through 4-13 show only the twiddle factor exponents, k and k+N/ 2, and not the entire complex twiddle factors.

FIG. 15 Alternate FFT butterfly notation: (a) decimation-in-time; (b)

decimation-in-frequency

Fortunately, the operations in Eqs. (eqn. 26) and (eqn. 27) can be simplified because the two twiddle factors are related by

(eqn. 28)

So we can replace the Wi t,711 /2 twiddle factors in FIG. 14(a) with -Wlk to give us the simplified butterflies shown in FIG. 14(b). Realizing that the twiddle factors in FIG. 14(b) differ only by their signs, the optimized butterflies in FIG. 14(c) can be used. Notice that these optimized butterflies re quire two complex additions but only one complex multiplication, thus reducing our computational workload.

We'll often see the optimized butterfly structures of FIG. 14(c) in the literature instead of those in FIG. 14(a). These optimized butterflies give us an easy way to recognize decimation-in-time and decimation-in-frequency algorithms. When we do come across the optimized butterflies from FIG. 14(c), we'll know that the algorithm is decimation-in-time if the twiddle factor precedes the -1, or else the algorithm is decimation-in-frequency if the twiddle factor follows the -1.

Sometimes we'll encounter FFT structures in the literature that use the notation shown in FIG. 15 [5,17]. These wingless butterflies are equivalent to those shown in FIG. 14(c). The signal-flow convention in FIG. 15 is such that the plus output of a circle is the sum of the two samples that enter the circle from the left, and the minus output of a circle is the difference of the samples that enter the circle. So the outputs of the decimation-in-time butterflies in FIG. 14(c) and FIG. 15(a) are given by

(eqn. 29)

It's because there are (N/2)log2N butterflies in an N-point FFT that we said the number of complex multiplications performed by an FFT is (N/2)log2 N in Eq. (eqn. 2).

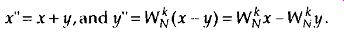

The outputs of the decimation-in-frequency butterflies in FIG. 14(c) and FIG. 15(b) are x''=

(eqn. 30)

So which HT structure is the best one to use? It depends on the application, the hardware implementation, and convenience. If we're using a soft ware routine to perform FFTs on a general-purpose computer, we usually don't have a lot of choices. Most folks just use whatever existing FFT routines happen to be included in their commercial software package. Their code may be optimized for speed, but you never know. Examination of the software code may be necessary to see just how the FFT is implemented. If we feel the need for speed, we should check to see if the software calculates the sines and cosines each time it needs a twiddle factor. Trigonometric calculations normally take many machine cycles. It may be possible to speed up the algorithm by calculating the twiddle factors ahead of time and storing them in a table. That way, they can be looked up, instead of being calculated each time they're needed in a butterfly. If we're writing our own software routine, checking for butterfly output data overflow and careful magnitude scaling may allow our FFT to be performed using integer arithmetic that can be faster on some machines. Care must be taken, however, when using integer arithmetic; some Reduced Instruction Set Computer (RISC) processors actually take longer to perform integer calculations because they're specifically de signed to operate on floating-point numbers.

If we're using commercial array processor hardware for our calculations, the code in these processors is always optimized because their purpose in life is high speed. Array processor manufacturers typically publicize their products by specifying the speed at which their machines perform a 1024- point FFT. Let's look at some of our options in selecting a particular FFT structure in case we're designing special-purpose hardware to implement an FFT.

[Overflow is what happens when the result of an arithmetic operation has too many bits, or digits, to be represented in the hardware registers designed to contain that result. [Ti data over-flow is described in Section 12.3. ]

The EFT butterfly structures previously discussed typically fall into one of two categories: in-place FFT algorithms and double-memory FFT algorithms. An in-place algorithm is depicted in FIG. 5. The output of a butterfly operation can be stored in the same hardware memory locations that previously held the butterfly's input data. No intermediate storage is necessary. This way, for an N-point FFT, only 2N memory locations are needed. (The 2 comes from the fact that each butterfly node represents a data value that has both a real and an imaginary part.) The rub with the in-place algorithms is that data routing and memory addressing is rather complicated.

A double-memory FFT structure is that depicted in FIG. 10. With this structure, intermediate storage is necessary because we no longer have the standard butterflies, and 4N memory locations are needed. However, data routing and memory address control is much simpler in double-memory FFT structures than the in-place technique. The use of high-speed, floating-point integrated circuits to implement pipelined FFT architectures takes better ad vantage of their pipelined structure when the double-memory algorithm is used [18]. There's another class of FFT structures, known as constant-geometry algorithms, that make the addressing of memory both simple and constant for each stage of the FFT. These structures are of interest to those folks who build special-purpose FFT hardware devices[4,19]. From the standpoint of general hardware the decimation-in-time algorithms are optimum for real input data sequences, and decimation-in-frequency is appropriate when the input is complex[8]. When the FFT input data is symmetrical in time, special FFT structures exist to eliminate unnecessary calculations. These special butterfly structures based on input data symmetry are described in the literature [20].

For two-dimensional FFT applications, such as processing photographic images, the decimation-in-frequency algorithms appear to be the optimum choice [21]. Your application may be such that FFT input and output bit reversal is not an important factor. Some FFT applications allow manipulating a bit-reversed FFT output sequence in the frequency domain without having to unscramble the FFT's output data. Then an inverse transform that's expecting bit-reversed inputs will give a time-domain output whose data sequence is correct. This situation avoids the need to perform any bit reversals at all. Multiplying two FFT outputs to implement convolution or correlation are examples of this possibility./ As we can see, finding the optimum FFT algorithm and hardware architecture for an FFT is a fairly complex problem to solve, but the literature provides guidance.

REFERENCES

[1] Cooley, J. and Tukey, J. "An Algorithm for the Machine Calculation of Complex Fourier Series," Math. Comput., Vol. 19, No. 90, Apr. 1965, pp. 297-301.

[2] Cooley, J., Lewis, P., and Welch, P. "Historical Notes on the Fast Fourier Transform," IEEE Trans. on Audio and Electroacoustics, Vol. AU-15, No. 2, June 1967.

[3] Harris, F. J. "On the Use of Windows for Harmonic Analysis with the Discrete Fourier Transform," Proceedings (!f the UT, Vol. 66, No. 1, p. 54, January 1978.

[4] Oppenheim, A. V., and Schafer, R. W. Discrete-Time Signal Processing, Prentice-Hall, Englewood Cliffs, New Jersey, 1989, p. 608.

[5] Rabiner, L. R. and Gold, B. Theory and Application of Digital Signal Processing, Prentice-Hall, Englewood Cliffs, New Jersey, 1975, p. 367.

[6] Stearns, S. Digital Signal Analysis, Hayden Book Co., Rochelle Park, New Jersey, 1975, p. 265.

[7] Programs for Digital Signal Processing, Section 1, IEEE Press, New York, 1979.

[8] Sorenson, H. V., Jones, D. L, Heideman, M. T., and Burrus, C. S. "Real-Valued Fast Fourier Transform Algorithms," IELL Trans. on Acoust. Speech, and Signal Proc., Vol. ASSP-35, No. 6, June 1987.

[9] Bracewell, R. The Fourier Transform and Its Applications, 2nd Edition, Revised, McGraw Hill, New York, 1986, p. 405.

[10] Cobb, F. "Use .Fast Fourier Transform Programs to Simplify, Enhance Filter Analysis," EDN, 8 March 1984.

[11] Carlin, F. " Ada and Generic EFT Generate Routines Tailored to Your Needs," EDN, 23 April 1992.

[12] Evans, D. "An Improved Digit-Reversal Permutation Algorithm for the Fast Fourier and Hartley Transforms," IEEE Trans. on Acoust. Speech, and Signal Pwc., Vol. ASSP-35, No. 8, August 1987.

[13] Burrus, C. S. "Unscrambling for Fast DFT Algorithms," IEEE Trans. on Acousl. Speech, and Signal Proc., Vol. 36, No. 7, July 1988.

[14] Rodriguez, J. J. "An Improved EFT Digit-Reversal Algorithm," IEEE Trans. on Amust. Speech, and Signal Proc., Vol. ASSP-37, No. 8, August 1989.

[15] Land, A. "Bit Reverser Scrambles Data for EFT," EnN, March 2, 1995.

[16] JG-AE Subcommittee on Measurement Concepts, "What Is the Fast Fourier Transform?," IEEE Trans. on Audio and Electroacoustics, Vol. AU-15, No. 2, June 1967.

[17] Cohen, R., and Perlman, R. "500 kHz Single-Board EFT System Incorporates DSP Optimized Chips," EDN, 31 October 1984.

[18] Eldon, J., and Winter, G. E. "Floating-point Chips Carve Out ITT Systems," Electronic De sign, 4 August 1983.

[19] Lamb, K. "CMOS Building Blocks Shrink and Speed Up EFT Systems," Llecinmic Design, 6 August 1987.

[20] Markel, J. D. "HT Pruning," IEEE Trans. on Audio and Flectroacoustics, Vol. AU-19, No. 4, December 1971.

[21] Wu, H. R., and Paoloni, F. J. "The Structure of Vector Radix Fast Fourier Transforms," IEEE Trans. on Acoust. Speech, and Signal Proc., Vol. ASSP-37, No. 8, August 1989.

[22] Ali, Z. M. "High Speed FFT Processor," IEEE Trans. on Communications, Vol. COM-26, No 5, May 1978.

[23] Bergland, G. "Fast Fourier Transform Hardware Implementations-An Overview," IEEE Trans. on Audio and Electroacoustics, Vol. AU-17, June 1969.

Related Articles -- Top of Page -- Home