
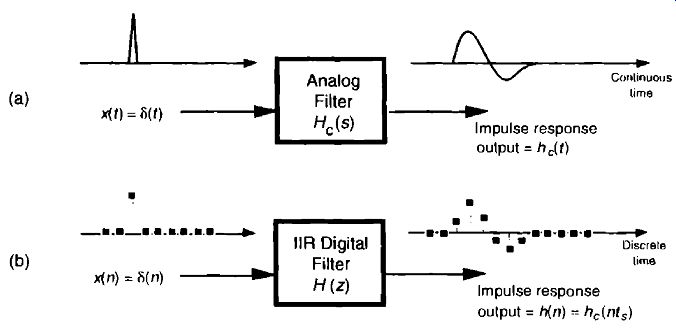

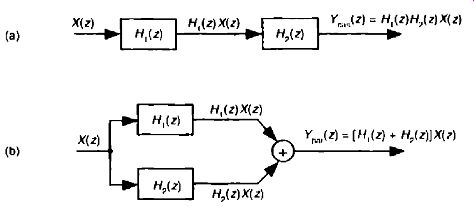

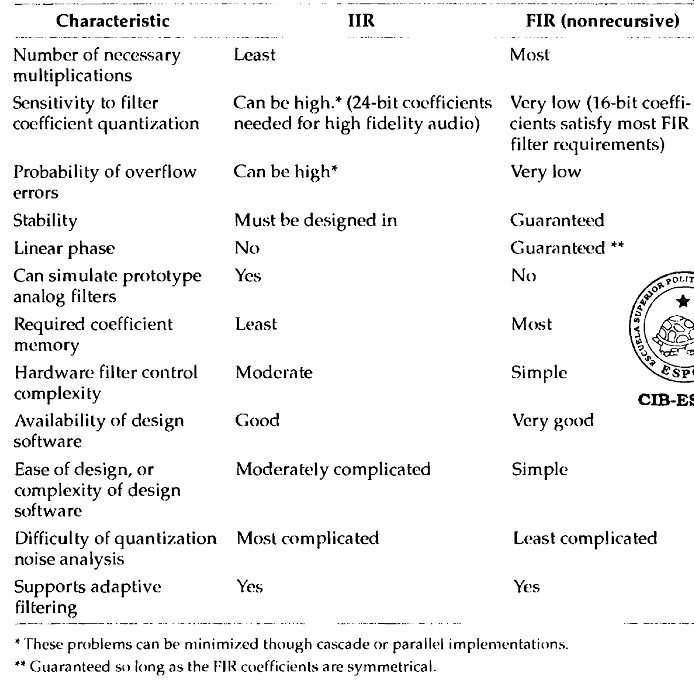

Infinite impulse response (IIR) digital filters are fundamentally different from FIR filters because practical IIR filters always require feedback. Where FIR filter output samples depend only on the current and past input samples, each IIR filter output sample depends on input samples and on previous filter output samples. IIR filters' memory of past outputs is both a blessing and a curse. Like all feedback systems, perturbations at the IIR filter input could, depending on the design, cause the filter output to become unstable and oscillate indefinitely. This characteristic of possibly having an infinite duration of nonzero output samples, even if the input becomes all zeros, is the origin of the phrase infinite impulse response. It's worth noting at this point that, relative to FIR filters, IIR filters have more complicated structures (block diagrams), are harder to design and analyze, and do not have linear phase responses. Why in the world, then, would anyone use an IIR filter? Because they are very efficient. IIR filters require far fewer multiplications per filter output sample to achieve a given frequency magnitude response. From a hardware standpoint, this means that IIR filters can be very fast, allowing us to build real-time IIR filters that operate at much higher sample rates than FIR filters.

[At the end of this section, we briefly compare the advantages and disadvantages of IIR filters relative to FIR filters.]

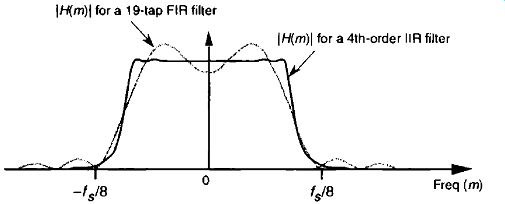

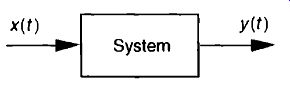

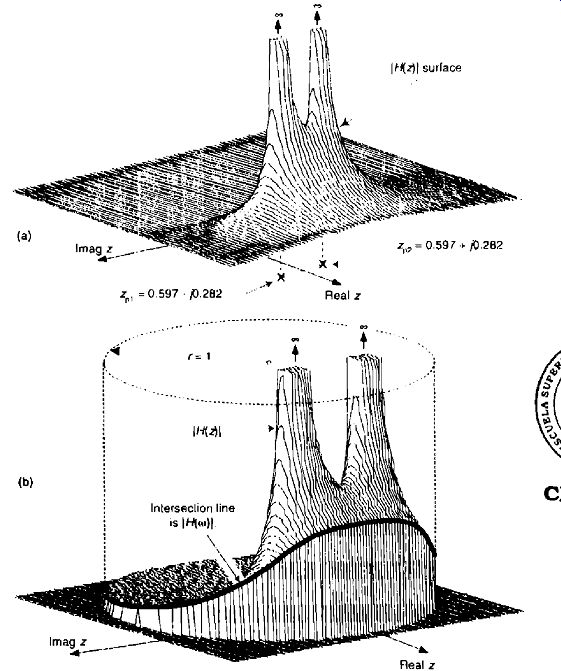

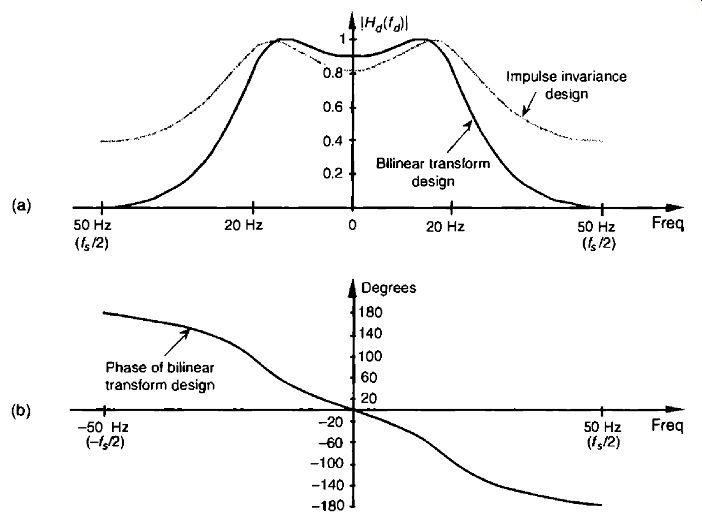

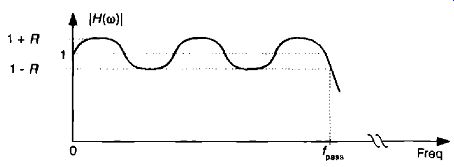

FIG. 1 Comparison of the frequency magnitude responses of a 19-tap low-pass FIR filter and a 4th-order low-pass IIR filter.

To illustrate the utility of IIR filters, FIG. 1 contrasts the frequency magnitude responses of what's called a fourth-order low-pass IIR filter and the 19-tap FIR filter of Figure 19(b) from Section 5. Where the 19-tap FIR filter in FIG. 1 requires 19 multiplications per filter output sample, the fourth-order IIR filter requires only 9 multiplications for each filter output sample. Not only does the IIR filter give us reduced passband ripple and a sharper filter roll-off, it does so with less than half the multiplication workload of the FIR filter.
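As a rough tally, and assuming (this is not stated above) that the fourth-order IIR filter is built as a direct-form structure like the one in FIG. 3 with no coefficient equal to one, each of its N + 1 feedforward coefficients b(k) and N feedback coefficients a(k) costs one multiplication per output sample:

$$\underbrace{(N+1)}_{b(0),\,\dots,\,b(N)} + \underbrace{N}_{a(1),\,\dots,\,a(N)} = 2N + 1 = 9 \quad \text{for } N = 4,$$

compared with 19 multiplications for the 19-tap FIR filter, a ratio of 9/19 ≈ 0.47.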

Recall from Section 5.3 that, to force an FIR filter's frequency response to have very steep transition regions, we had to design an FIR filter with a very long impulse response. The longer the impulse response, the more ideal our filter frequency response will become. From a hardware standpoint, the maximum number of FIR filter taps we can have (the length of the impulse response) depends on how fast our hardware can perform the required number of multiplications and additions to get a filter output value before the next filter input sample arrives. IIR filters, however, can be designed to have impulse responses that are longer than their number of taps! Thus, IIR filters can give us much better filtering for a given number of multiplications per output sample than FIR filters. With this in mind, let's take a deep breath, flex our mathematical muscles, and learn about IIR filters.

1. AN INTRODUCTION TO INFINITE IMPULSE RESPONSE FILTERS

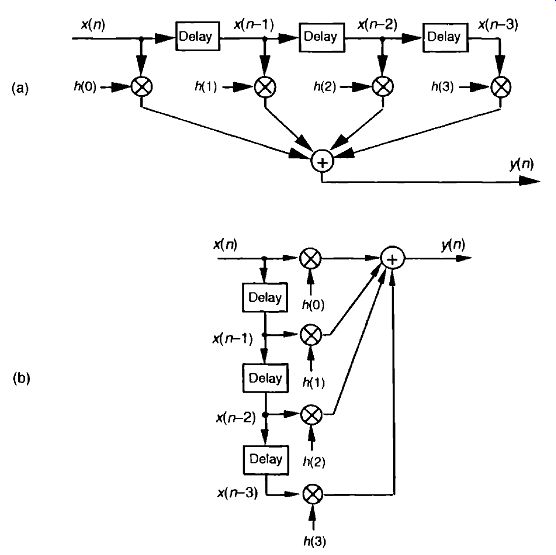

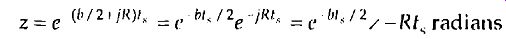

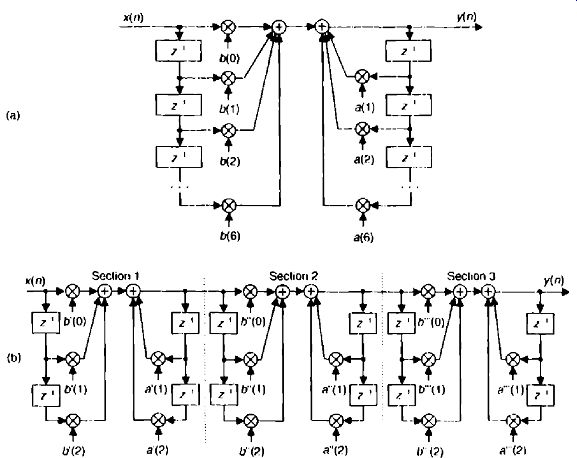

FIG. 2 FIR digital filter structures: (a) traditional FIR filter structure; (b) rearranged, but equivalent, FIR filter structure.

IIR filters get their name from the fact that some of the filter's previous output samples are used to calculate the current output sample. Given a finite duration of nonzero input values, the effect is that an IIR filter could have an infinite duration of nonzero output samples. So, if the IIR filter's input suddenly becomes a sequence of all zeros, the filter's output could conceivably remain nonzero forever. This peculiar attribute of IIR filters comes about because of the way they're realized, i.e., the feedback structure of their delay units, multipliers, and adders. Understanding IIR filter structures is straightforward if we start by recalling the building blocks of an FIR filter. FIG. 2(a) shows the now familiar structure of a 4-tap FIR digital filter that implements the time-domain FIR equation

$$y(n) = h(0)x(n) + h(1)x(n-1) + h(2)x(n-2) + h(3)x(n-3).$$

(eqn. 1)

Although not specifically called out as such in Section 5, Eq. (eqn. 1) is known as a difference equation. To appreciate how past filter output samples are used in the structure of IIR filters, let's begin by reorienting our FIR structure in FIG. 2(a) to that of FIG. 2(b). Notice how the structures in FIG. 2 are computationally identical, and both are implementations, or realizations, of Eq. (eqn. 1).
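A minimal sketch of evaluating a difference equation like Eq. (eqn. 1) directly, sample by sample. The function name `fir_filter` and the coefficient values are hypothetical, chosen only for illustration; input samples before n = 0 are assumed to be zero.

```python
def fir_filter(x, h):
    """Direct evaluation of y(n) = sum_k h(k) x(n-k), as in Eq. (eqn. 1) for 4 taps.

    Samples x(n) for n < 0 are treated as zero (the filter starts at rest).
    """
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, hk in enumerate(h):
            if n - k >= 0:              # skip terms that reach before the first sample
                acc += hk * x[n - k]
        y.append(acc)
    return y

# Hypothetical 4-tap coefficients h(0)..h(3)
h = [0.1, 0.4, 0.4, 0.1]
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(fir_filter(impulse, h))  # [0.1, 0.4, 0.4, 0.1, 0.0, 0.0]
```

Feeding in a unit impulse returns the coefficients themselves followed by zeros: an FIR filter's impulse response is exactly its h(k) sequence, and it ends after the last tap.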

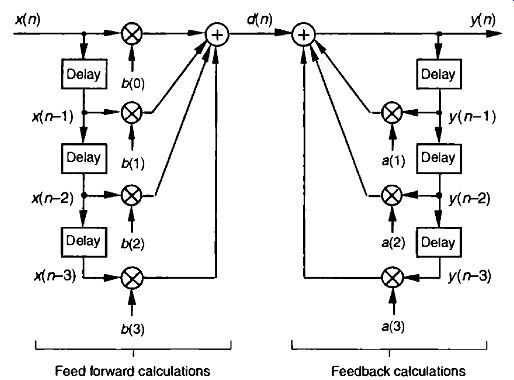

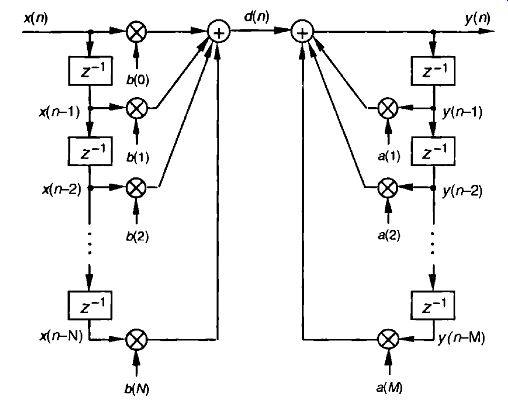

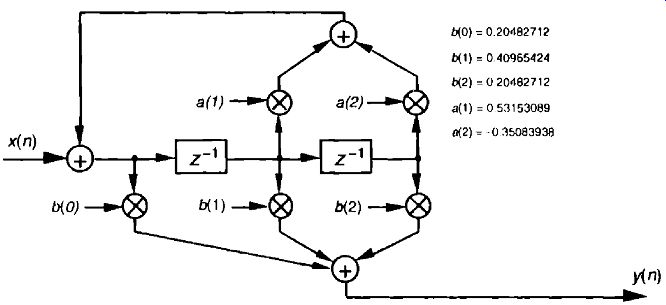

FIG. 3 IIR digital filter structure showing feedforward and feedback calculations.

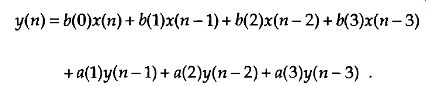

We can now show how past filter output samples are combined with past input samples by using the IIR filter structure in FIG. 3. Because IIR filters have two sets of coefficients, we'll use the standard notation of the variables b(k) to denote the feedforward coefficients and the variables a(k) to indicate the feedback coefficients in FIG. 3. OK, the difference equation describing the IIR filter in FIG. 3 is

$$y(n) = b(0)x(n) + b(1)x(n-1) + b(2)x(n-2) + b(3)x(n-3) + a(1)y(n-1) + a(2)y(n-2) + a(3)y(n-3).$$

(eqn. 2)

Look at FIG. 3 and Eq. (eqn. 2) carefully. It's important to convince ourselves that FIG. 3 really is a valid implementation of Eq. (eqn. 2) and that, conversely, difference equation Eq. (eqn. 2) fully describes the IIR filter structure in FIG. 3. Keep in mind now that the sequence y(n) in FIG. 3 is not the same y(n) sequence that's shown in FIG. 2. The d(n) sequence in FIG. 3 is equal to the y(n) sequence in FIG. 2.
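A minimal sketch of a feedback (IIR) difference equation, assuming the feedback products are added to the feedforward sum d(n), as the adders in a FIG. 3-style structure suggest. That sign convention is an assumption here: many software packages (SciPy's `lfilter`, for instance) subtract the a(k) terms instead. The function name `iir_filter` and the coefficient values are hypothetical.

```python
def iir_filter(x, b, a):
    """Evaluate an IIR difference equation sample by sample.

    Assumed convention (feedback terms are added):
        d(n) = sum_{k=0}^{len(b)-1} b[k] * x(n-k)         # feedforward part
        y(n) = d(n) + sum_{k=1}^{len(a)} a[k-1] * y(n-k)   # feedback part
    `a` holds [a(1), a(2), ...]. Samples before n = 0 are taken to be zero.
    """
    y = []
    for n in range(len(x)):
        d = sum(bk * x[n - k] for k, bk in enumerate(b) if n - k >= 0)
        fb = sum(ak * y[n - k] for k, ak in enumerate(a, start=1) if n - k >= 0)
        y.append(d + fb)
    return y

# Hypothetical first-order example: b(0) = 1.0, a(1) = 0.5
impulse = [1.0] + [0.0] * 7
print(iir_filter(impulse, b=[1.0], a=[0.5]))
# [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125, 0.015625, 0.0078125]
```

With only one multiplication per output, the impulse response halves on every sample but, in exact arithmetic, never reaches zero: that unending tail is the "infinite" in infinite impulse response. Changing a(1) to 1.5 makes the same structure's output grow without bound, which is the instability risk mentioned earlier.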

By now you're probably wondering, "Just how do we determine those a(k) and b(k) IIR filter coefficients if we actually want to design an IIR filter?" Well, fasten your seat belt because this is where we get serious about understanding IIR filters. Recall from the last section concerning the window method of low-pass FIR filter design that we defined the frequency response of our desired FIR filter, took the inverse Fourier transform of that frequency response, and then shifted that transform result to get the filter's time-domain impulse response. Happily, due to the nature of transversal FIR filters, the desired h(k) filter coefficients turned out to be exactly equal to the impulse response sequence. Following that same procedure with IIR filters, we could define the desired frequency response of our IIR filter and then take the inverse Fourier transform of that response to yield the filter's time-domain impulse response. The bad news is that there's no direct method for computing the filter's a(k) and b(k) coefficients from the impulse response! Unfortunately, the FIR filter design techniques that we've learned so far simply cannot be used to design IIR filters. Fortunately for us, this wrinkle can be ironed out by using one of several available methods of designing IIR filters.

Standard IIR filter design techniques fall into three basic classes: the impulse invariance, bilinear transform, and optimization methods. These design methods use a discrete-sequence mathematical transformation known as the z-transform, whose origin is the Laplace transform traditionally used in the analysis of continuous systems. With that in mind, let's start this IIR filter analysis and design discussion by briefly reacquainting ourselves with the fundamentals of the Laplace transform.

[Heaviside (1850-1925), who was interested in electrical phenomena, developed an efficient algebraic process of solving differential equations. He initially took a lot of heat from his contemporaries because they thought his work was not sufficiently justified from a mathematical standpoint. However, the discovered correlation of Heaviside's methods with the rigorous mathematical treatment of the French mathematician Marquis Pierre Simon de Laplace's (1749-1827) operational calculus verified the validity of Heaviside's techniques.]

2. THE LAPLACE TRANSFORM

The Laplace transform is a mathematical method of solving linear differential equations that has proved very useful in the fields of engineering and physics. This transform technique, as it's used today, originated from the work of the brilliant English physicist Oliver Heaviside. The fundamental process of using the Laplace transform goes something like the following:

Step 1: A time-domain differential equation is written that describes the input/output relationship of a physical system (and we want to find the output function that satisfies that equation with a given input).

Step 2: The differential equation is Laplace transformed, converting it to an algebraic equation.

Step 3: Standard algebraic techniques are used to determine the desired output function's equation in the Laplace domain.

Step 4: The desired Laplace output equation is, then, inverse Laplace transformed to yield the desired time-domain output function's equation.

This procedure, at first, seems cumbersome because it forces us to go the long way around, instead of just solving a differential equation directly. The justification for using the Laplace transform is that although solving differential equations by classical methods is a very powerful analysis technique for all but the most simple systems, it can be tedious and (for some of us) error prone. The reduced complexity of using algebra outweighs the extra effort needed to perform the required forward and inverse Laplace transformations.

This is especially true now that tables of forward and inverse Laplace transforms exist for most of the commonly encountered time functions. Well-known properties of the Laplace transform also allow practitioners to decompose complicated time functions into combinations of simpler functions and, then, use the tables. (Tables of Laplace transforms allow us to translate quickly back and forth between a time function and its Laplace transform, analogous to, say, a German-English dictionary if we were studying the German language.) Let's briefly look at a few of the more important characteristics of the Laplace transform that will prove useful as we make our way toward the discrete z-transform used to design and analyze IIR digital filters.

[Although tables of commonly encountered Laplace transforms are included in almost every system analysis textbook, very comprehensive tables are also available.]

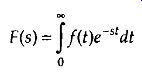

The Laplace transform of a continuous time-domain function f(t), where f(t) is defined only for positive time (t > 0), is expressed mathematically as

$$F(s) = \int_{0}^{\infty} f(t)\, e^{-st}\, dt$$

(eqn. 3)

F(s) is called "the Laplace transform of f(t)," and the variable s is the complex number

$$s = \sigma + j\omega.$$

(eqn. 4)

A more general expression for the Laplace transform, called the bilateral or two-sided transform, uses negative infinity (−∞) as the lower limit of integration. However, for the systems that we'll be interested in, where system conditions for negative time (t < 0) are not needed in our analysis, the one-sided Eq. (eqn. 3) applies. Those systems, often referred to as causal systems, may have initial conditions at t = 0 that must be taken into account (velocity of a mass, charge on a capacitor, temperature of a body, etc.) but we don't need to know what the system was doing prior to t = 0.

In Eq. (eqn. 4), σ is a real number and ω is frequency in radians/second. Because the exponent st in $e^{-st}$ must be dimensionless, s must have the dimension of 1/time, or frequency. That's why the Laplace variable s is often called a complex frequency.

To put Eq. (eqn. 3) into words, we can say that it requires us to multiply, point for point, the function f(t) by the complex function $e^{-st}$ for a given value of s. (We'll soon see that using the function $e^{-st}$ here is not accidental; $e^{-st}$ is used because it's the general form for the solution of linear differential equations.) After the point-for-point multiplications, we find the area under the curve of the function $f(t)e^{-st}$ by summing (integrating) all the products. That area, a complex number, represents the value of the Laplace transform for the particular value of s = σ + jω chosen for the original multiplications. If we were to go through this process for all values of s, we'd have a full description of F(s) for every value of s.

I like to think of the Laplace transform as a continuous function, where the complex value of that function for a particular value of s is a correlation of f(t) and a damped complex $e^{-st}$ sinusoid whose frequency is ω and whose damping factor is σ. What do these complex sinusoids look like? Well, they are rotating phasors described by

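$$e^{-st} = e^{-(\sigma + j\omega)t} = e^{-\sigma t}\, e^{-j\omega t} = \frac{e^{-j\omega t}}{e^{\sigma t}}$$

(eqn. 5)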

From our knowledge of complex numbers, we know that $e^{-j\omega t}$ is a unity-magnitude phasor rotating clockwise around the origin of a complex plane at a frequency of ω radians per second. The denominator of Eq. (eqn. 5) is a real number whose value is one at time t = 0. As t increases, the denominator $e^{\sigma t}$ gets larger (when σ is positive), and the complex $e^{-st}$ phasor's magnitude gets smaller as the phasor rotates on the complex plane. The tip of that phasor traces out a curve spiraling in toward the origin of the complex plane. One way to visualize a complex sinusoid is to consider its real and imaginary parts individually. We do this by expressing the complex $e^{-st}$ sinusoid from Eq. (eqn. 5) in rectangular form as

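$$\frac{e^{-j\omega t}}{e^{\sigma t}} = \frac{\cos(\omega t)}{e^{\sigma t}} - j\,\frac{\sin(\omega t)}{e^{\sigma t}}$$

(eqn. 5')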

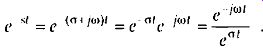

FIG. 4 shows the real parts (cosine) of several complex sinusoids with different frequencies and different damping factors. In FIG. 4(a), the complex sinusoid's frequency is the arbitrary ω′, and the damping factor is the arbitrary σ′. So the real part of F(s), at s = σ′ + jω′, is equal to the correlation of f(t) and the wave in FIG. 4(a). For different values of s, we'll correlate f(t) with different complex sinusoids as shown in FIG. 4. (As we'll see, this correlation is very much like the correlation of f(t) with various sine and cosine waves when we were calculating the discrete Fourier transform.) Again, for a real-valued f(t), the real part of F(s), for a particular value of s, is the correlation of f(t) with a cosine wave of frequency ω and a damping factor of σ, and the imaginary part of F(s) is the negative of the correlation of f(t) with a sine wave of frequency ω and a damping factor of σ (the minus sign comes from the −j term in Eq. (eqn. 5')).
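The correlation view can be checked numerically. This sketch assumes a hypothetical f(t) = e^(−t), whose table transform is 1/(s + 1) for σ > −1; it truncates the infinite integral at t = 40 s and approximates the area with the trapezoidal rule (the helper name `area` and all numeric settings are illustrative choices, not part of the text).

```python
import cmath
import math

def area(g, t_max=40.0, steps=40_000):
    """Trapezoidal-rule area under g(t) from 0 to t_max (stand-in for 0 to infinity)."""
    dt = t_max / steps
    total = 0.5 * (g(0.0) + g(t_max))
    for i in range(1, steps):
        total += g(i * dt)
    return total * dt

def f(t):
    return math.exp(-t)            # hypothetical f(t) = e^{-t}, defined for t >= 0

sigma, omega = 1.0, 2.0            # one arbitrary point s = sigma + j*omega
s = complex(sigma, omega)

# Eq. (eqn. 3): multiply f(t) by e^{-st} point for point, then take the area.
F = area(lambda t: f(t) * cmath.exp(-s * t))

# The same value as correlations with a damped cosine and a damped sine.
re_corr = area(lambda t: f(t) * math.exp(-sigma * t) * math.cos(omega * t))
im_corr = -area(lambda t: f(t) * math.exp(-sigma * t) * math.sin(omega * t))

print(F)                           # close to the table value 1/(s + 1) = 0.25 - 0.25j
print(re_corr, im_corr)            # match F.real and F.imag
```

Repeating the calculation over a grid of σ and ω values would trace out the F(s) surface point by point, which is exactly the "go through this process for all values of s" idea.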

FIG. 4 Real part (cosine) of various $e^{-st}$ functions, where s = σ + jω, to be correlated with f(t).

Now, if we associate each of the different values of the complex s variable with a point on a complex plane, rightfully called the s-plane, we could plot the real part of the F(s) correlation as a surface above (or below) that s-plane and generate a second plot of the imaginary part of the F(s) correlation as a surface above (or below) the s-plane. We can't plot the full complex F(s) surface on paper because that would require four dimensions. That's because s is complex, requiring two dimensions, and F(s) is itself complex and also requires two dimensions. What we can do, however, is graph the magnitude |F(s)| as a function of s because this graph requires only three dimensions. Let's do that as we demonstrate this notion of an |F(s)| surface by illustrating the Laplace transform in a tangible way. Say, for example, that we have the linear system shown in FIG. 5.

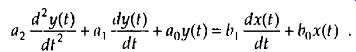

Also, let's assume that we can relate the x(t) input and the y(t) output of the linear time-invariant physical system in FIG. 5 with the following messy constant-coefficient differential equation:

$$a_2 \frac{d^2 y(t)}{dt^2} + a_1 \frac{dy(t)}{dt} + a_0\, y(t) = b_1 \frac{dx(t)}{dt} + b_0\, x(t)$$

(eqn. 6)

FIG. 5 System described by Eq. (eqn. 6). The system's input and output are the continuous time functions x(t) and y(t), respectively.

We'll use the Laplace transform toward our goal of figuring out how the system will behave when various types of input functions are applied, i.e., what the y(t) output will be for any given x(t) input.

Let's slow down here and see exactly what FIG. 5 and Eq. (eqn. 6) are telling us. First, if the system is time invariant, then the $a_n$ and $b_n$ coefficients in Eq. (eqn. 6) are constant. They may be positive or negative, zero, real or complex, but they do not change with time. If the system is electrical, the coefficients might be related to capacitance, inductance, and resistance. If the system is mechanical with masses and springs, the coefficients could be related to mass, coefficient of damping, and coefficient of resilience. Then, again, if the system is thermal with masses and insulators, the coefficients would be related to thermal capacity and thermal conductance. To keep this discussion general, though, we don't really care what the coefficients actually represent.

OK, Eq. (eqn. 6) also indicates that, ignoring the coefficients for the moment, the sum of the y(t) output plus derivatives of that output is equal to the sum of the x(t) input plus the derivative of that input. Our problem is to determine exactly what input and output functions satisfy the elaborate relationship in Eq. (eqn. 6). (For the stouthearted, classical methods of solving differential equations could be used here, but the Laplace transform makes the problem much simpler for our purposes.) Thanks to Laplace, the complex exponential time function $e^{st}$ is the one we'll use. It has the beautiful property that it can be differentiated any number of times without destroying its original form. That is

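$$\frac{d}{dt}e^{st} = s\,e^{st}, \qquad \frac{d^2}{dt^2}e^{st} = s^2 e^{st}, \qquad \dots, \qquad \frac{d^n}{dt^n}e^{st} = s^n e^{st}$$

(eqn. 7)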

If we let x(t) and y(t) be functions of $e^{st}$, namely $x(e^{st})$ and $y(e^{st})$, and use the properties shown in Eq. (eqn. 7), Eq. (eqn. 6) becomes

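$$a_2 s^2\, y(e^{st}) + a_1 s\, y(e^{st}) + a_0\, y(e^{st}) = b_1 s\, x(e^{st}) + b_0\, x(e^{st})$$

$$y(e^{st})\left(a_2 s^2 + a_1 s + a_0\right) = x(e^{st})\left(b_1 s + b_0\right)$$

(eqn. 8)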

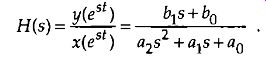

Although it's simpler than Eq. (eqn. 6), we can further simplify the relationship in the last line in Eq. (eqn. 8) by considering the ratio of $y(e^{st})$ over $x(e^{st})$ as the Laplace transfer function of our system in FIG. 5. If we call that ratio of polynomials the transfer function H(s),

$$H(s) = \frac{y(e^{st})}{x(e^{st})} = \frac{b_1 s + b_0}{a_2 s^2 + a_1 s + a_0}.$$

(eqn. 9)

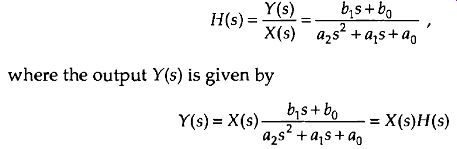

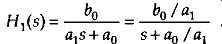

To indicate that the original x(t) and y(t) have the identical functional form of $e^{st}$, we can follow the standard Laplace notation of capital letters and show the transfer function as

$$H(s) = \frac{Y(s)}{X(s)} = \frac{b_1 s + b_0}{a_2 s^2 + a_1 s + a_0}$$

(eqn. 10)

where the output Y(s) is given by

$$Y(s) = X(s) \cdot H(s)$$

(eqn. 11)

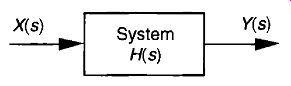

Equation (eqn. 11) leads us to redraw the original system diagram in a form that highlights the definition of the transfer function H(s) as shown in FIG. 6.

The cautious reader may be wondering, "Is it really valid to use this Laplace analysis technique when it's strictly based on the system's x(t) input being some function of $e^{st}$, or $x(e^{st})$?"

The answer is that the Laplace analysis technique, based on the complex exponential $x(e^{st})$, is valid because all practical x(t) input functions can be represented with complex exponentials. For example,

• a constant, $c = c\,e^{0t}$,

• sinusoids, $\sin(\omega t) = (e^{j\omega t} - e^{-j\omega t})/(2j)$ or $\cos(\omega t) = (e^{j\omega t} + e^{-j\omega t})/2$,

• a monotonic exponential, $e^{at}$, and

• an exponentially varying sinusoid, $e^{at}\cos(\omega t)$.
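Each item in the list above can be spot-checked numerically. This sketch compares every function with its complex-exponential form at a few arbitrary times; the function name `max_identity_error`, the constant c = 3, and the values of ω and a are hypothetical choices for illustration.

```python
import cmath
import math

def max_identity_error(omega, a, times):
    """Largest deviation between each function and its complex-exponential form."""
    worst = 0.0
    for t in times:
        e_pos = cmath.exp(1j * omega * t)            # e^{j w t}
        e_neg = cmath.exp(-1j * omega * t)           # e^{-j w t}
        checks = [
            (3.0 * cmath.exp(0 * t), 3.0),                          # constant c = c e^{0t}
            ((e_pos - e_neg) / 2j, math.sin(omega * t)),            # sine
            ((e_pos + e_neg) / 2, math.cos(omega * t)),             # cosine
            (cmath.exp(a * t), math.exp(a * t)),                    # e^{at}
            (cmath.exp(a * t) * (e_pos + e_neg) / 2,
             math.exp(a * t) * math.cos(omega * t)),                # e^{at} cos(w t)
        ]
        worst = max(worst, max(abs(z - x) for z, x in checks))
    return worst

# Hypothetical values: a 5 Hz sinusoid (omega in rad/s) and a decay constant a = -0.7
print(max_identity_error(2 * math.pi * 5.0, -0.7, [0.0, 0.013, 0.2, 1.7]))
```

The printed worst-case error should sit at the level of floating-point rounding, since each pair is mathematically identical.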

FIG. 6 Linear system described by Eqs. (eqn. 10) and (eqn. 11). The system's input is the Laplace function X(s), its output is the Laplace function Y(s), and the system transfer function is H(s).

With that said, if we know a system's transfer function H(s), we can take the Laplace transform of any x(t) input to determine X(s), multiply that X(s) by H(s) to get Y(s), and then inverse Laplace transform Y(s) to yield the time-domain expression for the output y(t). In practical situations, however, we usually don't go through all those analytical steps because it's the system's transfer function H(s) in which we're most interested. Being able to express H(s) mathematically or graph the surface |H(s)| as a function of s will tell us the two most important properties we need to know about the system under analysis: Is the system stable, and if so, what is its frequency response?
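Once H(s) is written as a ratio of polynomials in the form of Eq. (eqn. 10), evaluating it at any complex s is just polynomial arithmetic. A minimal sketch, with hypothetical coefficient values and helper names (`polyval`, `H`) chosen for illustration; coefficients are listed from the highest power of s down.

```python
def polyval(coeffs, s):
    """Horner evaluation of coeffs[0]*s**N + coeffs[1]*s**(N-1) + ... + coeffs[N]."""
    result = 0j
    for c in coeffs:
        result = result * s + c
    return result

def H(s, num, den):
    """Transfer function H(s) = num(s) / den(s) at a complex point s."""
    return polyval(num, s) / polyval(den, s)

# Hypothetical values for Eq. (eqn. 10): b1 = 1, b0 = 3, a2 = 1, a1 = 3, a0 = 2
num = [1.0, 3.0]          # b1*s + b0
den = [1.0, 3.0, 2.0]     # a2*s^2 + a1*s + a0 = (s + 1)(s + 2)

print(H(0.0, num, den))        # s = 0: 3/2
print(H(1j, num, den))         # s = j*1, one point on the frequency response
print(abs(H(1j, num, den)))    # |H(j*1)|
```

Setting s = jω (σ = 0) gives the frequency response, as the following discussion develops. Evaluating at s = −1 or s = −2, the roots of the denominator, divides by zero and Python raises `ZeroDivisionError`; those points are the poles discussed next.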

"But wait a minute," you say. "Equations (eqn. 10) and (eqn. 11) indicate that we have to know the Y(s) output before we can determine H(s)!" Not really. All we really need to know is the time-domain differential equation like that in Eq. (eqn. 6). Next we take the Laplace transform of that differential equation and rearrange the terms to get the H(s) ratio in the form of Eq. (eqn. 10). With practice, systems designers can look at a diagram (block, circuit, mechanical, whatever) of their system and promptly write the Laplace expression for H(s). Let's use the concept of the Laplace transfer function H(s) to determine the stability and frequency response of simple continuous systems.

2.1 Poles and Zeros on the s-Plane and Stability

One of the most important characteristics of any system involves the concept of stability. We can think of a system as stable if, given any bounded input, the output will always be bounded. This sounds like an easy condition to achieve because most systems we encounter in our daily lives are indeed stable. Nevertheless, we have all experienced instability in a system containing feedback. Recall the annoying howl when a public address system's microphone is placed too close to the loudspeaker. A sensational example of an unstable system occurred in western Washington when the first Tacoma Narrows Bridge began oscillating on the afternoon of November 7th, 1940. Those oscillations, caused by 42 mph winds, grew in amplitude until the bridge destroyed itself. For IIR digital filters with their built-in feedback, instability would result in a filter output that's not at all representative of the filter input; that is, our filter output samples would not be a filtered version of the input; they'd be some strange oscillating or pseudorandom values. A situation we'd like to avoid if we can, right? Let's see how.

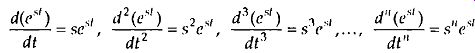

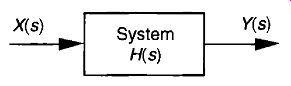

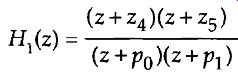

We can determine a continuous system's stability by examining several different examples of H(s) transfer functions associated with linear time-invariant systems. Assume that we have a system whose Laplace transfer function is of the form of Eq. (eqn. 10), the coefficients are all real, and the coefficients b1 and a2 are equal to zero. We'll call that Laplace transfer function H1(s), where

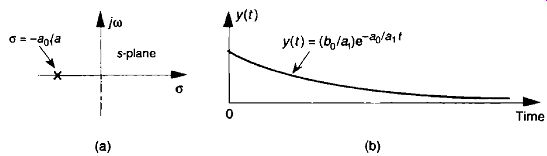

$$H_1(s) = \frac{b_0}{a_1 s + a_0} = \frac{b_0/a_1}{s + a_0/a_1}$$

(eqn. 12)

Notice that if s = −a0/a1, the denominator in Eq. (eqn. 12) equals zero and H1(s) would have an infinite magnitude. This s = −a0/a1 point on the s-plane is called a pole, and that pole's location is shown by the "x" in FIG. 7(a). Notice that the pole is located exactly on the negative portion of the real σ axis. If the system described by H1(s) were at rest and we disturbed it with an impulse-like x(t) input at time t = 0, its continuous time-domain y(t) output would be the damped exponential curve shown in FIG. 7(b). We can see that H1(s) is stable because its y(t) output approaches zero as time passes. By the way, the distance of the pole from the σ = 0 axis, a0/a1 for our H1(s), gives the decay rate of the y(t) impulse response.

To illustrate why the term pole is appropriate, FIG. 8(b) depicts the three-dimensional surface of |H1(s)| above the s-plane. Look at FIG. 8(b) carefully and see how we've reoriented the s-plane axis. This new axis orientation allows us to see how the H1(s) system's frequency magnitude response can be determined from its three-dimensional s-plane surface. If we examine the |H1(s)| surface at σ = 0, we get the bold curve in FIG. 8(b). That bold curve, the intersection of the vertical σ = 0 plane (the jω axis plane) and the |H1(s)| surface, gives us the frequency magnitude response |H1(ω)| of the system, and that's one of the things we're after here. The bold |H1(ω)| curve in FIG. 8(b) is shown in a more conventional way in FIG. 8(c). FIGs. 8(b) and 8(c) highlight the very important property that the Laplace transform is a more general case of the Fourier transform, because if σ = 0, then s = jω. In this case, the |H1(s)| curve for σ = 0 above the s-plane becomes the |H1(ω)| curve above the jω axis in FIG. 8(c).
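A small numerical sketch of H1(s), assuming hypothetical coefficients b0 = 2, a1 = 1, a0 = 4 (so the pole sits at s = −4). The impulse response uses the standard Laplace table pair 1/(s + α) ↔ e^(−αt) for t ≥ 0; the helper names are illustrative.

```python
import math

# Hypothetical coefficients for Eq. (eqn. 12); any a0/a1 > 0 puts the pole in the left half-plane.
b0, a1, a0 = 2.0, 1.0, 4.0

def H1(s):
    """First-order transfer function H1(s) = b0 / (a1*s + a0), s complex."""
    return b0 / (a1 * s + a0)

pole = -a0 / a1           # pole location on the real sigma axis: -4.0

def h1_impulse(t):
    """Impulse response (b0/a1) * e^{-(a0/a1) t}, t >= 0, from the 1/(s + alpha) table pair."""
    return (b0 / a1) * math.exp(pole * t)

# Slicing |H1(s)| along sigma = 0 (s = j*omega) gives the frequency magnitude response.
for omega in (0.0, 4.0, 40.0):
    print(f"omega = {omega:5.1f} rad/s   |H1(j omega)| = {abs(H1(1j * omega)):.4f}")

# The impulse response falls by a factor of e every a1/a0 seconds.
print(h1_impulse(0.0), h1_impulse(a1 / a0))
```

At ω = a0/a1 the magnitude is 1/√2 of its ω = 0 value, the familiar −3 dB point of a first-order low-pass response, and the same number a0/a1 sets the decay rate of the impulse response.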

FIG. 7 Descriptions of H1(s): (a) pole located at s = σ + jω = −a0/a1 + j0 on the s-plane; (b) time-domain y(t) impulse response of the system.

FIG. 8 Further depictions of H1(s): (a) pole located at σ = −a0/a1 on the s-plane; (b) |H1(s)| surface; (c) curve showing the intersection of the |H1(s)| surface and the vertical σ = 0 plane. This is the conventional depiction of the |H1(ω)| frequency magnitude response.

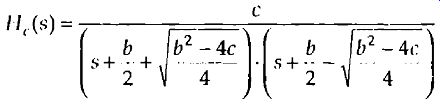

Another common system transfer function leads to an impulse response that oscillates. Let's think about an alternate system whose Laplace transfer function is of the form of Eq. (eqn. 10), the coefficient b0 equals zero, and the coefficients lead to complex terms when the denominator polynomial is factored. We'll call this particular second-order transfer function H2(s), where

$$H_2(s) = \frac{b_1 s}{a_2 s^2 + a_1 s + a_0}$$

(eqn. 13)

(By the way, when a transfer function has the Laplace variable s in both the numerator and denominator, the order of the overall function is defined by the highest power of s in the denominator polynomial. So our H2(s) is a second-order transfer function.) To keep the following equations from becoming too messy, let's factor its denominator and rewrite Eq. (eqn. 13) as

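$$H_2(s) = \frac{(b_1/a_2)\, s}{s^2 + (a_1/a_2)\, s + a_0/a_2} = \frac{A s}{(s + p)(s + p^*)}$$

(eqn. 14)

where A = b1/a2, p = p_real + jp_imag, and p* = p_real − jp_imag (the complex conjugate of p).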

Notice that, if s is equal to −p or −p*, one of the factors in the denominator of Eq. (eqn. 14) will equal zero, and H2(s) will have an infinite magnitude. Those two complex poles, shown in FIG. 9(a), are located off the negative portion of the real σ axis. If the H2 system were at rest and we disturbed it with an impulse-like x(t) input at time t = 0, its continuous time-domain y(t) output would be the damped sinusoidal curve shown in FIG. 9(b). We see that H2(s) is stable because its oscillating y(t) output, like a plucked guitar string, approaches zero as time increases. Again, the distance of the poles from the σ = 0 axis (p_real) gives the decay rate of the sinusoidal y(t) impulse response. Likewise, the distance of the poles from the real (ω = 0) axis (±p_imag) gives the frequency, in radians/second, of the sinusoidal y(t) impulse response. Notice something new in FIG. 9(a). When s = 0, the numerator of Eq. (eqn. 14) is zero, making the transfer function H2(s) equal to zero. Any value of s where H2(s) = 0 is sometimes of interest and is usually plotted on the s-plane as the little circle, called a "zero," shown in FIG. 9(a). At this point we're not very interested in knowing exactly what p and p* are in terms of the coefficients in the denominator of Eq. (eqn. 13). However, an energetic reader could determine the values of p and p* in terms of a0, a1, and a2 by using the following well-known quadratic factorization formula: Given the second-order polynomial

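$f(s) = as^2 + bs + c$, then f(s) can be factored as

$$f(s) = a\left(s + \frac{b}{2a} + \frac{\sqrt{b^2 - 4ac}}{2a}\right)\left(s + \frac{b}{2a} - \frac{\sqrt{b^2 - 4ac}}{2a}\right)$$

(eqn. 15)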
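The factorization in Eq. (eqn. 15) translates directly into a complex-safe root calculation. This sketch uses Python's `cmath.sqrt` so a negative discriminant yields a conjugate pair instead of an error; the denominator coefficients a2 = 1, a1 = 2, a0 = 26 are hypothetical. With poles at −1 ± j5, the notation of Eq. (eqn. 14) gives p_real = 1 (decay rate) and p_imag = 5 (ringing frequency in rad/s).

```python
import cmath

def quadratic_roots(a, b, c):
    """Roots of a*s**2 + b*s + c, i.e. the values of s that zero each factor of Eq. (eqn. 15)."""
    disc = cmath.sqrt(b * b - 4 * a * c)      # complex square root handles b^2 < 4ac
    return (-b + disc) / (2 * a), (-b - disc) / (2 * a)

# Hypothetical H2(s) denominator a2*s^2 + a1*s + a0 with a1^2 < 4*a2*a0 (complex poles)
a2, a1, a0 = 1.0, 2.0, 26.0
r1, r2 = quadratic_roots(a2, a1, a0)
print(r1, r2)                                  # conjugate pair at -1 + 5j and -1 - 5j

# Pole distance from the sigma = 0 axis -> decay rate; from the real axis -> ringing frequency
print("decay rate:", -r1.real, " ringing frequency (rad/s):", abs(r1.imag))
```

When b² ≥ 4ac the same function returns two real roots, which corresponds to a non-oscillating (overdamped) second-order response.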

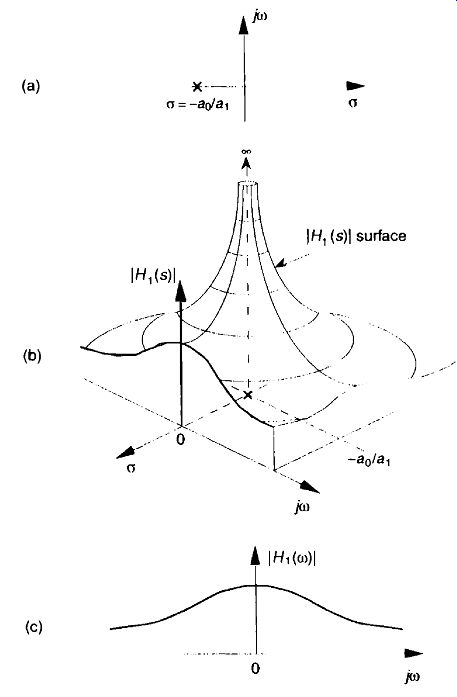

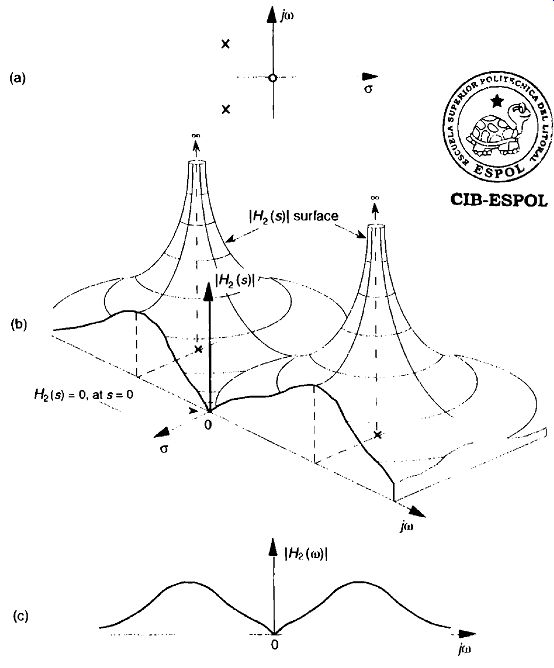

FIG. 10(b) illustrates the |H2(s)| surface above the s-plane. Again, the bold |H2(ω)| curve in FIG. 10(b) is shown in the conventional way in FIG. 10(c) to indicate the frequency magnitude response of the system described by Eq. (eqn. 13). Although the three-dimensional surfaces in FIGs. 8(b) and 10(b) are informative, they're also unwieldy and unnecessary.

FIG. 9 Descriptions of H2(s): (a) poles located at s = −p_real ± jp_imag on the s-plane; (b) time-domain y(t) impulse response of the system.

FIG. 10 Further depictions of H2(s): (a) pole and zero locations on the s-plane; (b) |H2(s)| surface; (c) |H2(ω)| frequency magnitude response curve.

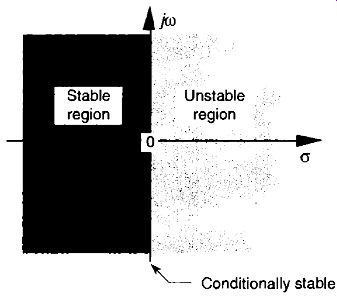

We can determine a system's stability merely by looking at the locations of the poles on the two-dimensional s-plane.

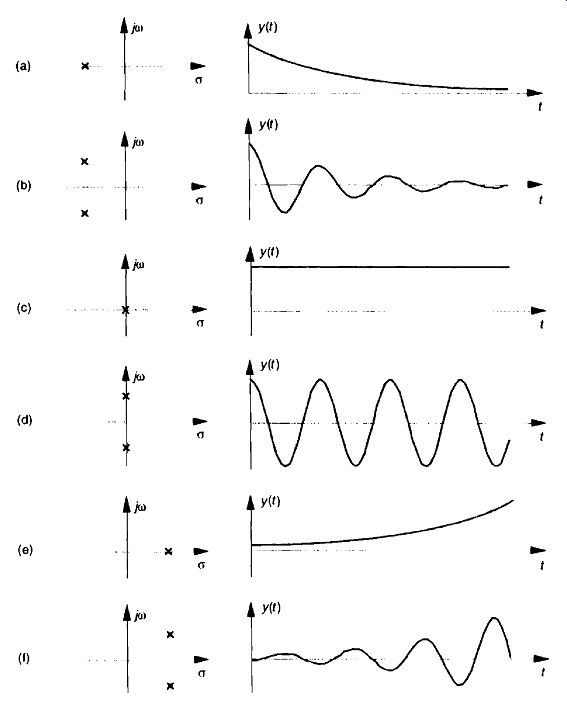

To further illustrate the concept of system stability, FIG. 11 shows the s-plane pole locations of several example Laplace transfer functions and their corresponding time-domain impulse responses. We recognize Figures 11(a) and 11(b), from our previous discussion, as indicative of stable systems.

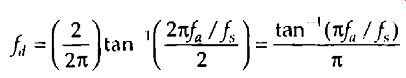

When disturbed from their at-rest condition they respond and, at some later time, return to that initial condition. The single pole location at s = 0 in FIG. 11(c) is indicative of the 1/s transfer function of a single element of a linear system. In an electrical system, this 1/s transfer function could be a capacitor that was charged with an impulse of current, and there's no discharge path in the circuit. For a mechanical system, FIG. 11(c) would describe a kind of spring that's compressed with an impulse of force and, for some reason, remains under compression. Notice, in FIG. 11(d), that if an H(s) transfer function has conjugate poles located exactly on the jω axis (σ = 0), the system will go into oscillation when disturbed from its initial condition. This situation, usually called marginal stability, happens to describe the intentional transfer function of electronic oscillators. Instability is indicated in Figures 11(e) and 11(f). Here, the poles lie to the right of the jω axis. When disturbed from their initial at-rest condition by an impulse input, their outputs grow without bound. See how the value of σ, the real part of s, for the pole locations is the key here? When σ < 0, the system is well behaved and stable; when σ = 0, the system is marginally stable; and when σ > 0, the system is unstable. So we can say that, when a pole is located on the right half of the s-plane, the system is unstable. We show this characteristic of linear continuous systems in FIG. 12. Keep in mind that real-world systems often have more than two poles, and a system is only as stable as its least stable pole. For a system to be stable, all of its transfer-function poles must lie on the left half of the s-plane.
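The rule "a system is only as stable as its least stable pole" reduces to checking the real part σ of every pole. A minimal sketch, assuming the poles are already known; the function name `classify_poles`, the tolerance, and the pole values (patterned after the six cases of FIG. 11) are hypothetical.

```python
def classify_poles(poles, tol=1e-12):
    """Classify a continuous LTI system from the real parts (sigma) of its s-plane poles.

    "unstable": any pole has sigma > 0 (right half-plane)
    "poles on jw axis": none to the right, but at least one with sigma = 0 (boundary case)
    "stable": every pole has sigma < 0 (left half-plane)
    Sketch only: repeated poles on the jw axis (whose output also grows) are not singled out.
    """
    sigmas = [complex(p).real for p in poles]
    if any(sigma > tol for sigma in sigmas):
        return "unstable"
    if any(abs(sigma) <= tol for sigma in sigmas):
        return "poles on jw axis"
    return "stable"

# Hypothetical pole sets patterned after the six cases of FIG. 11
examples = {
    "(a)": [-2.0],
    "(b)": [-1 + 5j, -1 - 5j],
    "(c)": [0.0],
    "(d)": [3j, -3j],
    "(e)": [0.5],
    "(f)": [0.5 + 2j, 0.5 - 2j],
}
for label, poles in examples.items():
    print(label, classify_poles(poles))
```

The tolerance matters because poles computed numerically rarely land exactly on σ = 0; a pole at σ = 1e-15 should usually be treated as sitting on the axis.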

[Impulse response testing in a laboratory can be an important part of the system design process. The difficult part is generating a true impulse-like input. If the system is electrical, for example, although somewhat difficult to implement, the input x(t) impulse would be a very short duration voltage or current pulse. If, however, the system were mechanical, a whack with a hammer would suffice as an x(t) impulse input. For digital systems, on the other hand, an impulse input is easy to generate; it's a single unity-valued sample preceded and followed by all zero-valued samples.]

FIG. 11 Various H(s) pole locations and their time-domain impulse responses: (a) single pole at σ < 0; (b) conjugate poles at σ < 0; (c) single pole located at σ = 0; (d) conjugate poles located at σ = 0; (e) single pole at σ > 0; (f) conjugate poles at σ > 0.

FIG. 12 The Laplace s-plane showing the regions of stability and instability for pole locations for linear continuous systems.

To consolidate what we've learned so far: H(s) is determined by writing a linear system's time-domain differential equation and taking the Laplace transform of that equation to obtain a Laplace expression in terms of X(s), Y(s), s, and the system's coefficients. Next we rearrange the Laplace expression terms to get the H(s) ratio in the form of Eq. (eqn. 10). (The really slick part is that we do not have to know what the time-domain x(t) input is to analyze a linear system!) We can get the expression for the continuous frequency response of a system just by substituting jω for s in the H(s) equation. To determine system stability, the denominator polynomial of H(s) is factored to find each of its roots. Each factor is set equal to zero and solved for s to find the location of the system poles on the s-plane. Any pole located to the right of the jω axis on the s-plane will indicate an unstable system.
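For denominators beyond second order, the factoring step is usually done numerically. This sketch swaps in the Durand-Kerner (Weierstrass) simultaneous-iteration root finder, a technique chosen here for illustration since the text does not prescribe one. It assumes a nonzero leading coefficient, distinct (non-repeated) roots, and coefficients listed from the highest power of s down; the names `poly_roots` and `is_stable` are hypothetical.

```python
def poly_roots(coeffs, iterations=200):
    """Approximate all roots of coeffs[0]*s**N + ... + coeffs[N] with Durand-Kerner.

    Sketch: assumes coeffs[0] != 0, N >= 1, and distinct roots.
    """
    monic = [complex(c) / coeffs[0] for c in coeffs]   # scale to a leading coefficient of 1
    n = len(monic) - 1

    def p(s):
        # Horner evaluation of the monic polynomial at complex s
        result = 0j
        for c in monic:
            result = result * s + c
        return result

    roots = [(0.4 + 0.9j) ** k for k in range(n)]     # conventional distinct starting guesses
    for _ in range(iterations):
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= roots[i] - roots[j]
            roots[i] -= p(roots[i]) / denom            # Weierstrass correction, updated in place
    return roots


def is_stable(den_coeffs):
    """True if every root of the H(s) denominator (every pole) has a negative real part."""
    return all(r.real < 0 for r in poly_roots(den_coeffs))


print(is_stable([1.0, 6.0, 11.0, 6.0]))   # (s + 1)(s + 2)(s + 3): poles at -1, -2, -3
print(is_stable([1.0, 1.0, -2.0]))        # (s - 1)(s + 2): one pole at s = +1
```

For production work a well-tested library root finder is the safer choice; the point here is only that "factor the denominator, then check each pole's real part" is a mechanical procedure.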

OK, returning to our original goal of understanding the z-transform, the process of analyzing IIR filter systems requires us to replace the Laplace transform with the z-transform and to replace the s-plane with a z-plane. Let's introduce the z-transform, determine what this new z-plane is, discuss the stability of IIR filters, and design and analyze a few simple IIR filters.

[In the early 1960s, James Kaiser, after whom the Kaiser window function is named, consolidated the theory of digital filters using a mathematical description known as the z-transform. Until that time, the use of the z-transform had generally been restricted to the field of discrete control systems. ]

3. THE Z-TRANSFORM

The z-transform is the discrete-time cousin of the continuous Laplace transform. While the Laplace transform is used to simplify the analysis of continuous differential equations, the z-transform facilitates the analysis of discrete difference equations. Let's define the z-transform and explore its important characteristics to see how it's used in analyzing IIR digital filters.

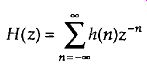

The z-transform of a discrete sequence h(n), expressed as H(z), is defined as

$$ H(z) = \sum_{n=-\infty}^{\infty} h(n)\,z^{-n} \qquad \text{(eqn. 16)} $$

where the variable z is complex. Where Eq. (eqn. 3) allowed us to take the Laplace transform of a continuous signal, the z-transform is performed on a discrete h(n) sequence, converting that sequence into a continuous function H(z) of the continuous complex variable z. Similarly, as the function $e^{st}$ is the general form for the solution of linear differential equations, $z^{-n}$ is the general form for the solution of linear difference equations. Moreover, as a Laplace function F(s) is a continuous surface above the s-plane, the z-transform function F(z) is a continuous surface above a z-plane. To whet your appetite, we'll now state that, if H(z) represents an IIR filter's z-domain transfer function, evaluating the H(z) surface will give us the filter's frequency magnitude response, and H(z)'s pole locations will determine the stability of the filter.

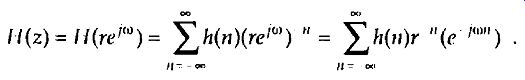

We can determine the frequency response of an IIR digital filter by expressing z in polar form as $z = re^{j\omega}$, where r is a magnitude and ω is the angle.

In this form, the z-transform equation becomes

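$$ H(z) = H(re^{j\omega}) = \sum_{n=-\infty}^{\infty} h(n)\,(re^{j\omega})^{-n} = \sum_{n=-\infty}^{\infty} h(n)\,r^{-n}e^{-j\omega n} \qquad \text{(eqn. 17)} $$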

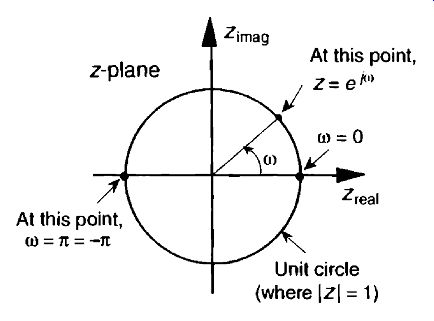

FIG. 13 Unit circle on the complex z-plane.

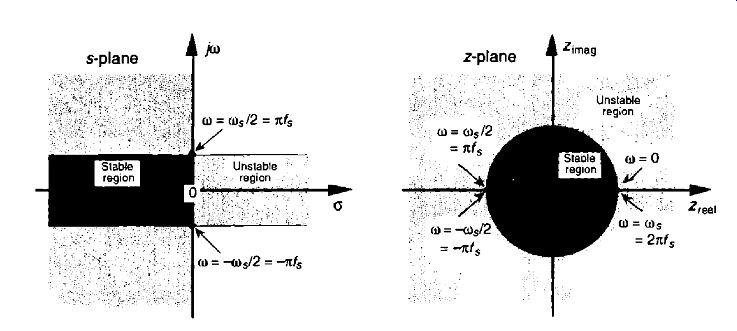

FIG. 14 Mapping of the Laplace s-plane to the z-plane. All frequency values are in radians/s.

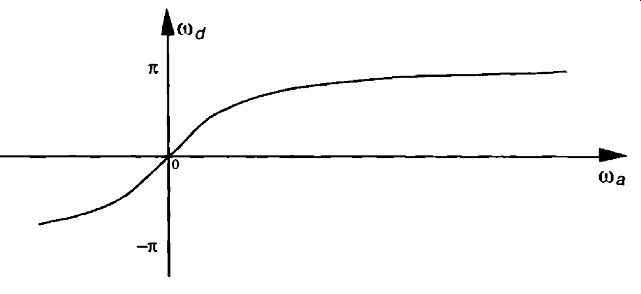

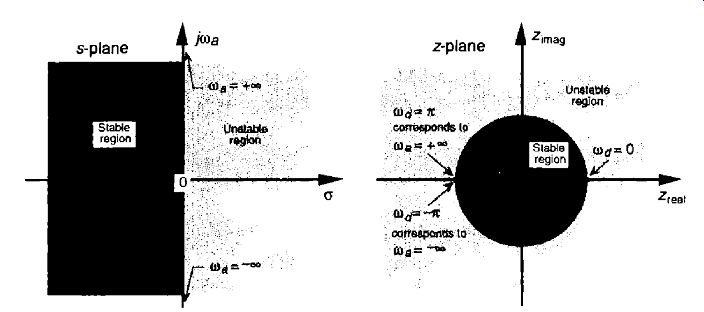

Equation (eqn. 17) can be interpreted as the Fourier transform of the product of the original sequence h(n) and the exponential sequence $r^{-n}$. When r equals one, Eq. (eqn. 17) simplifies to the Fourier transform. Thus on the z-plane, the contour of the H(z) surface for those values where |z| = 1 is the Fourier transform of h(n). If h(n) represents a filter impulse response sequence, evaluating the H(z) transfer function for |z| = 1 yields the frequency response of the filter. So where on the z-plane is |z| = 1? It's a circle with a radius of one, centered about the z = 0 point. This circle, so important that it's been given the name unit circle, is shown in FIG. 13. Recall that the jω frequency axis on the continuous Laplace s-plane was linear and ranged from −∞ to +∞ radians/s. The ω frequency axis on the complex z-plane, however, spans only the range from −π to +π radians. With this relationship between the jω axis on the Laplace s-plane and the unit circle on the z-plane, we can see that the z-plane frequency axis is equivalent to coiling the s-plane's jω axis about the unit circle on the z-plane as shown in FIG. 14.

Thus, frequency ω on the z-plane is not a distance along a straight line, but rather an angle around a circle. With ω in FIG. 13 being a general normalized angle in radians ranging from −π to +π, we can relate ω to an equivalent $f_s$ sampling rate by defining a new frequency variable $\omega_s = 2\pi f_s$ in radians/s. The periodicity of discrete frequency representations, with a period of $\omega_s = 2\pi f_s$ radians/s or $f_s$ Hz, is indicated for the z-plane in FIG. 14.

Where a walk along the jω frequency axis on the s-plane could take us to infinity in either direction, a trip on the ω frequency path on the z-plane leads us in circles (on the unit circle). FIG. 14 shows us that only the $-\pi f_s$ to $+\pi f_s$ radians/s frequency range for ω can be accounted for on the z-plane, and this is another example of the universal periodicity of the discrete frequency domain. (Of course the $-\pi f_s$ to $+\pi f_s$ radians/s range corresponds to a cyclic frequency range of $-f_s/2$ to $+f_s/2$ Hz.) With the perimeter of the unit circle being $z = e^{j\omega}$, we'll later show exactly how to substitute $e^{j\omega}$ for z in a filter's H(z) transfer function, giving us the filter's frequency response.
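The link between Eq. (eqn. 17) and the Fourier transform can be checked numerically. This is a minimal sketch assuming a hypothetical causal test sequence $h(n) = 0.5^n$, truncated to 60 samples: on the unit circle, H(z) matches the Fourier transform of h(n); at radius r = 2, it matches the Fourier transform of $h(n)r^{-n}$.

```python
import cmath
import math


def z_transform(h, z):
    """H(z) = sum of h(n) * z**-n for a finite causal sequence h(0), h(1), ..."""
    return sum(hn * z ** (-n) for n, hn in enumerate(h))


def fourier_transform(h, w):
    """Fourier transform of h(n) at normalized radian frequency w."""
    return sum(hn * cmath.exp(-1j * w * n) for n, hn in enumerate(h))


# Hypothetical test sequence h(n) = 0.5**n, truncated to 60 samples.
h = [0.5 ** n for n in range(60)]
w = math.pi / 3

# |z| = 1: H(z) on the unit circle is the Fourier transform of h(n).
on_circle = z_transform(h, cmath.exp(1j * w))

# |z| = r: H(z) is the Fourier transform of h(n) * r**-n (eqn. 17).
r = 2.0
off_circle = z_transform(h, r * cmath.exp(1j * w))
weighted = fourier_transform([hn * r ** (-n) for n, hn in enumerate(h)], w)

# Closed form of the untruncated sequence, 1 / (1 - 0.5 z**-1), for comparison.
closed_form = 1 / (1 - 0.5 * cmath.exp(-1j * w))
```

Because $0.5^{60}$ is negligible, the truncated sum and the closed form agree to within floating-point rounding.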

3.1 Poles and Zeros on the z-Plane and Stability

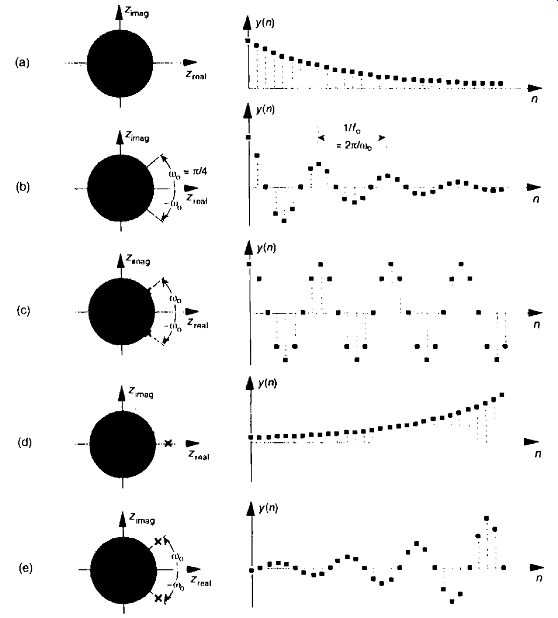

FIG. 15 Various H(z) pole locations and their discrete time-domain impulse responses: (a) single pole inside the unit circle; (b) conjugate poles located inside the unit circle; (c) conjugate poles located on the unit circle; (d) single pole outside the unit circle; (e) conjugate poles located outside the unit circle.

One of the most important characteristics of the z-plane is that the region of filter stability is mapped to the inside of the unit circle on the z-plane. Given the H(z) transfer function of a digital filter, we can examine that function's pole locations to determine filter stability. If all poles are located inside the unit circle, the filter will be stable. On the other hand, if any pole is located outside the unit circle, the filter will be unstable. FIG. 15 shows the z-plane pole locations of several example z-domain transfer functions and their corresponding discrete time-domain impulse responses. It's a good idea for the reader to compare the z-plane and discrete-time responses of FIG. 15 with the s-plane and the continuous time responses of FIG. 11. The y(n) outputs in FIGs. 15(d) and (e) show examples of how unstable filter outputs increase in amplitude, as time passes, whenever their x(n) inputs are nonzero. To avoid this situation, any IIR digital filter that we design should have an H(z) transfer function with all of its individual poles inside the unit circle. Like a chain that's only as strong as its weakest link, an IIR filter is only as stable as its least stable pole.

The $\omega_o$ oscillation frequency of the impulse responses in FIGs. 15(c) and (e) is, of course, proportional to the angle of the conjugate pole pairs from the $z_{real}$ axis, or $\omega_o$ radians/s corresponding to $f_o = \omega_o/2\pi$ Hz. Because the intersection of the $-z_{real}$ axis and the unit circle, point z = −1, corresponds to π radians (or $\pi f_s$ radians/s = $f_s/2$ Hz), the $\omega_o$ angle of π/4 in FIG. 15 means that $f_o = f_s/8$ and our y(n) will have eight samples per cycle of $f_o$.
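Three of the FIG. 15 cases can be reproduced with a short sketch. It computes the impulse response of $y(n) = x(n) + a_1 y(n-1) + a_2 y(n-2)$, whose denominator is $z^2 - a_1 z - a_2$, for hypothetical pole placements: a real pole at 0.8 (inside the unit circle), a real pole at 1.2 (outside), and a conjugate pair at $e^{\pm j\pi/4}$ (on the circle), the last of which should repeat every eight samples.

```python
import math


def impulse_response(a1, a2, n_samples):
    """Impulse response of y(n) = x(n) + a1*y(n-1) + a2*y(n-2).

    The denominator polynomial is z**2 - a1*z - a2, so its roots are the poles.
    """
    y = []
    for n in range(n_samples):
        x = 1.0 if n == 0 else 0.0
        y1 = y[n - 1] if n >= 1 else 0.0
        y2 = y[n - 2] if n >= 2 else 0.0
        y.append(x + a1 * y1 + a2 * y2)
    return y


# (a) single real pole at z = 0.8, inside the unit circle: decays.
inside = impulse_response(0.8, 0.0, 40)

# (d) single real pole at z = 1.2, outside the unit circle: grows without bound.
outside = impulse_response(1.2, 0.0, 40)

# (c) conjugate poles at z = exp(+/- j*pi/4), on the unit circle:
#     (z - e^{jw0})(z - e^{-jw0}) = z**2 - 2cos(w0) z + 1, so a1 = 2cos(w0), a2 = -1.
w0 = math.pi / 4
on_circle = impulse_response(2 * math.cos(w0), -1.0, 40)
```

For the on-circle pair the response is $\sin((n+1)\omega_o)/\sin(\omega_o)$, a sinusoid of period $2\pi/\omega_o = 8$ samples that neither grows nor decays.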

FIG. 16 Output sequence y(n) equal to a unit-delayed version of the input x(n) sequence.

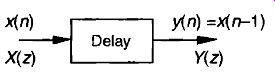

3.2 Using the z-Transform to Analyze IIR Filters

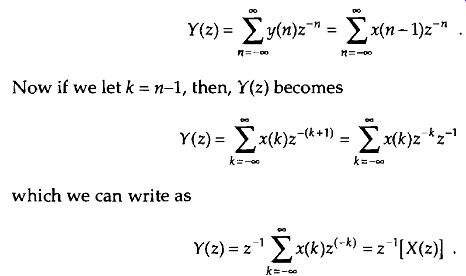

We have one last concept to consider before we can add the z-transform to our collection of digital signal processing tools. We need to determine what the time delay operation in FIG. 3 is relative to the z-transform. To do this, assume we have a sequence x(n) whose z-transform is X(z) and a sequence y(n) = x(n-1) whose z-transform is Y(z) as shown in FIG. 16. The z-transform of y(n) is, by definition,

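$$ Y(z) = \sum_{n=-\infty}^{\infty} y(n)\,z^{-n} = \sum_{n=-\infty}^{\infty} x(n-1)\,z^{-n} \qquad \text{(eqn. 18)} $$

Letting k = n − 1, so that n = k + 1,

$$ Y(z) = \sum_{k=-\infty}^{\infty} x(k)\,z^{-(k+1)} = \sum_{k=-\infty}^{\infty} x(k)\,z^{-k}z^{-1} \qquad \text{(eqn. 19)} $$

$$ Y(z) = z^{-1}\sum_{k=-\infty}^{\infty} x(k)\,z^{-k} = z^{-1}X(z) \qquad \text{(eqn. 20)} $$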

Thus, the effect of a single unit of time delay is to multiply the z-transform by $z^{-1}$.
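The delay property can be confirmed numerically. This sketch assumes an arbitrary five-sample sequence and an arbitrary test point $z_0$ on the z-plane; delaying the sequence by k samples multiplies its z-transform by $z_0^{-k}$.

```python
import cmath


def z_transform(seq, z):
    """X(z) = sum of seq(n) * z**-n for a finite causal sequence."""
    return sum(v * z ** (-n) for n, v in enumerate(seq))


def delay(seq, k):
    """y(n) = x(n - k): prepend k zero-valued samples."""
    return [0.0] * k + list(seq)


x = [3.0, -1.0, 4.0, 1.0, -5.0]        # arbitrary example x(n)
z0 = 0.9 * cmath.exp(0.7j)             # arbitrary point on the z-plane

X = z_transform(x, z0)
Y1 = z_transform(delay(x, 1), z0)      # one-sample delay: expect X * z0**-1
Y3 = z_transform(delay(x, 3), z0)      # three-sample delay: expect X * z0**-3
```

Any nonzero $z_0$ works, because the identity holds term by term in the sum.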

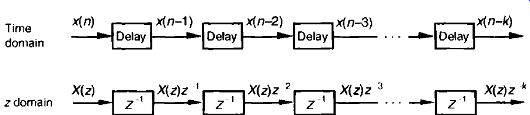

Interpreting a unit time delay to be equivalent to the $z^{-1}$ operator leads us to the relationship shown in FIG. 17, where we can say $X(z)z^0 = X(z)$ is the z-transform of x(n), $X(z)z^{-1}$ is the z-transform of x(n) delayed by one sample, $X(z)z^{-2}$ is the z-transform of x(n) delayed by two samples, and $X(z)z^{-k}$ is the z-transform of x(n) delayed by k samples. So a transfer function of $z^{-k}$ is equivalent to a delay of $kt_s$ seconds from the instant when t = 0, where $t_s$ is the period between data samples, or one over the sample rate. Specifically, $t_s = 1/f_s$. Because a delay of one sample is equivalent to the factor $z^{-1}$, the unit time delay symbol used in FIGs. 2 and 3 is usually indicated by the $z^{-1}$ operator.

Let's pause for a moment and consider where we stand so far. Our acquaintance with the Laplace transform with its s-plane, the concept of stability based on H(s) pole locations, the introduction of the z-transform with its z-plane poles, and the concept of the $z^{-1}$ operator signifying a single unit of time delay has led us to our goal: the ability to inspect an IIR filter difference equation or filter structure and immediately write the filter's z-domain transfer function H(z). Accordingly, by evaluating an IIR filter's H(z) transfer function appropriately, we can determine the filter's frequency response and its stability. With those ambitious thoughts in mind, let's develop the z-domain equations we need to analyze IIR filters.

FIG. 17 Correspondence of the delay operation in the time domain and the $z^{-k}$ operation in the z-domain.

FIG. 18 General (Direct Form I) structure of an Mth-order IIR filter, having N feedforward stages and M feedback stages, with the $z^{-1}$ operator indicating a unit time delay.

Using the relationships of FIG. 17, we redraw FIG. 3 as a general Mth-order IIR filter using the $z^{-1}$ operator as shown in FIG. 18. (In hardware, those $z^{-1}$ operations are memory locations holding successive filter input and output sample values. When implementing an IIR filter in a software routine, the $z^{-1}$ operation merely indicates sequential memory locations where input and output sequences are stored.) The IIR filter structure in FIG. 18 is often called the Direct Form I structure.

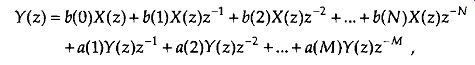

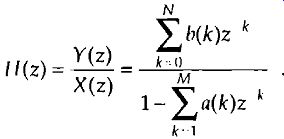

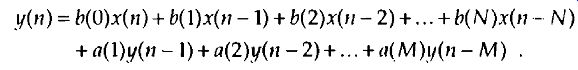

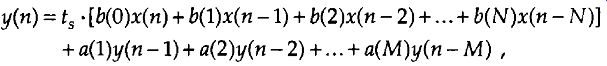

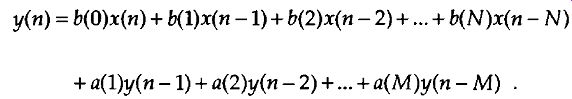

The time-domain difference equation describing the general Mth-order IIR filter in FIG. 18, having N feedforward stages and M feedback stages, is

$$ y(n) = \sum_{k=0}^{N} b(k)\,x(n-k) + \sum_{k=1}^{M} a(k)\,y(n-k) \qquad \text{(eqn. 21)} $$
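A Direct Form I filter in software is just two delay lines and two sums of products. This is a minimal sketch of the general difference equation, assuming the sign convention used in this text, where the a(k) feedback products are added to the output; the function name is illustrative.

```python
def direct_form_1(b, a, x):
    """Direct Form I IIR filter computing the general difference equation.

    b: feedforward coefficients b(0) ... b(N)
    a: feedback coefficients a(1) ... a(M), in this text's convention,
       where the feedback products are ADDED to the output.
    """
    x_delay = [0.0] * len(b)    # holds x(n), x(n-1), ..., x(n-N)
    y_delay = [0.0] * len(a)    # holds y(n-1), y(n-2), ..., y(n-M)
    y = []
    for xn in x:
        x_delay = [xn] + x_delay[:-1]            # shift the input delay line
        yn = sum(bk * xk for bk, xk in zip(b, x_delay))
        yn += sum(ak * yk for ak, yk in zip(a, y_delay))
        if y_delay:
            y_delay = [yn] + y_delay[:-1]        # shift the output delay line
        y.append(yn)
    return y


if __name__ == "__main__":
    # Single feedback stage a(1) = 0.5: the impulse response halves each sample.
    print(direct_form_1([1.0], [0.5], [1.0, 0.0, 0.0, 0.0]))
```

The list-shifting delay lines mirror the $z^{-1}$ blocks of FIG. 18; a production version would use a circular buffer instead.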

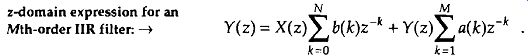

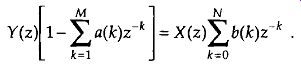

In the z-domain, that IIR filter's output can be expressed by

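$$ Y(z) = b(0)X(z) + b(1)X(z)z^{-1} + \cdots + b(N)X(z)z^{-N} + a(1)Y(z)z^{-1} + \cdots + a(M)Y(z)z^{-M} \qquad \text{(eqn. 22)} $$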

where Y(z) and X(z) represent the z-transforms of y(n) and x(n). Look over Eqs. (eqn. 21) and (eqn. 22) carefully and see how the unit time delays translate to negative powers of z in the z-domain expression. A more compact form for Y(z) is

$$ Y(z) = X(z)\sum_{k=0}^{N} b(k)\,z^{-k} + Y(z)\sum_{k=1}^{M} a(k)\,z^{-k} \qquad \text{(eqn. 23)} $$

OK, now we've arrived at the point where we can describe the transfer function of a general IIR filter. Rearranging Eq. (eqn. 23) to collect like terms,

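$$ Y(z)\left[1 - \sum_{k=1}^{M} a(k)\,z^{-k}\right] = X(z)\sum_{k=0}^{N} b(k)\,z^{-k} \qquad \text{(eqn. 24)} $$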

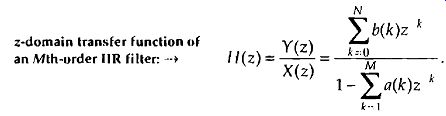

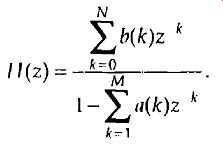

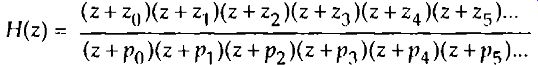

Finally, we define the filter's z-domain transfer function as H(z) = Y(z)/X(z), where H(z) is given by

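$$ H(z) = \frac{Y(z)}{X(z)} = \frac{\sum_{k=0}^{N} b(k)\,z^{-k}}{1 - \sum_{k=1}^{M} a(k)\,z^{-k}} \qquad \text{(eqn. 25)} $$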

(Just like Laplace transfer functions, the order of our z-domain transfer function is defined by the largest exponential order of z in the denominator, in this case M.) Equation (eqn. 25) tells us all we need to know about an IIR filter. We can evaluate the denominator of Eq. (eqn. 25) to determine the positions of the filter's poles on the z-plane, which indicate the filter's stability.

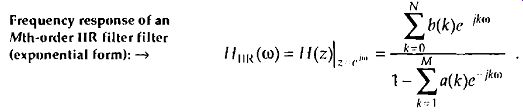

Remember, now, just as the Laplace transfer function H(s) in Eq. (eqn. 9) was a complex-valued surface on the s-plane, H(z) is a complex-valued surface above, or below, the z-plane. The intersection of that H(z) surface and the perimeter of a cylinder representing the $z = e^{j\omega}$ unit circle is the filter's complex frequency response. This means that substituting $e^{j\omega}$ for z in Eq. (eqn. 25)'s transfer function gives us the expression for the filter's $H_{IIR}(\omega)$ frequency response as

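$$ H_{IIR}(\omega) = H(z)\big|_{z=e^{j\omega}} = \frac{\sum_{k=0}^{N} b(k)\,e^{-jk\omega}}{1 - \sum_{k=1}^{M} a(k)\,e^{-jk\omega}} \qquad \text{(eqn. 26)} $$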

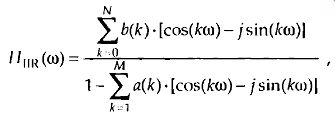

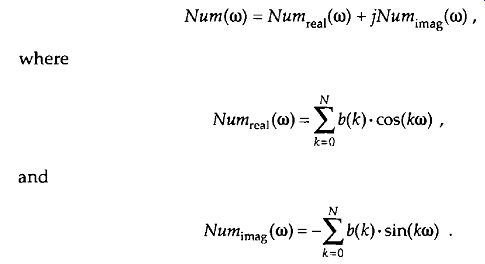

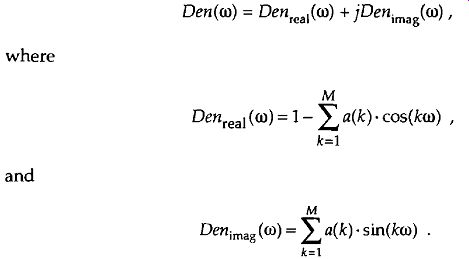

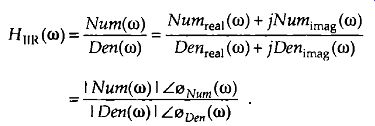

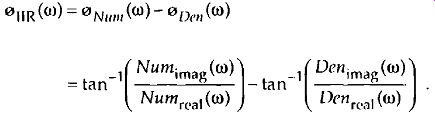

Let's alter the form of Eq. (eqn. 26) to obtain more useful expressions for $H_{IIR}(\omega)$'s frequency magnitude and phase responses. Because a typical IIR filter frequency response $H_{IIR}(\omega)$ is the ratio of two complex functions, we can use Euler's identity, $e^{-jk\omega} = \cos(k\omega) - j\sin(k\omega)$, to express $H_{IIR}(\omega)$ in its equivalent rectangular form as

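$$ H_{IIR}(\omega) = \frac{\sum_{k=0}^{N} b(k)\,[\cos(k\omega) - j\sin(k\omega)]}{1 - \sum_{k=1}^{M} a(k)\,[\cos(k\omega) - j\sin(k\omega)]} \qquad \text{(eqn. 27)} $$

$$ H_{IIR}(\omega) = \frac{\sum_{k=0}^{N} b(k)\cos(k\omega) - j\sum_{k=0}^{N} b(k)\sin(k\omega)}{1 - \sum_{k=1}^{M} a(k)\cos(k\omega) + j\sum_{k=1}^{M} a(k)\sin(k\omega)} \qquad \text{(eqn. 28)} $$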

It's usually easier and, certainly, more useful to consider the complex frequency response expression in terms of its magnitude and phase. Let's do this by representing the numerator and denominator in Eq. (eqn. 28) as two complex functions of radian frequency ω. Calling the numerator of Eq. (eqn. 28) Num(ω), then,

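$$ Num(\omega) = \sum_{k=0}^{N} b(k)\cos(k\omega) - j\sum_{k=0}^{N} b(k)\sin(k\omega) = R_{num}(\omega) + jI_{num}(\omega) \qquad \text{(eqn. 29)} $$

where

$$ R_{num}(\omega) = \sum_{k=0}^{N} b(k)\cos(k\omega), \qquad I_{num}(\omega) = -\sum_{k=0}^{N} b(k)\sin(k\omega). \qquad \text{(eqn. 30)} $$

Likewise, calling the denominator of Eq. (eqn. 28) Den(ω),

$$ Den(\omega) = 1 - \sum_{k=1}^{M} a(k)\cos(k\omega) + j\sum_{k=1}^{M} a(k)\sin(k\omega) = R_{den}(\omega) + jI_{den}(\omega) \qquad \text{(eqn. 31)} $$

where

$$ R_{den}(\omega) = 1 - \sum_{k=1}^{M} a(k)\cos(k\omega), \qquad I_{den}(\omega) = \sum_{k=1}^{M} a(k)\sin(k\omega). \qquad \text{(eqn. 32)} $$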

These Num(ω) and Den(ω) definitions allow us to represent $H_{IIR}(\omega)$ in the simpler forms of

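$$ H_{IIR}(\omega) = \frac{Num(\omega)}{Den(\omega)} = \frac{R_{num}(\omega) + jI_{num}(\omega)}{R_{den}(\omega) + jI_{den}(\omega)} \qquad \text{(eqn. 33)} $$

$$ H_{IIR}(\omega) = \frac{|Num(\omega)|\,e^{j\phi_{num}(\omega)}}{|Den(\omega)|\,e^{j\phi_{den}(\omega)}} \qquad \text{(eqn. 33')} $$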


Given the form in Eq. (eqn. 33) and the rules for dividing one complex number by another, provided by Eqs. (A-2) and (A-19'), the frequency magnitude response of a general IIR filter is

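$$ |H_{IIR}(\omega)| = \frac{|Num(\omega)|}{|Den(\omega)|} = \frac{\sqrt{R_{num}(\omega)^2 + I_{num}(\omega)^2}}{\sqrt{R_{den}(\omega)^2 + I_{den}(\omega)^2}} \qquad \text{(eqn. 34)} $$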

Furthermore, the filter's phase response $\phi_{IIR}(\omega)$ is the phase of the numerator minus the phase of the denominator, or

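$$ \phi_{IIR}(\omega) = \phi_{num}(\omega) - \phi_{den}(\omega) = \tan^{-1}\!\left(\frac{I_{num}(\omega)}{R_{num}(\omega)}\right) - \tan^{-1}\!\left(\frac{I_{den}(\omega)}{R_{den}(\omega)}\right) \qquad \text{(eqn. 35)} $$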
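The magnitude and phase expressions translate directly into code. This is a sketch under the text's a(k) sign convention: it forms the real and imaginary parts of the numerator and denominator (as Eqs. (eqn. 30) and (eqn. 32) define them), then cross-checks against a direct complex evaluation of $H(e^{j\omega})$. One deliberate departure: it uses `math.atan2` rather than a plain arctangent so the phase lands in the correct quadrant. The example coefficients are hypothetical.

```python
import cmath
import math


def rect_parts(b, a, w):
    """Real/imaginary parts of the numerator and denominator at frequency w."""
    r_num = sum(bk * math.cos(k * w) for k, bk in enumerate(b))
    i_num = -sum(bk * math.sin(k * w) for k, bk in enumerate(b))
    r_den = 1 - sum(ak * math.cos(k * w) for k, ak in enumerate(a, start=1))
    i_den = sum(ak * math.sin(k * w) for k, ak in enumerate(a, start=1))
    return r_num, i_num, r_den, i_den


def mag_phase(b, a, w):
    """|H_IIR(w)| and phase (radians) from the rectangular parts."""
    r_num, i_num, r_den, i_den = rect_parts(b, a, w)
    mag = math.hypot(r_num, i_num) / math.hypot(r_den, i_den)
    # atan2 keeps track of the quadrant, which a plain arctan(I/R) would lose.
    phase = math.atan2(i_num, r_num) - math.atan2(i_den, r_den)
    return mag, phase


def h_direct(b, a, w):
    """Direct complex evaluation of H(z) at z = exp(jw), for cross-checking."""
    e = cmath.exp(-1j * w)
    num = sum(bk * e ** k for k, bk in enumerate(b))
    den = 1 - sum(ak * e ** k for k, ak in enumerate(a, start=1))
    return num / den


# Hypothetical first-order example: b(0) = 0.2, b(1) = 0.3, a(1) = 0.5.
b_example = [0.2, 0.3]
a_example = [0.5]
test_freqs = (0.0, 0.4, 1.3, 2.9, -1.1)
```

The phase from this sketch may differ from `cmath.phase` of the direct result by a multiple of 2π, which is why the check compares complex values rather than raw angles.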

To reiterate our intent here, we've gone through the above algebraic gymnastics to develop expressions for an IIR filter's frequency magnitude response $|H_{IIR}(\omega)|$ and phase response $\phi_{IIR}(\omega)$ in terms of the filter coefficients in Eqs. (eqn. 30) and (eqn. 32). Shortly, we'll use these expressions to analyze an actual IIR filter.

FIG. 19 Second-order low-pass IIR filter example.

Pausing a moment to gather our thoughts, we realize that we can use Eqs. (eqn. 34) and (eqn. 35) to compute the magnitude and phase response of IIR filters as a function of the frequency ω. And again, just what is ω? It's the normalized radian frequency represented by the angle around the unit circle in FIG. 13, having a range of −π to +π. In terms of a discrete sampling frequency $\omega_s$ measured in radians/s, from FIG. 14, we see that ω covers the range $-\omega_s/2$ to $+\omega_s/2$. In terms of our old friend $f_s$ Hz, Eqs. (eqn. 34) and (eqn. 35) apply over the equivalent frequency range of $-f_s/2$ to $+f_s/2$ Hz. So, for example, if digital data is arriving at the filter's input at a rate of $f_s$ = 1000 samples/s, we could use Eq. (eqn. 34) to plot the filter's frequency magnitude response over the frequency range of −500 Hz to +500 Hz.
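The conversion between the normalized angle ω and cyclic frequency follows from $\omega_s = 2\pi f_s$: an angle ω corresponds to $f = \omega f_s / 2\pi$ Hz. This small sketch (function names are illustrative) applies it to the $f_s$ = 1000 samples/s example and to the FIG. 19 cutoff of $\omega_s/10$, which is $f_s/10$ Hz, or ω = π/5.

```python
import math


def omega_to_hz(w, fs):
    """Normalized angle w (radians, -pi..pi) to cyclic frequency in Hz."""
    return w * fs / (2 * math.pi)


def hz_to_omega(f, fs):
    """Cyclic frequency f in Hz to normalized angle in radians."""
    return 2 * math.pi * f / fs


fs = 1000.0                                   # samples/s, as in the text
band_edges = (omega_to_hz(-math.pi, fs), omega_to_hz(math.pi, fs))
cutoff_w = hz_to_omega(fs / 10, fs)           # the FIG. 19 cutoff, ws/10
```

The band edges come out at −500 Hz and +500 Hz, matching the range stated above.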

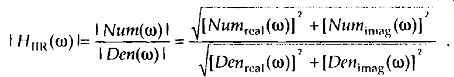

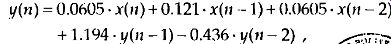

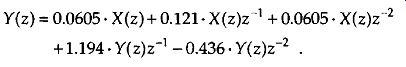

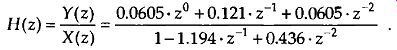

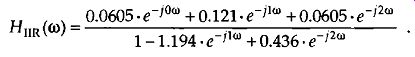

Although the equations describing the transfer function $H_{IIR}(\omega)$, its magnitude response $|H_{IIR}(\omega)|$, and phase response $\phi_{IIR}(\omega)$ look somewhat complicated at first glance, let's illustrate their simplicity and utility by analyzing the simple second-order low-pass IIR filter in FIG. 19 whose positive cutoff frequency is $\omega_s/10$. By inspection, we can write the filter's time-domain difference equation as

(eqn. 36)

or the z-domain expression as

(eqn. 37)

Using Eq. (eqn. 25), we write the z-domain transfer function H(z) as

(eqn. 38)

Replacing z with $e^{j\omega}$, we see that the frequency response of our example IIR filter is

(eqn. 39)

We're almost there. Remembering Euler's equations and that cos(0) = 1 and sin(0) = 0, we can write the rectangular form of $H_{IIR}(\omega)$ as

(eqn. 40)
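The example filter's magnitude response can be computed numerically. This is a sketch with one loud assumption: the feedback values a(1) = 1.194 and a(2) = −0.436 follow from the denominator $z^2 - 1.194z + 0.436$ given later in this section, but the feedforward values b(0) = 0.0605, b(1) = 0.121, b(2) = 0.0605 are assumed here for illustration only (they give exactly unity gain at DC with that denominator and a zero at z = −1). Substitute the actual FIG. 19 coefficients if they differ.

```python
import cmath
import math

# a(1), a(2) from the denominator z**2 - 1.194z + 0.436, in this text's
# convention H(z) = B(z) / (1 - a(1)z**-1 - a(2)z**-2).
A = [1.194, -0.436]
# ASSUMPTION: illustrative feedforward values, not read from FIG. 19.
B = [0.0605, 0.121, 0.0605]


def h_iir(w, b=B, a=A):
    """Frequency response H(z) evaluated at z = exp(jw)."""
    e = cmath.exp(-1j * w)
    num = sum(bk * e ** k for k, bk in enumerate(b))
    den = 1 - sum(ak * e ** k for k, ak in enumerate(a, start=1))
    return num / den


# Magnitude response over -pi <= w <= pi, the range plotted in FIG. 20(a).
grid = [-math.pi + 2 * math.pi * i / 200 for i in range(201)]
mags = [abs(h_iir(w)) for w in grid]
```

With these assumed b(k) values, the magnitude is one at ω = 0, falls to zero at ω = ±π, and is symmetric about ω = 0, as expected for real coefficients.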

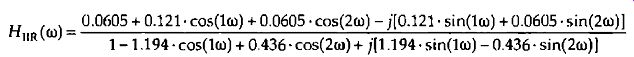

FIG. 20 Frequency responses of the example IIR filter (solid line) in FIG. 19 and a 5-tap FIR filter (dashed line): (a) magnitude responses; (b) phase responses.

Equation (eqn. 40) is what we're after here, and if we calculate its magnitude over the frequency range of −π ≤ ω ≤ π, we get the $|H_{IIR}(\omega)|$ shown as the solid curve in FIG. 20(a). For comparison purposes we also show a 5-tap low-pass FIR filter magnitude response in FIG. 20(a). Although both filters require the same computational workload, five multiplications per filter output sample, the low-pass IIR filter has the superior frequency magnitude response. Notice the steeper roll-off and lower sidelobes of the IIR filter relative to the FIR filter.

A word of warning here. It's easy to reverse some of the signs for the terms in the denominator of Eq. (eqn. 40), so be careful if you attempt these calculations at home. Some authors avoid this problem by showing the a(k) coefficients in FIG. 18 as negative values, so that the summation in the denominator of Eq. (eqn. 25) is always positive. Moreover, some commercial software IIR design routines provide a(k) coefficients whose signs must be reversed before they can be applied to the IIR structure in FIG. 18. (If, while using software routines to design or analyze IIR filters, your results are very strange or unexpected, the first thing to do is reverse the signs of the a(k) coefficients and see if that doesn't solve the problem.)
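One common source of this sign confusion is worth spelling out. SciPy's `scipy.signal.lfilter(b, a, x)` documents its difference equation as a[0]y[n] = b[0]x[n] + ... − a[1]y[n−1] − ..., so its feedback coefficients are subtracted, while this text adds a(k)y(n−k). The pure-Python sketch below (no SciPy import; helper names are illustrative, and the demo b values are hypothetical) converts between the two conventions and confirms both difference equations produce the same output.

```python
def to_scipy_style(a_text):
    """This text's a(1)..a(M) -> SciPy-style denominator [1, -a(1), ..., -a(M)]."""
    return [1.0] + [-ak for ak in a_text]


def from_scipy_style(a_scipy):
    """SciPy-style denominator -> this text's a(1)..a(M), normalizing a[0] to 1."""
    a0 = a_scipy[0]
    return [-ak / a0 for ak in a_scipy[1:]]


def filter_scipy_convention(b, a_scipy, x):
    """a[0]*y(n) = sum b[k]x(n-k) - sum_{k>=1} a[k]y(n-k) (feedback subtracted)."""
    y = []
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
        acc -= sum(a_scipy[k] * y[n - k] for k in range(1, len(a_scipy)) if n - k >= 0)
        y.append(acc / a_scipy[0])
    return y


def filter_text_convention(b, a_text, x):
    """y(n) = sum b(k)x(n-k) + sum a(k)y(n-k) (feedback added, as in FIG. 18)."""
    y = []
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
        acc += sum(a_text[k - 1] * y[n - k] for k in range(1, len(a_text) + 1) if n - k >= 0)
        y.append(acc)
    return y


impulse = [1.0] + [0.0] * 15
b_demo = [0.0605, 0.121, 0.0605]     # hypothetical feedforward values
a_text_demo = [1.194, -0.436]        # feedback values in this text's convention
y_text = filter_text_convention(b_demo, a_text_demo, impulse)
y_scipy = filter_scipy_convention(b_demo, to_scipy_style(a_text_demo), impulse)
```

Note that the SciPy-style vector [1, −1.194, 0.436] is exactly the coefficient list of the denominator polynomial $z^2 - 1.194z + 0.436$, which is why software tools prefer that convention.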

[To make this IIR and FIR filter comparison valid, the coefficients used for both filters were chosen so that each filter would approximate the ideal low-pass frequency response shown in Figure 5-17(a). ]

The solid curve in FIG. 20(b) is our IIR filter's $\phi_{IIR}(\omega)$ phase response. Notice its nonlinearity relative to the FIR filter's phase response. (Remember now, we're only interested in the filter phase responses over the low-pass filters' passband. So those phase discontinuities for the FIR filter are of no consequence.) Phase nonlinearity is inherent in IIR filters and, based on the ill effects of nonlinear phase introduced in the group delay discussion of Section 5.8, we must carefully consider its implications whenever we decide to use an IIR filter instead of an FIR filter in any given application. The question any filter designer must ask and answer is, "How much phase distortion can I tolerate to realize the benefits of the reduced computational load and high data rates afforded by IIR filters?"

To determine our IIR filter's stability, we must find the roots of the second-order polynomial in the denominator of Eq. (eqn. 38)'s H(z). Those roots are the poles of H(z), and if their magnitudes are less than one, the filter will be stable. To determine the two poles $z_{p1}$ and $z_{p2}$, first we multiply H(z) by $z^2/z^2$ to obtain polynomials with positive exponents. After doing so, H(z)'s denominator is

$$ \text{H(z) denominator:} \quad z^2 - 1.194z + 0.436. \qquad \text{(eqn. 41)} $$

Factoring Eq. (eqn. 41) using the quadratic formula from Eq. (eqn. 15), we obtain the factors

$$ \text{H(z) denominator:} \quad (z + z_{p1})(z + z_{p2}) = (z - 0.597 + j0.282)(z - 0.597 - j0.282). \qquad \text{(eqn. 42)} $$

So when z = = 0.597 -j0.282 or z = -z p2 = 0.597 +j0.282, the filter's H(z)

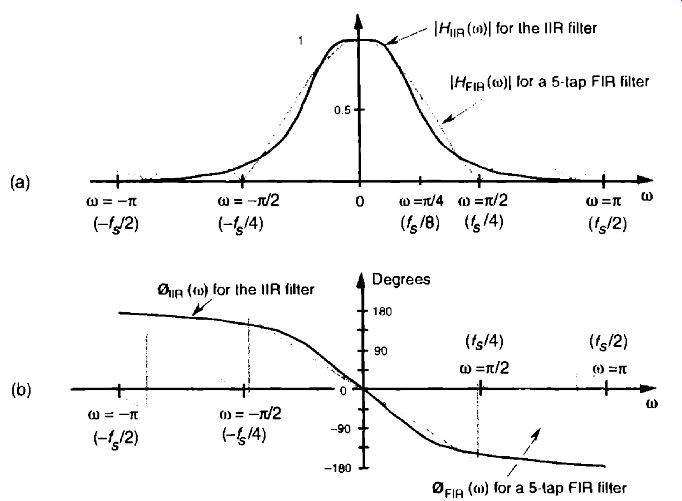

transfer function's denominator is zero and H(z) is infinite. We show the 0.597 +j0.282 and 0.597 -j0.282 pole locations, along with a crude depiction of the |H(z)| surface, in FIG. 21(a). Because those pole locations are inside the unit circle (their magnitudes are less than one), our example TIR filter is stable.
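The pole calculation is easy to reproduce. This sketch applies the quadratic formula to $z^2 - 1.194z + 0.436$ and checks that both poles lie inside the unit circle. For a conjugate pair, the product of the roots equals the constant term, so each pole's magnitude is $\sqrt{0.436} \approx 0.66$.

```python
import cmath


def quadratic_poles(c1, c0):
    """Roots of z**2 + c1*z + c0 = 0 via the quadratic formula."""
    disc = cmath.sqrt(c1 * c1 - 4 * c0)
    return (-c1 + disc) / 2, (-c1 - disc) / 2


# Denominator of the example filter: z**2 - 1.194z + 0.436.
p1, p2 = quadratic_poles(-1.194, 0.436)
radii = (abs(p1), abs(p2))
stable = all(r < 1 for r in radii)      # every pole strictly inside the unit circle
```

The computed poles round to 0.597 ± j0.282, matching the factors in the text.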

While we're considering the |H(z)| surface, we can show its intersection with the unit circle as the bold curve in FIG. 21(b). Because $z = re^{j\omega}$, with r restricted to unity, $z = e^{j\omega}$ and the bold curve is $|H(z)|_{|z|=1} = |H(\omega)|$, representing the low-pass filter's frequency magnitude response on the z-plane. The $|H(\omega)|$ curve corresponds to the $|H_{IIR}(\omega)|$ in FIG. 20(a).

FIG. 21 IIR filter z-domain: (a) pole locations; (b) frequency magnitude response.

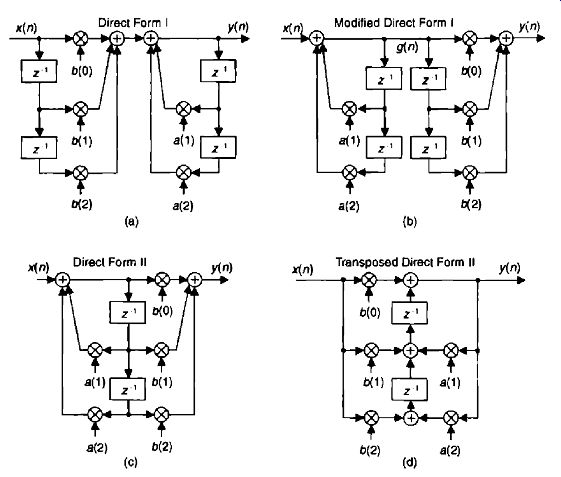

3.3 Alternate IIR Filter Structures

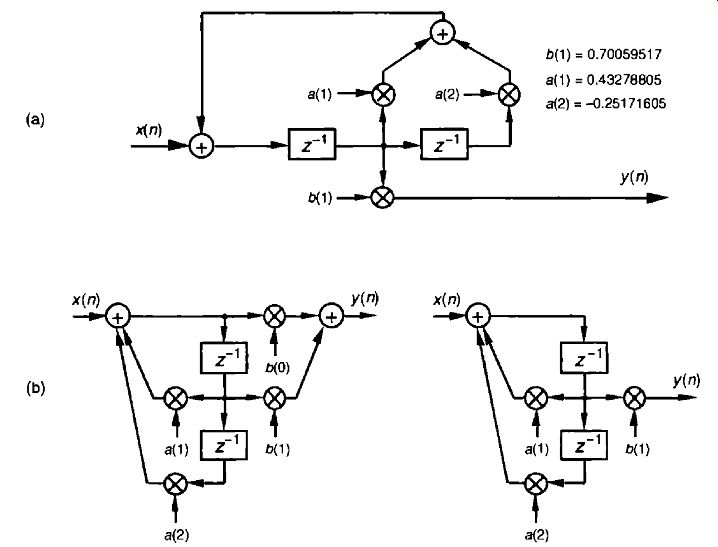

FIG. 22 Rearranged 2nd-order IIR filter structures: (a) Direct Form I; (b) modified Direct Form I; (c) Direct Form II; (d) transposed Direct Form II.

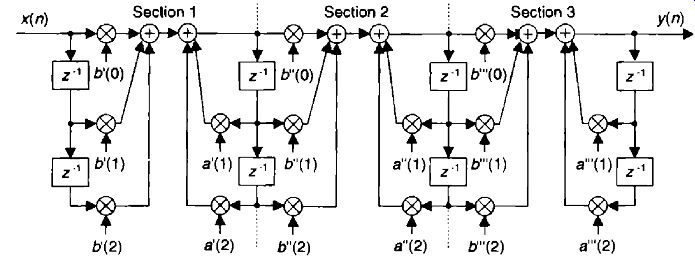

The Direct Form I structure of the IIR filter in FIG. 18 can be converted to alternate forms. It's easy to explore this idea by assuming that there is an equal number of feedforward and feedback stages, letting M = N = 2 as in FIG. 22(a), and thinking of the feedforward and feedback stages as two separate filters. Because both halves of the filter are linear and time-invariant, we can swap them, as shown in FIG. 22(b), with no change in the final y(n) output.

The two identical delay paths in FIG. 22(b) provide the motivation for this reorientation. Because the sequence g(n) is being shifted down along both delay paths in FIG. 22(b), we can eliminate one of the paths and arrive at the simplified Direct Form II filter structure shown in FIG. 22(c), where only half the delay storage registers are required compared to the Direct Form I structure.

Another popular IIR structure is the transposed form of the Direct Form II filter. We obtain a transposed form by starting with the Direct Form II filter, converting its signal nodes to adders, converting its adders to signal nodes, reversing the direction of its arrows, and swapping x(n) and y(n). (The transposition steps can also be applied to FIR filters.) Following these steps yields the transposed Direct Form II structure given in FIG. 22(d). The filters in FIG. 22 all have the same performance just so long as infinite-precision arithmetic is used. However, using quantized filter coefficients, and with truncation or rounding being used to combat binary overflow errors, the various filters exhibit different noise and stability characteristics. In fact, the transposed Direct Form II structure was developed because it has improved behavior over the Direct Form II structure when integer arithmetic is used. Common consensus among IIR filter designers is that the Direct Form I filter has the most resistance to coefficient quantization and stability problems.
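The claim that the FIG. 22 structures behave identically under exact arithmetic can be checked in floating point. This sketch implements the second-order Direct Form I, Direct Form II (one shared delay line holding g(n)), and transposed Direct Form II (two state registers) in this text's sign convention. The coefficients are hypothetical demo values: the a(k) match the example filter's denominator, and the b(k) are assumed.

```python
def df1(b, a, x):
    """Direct Form I, FIG. 22(a): separate input and output delay lines."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 + a[0] * y1 + a[1] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        out.append(yn)
    return out


def df2(b, a, x):
    """Direct Form II, FIG. 22(c): one shared delay line holding g(n)."""
    g1 = g2 = 0.0
    out = []
    for xn in x:
        g = xn + a[0] * g1 + a[1] * g2                    # feedback half first
        out.append(b[0] * g + b[1] * g1 + b[2] * g2)      # then the feedforward half
        g2, g1 = g1, g
    return out


def df2_transposed(b, a, x):
    """Transposed Direct Form II, FIG. 22(d): two state registers s1, s2."""
    s1 = s2 = 0.0
    out = []
    for xn in x:
        yn = b[0] * xn + s1
        s1 = b[1] * xn + a[0] * yn + s2                   # uses the previous s2
        s2 = b[2] * xn + a[1] * yn
        out.append(yn)
    return out


B_EX = [0.0605, 0.121, 0.0605]    # hypothetical feedforward values
A_EX = [1.194, -0.436]            # a(1), a(2), feedback added (this text's convention)
x_test = [1.0, 0.5, -0.3, 2.0, 0.0, -1.0, 0.7] + [0.0] * 20
outputs = [f(B_EX, A_EX, x_test) for f in (df1, df2, df2_transposed)]
```

In double-precision floating point the three outputs agree to rounding error; the structural differences the text describes only show up with quantized coefficients and integer arithmetic.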

We'll revisit these finite-precision arithmetic issues in Section 7.

By the way, because of the feedback nature of IIR filters, they're often referred to as recursive filters. Similarly, FIR filters are often called nonrecursive filters. A common misconception is that all recursive filters are IIR. This is not true; FIR filters can be designed with recursive structures. So, the terms recursive and nonrecursive should be applied to a filter's structure, and the terms IIR and FIR should be used to describe the duration of the filter's impulse response.
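A familiar example of a recursive FIR filter is the running-sum form of an N-tap moving average, $y(n) = y(n-1) + [x(n) - x(n-N)]/N$. It uses feedback, yet its impulse response is exactly N samples long. This sketch (function name illustrative) checks both the finite impulse response and agreement with the nonrecursive average.

```python
def moving_average_recursive(x, n_taps):
    """N-tap moving average via y(n) = y(n-1) + (x(n) - x(n-N)) / N.

    The structure is recursive (it feeds back y(n-1)), but the impulse
    response is finite: n_taps samples of 1/n_taps, then zeros.
    """
    y = []
    prev = 0.0
    for n, xn in enumerate(x):
        old = x[n - n_taps] if n >= n_taps else 0.0
        prev = prev + (xn - old) / n_taps
        y.append(prev)
    return y


impulse = [1.0] + [0.0] * 11
h = moving_average_recursive(impulse, 4)

# Compare against the nonrecursive (direct summation) 4-tap average.
x_demo = [2.0, -1.0, 3.0, 5.0, 0.5, -2.0, 4.0]
rec = moving_average_recursive(x_demo, 4)
direct = [sum(x_demo[max(0, n - 3):n + 1]) / 4 for n in range(len(x_demo))]
```

In floating point, rounding errors can slowly accumulate in the running sum; this sketch does not guard against that.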

[Due to its popularity, throughout the rest of this section we'll use the phrase analog filter to designate those hardware filters made up of resistors, capacitors, and (maybe) operational amplifiers. ]

Now that we have a feeling for the performance and implementation structures of IIR filters, let's briefly introduce three filter design techniques.

These IIR design methods go by the impressive names of impulse invariance, bilinear transform, and optimized methods. The first two methods use analytical (pencil and paper algebra) filter design techniques to approximate continuous analog filters. Because analog filter design methods are very well understood, designers can take advantage of an abundant variety of analog filter design techniques to design, say, a digital IIR Butterworth filter with its very flat passband response, or perhaps go with a Chebyshev filter with its fluctuating passband response and sharper passband-to-stopband cutoff characteristics. The optimized methods (by far the most popular way of designing IIR filters) comprise linear algebra algorithms available in commercial filter design software packages.

The impulse invariance and bilinear transform design methods are somewhat involved, so a true DSP beginner is justified in skipping those subjects upon first reading this guide. However, those filter design topics may well be valuable sometime in your future as your DSP knowledge, experience, and challenges grow.

FIG. 23 Impulse invariance design equivalence of (a) analog filter continuous impulse response; (b) digital filter discrete impulse response.

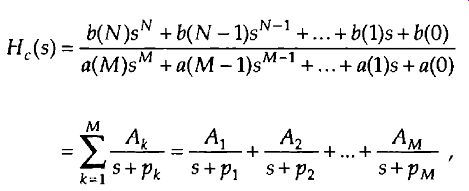

4. IMPULSE INVARIANCE IIR FILTER DESIGN METHOD

The impulse invariance method of IIR filter design is based upon the notion that we can design a discrete filter whose time-domain impulse response is a sampled version of the impulse response of a continuous analog filter. If that analog filter (often called the prototype filter) has some desired frequency response, then our IIR filter will yield a discrete approximation of that desired response. The impulse response equivalence of this design method is depicted in FIG. 23, where we use the conventional notation of δ to represent an impulse function and $h_c(t)$ is the analog filter's impulse response. We use the subscript "c" in FIG. 23(a) to emphasize the continuous nature of the analog filter. FIG. 23(b) illustrates the definition of the discrete filter's impulse response: the filter's time-domain output sequence when the input is a single unity-valued sample (impulse) preceded and followed by all zero-valued samples. Our goal is to design a digital filter whose impulse response is a sampled version of the analog filter's continuous impulse response. Implied in the correspondence of the continuous and discrete impulse responses is the property that we can map each pole on the s-plane for the analog filter's $H_c(s)$ transfer function to a pole on the z-plane for the discrete IIR filter's H(z) transfer function. What designers have found is that the impulse invariance method does yield useful IIR filters, as long as the sampling rate is high relative to the bandwidth of the signal to be filtered. In other words, IIR filters designed using the impulse invariance method are susceptible to aliasing problems because practical analog filters cannot be perfectly band-limited. Aliasing will occur in an IIR filter's frequency response as shown in FIG. 24.

FIG. 24 Aliasing in the impulse invariance design method: (a) prototype analog filter magnitude response; (b) replicated magnitude responses where $H_{IIR}(\omega)$ is the discrete Fourier transform of $h(n) = h_c(nt_s)$; (c) potential resultant IIR filter magnitude response with aliasing effects.

From what we've learned in Section 2 about the spectral replicating effects of sampling, if FIG. 24(a) is the spectrum of the continuous $h_c(t)$ impulse response, then the spectrum of the discrete $h_c(nt_s)$ sample sequence is the replicated spectra in FIG. 24(b). In FIG. 24(c) we show the possible effect of aliasing where the dashed curve is a desired $H_{IIR}(\omega)$ frequency magnitude response. However, the actual frequency magnitude response, indicated by the solid curve, can occur when we use the impulse invariance design method. For this reason, we prefer to make the sample frequency $f_s$ as large as possible to minimize the overlap between the primary frequency response curve and its replicated images spaced at multiples of $\pm f_s$ Hz. To see how aliasing can affect IIR filters designed with this method, let's list the necessary impulse invariance design steps and then go through a filter design example.
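The aliasing effect can be seen with the simplest possible prototype. This sketch assumes a hypothetical one-pole analog low-pass $H_c(s) = p/(s + p)$ with $p = 2\pi \cdot 100$ rad/s (unity DC gain). Sampling its impulse response $p e^{-pt}$ and scaling by $t_s$ gives $h(n) = t_s h_c(nt_s)$, whose z-transform is $t_s p / (1 - e^{-pt_s}z^{-1})$. Comparing magnitudes shows the digital response sitting above the analog one because of the overlapping spectral replicas.

```python
import cmath
import math


def analog_mag(f_hz, fc_hz):
    """|Hc(j*2*pi*f)| for the prototype Hc(s) = p/(s + p), p = 2*pi*fc."""
    p = 2 * math.pi * fc_hz
    return abs(p / (1j * 2 * math.pi * f_hz + p))


def digital_mag(f_hz, fc_hz, fs):
    """|H(z)| on the unit circle for the sampled design h(n) = ts*hc(n*ts),
    i.e. H(z) = ts*p / (1 - exp(-p*ts) z**-1)."""
    p = 2 * math.pi * fc_hz
    ts = 1.0 / fs
    z = cmath.exp(1j * 2 * math.pi * f_hz / fs)
    return abs(ts * p / (1 - math.exp(-p * ts) / z))
```

With $f_c$ = 100 Hz and $f_s$ = 1000 samples/s, the digital DC gain comes out near 1.35 instead of 1, and at 500 Hz the digital magnitude is roughly double the analog one. Raising $f_s$ to 10,000 samples/s brings the DC gain to within a few percent of 1, which is the text's argument for making $f_s$ as large as practical.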

[In a low-pass filter design, for example, the filter type (Chebyshev, Butterworth, elliptic), filter order (number of poles), and the cutoff frequency are parameters to be defined in this step. ]

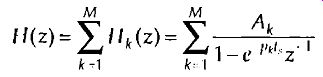

There are two different methods for designing IIR filters using impulse invariance. The first method, which we'll call Method 1, requires that an inverse Laplace transform as well as a z-transform be performed. The second impulse invariance design technique, Method 2, uses a direct substitution process to avoid the inverse Laplace and z-transformations at the expense of needing partial fraction expansion algebra necessary to handle polynomials. Both of these methods seem complicated when described in words, but they're really not as difficult as they sound. Let's compare the two methods by listing the steps required for each of them. The impulse invariance design Method 1 goes like this:

Method 1, Step 1: Design (or have someone design for you) a prototype analog filter with the desired frequency response. The result of this step is a continuous Laplace transfer function $H_c(s)$ expressed as the ratio of two polynomials, such as

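$$ H_c(s) = \frac{\sum_{k=0}^{N} b(k)\,s^k}{\sum_{k=0}^{M} a(k)\,s^k} = \frac{b(N)s^N + b(N-1)s^{N-1} + \cdots + b(1)s + b(0)}{a(M)s^M + a(M-1)s^{M-1} + \cdots + a(1)s + a(0)} \qquad \text{(eqn. 43)} $$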

which is the general form of Eq. (eqn. 10) with N < M, and a(k) and b(k) are constants.

Method 1, Step 2: Determine the analog filter's continuous time-domain impulse response $h_c(t)$ from the $H_c(s)$ Laplace transfer function. I hope this can be done using Laplace tables as opposed to actually evaluating an inverse Laplace transform equation.

Method 1, Step 3: Determine the digital filter's sampling frequency $f_s$, and calculate the sample period as $t_s = 1/f_s$. The $f_s$ sampling rate is chosen based on the absolute frequency, in Hz, of the prototype analog filter. Because of the aliasing problems associated with this impulse invariance design method, later, we'll see why $f_s$ should be made as large as practical.

Method 1, Step 4: Find the z-transform of the continuous $h_c(t)$ to obtain the IIR filter's z-domain transfer function H(z) in the form of a ratio of polynomials in z.

Method 1, Step 5: Substitute the value (not the variable) $t_s$ for the continuous variable t in the H(z) transfer function obtained in Step 4.

In performing this step, we are ensuring, as in FIG. 23, that the IIR filter's discrete h(n) impulse response is a sampled version of the continuous filter's $h_c(t)$ impulse response so that $h(n) = h_c(nt_s)$, for 0 ≤ n ≤ ∞.

Method 1, Step 6: Our H(z) from Step 5 will now be of the general form

$$ H(z) = \frac{\sum_{k=0}^{N} b(k)\,z^{-k}}{1 + \sum_{k=1}^{M} a(k)\,z^{-k}} \qquad \text{(eqn. 44)} $$

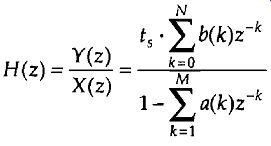

Because the process of sampling the continuous impulse response results in a digital filter frequency response that's scaled by a factor of $1/t_s$, many filter designers find it appropriate to include the $t_s$ factor in Eq. (eqn. 44). So we can rewrite Eq. (eqn. 44) as

$$ H(z) = \frac{t_s \sum_{k=0}^{N} b(k)\,z^{-k}}{1 + \sum_{k=1}^{M} a(k)\,z^{-k}} \qquad \text{(eqn. 45)} $$

Incorporating the value of $t_s$ in Eq. (eqn. 45), then, makes the IIR filter time-response scaling independent of the sampling rate, and the discrete filter will have the same gain as the prototype analog filter.

Method 1, Step 7: Because Eq. (eqn. 44) is in the form of Eq. (eqn. 25), by inspection, we can express the filter's time-domain difference equation in the general form of Eq. (eqn. 21) as

$$ y(n) = \sum_{k=0}^{N} b(k)\,x(n-k) - \sum_{k=1}^{M} a(k)\,y(n-k) \qquad \text{(eqn. 46)} $$

Choosing to incorporate $t_s$, as in Eq. (eqn. 45), to make the digital filter's gain equal to the prototype analog filter's gain by multiplying the b(k) coefficients by the sample period $t_s$ leads to an IIR filter time-domain expression of the form

$$ y(n) = t_s \sum_{k=0}^{N} b(k)\,x(n-k) - \sum_{k=1}^{M} a(k)\,y(n-k) \qquad \text{(eqn. 47)} $$

Notice how the signs changed for the a(k) coefficients from Eqs. (eqn. 44) and (eqn. 45) to Eqs. (eqn. 46) and (eqn. 47). These sign changes always seem to cause problems for beginners, so watch out. Also, keep in mind that the time-domain expressions in Eq. (eqn. 46) and Eq. (eqn. 47) apply only to the filter structure in FIG. 18. The a(k) and b(k), or $t_s \cdot b(k)$, coefficients, however, can be applied to the improved IIR structure shown in FIG. 22 to complete our design.

Before we go through an actual example of this design process, let's discuss the other impulse invariance design method.

[Some authors have chosen to include the $t_s$ factor in the discrete h(n) impulse response in the above Step 4, that is, make $h(n) = t_s h_c(nt_s)$. The final result of this, of course, is the same as that obtained by including $t_s$ as described in Step 5.]

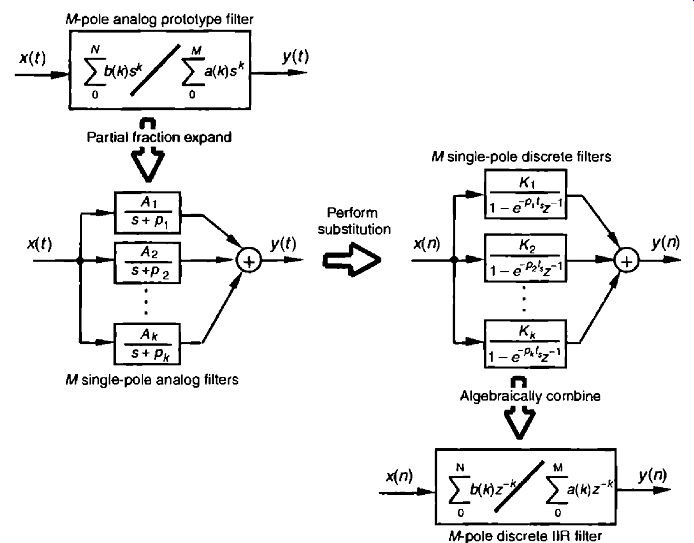

FIG. 25 Mathematical flow of the impulse invariance design Method 2.

The impulse invariance Design Method 2, also called the standard z-transform method, takes a different approach. It mathematically partitions the prototype analog filter into multiple single-pole continuous filters and then approximates each one of those by a single-pole digital filter. The set of M single-pole digital filters is then algebraically combined to form an M-pole, Mth-order IIR filter. This process of breaking the analog-to-discrete filter approximation into manageable pieces is shown in FIG. 25. The steps necessary to perform an impulse invariance Method 2 design are:

Method 2, Step 1: Obtain the Laplace transfer function $H_c(s)$ for the prototype analog filter in the form of Eq. (eqn. 43). (Same as Method 1, Step 1.)

Method 2, Step 2: Select an appropriate sampling frequency $f_s$, and calculate the sample period as $t_s = 1/f_s$. (Same as Method 1, Step 3.)

Method 2, Step 3: Express the analog filter's Laplace transfer function $H_c(s)$ as the sum of single-pole filters. This requires us to use partial fraction expansion methods to express the ratio of polynomials in Eq. (eqn. 43) in the form of

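$$ H_c(s) = \sum_{k=1}^{M} \frac{A_k}{s + p_k} = \frac{A_1}{s + p_1} + \frac{A_2}{s + p_2} + \cdots + \frac{A_M}{s + p_M} \qquad \text{(eqn. 48)} $$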

where the individual $A_k$ factors are constants and the kth pole is located at $-p_k$ on the s-plane. We'll denote the kth single-pole analog filter as $H_k(s)$, or

$$H_k(s) = \frac{A_k}{s + p_k} \tag{eqn. 49}$$

Method 2, Step 4: Substitute 1 - e^(-p_k t_s) z^(-1) for s + p_k in Eq. (eqn. 48). This mapping of each H_k(s) pole, located at s = -p_k on the s-plane, to the z = e^(-p_k t_s) location on the z-plane is how we approximate the impulse response of each single-pole analog filter by a single-pole digital filter. (The reader can find the derivation of this 1 - e^(-p_k t_s) z^(-1) substitution, illustrated in our FIG. 25, in references [14] through [16].) So, the kth analog single-pole filter H_k(s) is approximated by a single-pole digital filter whose z-domain transfer function is

$$H_k(z) = \frac{A_k}{1 - e^{-p_k t_s} z^{-1}} \tag{eqn. 50}$$

The final combined discrete filter transfer function H(z) is the sum of the single-pole discrete filters, or

$$H(z) = \sum_{k=1}^{M} \frac{A_k}{1 - e^{-p_k t_s} z^{-1}} \tag{eqn. 51}$$

Keep in mind that the above H(z) is not a function of time. The t_s factor in Eq. (eqn. 51) is a constant equal to the discrete-time sample period.

Method 2, Step 5: Calculate the z-domain transfer function of the sum of the M single-pole digital filters in the form of a ratio of two polynomials in z. Because the H(z) in Eq. (eqn. 51) will be a series of fractions, we'll have to combine those fractions over a common denominator to get a single ratio of polynomials in the familiar form of

$$H(z) = \frac{\sum_{k=0}^{N} b(k)\,z^{-k}}{1 - \sum_{k=1}^{M} a(k)\,z^{-k}} \tag{eqn. 52}$$
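Steps 4 and 5 are mechanical enough to code, which is part of Method 2's appeal. The following is a minimal Python sketch under stated assumptions: the residues A_k and pole constants p_k of Eq. (eqn. 48) are already known (Step 3 done by hand or by another tool), and the helper names `poly_mul` and `method2_coefficients` are hypothetical. It applies Step 4's mapping to each pole, multiplies out the common denominator of Step 5, and returns b(k) and a(k) in the sign convention of Eq. (eqn. 52).

```python
import cmath


def poly_mul(p, q):
    """Multiply two polynomials in z^-1 (index k holds the z^-k coefficient)."""
    out = [0j] * (len(p) + len(q) - 1)
    for i, pc in enumerate(p):
        for j, qc in enumerate(q):
            out[i + j] += pc * qc
    return out


def method2_coefficients(residues, poles, ts):
    """Hypothetical helper: impulse invariance Method 2, Steps 4 and 5.

    residues -- the A_k constants of Eq. (eqn. 48)
    poles    -- the p_k constants, so the kth s-plane pole is at s = -p_k
    ts       -- sample period in seconds
    Returns (b, a) for H(z) = sum b[k] z^-k / (1 - sum a[k] z^-k); a[0] is unused.
    """
    # Step 4: A_k / (s + p_k)  ->  A_k / (1 - e^(-p_k ts) z^-1)
    zpoles = [cmath.exp(-p * ts) for p in poles]

    # Step 5: the common denominator is the product of all (1 - q_k z^-1) factors.
    den = [1 + 0j]
    for q in zpoles:
        den = poly_mul(den, [1, -q])

    # Each numerator term is A_k times the product of the *other* factors.
    num = [0j] * len(zpoles)
    for k, A in enumerate(residues):
        term = [complex(A)]
        for j, q in enumerate(zpoles):
            if j != k:
                term = poly_mul(term, [1, -q])
        for i, coef in enumerate(term):
            num[i] += coef

    # Conjugate pole pairs make the imaginary parts cancel, so keep the real parts.
    b = [coef.real for coef in num]
    a = [0.0] + [-coef.real for coef in den[1:]]  # sign flip: 1 - sum a(k) z^-k
    return b, a


# Single real pole: A_1 = 1, p_1 = 2, t_s = 0.1
print(method2_coefficients([1.0], [2.0], 0.1))
```

For that single real pole the call returns b = [1.0] and a = [0.0, e^(-0.2)] ≈ [0.0, 0.8187]. Keeping only the real parts assumes complex poles arrive in conjugate pairs, which holds for any prototype with real coefficients.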

Method 2, Step 6: Just as in Method 1 Step 6, by inspection, we can express the filter's time-domain equation in the general form of

$$y(n) = \sum_{k=0}^{N} b(k)\,x(n-k) + \sum_{k=1}^{M} a(k)\,y(n-k) \tag{eqn. 53}$$

Again, notice the a(k) coefficient sign changes from Eq. (eqn. 52) to Eq. (eqn. 53). As described in Method 1 Steps 6 and 7, if we choose to make the digital filter's gain equal to the prototype analog filter's gain by multiplying the b(k) coefficients by the sample period t_s, then the IIR filter's time-domain expression will be in the form

$$y(n) = t_s \sum_{k=0}^{N} b(k)\,x(n-k) + \sum_{k=1}^{M} a(k)\,y(n-k) \tag{eqn. 54}$$

yielding a final H(z) z-domain transfer function of

$$H(z) = \frac{t_s \sum_{k=0}^{N} b(k)\,z^{-k}}{1 - \sum_{k=1}^{M} a(k)\,z^{-k}} \tag{eqn. 54'}$$
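The time-domain expressions of Eqs. (eqn. 53) and (eqn. 54) can be evaluated directly, sample by sample. Here is a minimal Python sketch. The function name `iir_filter` and its `gain` parameter are hypothetical, and the loop is a plain evaluation of the difference equation rather than a model of the FIG. 22 block diagram.

```python
def iir_filter(b, a, x, gain=1.0):
    """Hypothetical helper: y(n) = gain * sum_k b(k) x(n-k) + sum_k a(k) y(n-k).

    Follows the sign convention of Eqs. (eqn. 53) and (eqn. 54): feedback
    terms are *added*. a[0] is ignored so that a[k] lines up with a(k).
    Pass gain=t_s for the Eq. (eqn. 54) form. Samples before n = 0 are zero.
    """
    y = []
    for n in range(len(x)):
        feedforward = sum(bk * x[n - k] for k, bk in enumerate(b) if n - k >= 0)
        feedback = sum(a[k] * y[n - k] for k in range(1, len(a)) if n - k >= 0)
        y.append(gain * feedforward + feedback)
    return y


# One-pole example, y(n) = x(n) + 0.5 y(n-1), driven by a unit impulse:
print(iir_filter([1.0], [0.0, 0.5], [1.0, 0.0, 0.0, 0.0]))
# -> [1.0, 0.5, 0.25, 0.125]
```

The a(k) terms are added, not subtracted. In code form, that is the sign change between Eq. (eqn. 52) and Eq. (eqn. 53).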

Finally, we can implement the improved IIR structure shown in FIG. 22 using the a(k) and b(k) coefficients from Eq. (eqn. 53) or the a(k) and t_s·b(k) coefficients from Eq. (eqn. 54). To provide a more meaningful comparison between the two impulse invariance design methods, let's dive in and go through an IIR filter design example using both methods.

4.1 Impulse Invariance Design Method 1 Example

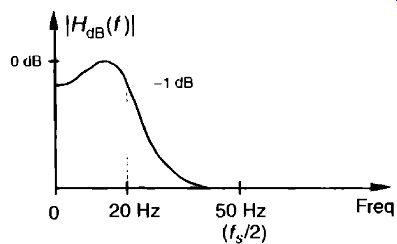

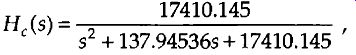

Assume that we need to design an IIR filter that approximates a second-order Chebyshev prototype analog low-pass filter whose passband ripple is 1 dB. Our f_s sampling rate is 100 Hz (t_s = 0.01 s), and the filter's 1 dB cutoff frequency is 20 Hz. Our prototype analog filter will have a frequency magnitude response like that shown in FIG. 26.

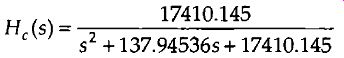

Given the above filter requirements, assume that the analog prototype filter design effort results in the H_c(s) Laplace transfer function of

$$H_c(s) = \frac{17410.145}{s^2 + 137.94536\,s + 17410.145} \tag{eqn. 55}$$

It's the transfer function in Eq. (eqn. 55) that we intend to approximate with our discrete IIR filter. To find the analog filter's impulse response, we'd like to get H_c(s) into a form that allows us to use Laplace transform tables to find h(t).

FIG. 26 Frequency magnitude response of the example prototype analog filter.

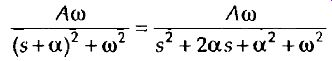

Searching through systems analysis textbooks we find the following Laplace transform pair:

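$$\frac{A\omega}{(s + \alpha)^2 + \omega^2} \;\longleftrightarrow\; A e^{-\alpha t}\sin(\omega t) \tag{eqn. 56}$$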

Our intent, then, is to modify Eq. (eqn. 55) to get it into the form on the left side of Eq. (eqn. 56). We do this by realizing that the Laplace transform expression in Eq. (eqn. 56) can be rewritten as

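$$\frac{A\omega}{(s + \alpha)^2 + \omega^2} = \frac{A\omega}{s^2 + 2\alpha s + \alpha^2 + \omega^2} \tag{eqn. 57}$$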

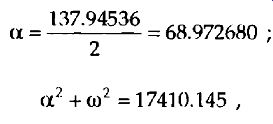

If we set Eq. (eqn. 55) equal to the right side of Eq. (eqn. 57), we can solve for A, α, and ω. Doing that,

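$$2\alpha = 137.94536 \;\Rightarrow\; \alpha = 68.97268 \tag{eqn. 59}$$

$$\alpha^2 + \omega^2 = 17410.145 \;\Rightarrow\; \omega = \sqrt{17410.145 - \alpha^2} = 112.48517 \tag{eqn. 60}$$

$$A\omega = 17410.145 \;\Rightarrow\; A = \frac{17410.145}{\omega} \approx 154.777 \tag{eqn. 61}$$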
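The three matching conditions can be checked numerically. The Python sketch below assumes only the constants of Eq. (eqn. 55); the names `alpha`, `omega`, `A`, and `h_c` are illustrative. It also samples h_c(t) = A e^(-αt) sin(ωt) at t = n·t_s and scales by t_s, which is the impulse invariance step Method 1 performs.

```python
import math

# Constants of Eq. (eqn. 55): 17410.145 / (s^2 + 137.94536 s + 17410.145)
NUMERATOR = 17410.145
S_COEF = 137.94536      # coefficient of s in the denominator
CONST = 17410.145       # constant term of the denominator

# Match with A*omega / (s^2 + 2*alpha*s + alpha^2 + omega^2), Eq. (eqn. 57)
alpha = S_COEF / 2                      # 2*alpha = 137.94536
omega = math.sqrt(CONST - alpha ** 2)   # alpha^2 + omega^2 = 17410.145
A = NUMERATOR / omega                   # A*omega = 17410.145


def h_c(t):
    """Prototype impulse response A e^(-alpha t) sin(omega t), from Eq. (eqn. 56)."""
    return A * math.exp(-alpha * t) * math.sin(omega * t)


ts = 0.01
# Impulse invariance: sample h_c(t) at t = n*ts and scale by ts.
h = [ts * h_c(n * ts) for n in range(6)]

print(f"alpha = {alpha:.5f}, omega = {omega:.5f}, A = {A:.3f}")
print([round(v, 4) for v in h])
```

By construction these samples are the impulse response of the Method 1 filter. Because h_c(0) = 0, the first nonzero sample, t_s·h_c(t_s) ≈ 0.7006, equals the filter's scaled x(n-1) coefficient.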

[...]

OK, hang in there; we're almost finished. Here are the final steps of Method 1.

Because the transfer function H(z) = Y(z)/X(z), we can cross-multiply the denominators to rewrite the bottom line of Eq. (eqn. 67) as

By inspection of Eq. (eqn. 68), we can now get the time-domain expression for our IIR filter. Performing Method 1, Steps 6 and 7, we multiply the x(n-1) coefficient by the sample period value of t_s = 0.01 to allow for proper scaling as … and there we (finally) are. The coefficients from Eq. (eqn. 69) are what we use in implementing the improved IIR structure shown in FIG. 22 to approximate the original second-order Chebyshev analog low-pass filter.

Let's see if we get the same result if we use the impulse invariance design Method 2 to approximate the example prototype analog filter.

4.2 Impulse Invariance Design Method 2 Example

Given the original prototype filter's Laplace transfer function as

$$H_c(s) = \frac{17410.145}{s^2 + 137.94536\,s + 17410.145} \tag{eqn. 70}$$

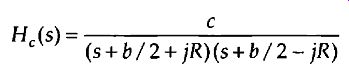

and the value of t_s = 0.01 for the sample period, we're ready to proceed with Method 2's Step 3. To express H_c(s) as the sum of single-pole filters, we'll have to factor the denominator of Eq. (eqn. 70) and use partial fraction expansion methods. For convenience, let's start by replacing the constants in Eq. (eqn. 70) with variables in the form of

$$H_c(s) = \frac{c}{s^2 + bs + c} \tag{eqn. 71}$$

where b = 137.94536 and c = 17410.145. Next, using Eq. (eqn. 15) with a = 1, we can factor the quadratic denominator of Eq. (eqn. 71) into

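$$H_c(s) = \frac{c}{\left(s + \frac{b}{2} + \frac{\sqrt{b^2 - 4c}}{2}\right)\left(s + \frac{b}{2} - \frac{\sqrt{b^2 - 4c}}{2}\right)} \tag{eqn. 72}$$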

If we substitute the values for b and c in Eq. (eqn. 72), we'll find that the quantity under the radical sign is negative. This means that the factors in the denominator of Eq. (eqn. 72) are complex. Because we have lots of algebra ahead of us, let's replace the radicals in Eq. (eqn. 72) with the imaginary term jR, where j = √-1 and R = √(|b² - 4c|/4), such that

$$H_c(s) = \frac{c}{\left(s + \frac{b}{2} + jR\right)\left(s + \frac{b}{2} - jR\right)} \tag{eqn. 73}$$

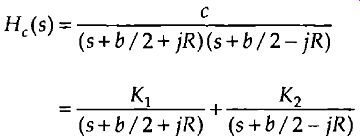

OK, partial fraction expansion methods allow us to partition Eq. (eqn. 73) into two separate fractions of the form

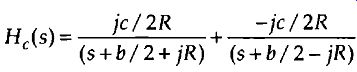

$$H_c(s) = \frac{K_1}{s + \frac{b}{2} + jR} + \frac{K_2}{s + \frac{b}{2} - jR} \tag{eqn. 74}$$

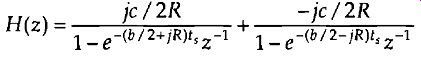

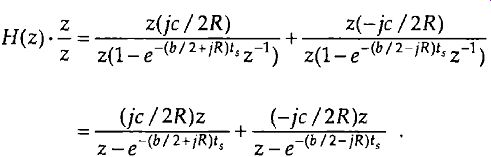

where the K1 constant can be found to be equal to jc/2R and constant K2 is the complex conjugate of K1, or K2 = -jc/2R. (To learn the details of partial fraction expansion methods, the interested reader should investigate standard college algebra or engineering mathematics textbooks.) Thus, H_c(s) can be written in the form of Eq. (eqn. 48), or

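$$H_c(s) = \frac{jc}{2R}\cdot\frac{1}{s + \frac{b}{2} + jR} - \frac{jc}{2R}\cdot\frac{1}{s + \frac{b}{2} - jR} \tag{eqn. 75}$$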
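A quick numerical check confirms the partial fraction expansion. This Python sketch computes R, K1 = jc/2R, and K2, then compares Eq. (eqn. 71) with the two-term form of Eq. (eqn. 75) at a few arbitrary test points. The variable and function names are illustrative.

```python
import math

b = 137.94536
c = 17410.145

disc = b * b - 4 * c            # negative here, so the poles are a complex-conjugate pair
R = math.sqrt(abs(disc) / 4)    # R = sqrt(|b^2 - 4c| / 4)
K1 = 1j * c / (2 * R)           # K1 = jc/2R
K2 = K1.conjugate()             # K2 = -jc/2R


def hc_direct(s):
    """Eq. (eqn. 71): c / (s^2 + b s + c)."""
    return c / (s * s + b * s + c)


def hc_partial(s):
    """Eq. (eqn. 75): the same function written as two single-pole terms."""
    return K1 / (s + b / 2 + 1j * R) + K2 / (s + b / 2 - 1j * R)


# Compare both forms at DC, at s = j*2*pi*20 (20 Hz), and at an arbitrary point.
for s in (0j, 2j * math.pi * 20.0, -30.0 + 50.0j):
    print(s, abs(hc_direct(s) - hc_partial(s)))
```

All the printed differences should sit at floating-point rounding level. The check also makes the algebra visible. K1 + K2 = 0 removes the s term from the combined numerator, and jR(K2 - K1) = c leaves just the constant c.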

We can see from Eq. (eqn. 75) that our second-order prototype filter has two poles, one located at p1 = -b/2 - jR and the other at p2 = -b/2 + jR. We're now ready to map those two poles from the s-plane to the z-plane as called out in Method 2, Step 4. Making our 1 - e^(-p_k t_s) z^(-1) substitution for the s + p_k terms in Eq. (eqn. 75), we have the following expression for the z-domain single-pole digital filters,

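$$H(z) = \frac{jc}{2R}\cdot\frac{1}{1 - e^{-(b/2 + jR)t_s}z^{-1}} - \frac{jc}{2R}\cdot\frac{1}{1 - e^{-(b/2 - jR)t_s}z^{-1}} \tag{eqn. 76}$$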

Our objective in Method 2, Step 5 is to massage Eq. (eqn. 76) into the form of Eq. (eqn. 52), so that we can determine the IIR filter's feedforward and feedback coefficients. Putting both fractions in Eq. (eqn. 76) over a common denominator gives us

Collecting like terms in the numerator and multiplying out the denominator gives us

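$$H(z) = \frac{\frac{jc}{2R}\left[e^{-(b/2 + jR)t_s} - e^{-(b/2 - jR)t_s}\right]z^{-1}}{1 - e^{-(b/2 - jR)t_s}z^{-1} - e^{-(b/2 + jR)t_s}z^{-1} + e^{-(b/2 + jR)t_s}e^{-(b/2 - jR)t_s}z^{-2}} \tag{eqn. 78}$$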

Factoring the exponentials and collecting like terms of powers of z in Eq. (eqn. 78),

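$$H(z) = \frac{\frac{jc}{2R}\left[e^{-bt_s/2}e^{-jRt_s} - e^{-bt_s/2}e^{jRt_s}\right]z^{-1}}{1 - \left[e^{-bt_s/2}e^{jRt_s} + e^{-bt_s/2}e^{-jRt_s}\right]z^{-1} + e^{-bt_s}z^{-2}} \tag{eqn. 79}$$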

Continuing to simplify our H(z) expression by factoring out the real part of the exponentials,

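$$H(z) = \frac{\frac{jc}{2R}\,e^{-bt_s/2}\left[e^{-jRt_s} - e^{jRt_s}\right]z^{-1}}{1 - e^{-bt_s/2}\left[e^{jRt_s} + e^{-jRt_s}\right]z^{-1} + e^{-bt_s}z^{-2}} \tag{eqn. 80}$$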

We now have H(z) in a form with all the like powers of z combined into single terms, and Eq. (eqn. 80) looks something like the desired form of Eq. (eqn. 52). Knowing that the final coefficients of our IIR filter must be real numbers, the question is "What do we do with those imaginary j terms in Eq. (eqn. 80)?" Once again, Euler to the rescue. Using Euler's equations for sinusoids, we can eliminate the imaginary exponentials, and Eq. (eqn. 80) becomes ...

If we plug the values c = 17410.145, b = 137.94536, R = 112.48517, and t_s = 0.01 into Eq. (eqn. 81), we get the following IIR filter transfer function:

Because the transfer function H(z) = Y(z)/X(z), we can again cross-multiply the denominators to rewrite Eq. (eqn. 82) as

(eqn. 83)

Now we take the inverse z-transform of Eq. (eqn. 83), by inspection, to get the time-domain expression for our IIR filter as

One final step remains. To force the IIR filter gain equal to the prototype analog filter's gain, we multiply the x(n-1) coefficient by the sample period t_s as suggested in Method 2, Step 6. In this case, there's only one x(n) coefficient, giving us

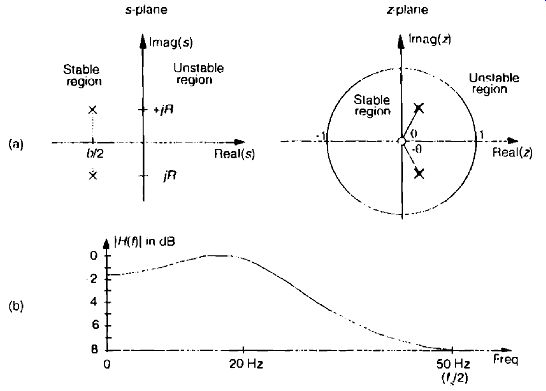

(eqn. 85)

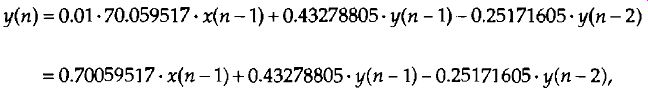

that compares well with the Method 1 result in Eq. (eqn. 69). (Isn't it comforting to work a problem two different ways and get the same result?) FIG. 27 shows, in graphical form, the result of our IIR design example. The s-plane pole locations of the prototype filter and the z-plane poles of the IIR filter are shown in FIG. 27(a). Because the s-plane poles are in the left half of the s-plane and the z-plane poles are inside the unit circle, both the prototype analog and the discrete IIR filters are stable. We find the prototype filter's s-plane pole locations by evaluating H_c(s) in Eq. (eqn. 75). When s = -b/2 - jR, the denominator of the first term in Eq. (eqn. 75) becomes zero and H_c(s) is infinitely large. That s = -b/2 - jR value is the location of the lower s-plane pole in FIG. 27(a). When s = -b/2 + jR, the denominator of the second term in Eq. (eqn. 75) becomes zero, and s = -b/2 + jR is the location of the second s-plane pole.
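The Method 2 coefficients can also be produced numerically straight from Eq. (eqn. 76), with complex arithmetic standing in for Euler's identities. Here is a minimal Python sketch using the example's b, c, R, and t_s values. The variable names are illustrative.

```python
import cmath

b, c, R, ts = 137.94536, 17410.145, 112.48517, 0.01

K1 = 1j * c / (2 * R)      # residue of the s = -b/2 - jR pole
K2 = -1j * c / (2 * R)     # residue of the s = -b/2 + jR pole
q1 = cmath.exp(-(b / 2 + 1j * R) * ts)   # Step 4 image of the s = -b/2 - jR pole
q2 = cmath.exp(-(b / 2 - 1j * R) * ts)   # Step 4 image of the s = -b/2 + jR pole

# Common denominator of Eq. (eqn. 76), multiplied out in powers of z^-1:
#   numerator   = (K1 + K2) - (K1*q2 + K2*q1) z^-1
#   denominator = 1 - (q1 + q2) z^-1 + q1*q2 z^-2
num0 = K1 + K2
num1 = -(K1 * q2 + K2 * q1)
a1 = q1 + q2          # y(n-1) coefficient, already in the Eq. (eqn. 53) sign convention
a2 = -(q1 * q2)       # y(n-2) coefficient

# The imaginary parts cancel because the poles are a conjugate pair.
for name, value in (("b(0)", num0), ("b(1)", num1), ("a(1)", a1), ("a(2)", a2)):
    print(name, value.real, "imag part:", value.imag)

# Apply the t_s scaling to the feedforward coefficient, as in Method 2, Step 6.
print(f"y(n) = {ts * num1.real:.6f} x(n-1) + {a1.real:.6f} y(n-1) + ({a2.real:.6f}) y(n-2)")
```

The printed equation should come out at about y(n) = 0.7006 x(n-1) + 0.4328 y(n-1) - 0.2517 y(n-2), with b(0) = 0 and imaginary parts at rounding level. That makes it an easy cross-check against the hand-derived coefficients.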

The IIR filter's z-plane pole locations are found from Eq. (eqn. 76). If we multiply the numerators and denominators of Eq. (eqn. 76) by z,

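$$H(z) = \frac{jc}{2R}\cdot\frac{z}{z - e^{-(b/2 + jR)t_s}} - \frac{jc}{2R}\cdot\frac{z}{z - e^{-(b/2 - jR)t_s}} \tag{eqn. 86}$$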

FIG. 27 Impulse invariance design example filter characteristics: (a) s-plane pole locations of prototype analog filter and z-plane pole locations of discrete IIR filter; (b) frequency magnitude response of the discrete IIR filter.

In Eq. (eqn. 86), when z is set equal to e^(-(b/2 + jR)t_s), the denominator of the first term in Eq. (eqn. 86) becomes zero and H(z) becomes infinitely large. The value of z of

$$z = e^{-(b/2 + jR)t_s} = e^{-bt_s/2}\,e^{-jRt_s} \tag{eqn. 87}$$

defines the location of the lower z-plane pole in FIG. 27(a). Specifically, this lower pole is located at a distance of e^(-b t_s/2) = 0.5017 from the origin, at an angle of θ = -R t_s radians, or -64.45°. Being conjugate poles, the upper z-plane pole is located the same distance from the origin at an angle of θ = R t_s radians, or +64.45°. FIG. 27(b) illustrates the frequency magnitude response of the IIR filter in Hz.
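The pole geometry of Eq. (eqn. 87) is easy to confirm numerically. Here is a Python sketch using the example's values. The variable names are illustrative.

```python
import cmath
import math

b, R, ts = 137.94536, 112.48517, 0.01

z_lower = cmath.exp(-(b / 2 + 1j * R) * ts)     # Eq. (eqn. 87): e^{-(b/2 + jR) t_s}
z_upper = z_lower.conjugate()                   # its conjugate partner
radius = abs(z_lower)                           # equals e^{-b t_s / 2}
angle_deg = math.degrees(cmath.phase(z_lower))  # equals -R t_s, converted to degrees

print(f"|z| = {radius:.4f}, angle = {angle_deg:.2f} deg")  # |z| = 0.5017, angle = -64.45 deg
print("inside unit circle (stable):", radius < 1 and abs(z_upper) < 1)
```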

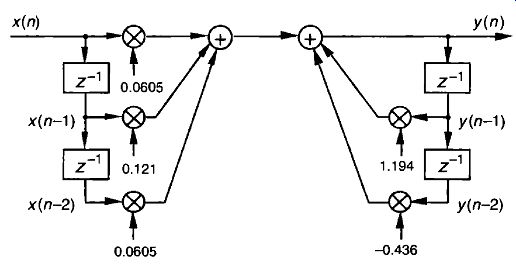

Two different implementations of our IIR filter are shown in FIG. 28.

FIG. 28(a) is an implementation of our second-order IIR filter based on the general IIR structure given in FIG. 22, and FIG. 28(b) shows the second-order IIR filter implementation based on the alternate structure from FIG. 21(b). Knowing that the b(0) coefficient on the left side of FIG. 28(b) is zero, we arrive at the simplified structure on the right side of FIG. 28(b). Looking carefully at FIG. 28(a) and the right side of FIG. 28(b), we can see that they are equivalent.

FIG. 28 Implementations of the impulse invariance design example filter.

Although both impulse invariance design methods are covered in the literature, we might ask, "Which one is preferred?" There's no definite answer to that question because it depends on the H_c(s) of the prototype analog filter.

Although our Method 2 example above required more algebra than Method 1, if the prototype filter's s-domain poles were located only on the real axis, Method 2 would have been much simpler because there would be no complex variables to manipulate. In general, Method 2 is more popular for two reasons: (1) the inverse Laplace and z-transformations, although straightforward in our Method 1 example, can be very difficult for higher order filters, and (2) unlike Method 1, Method 2 can be coded in a software routine or a computer spreadsheet.

[A piece of advice: whenever you encounter any frequency representation (be it a digital filter magnitude response or a signal spectrum) that has nonzero values at +f_s/2, be suspicious, be very suspicious, that aliasing is taking place.]

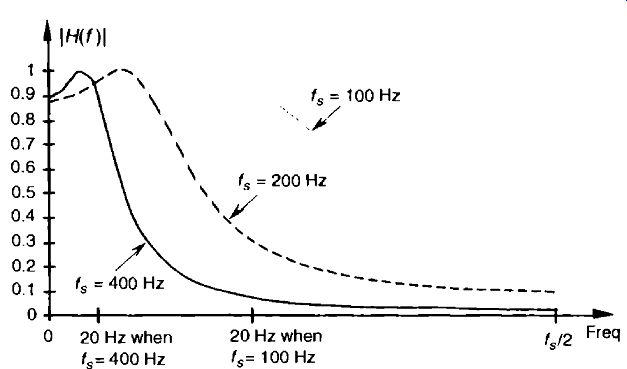

FIG. 29 IIR filter frequency magnitude response, on a linear scale, at three separate sampling rates. Notice how the filter's absolute cutoff frequency of 20 Hz shifts relative to the different f_s sampling rates.

Upon examining the frequency magnitude response in FIG. 27(b), we can see that this second-order IIR filter's roll-off is not particularly steep.

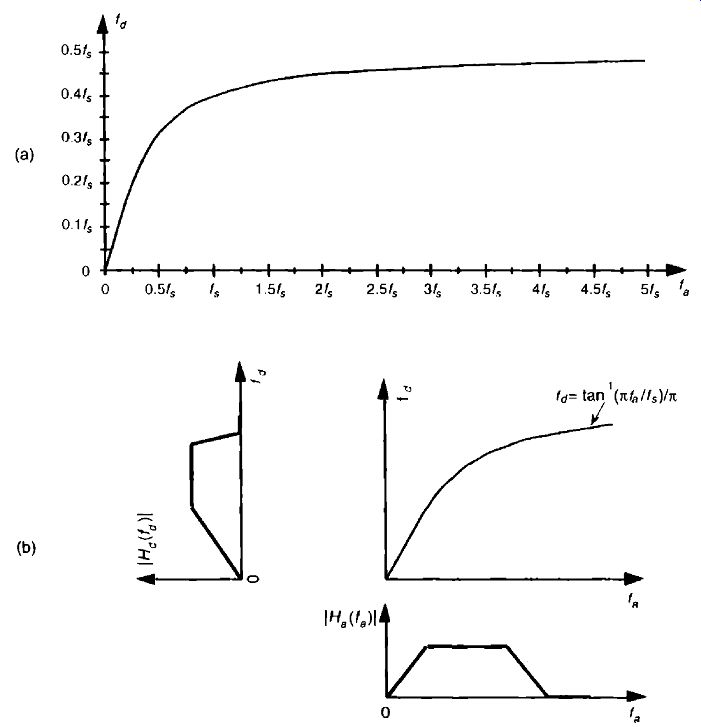

This is, admittedly, a simple low-order filter, but its attenuation slope is so gradual that it doesn't appear to be of much use as a low-pass filter. We can also see that the filter's passband ripple is greater than the desired value of 1 dB in FIG. 26. What we'll find is that it's not the low order of the filter that contributes to its poor performance, but the sampling rate used. That second-order IIR filter response is repeated as the shaded curve in FIG. 29. If we increased the sampling rate to 200 Hz, we'd get the frequency response shown by the dashed curve in FIG. 29. Increasing the sampling rate to 400 Hz results in the much improved frequency response indicated by the solid line in the figure. Sampling rate changes do not affect our filter order or implementation structure. Remember, if we change the sampling rate, only the sample period t_s changes in our design equations, resulting in a different set of filter coefficients for each new sampling rate. So we can see that the smaller we make t_s (the larger f_s), the better the resulting filter when either impulse invariance design method is used, because the larger f_s sampling rate reduces the replicated spectral overlap indicated in FIG. 24(b).
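The sampling-rate effect can be reproduced by redesigning the same prototype at several f_s values. The Python sketch below recomputes the Method 2 coefficients for f_s = 100, 200, and 400 Hz and reports each filter's gain at DC, where the analog prototype's gain is exactly 1. The function names are hypothetical, and the sketch evaluates only the DC point, not the full curves of FIG. 29.

```python
import cmath

B_COEF, C_COEF = 137.94536, 17410.145          # prototype of Eq. (eqn. 55)
R = (C_COEF - B_COEF ** 2 / 4) ** 0.5          # same R as in the Method 2 example


def method2_coeffs(fs):
    """Hypothetical helper: redesign the example at fs (Hz); returns (t_s*b(1), a(1), a(2))."""
    ts = 1.0 / fs
    K1 = 1j * C_COEF / (2 * R)                  # K2 = -K1 (conjugate of a pure imaginary)
    q1 = cmath.exp(-(B_COEF / 2 + 1j * R) * ts)
    q2 = q1.conjugate()
    b1 = (K1 * (q1 - q2)).real                  # -(K1*q2 + K2*q1) with K2 = -K1
    a1 = (q1 + q2).real
    a2 = -(q1 * q2).real
    return ts * b1, a1, a2


def magnitude(coeffs, f, fs):
    """|H| at f Hz for H(z) = b1 z^-1 / (1 - a1 z^-1 - a2 z^-2) on the unit circle."""
    b1, a1, a2 = coeffs
    zinv = cmath.exp(-2j * cmath.pi * f / fs)
    return abs(b1 * zinv / (1 - a1 * zinv - a2 * zinv ** 2))


for fs in (100.0, 200.0, 400.0):
    coeffs = method2_coeffs(fs)
    # The analog prototype's DC gain is exactly 1, since H_c(0) = c/c.
    print(f"fs={fs:5.0f} Hz  coeffs={[round(v, 5) for v in coeffs]}  DC gain={magnitude(coeffs, 0.0, fs):.4f}")
```

The DC gain rises from about 0.86 at 100 Hz toward 1 at 400 Hz. The shortfall comes from the aliased spectral replicas that land at 0 Hz, so it shrinks as f_s grows. This is the spectral-overlap argument above, expressed numerically.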

The bottom line here is that impulse invariance IIR filter design techniques are most appropriate for narrowband filters; that is, low-pass filters whose cutoff frequencies are much smaller than the sampling rate.

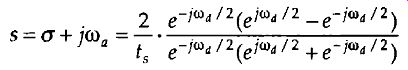

The second analytical technique for analog filter approximation, the bilinear transform method, alleviates the impulse invariance method's aliasing problems at the expense of what's called frequency warping. Specifically, there's a nonlinear distortion between the prototype analog filter's frequency scale and the frequency scale of the approximating IIR filter designed using the bilinear transform. Let's see why.

5. BILINEAR TRANSFORM IIR FILTER DESIGN METHOD

There's a popular analytical IIR filter design technique known as the bilinear transform method. Like the impulse invariance method, this design technique approximates a prototype analog filter defined by the continuous Laplace transfer function H_c(s) with a discrete filter whose transfer function is H(z). However, the bilinear transform method has great utility because

• it allows us simply to substitute a function of z for s in H_c(s) to get H(z), thankfully eliminating the need for Laplace and z-transformations as well as any necessity for partial fraction expansion algebra;

• it maps the entire s-plane to the z-plane, enabling us to completely avoid the frequency-domain aliasing problems we had with the impulse invariance design method; and

• it induces a nonlinear distortion of H(z)'s frequency axis, relative to the original prototype analog filter's frequency axis, that sharpens the final roll-off of digital low-pass filters.

Don't worry. We'll explain each one of these characteristics and see exactly what they mean to us as we go about designing an IIR filter.

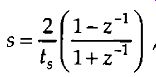

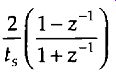

If the transfer function of a prototype analog filter is H_c(s), then we can obtain the discrete IIR filter z-domain transfer function H(z) by substituting the following for s in H_c(s):

$$s = \frac{2}{t_s}\left(\frac{1 - z^{-1}}{1 + z^{-1}}\right) \tag{eqn. 88}$$

where, again, t_s is the discrete filter's sampling period (1/f_s). Just as in the impulse invariance design method, when using the bilinear transform method, we're interested in where the analog filter's poles end up on the z-plane after the transformation. This s-plane to z-plane mapping behavior is exactly what makes the bilinear transform such an attractive design technique.
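Because Eq. (eqn. 88) is a plain algebraic substitution, applying it to a second-order prototype like Eq. (eqn. 55) takes nothing more than multiplying through by (1 + z^(-1))². The Python sketch below does that for the form c/(s² + bs + c), with no frequency prewarping. The function names are hypothetical, and the coefficient convention is that of Eq. (eqn. 52).

```python
import cmath


def bilinear_second_order(b, c, ts):
    """Hypothetical helper: apply s = (2/ts)(1 - z^-1)/(1 + z^-1) to c / (s^2 + b s + c).

    Returns (num, a) with H(z) = sum num[k] z^-k / (1 - sum a[k] z^-k); a[0] is unused.
    No frequency prewarping is applied.
    """
    K = 2.0 / ts
    # Multiplying numerator and denominator by (1 + z^-1)^2 leaves polynomials in z^-1:
    #   numerator:   c (1 + 2 z^-1 + z^-2)
    #   denominator: K^2 (1 - z^-1)^2 + b K (1 - z^-2) + c (1 + z^-1)^2
    d0 = K * K + b * K + c
    d1 = -2 * K * K + 2 * c
    d2 = K * K - b * K + c
    num = [c / d0, 2 * c / d0, c / d0]
    a = [0.0, -d1 / d0, -d2 / d0]   # sign flip for the 1 - sum a(k) z^-k convention
    return num, a


def magnitude(num, a, f, fs):
    """|H(z)| on the unit circle at frequency f (Hz)."""
    zinv = cmath.exp(-2j * cmath.pi * f / fs)
    top = sum(coef * zinv ** k for k, coef in enumerate(num))
    bottom = 1 - sum(a[k] * zinv ** k for k in range(1, len(a)))
    return abs(top / bottom)


num, a = bilinear_second_order(137.94536, 17410.145, 0.01)
print("DC gain:", magnitude(num, a, 0.0, 100.0))         # analog H_c(0) = 1
print("gain at fs/2:", magnitude(num, a, 50.0, 100.0))   # s = infinity maps to z = -1
```

At DC the result gives 1 (to rounding), matching H_c(0). At f_s/2 it gives essentially 0, because s = ∞ maps to z = -1. No part of the analog response is folded back, which is the aliasing-free property listed in the bullets above.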

[ The bilinear transform is a technique in the theory of complex variables for mapping a function on the complex plane of one variable to the complex plane of another variable. It maps circles and straight lines to straight lines and circles, respectively. ]