Listening tests conducted by David L. Clark provide surprising answers

by Ian G. Masters

AUDIO loves its controversies. Over the years, arguments have raged as to whether speaker cables, or amplifiers, or lubricants, or bricks affect the purity of an audio signal. No one seems to win in these debates, but it all contributes to the fascination and fun of high fidelity.

These finer points of traditional audio have receded lately in the face of the greatest contribution (or threat, depending on how you look at it) to ultra-high fidelity that has come along since Edison spoke into that horn in 1877: the digital Compact Disc.

No sane person would deny that Compact Discs sound very different from conventional analog sources, although there is disagreement about whether the newer medium is an improvement over more familiar recording methods at their best.

The analog-vs.-digital debate will no doubt soon become as meaningless as the tubes-vs.-transistors debate. The Compact Disc is definitely here to stay. Not only that, but even the most cautious visionary would admit that it will eventually supplant the conventional LP disc.

One of the things that makes a digital future so attractive, according to its advocates, is that the digital disc is an absolute. Not only is it hiss-free, scratch-free, and pop-free, not only does it have wide dynamic range, low distortion, and all those good things, but it is also quintessentially consistent. In theory, if the CD format standards are met, the characteristics of the playback equipment are unimportant, because a given data stream on the disc will produce the same flawless audio signal from any player.

But is that really so? A great many very experienced listeners think it is, but probably as many deny it. Without even considering relative quality, the lines have been drawn between the faction that says "CD players all sound alike" and the one that claims "CD players sound different." The former listeners are perhaps influenced by the hope that audio has finally come up with something that is unquestionably unquestionable, the latter by the belief that nothing could be as good as CD's are supposed to be.

------

Without becoming embroiled in the "philosophical" aspects of the question, STEREO REVIEW decided to find out what, if any, audible differences there are among CD players. The editors started with the basic belief that if there are sonic variances they are very small, but that any real distinctions that do exist must be subject to proof by means of scientifically designed and controlled listening tests.

To this end, the magazine enlisted the aid of David L. Clark, of DLC Design, who developed the double-blind ABX comparator, and of the redoubtable Southeastern Michigan Woofer and Tweeter Marching Society (SMWTMS, pronounced "Smootums" by its members), a Detroit-based club that has few rivals as a bastion of concerned, but sane, audio enthusiasm. The club's members are extremely experienced listeners who have taken part in many critical audio experiments over the years. During the two heavy days of tests, eleven members of the society took part, a number large enough to filter out any "club prejudices" and to give statistical validity to the test results.


The question of audible differences may be a burning issue for some serious audiophiles and engineers on a purely theoretical level. But for the majority of those interested in audio equipment and recorded music, it is an important practical matter. Most equipment is sold on the basis of the way it differs from the rest of what is available. If you can hear the difference between Speaker A and Speaker B, a claim can be made that one is better than the other. Or you may prefer one over the other because of the way it sounds. Do CD players, like speakers, sound different enough to give you a basis for choice? That's the question STEREO REVIEW hoped David Clark's tests with SMWTMS would answer.

The tests took place in mid-September in a listening room at DLC in Michigan, constructed according to a proposed IEC standard, using a pair of Magnepan MG-IIIa speakers driven by a Threshold S/500 Series II 250-watt-per-channel amplifier. An ABX comparator was used for A/B switching.

With the ABX system, two components, a reference source and a "device under test," are compared.

The listeners switch between the A or B sources and a source called x, which is either A or B, as selected randomly for each trial by a microprocessor in the comparator. Listeners can spend as much time as they want in a trial, switching between A, B, and x, before they go on to the next trial in the test. In each trial listeners must decide whether x is the same as A or B. If the differences between the two sources are readily audible, this is a simple matter; if the sources are similar in sound, the task becomes more difficult. Identical-sounding sources elicit a random series of choices, since some choice must be made, but even very subtle differences should show a statistically significant increase in correct choices, even if the listeners think they hear no differences. The ABX system is designed to reveal differences only, not preferences between sounds.
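The scoring logic of an ABX session can be sketched in a few lines of Python. This is a hypothetical simulation, not the comparator's actual firmware, and it assumes a simple listener model of our own (the parameter `p_detect` is invented for illustration): on each trial the listener genuinely hears which source x is with probability `p_detect` and otherwise has to guess. Under that assumption the expected score is 0.5 + p_detect/2, so a listener who truly hears the difference only one time in five should still drift toward 60 percent correct over many trials.

```python
import random

def run_abx_session(n_trials, p_detect, seed=0):
    """Return the number of correct choices in a simulated ABX session.

    Hypothetical listener model: on each trial the listener truly hears
    which source x is with probability p_detect; otherwise they must guess.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        x_is = rng.choice("AB")            # comparator's hidden random assignment
        if rng.random() < p_detect:
            choice = x_is                  # the difference was actually heard
        else:
            choice = rng.choice("AB")      # forced guess: some choice must be made
        correct += (choice == x_is)
    return correct

trials = 10_000
for p_detect in (0.0, 0.2, 1.0):
    score = run_abx_session(trials, p_detect)
    print(f"p_detect={p_detect:.1f}: {score / trials:.3f} correct")
```

With `p_detect=0.0` the score hovers around 50 percent, which is the "random series of choices" that identical-sounding sources produce.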

Because listeners are asked to decide whether x is identical to A or to B, the only difference between the two sources must be their audio characteristics; anything else could "tip off" the listeners. For instance, if one source was even slightly ahead in time of the other, it would become immediately apparent when switching between x and either source. For this reason, the outputs of the players under test were matched as closely as possible before the listeners were allowed to hear them.

Levels were extremely important, for it is well known that even the slightest level difference will tend to make the louder unit seem "better." In fact, during the early part of the test sessions, one player was misadjusted so that its signal was a mere 0.2 dB higher than the reference, and virtually all the listeners caught it. While the misadjustment made this particular test series invalid, it did serve to prove the effectiveness of the testing system and to affirm the listeners' qualifications. (The unit was retested later with the correct levels.) The most difficult part of the procedure was keeping the two machines, the reference player and the one under test, in perfect synchronization. This was essential, because any timing offset between the two sources would have immediately indicated whether x was the same as A or B.
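For scale, a level difference in decibels corresponds to a voltage ratio of 10^(dB/20) and a power ratio of 10^(dB/10). The short Python sketch below (the helper names are ours) applies those standard relations to the 0.2-dB misadjustment and to the ±0.05-dB matching tolerance described in the test-design sidebar.

```python
def db_to_voltage_ratio(db):
    """Voltage (amplitude) ratio corresponding to a level difference in dB."""
    return 10 ** (db / 20)

def db_to_power_ratio(db):
    """Power ratio corresponding to a level difference in dB."""
    return 10 ** (db / 10)

for label, db in [("misadjusted player", 0.2), ("matching tolerance", 0.05)]:
    v = db_to_voltage_ratio(db)
    p = db_to_power_ratio(db)
    print(f"{label}: {db} dB = {100 * (v - 1):.2f}% more voltage, "
          f"{100 * (p - 1):.2f}% more power")
```

In other words, the level change that virtually all the listeners caught amounts to only about a 2.3 percent change in signal voltage, roughly four times the tolerance used for matching.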

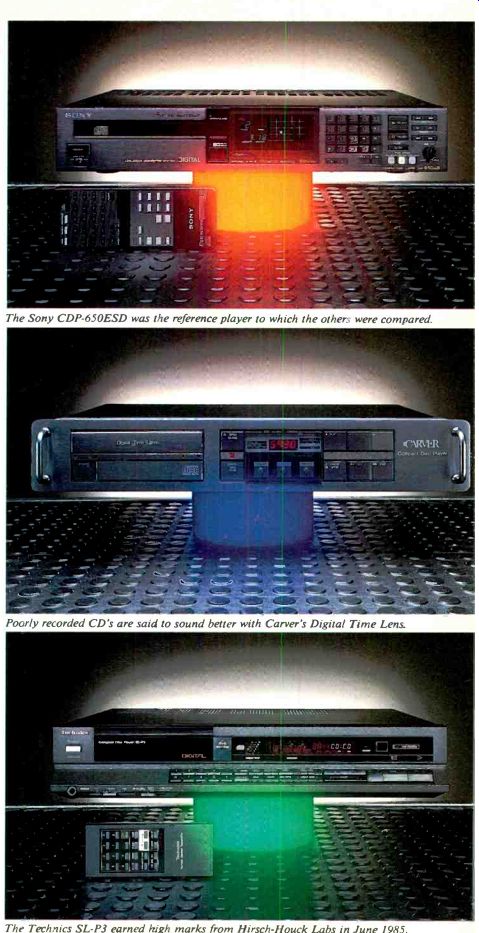

As it happened, one of the players, a Sony CDP-650ESD, could be modified by replacing its internal clock reference crystal with a Hewlett-Packard 8640B RF signal generator, allowing its speed to be varied without affecting its audio characteristics. The Sony CDP-650ESD therefore became the reference device in each test, because it could be brought into sync with whichever other machine was being tested. An automatic-mute system cut the audio output of both players if they got out of sync, although synchronizing them initially required some fancy footwork on the part of technician Arthur Greenia.

In the initial round, twenty listening trials were performed on each machine. The first five used a series of impulse signals from the Denon 38C39-7147 test CD, and they were followed by another five using white noise from the same disc. The next five used the thunderous "Star Tracks" CD from Telarc, which features massive orchestration and lots of bass (most of the listeners felt the speakers weren't up to the demands of the Telarc CD's low end). The final five used an intimate jazz recording by Warren Bernhardt, called "Trio '83," on the dmp Records label. The tests were run from a lab outside the listening room; listeners communicated with the technicians by flailing their arms at a video camera linking the two rooms.

The primary tests were held on a Friday evening, when listeners auditioned all six machines (including the reference). There were eight listeners in the room, and while the danger that the listeners would interact and influence each other seemed to be real (there was considerable chit-chat, at least between selections), this didn't seem to have any significant effect on the test results. The next day, follow-up tests were conducted to clear up some questions from the night before and to confirm some of the results. Six listeners were involved in the second round, including three from the night before. All the tests were "double-blind": neither the listeners nor the technicians knew which machine corresponded to the x button at any time during a test, thanks to the random selection performed automatically by the ABX comparator.

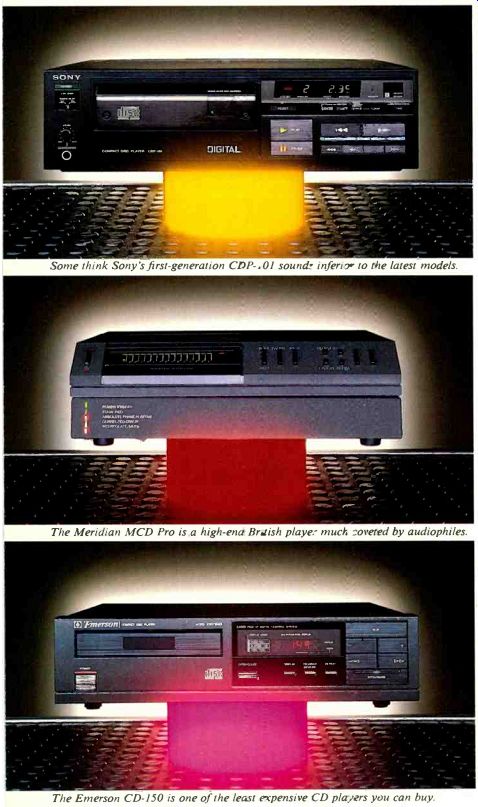

Six CD players were included in the sample. Two Sony units were chosen: the CDP-650ESD, a latest-generation, high-end ($1,300) player (its ability to have its speed varied was a bonus), and the CDP-101, a first-generation unit that is reputed to have a distinctive audio character. The Technics SL-P3 ($600) is a full-featured player from another leading Japanese proponent of the CD system, and the Emerson CD-150 is a relatively modest, inexpensive player (it often sells for less than $200, though the list price is $449). The Meridian MCD Pro is the basic Philips deck (Philips did start all this, after all) with some English electronic modifications and an exotic price tag ($1,400).

Finally, the American entry was the Carver DTL-100 ($650), which is one of the few players to include circuitry designed to correct audible problems in some Compact Discs.

What Carver calls a Digital Time Lens is an equalization/difference signal correction circuit intended for use with CD's that have been improperly recorded, and the circuit should be switched out for properly made digital recordings.

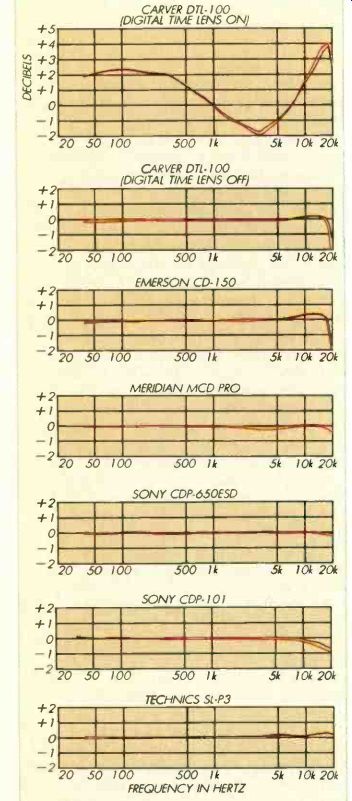

---------------

The Sony CDP-650ESD was the reference player to which the others were compared.

Poorly recorded CD's are said to sound better with Carver's Digital Time Lens.

The Technics SL-P3 earned high marks from Hirsch-Houck Labs in June 1985.

Some think Sony's first-generation CDP-101 sounds inferior to the latest models.

The Meridian MCD Pro is a high-end British player much coveted by audiophiles.

The Emerson CD-150 is one of the least expensive CD players you can buy.

--------------------------

As a preliminary step toward explaining any audible differences among the players that might turn up in the A/B listening tests, a series of frequency-response measurements was made using the Denon test disc. The effect of the Carver player's Digital Time Lens feature was immediately apparent in its measured response. Switching the circuit on resulted in a 2-dB rise at the low end compared to the reference 0-dB point at 1,000 Hz, a dip of almost 2 dB at 3,000 Hz, and a rise to +4 dB at 18,000 Hz. Such deliberate manipulation would certainly be audible, but it says nothing about the basic similarity of CD players without such modification.

With the Time Lens switched out, the Carver player came much closer to the sort of frequency response we would expect from a CD player: flat within 0.3 dB up to 18,000 Hz and within 0.1 dB up to 6,000 Hz. At the very top of the audio range, the curve fell to -1.6 dB at 20,000 Hz, but this drop would probably not be audible. The Emerson showed a similar drop at the top end as well as a peak of about 0.3 dB between 10,000 and 16,000 Hz; response was flat within 0.1 dB up to 10,000 Hz.

The Technics showed a 0.3-dB peak above 16,000 Hz on one channel, 0.25 dB on the other, and the Sony CDP-101 exhibited a very gradual rolloff above 10,000 Hz, dropping to about -0.7 dB at 20,000 Hz.

Both the Sony CDP-650ESD and the Meridian were flat within ±0.1 dB across the spectrum.

Except for the Carver player with its Digital Time Lens on, the measured frequency-response variations were extremely small, particularly when compared with those of most other audio components. Nevertheless, in terms of the subtle audible differences claimed to exist among CD players, they might have some significance.

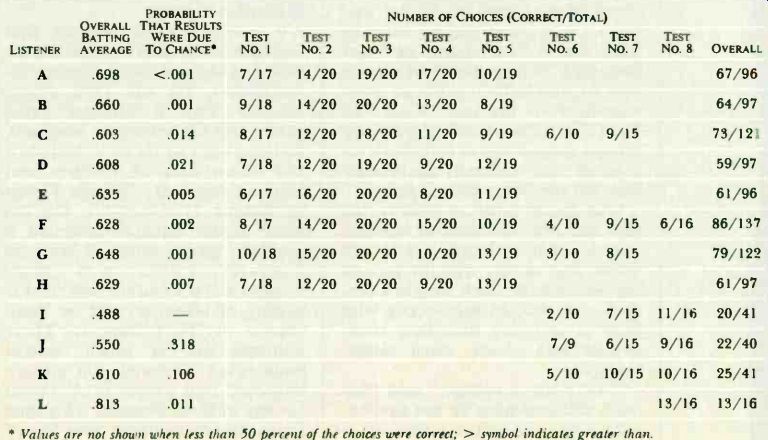

It was not, of course, possible to perform similar basic measurements on the other variable in this testing process: the listeners themselves. It was possible, however, to look at the listeners' responses after the fact and identify any extreme inconsistencies. With the original group of eight listeners, the consistency was remarkable. We calculated "batting averages" (the percentage of correct choices in all the tests participated in) for each listener. The averages of the original eight ranged from .603 to .698, compared with an overall batting average of .637. As David Clark pointed out, "There were no Golden Ears found, and if there were any Tin Ears in the second round, they didn't take part in enough tests to show themselves." In any event, the first round of tests, which were performed at one sitting and under pressure to get through all the machines, produced much more consistent results than the less formal supplemental tests the next day.
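The batting-average arithmetic is simple enough to check against the totals given in the notes to the score tables (550 correct out of 863 for the original eight, 630 out of 1,001 for the whole panel). A minimal Python sketch, with helper names of our own:

```python
def batting_average(correct, total):
    """Share of ABX choices that were correct, rounded to three decimals."""
    return round(correct / total, 3)

def as_baseball(avg):
    """Format like a batting average: three decimals, no leading zero."""
    return f"{avg:.3f}".lstrip("0")

print("original eight:", as_baseball(batting_average(550, 863)))   # .637
print("whole panel:   ", as_baseball(batting_average(630, 1001)))  # .629
```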

No test procedure is perfect, but this series seemed to have filtered out as many unwanted influences as one could reasonably expect. So, what did the group hear? The first unit to be auditioned was the Technics SL-P3. Until the panel's ears became attuned to what was happening, they strained to hear any difference whatever, but as the tests proceeded, there was some feeling that there was a slight difference in brightness between the Technics and the reference Sony.

This difference was heard only on the impulse and noise portions of the test, however, and several listeners commented that if the differences were so hard to hear on pure test signals, they should be almost impossible to hear with music. And so it turned out: the length of time needed for each test increased as soon as the music came on, and brows furrowed noticeably. The consensus after it was over was that audible differences disappeared when a music signal was being listened to.

As it happened, the "differences" weren't there to begin with. In spite of the comments in the room at the time and on the panel members' response sheets, the choices were entirely random. In straining to hear some sort of distinguishing characteristic in the sound, the listeners believed they had done so, but their responses proved that no such characteristics were consistently found. Because this was the first session, and because the results were at variance with the panel members' beliefs, the Technics was retested the next day-again with entirely random results.

The second unit was the Emerson CD-150. The story seemed quite different in this case, and the listeners were visibly relieved to find real differences, however subtle. On the

--------------

TEST DESIGN AND EQUIPMENT

THE first question to be answered in the design of a scientific listening test is: What are we trying to prove? In this case, we were trying to prove that some differences between CD players are audible. Even if we could prove that our listeners were not able to hear any differences between these CD players, we could never prove that some other group would be unable to hear differences. Perhaps different CD players, associated equipment, acoustics, or program material would make the differences audible. We therefore chose to restrict our goal to proving that at least one group or individual can hear a difference.

Every possible listener advantage was designed into this experiment. The listeners selected are audio hobbyists, most also having experience as musicians or recording engineers. The ABX double-blind comparator system was used to provide instant comparisons rather than waiting for patch cords to be swapped. Program material included exacting test signals as training for the carefully selected music CD's.

Magnepan MG III speakers were set up in an acoustically treated listening room by Wendell Diller of Magnepan.

In the adjacent "equipment" room, a Threshold S-500 II amplifier was monitored by oscilloscope to prevent clipping at any time. Program level was controlled by a Penny and Giles professional fader feeding a discrete-transistor gain and buffer amplifier. The CD player outputs were matched in level within ±0.05 dB by custom-selected film resistors soldered, not switched, into the circuit for each player. Short runs of Hitachi LC-OFC interconnect and speaker cable were used throughout. One-way communication to the operator was provided by a closed-circuit TV camera trained on a blackboard in the listening room.

Synchronization of the CD players was a major difficulty. If one player's signal were to lead or lag behind the other's, the listeners would be able to distinguish them on this irrelevant basis. Even if you start two players in sync, no easy task in itself, they will usually become noticeably out of sync in a few minutes. To overcome this, one unit, a Sony CDP-650ESD, was chosen to be the reference source in all the tests. It was modified, with an external signal generator taking the place of its clock crystal, so that its speed could be matched with any of the other players. Once the two players were started in sync, changing the output frequency on the generator allowed "touching up" the synchronization with speed changes of less than 0.01 percent. An envelope-comparator circuit sampled the left-channel outputs of the two CD players and automatically muted the audio to the listening room if an out-of-sync condition developed. Once the operator regained sync, the mute was reset.
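Why constant touching up was needed follows from simple arithmetic: a fixed speed mismatch between two players produces a timing offset that grows linearly with playing time. The Python sketch below is illustrative only; it assumes, hypothetically, a residual mismatch equal to the 0.01 percent touch-up step, and the elapsed times are chosen for illustration rather than taken from the tests.

```python
def drift_ms(speed_mismatch_percent, elapsed_s):
    """Timing offset, in milliseconds, that accumulates between two players
    whose playback speeds differ by `speed_mismatch_percent`."""
    return elapsed_s * (speed_mismatch_percent / 100) * 1000

# Assumption: a residual mismatch equal to the 0.01 percent touch-up step.
for minutes in (1, 5, 20):
    print(f"{minutes:>2} min -> {drift_ms(0.01, minutes * 60):.0f} ms out of sync")
```

Even that small mismatch would amount to several milliseconds per minute, enough over a long selection to give the listeners an irrelevant timing cue.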

The power of statistical analysis was fully utilized to uncover even "subliminal" audibility. With the ABX comparator, pure guessing will get you close to 50 percent correct, while 100 percent represents hearing certainty (or incredible luck). A score of 75 percent is commonly considered to represent the "threshold of audibility." But what if hundreds of responses yield a score of, say, 60 percent correct? Some small difference below the supposed hearing "threshold" must be responsible for a listener's doing better than chance. Statistical analysis can derive the probability (p) that a 60 percent score was due to random guesses. A probability of one in twenty (p = .05) or less that the results were by chance is worth further study. A p of .01 (one in a hundred) is pretty secure proof that a difference was audible.
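One common way to derive that probability is a one-sided exact binomial test against pure guessing (a 1/2 chance per trial). The sidebar does not say exactly which calculation was used, so treat the Python sketch below as an illustration of the idea rather than the method behind the published tables; the function name is ours.

```python
from math import comb

def abx_p_value(correct, trials):
    """One-sided exact binomial p-value: the chance of scoring at least
    `correct` out of `trials` by pure guessing (probability 1/2 per trial)."""
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials

for correct, trials in [(12, 20), (15, 20), (120, 200)]:
    p = abx_p_value(correct, trials)
    print(f"{correct}/{trials} = {correct / trials:.0%} correct -> p = {p:.4f}")
```

The same 60 percent score means little in a single twenty-trial test (p of about .25) but falls well below .01 over 200 trials, which is why pooling hundreds of responses can expose differences below the nominal 75-percent threshold.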

All this switching and statistics doesn't have much in common with the home listening experience. However, both home listening and our tests involve real people (as opposed to instruments), real sound systems, and real music program material. The double-blind test setup eliminates all but sonic differences and is a far more sensitive and repeatable indicator of performance than home listening. (Try to prove you hear a 0.3-dB response error in one unit using a casual plug/unplug test.) Nonetheless, since the two listening experiences are different, we should not expect audible differences to show up in the same way. A statistical confirmation of a difference heard in a four-hour test may relate to an audiophile's growing dissatisfaction over a period of months with "listening fatigue" or a nuance such as "ambience retrieval."

David L. Clark

---------------------


An exaggerated vertical scale, with 1 dB per division instead of the usual 5 dB, is used to show subtle frequency-response differences among CD players.

As a result, the intentional response modifications caused by the Carver player's Digital Time Lens appear to be somewhat more dramatic than they really are. The response of these players was measured with a Denon test disc that has a minimum frequency of 40 Hz. STEREO REVIEW'S experience with CD player tests over the past three years suggests that it is safe to assume that all these players have flat response down to 20 Hz. (Red curves show the right-channel response, gray curves the left.)

-----------------

... other hand, there was also surprise that there were any differences at all. The panelists generally expected to hear none, and they had been reassured by the difficulty of hearing any in the Technics test.

What they didn't realize was that in the first fifteen of the twenty trials on the Emerson, there was an inadvertent level difference of 0.2 dB.

This level difference was consistently heard by the listeners, but the problem was corrected for the final five tests, and the results for that portion were entirely random.

Again, we decided to retest this unit the next day. With music signals the randomness of choice remained, as it did with white noise at reasonably high levels. With low-level white noise, however, the choices became statistically significant, although only just, so the listeners did seem to be hearing some real audible difference under those circumstances.

The third unit to be auditioned was the Carver, with the Digital Time Lens switched in. A difference was immediately audible, as might be expected from the frequency-response measurements. Of the 160 individual choices made by the panelists, only four failed to identify the Carver. The next day, the player was tested again with the Time Lens circuitry switched out. This time the results were very much like the others: the differences, if any, were very difficult to detect. Overall, the listeners did hear a slight difference, but only by a very tiny margin.
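To see just how far from guessing the Time Lens result was, the same kind of one-sided exact binomial calculation discussed in the test-design sidebar can be applied to 156 correct choices out of 160. This is our own illustrative calculation, not a figure reported by the panel.

```python
from math import comb

# Carver with the Digital Time Lens switched in: 4 misses out of 160 choices.
trials = 160
correct = trials - 4
# One-sided exact binomial tail: chance of >= `correct` hits by coin-flip guessing.
p_chance = sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials
print(f"{correct}/{trials} correct; probability by guessing ~ {p_chance:.1e}")
```

The probability comes out on the order of 10 to the minus 41, which is why this case says nothing about players that were not designed to sound different.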

The Meridian brought groans from the listeners, who wrote on their response sheets, and said afterwards during the pause between tests, that they heard absolutely nothing in the way of differences between it and the reference unit.

Oddly enough, while their responses during musical selections bore this out, with pure test signals they showed a definite ability to distinguish the Meridian.

Finally, the Sony CDP-101 proved immediately and consistently identifiable during the impulse tests, but the responses became random when anything else was played, whether white noise or music. A subsequent check of the impulse response on an oscilloscope showed a considerable visible difference between the CDP-101 and the Sony CDP-650ESD reference machine, and this may have been the cause of the audible difference.

Throughout the extended listening tests, it was clear that there were differences to be heard. The effects of the Carver Digital Time Lens were readily explainable; this machine was chosen for test because it was designed to sound different, and it did. The effects of the misadjusted level on the Emerson show that a difference doesn't have to be very great to be perceptible, at least in a test session.

But neither of these unusual cases really answers the question of whether there are significant audible differences between CD players of conventional design, adjusted properly. The apparent "personalities" under certain circumstances of the Sony CDP-101, the "straight" Carver, the Meridian, and the Emerson do suggest that all Compact Disc players are not created equal. Indeed, with the results of the misadjusted Emerson and the Carver's Digital Time Lens ignored, the panel's ability to hear differences 57.6 percent of the time with test signals was statistically significant even though the listeners were not confident they were hearing any differences. These differences may, of course, simply result from the players' slight differences in frequency response, but that's enough to confirm the view of the "CD players sound different" faction.
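The article does not say how many test-signal choices lie behind the 57.6 percent figure. As a rough, hypothetical check on how a score that close to chance can still be significant, the Python sketch below (helper names are ours, and it assumes a one-sided exact binomial test at p = .05, which may not be the method actually used) searches for the smallest number of ABX choices at which a score of at least 57.6 percent would clear that bar.

```python
from math import comb

def abx_p_value(correct, trials):
    """Chance of scoring at least `correct` of `trials` by pure guessing."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials

def smallest_significant_n(rate_per_mille=576, alpha=0.05, n_max=1000):
    """Smallest number of choices at which a score of at least
    rate_per_mille/1000 correct has a chance probability <= alpha."""
    for n in range(1, n_max + 1):
        k = -(-rate_per_mille * n // 1000)   # ceiling, in exact integer arithmetic
        if abx_p_value(k, n) <= alpha:
            return n
    return None

print(smallest_significant_n())
```

Because scores come in whole choices the answer is a little lumpy, but it lands at roughly a hundred choices or more: a significant 57.6 percent implies a fairly large pool of test-signal trials, consistent with the sidebar's point that many responses can reveal differences well below the nominal threshold.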

At the same time, the listening tests confirmed that whatever the inherent differences, they are very small indeed. Even with pure test signals, it seems very unlikely that the differences could be heard except in a direct A/B comparison, and even then only in a comparison as carefully controlled as these tests were. With music, the numbers indicate that the scores were not significant, and it is difficult to imagine a real-life situation in which audible differences could reliably be detected or in which one player would be consistently preferred to another for its sound alone.

In the end, the main conclusion seems to be that audible differences do exist, but they don't matter unless you think they matter. Perhaps that will make everyone happy.

David L. Clark is president of DLC Design, an electronic/acoustical consulting firm, and a director of the company that manufactures the ABX comparator. He is a contributing editor of Audio and has published technical papers in the Journal of the Audio Engineering Society. The AES recently elected him a fellow for his work in double-blind testing techniques. He is also a member of the Acoustical Society of America and the National Academy of Recording Arts and Sciences.

---------------

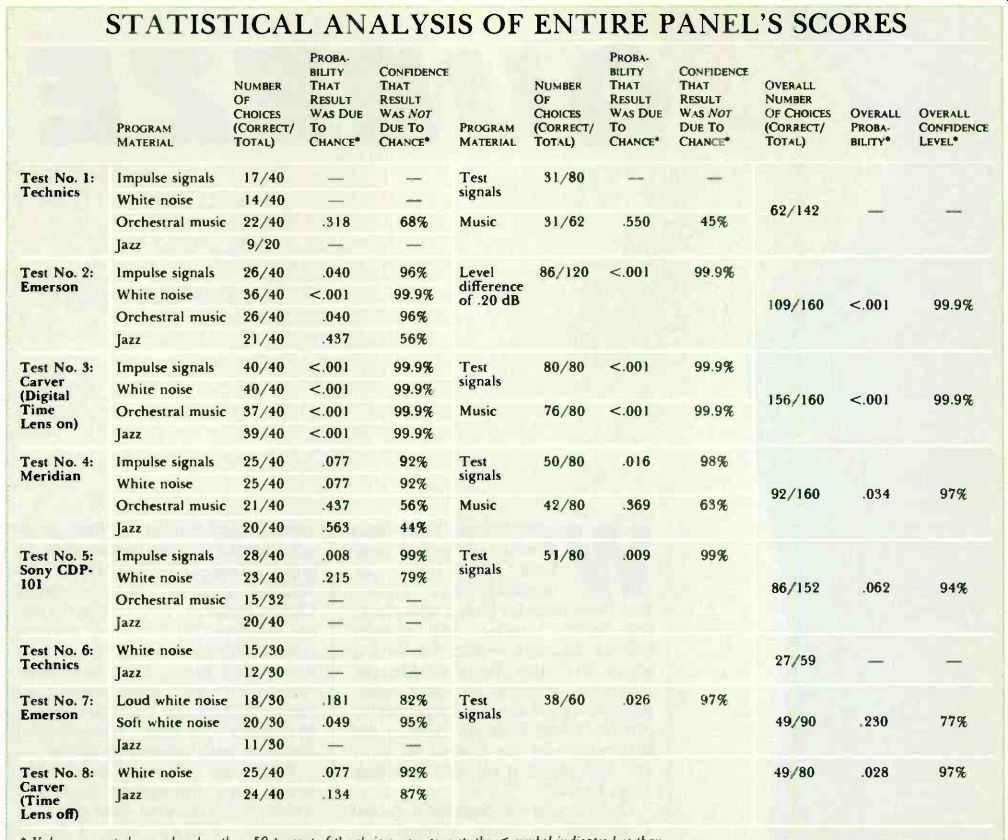

STATISTICAL ANALYSIS OF ENTIRE PANEL'S SCORES

* Values are not shown when less than 50 percent of the choices were correct; the < symbol indicates less than.

INDIVIDUAL LISTENER SCORES

* Values are not shown when less than 50 percent of the choices were correct; > symbol indicates greater than.

NOTES:

In Test No. 1, the playback amplifier blew a fuse during Trial No. 18 (the third trial in the section using the jazz recording). The test was not completed, but some listeners had already made a choice in that trial. Thus, some listeners made a total of eighteen choices in Test No. 1, others only seventeen, for a panel total of 142 rather than the projected 160.

In Test No. 5, all of Trial No. 11 (in the orchestral-music section) had to be thrown out because the CD's went out of sync. Thus each panelist made only nineteen choices in this test, not twenty.

In Test No. 6, listener J could not decide in Trial No. 3, so he did not make a choice.

Test No. 8 had only two parts, and only five listeners participated. Therefore eight trials were run in each part, rather than the five trials in the other tests, in order to increase the choice totals and give greater statistical power to the results.

The overall batting average for the entire panel was .629, with 630 correct choices out of a total of 1001.

For the original group of eight panelists, the overall batting average was .637, with 550 correct choices out of 863 total.
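The note on Test No. 1 pins down how its 142 choices were divided. The projected total of 160 implies eight panelists at twenty trials each; if each made either seventeen or eighteen choices, the split follows from two simple equations. A small Python sketch (the function is our own, for illustration):

```python
def split_choices(listeners, panel_total, fewer=17, more=18):
    """Solve a + b = listeners and more*a + fewer*b = panel_total.

    Returns (a, b): how many listeners made `more` choices and how many `fewer`.
    """
    extra, remainder = divmod(panel_total - fewer * listeners, more - fewer)
    if remainder or not 0 <= extra <= listeners:
        raise ValueError("totals are inconsistent with that split")
    return extra, listeners - extra

print(split_choices(8, 142))  # (6, 2)
```

So six panelists had already chosen in Trial No. 18 when the fuse blew, and two had not.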

====================


Source: Stereo Review (USA magazine)