
At a Los Angeles Hi-Fi show, Ed Villchur of Acoustic Research, a few others, and I once took part in a small psychoacoustic experiment. The object was to determine whether there was a specific or minimum playback level necessary to achieve a reasonable subjective simulation of “live” sound. After listening to a variety of selections from the best recordings of the day, we agreed that there did in truth seem to be a specific volume level (which varied somewhat with the recording) at which the music suddenly sounded more “natural.” Below that point there was nothing specifically wrong with the sound; it just wasn’t right.

After about an hour of sampling different discs, we found that we generally agreed, within a few decibels, on the volume setting that sounded best. I don’t mean to suggest that the sound was perfect at any level, only that there was a specific volume level at which, for obscure reasons, the reproduced music seemed more realistic.

In the half-dozen decades since, I’ve learned something about the way the human ear/brain responds to sound levels. Psychoacousticians make a clear and necessary distinction between loudness and sound intensity. Loudness is the ear/brain’s subjective auditory reaction to an objective stimulus, the sound-pressure level. The distinction is necessary simply because our perception of loudness lacks a linear, one-to-one correspondence with the objective world.

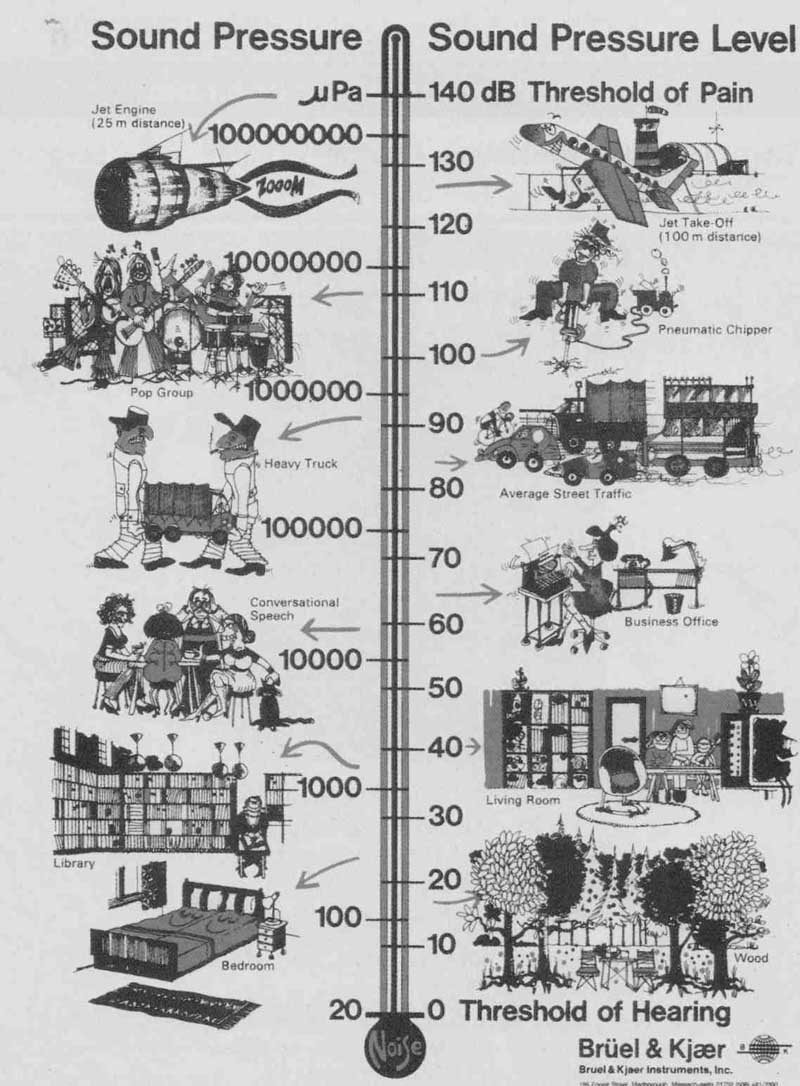

There are good evolutionary reasons why that is so. With respect to volume, for example, the noise created by a jet plane at takeoff is about ten thousand billion times as powerful as the quietest sound we can hear! If, on a linear scale, that faintest audible sound were assigned one intensity unit, a jet engine would have an intensity of ten trillion units!
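The decibel exists to tame exactly this kind of ratio. A minimal sketch, assuming the standard definition for intensity (power) ratios, 10 × log10(ratio); the function and constant names are illustrative only:

```python
import math

def intensity_ratio_to_db(ratio):
    """Express an intensity (power) ratio in decibels: 10 * log10(ratio)."""
    return 10 * math.log10(ratio)

# "Ten thousand billion" (ten trillion) times the threshold of hearing.
JET_TO_THRESHOLD = 10 ** 13

print(intensity_ratio_to_db(JET_TO_THRESHOLD))  # about 130 dB
print(intensity_ratio_to_db(2))                 # doubling intensity adds about 3 dB
```

A ten-trillion-to-one range collapses to roughly 130dB, which is why sound levels are almost always quoted in decibels rather than raw intensity units.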

The way the human ear/brain compresses this enormous dynamic range into something it can handle and evaluate was originally investigated by a 19th-century physicist and philosopher, Gustav Theodor Fechner. In 1860 he published a groundbreaking work, Elements of Psychophysics, which attempted to establish a specific relationship between the outer objective world and the inner subjective one in all areas of sensation. Fechner’s law states, for example, that each time the intensity of a sound is doubled, one step is added to the sensation of loudness. In Fechner’s view, sensation increased as the logarithm of the strength of the stimulus.
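Fechner’s law can be written as S = k · log(I / I0). The sketch below uses a base-2 logarithm so that one “step” of sensation corresponds to one doubling of intensity, as in the description above; the scaling constant `k` and the threshold intensity `i0` are arbitrary assumptions, not values from Fechner.

```python
import math

def fechner_sensation(intensity, i0=1.0, k=1.0):
    """Fechner's law: sensation grows with the logarithm of stimulus intensity.
    With a base-2 log and k = 1, one unit of sensation = one doubling (assumed units)."""
    return k * math.log2(intensity / i0)

# Sensation for intensities 1, 2, 4, 8, 16 (each double the last).
levels = [fechner_sensation(2 ** n) for n in range(5)]
increments = [b - a for a, b in zip(levels, levels[1:])]
print(increments)  # every doubling adds the same fixed step
```

Whatever base or constant is chosen, the defining property is the same: equal intensity ratios produce equal additive steps of sensation.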

The decibel (dB), which measures sound energy in logarithmic units, would seem to conform nicely with Fechner’s law. But it soon became apparent to anyone who listened carefully that a noise level of, say, 50dB did not sound half as loud as 100dB. (Fifty decibels is the background noise in a library reading room; 100dB is equivalent to a jet plane heard 1,000 feet overhead, and its perceived loudness is about 30 times greater than that of 50dB.)

FIGURE 1: Relative loudness levels of common sounds.

After much research effort starting in the 1930s at the Psychoacoustic Laboratory at Harvard University, Fechner’s logarithmic approach to auditory perception was ultimately replaced by a true scale of loudness: the sone. The sone scale has a rather straightforward rule: each intensity increase of 10 decibels doubles the sensation of loudness. Today it’s generally accepted that sound levels must be raised by 10dB before they seem twice as loud.
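The 10dB-per-doubling rule is what turns a 50dB difference into “about 30 times” louder. A minimal sketch, assuming the usual anchor of 1 sone = 40 phons and treating dB SPL as phons (strictly true only near 1000Hz); the function names are illustrative:

```python
def phons_to_sones(phons):
    """Loudness in sones from loudness level in phons: 1 sone at 40 phons,
    doubling for every 10-phon increase (rule holds roughly above 40 phons)."""
    return 2 ** ((phons - 40) / 10)

def loudness_ratio(level_a_db, level_b_db):
    """How many times louder level_a sounds than level_b under the 10 dB rule."""
    return 2 ** ((level_a_db - level_b_db) / 10)

print(loudness_ratio(100, 50))  # 2**5 = 32, i.e. "about 30 times"
print(loudness_ratio(60, 50))   # one 10 dB step = twice as loud
print(phons_to_sones(40))       # the 1-sone reference point
```

Note that a 50dB increase is a 100,000-fold rise in intensity but only a 32-fold rise in perceived loudness.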

LOUDNESS CONTOURS

The names Fletcher and Munson are commonly invoked when amplifier loudness controls are discussed. In 1933 they were among the first researchers to demonstrate the very nonlinear relationships among the objective sound-pressure level of a sound, its frequency, and its subjective loudness. Aside from the fact that the research had conceptual and practical flaws, it also—at least in the audio equipment area—was misunderstood and misapplied. Let’s see where things went wrong.

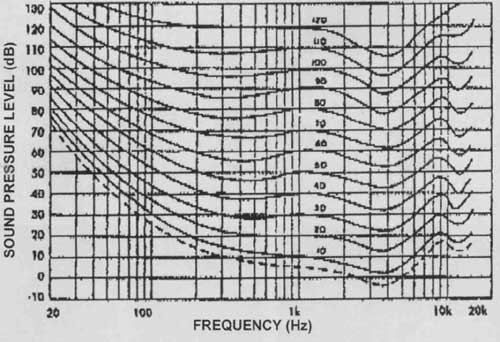

In the original experiment, listeners in an anechoic chamber were asked to match the loudness of test tones of different frequencies and intensities against calibrated 1000Hz reference tones produced at a variety of specific levels. The general results are familiar to most of us: the ear loses sensitivity to low frequencies as the sound level is reduced. Later work in the mid-1950s by Robinson and Dadson, using superior instrumentation, produced a somewhat modified set of loudness contours (Fig. 2). Their results were subsequently adopted by the International Organization for Standardization and are now known officially as the ISO equal-loudness contours. Despite the international acceptance of the R-D curves, keep in mind that the techniques used to derive them (pure tones in an anechoic chamber) do not correspond exactly to music listened to in a living room.

ACHIEVING REALITY

Anyone who has been critically listening to music with any regularity should by now be convinced that realistic reproduction is no easy task. The basic problem is the need to present to the listener’s ears a three-dimensional acoustic simulation of the live musical event. It has become obvious that the problem can’t be solved by conventional two-channel stereo, and digital “dimension synthesizers” and multi-channel A/V systems are now becoming commonplace. However, adding the extra channels is a necessary step, but not a sufficient one; the original sound level must still be reproduced at the listener’s ears. Why should this be so?

Although the question may seem dauntingly complex and laden with philosophical booby traps, some simple, if incomplete, answers are available. Setting aside the question of the absolute accuracy of the loudness curves discussed earlier, you know that the ear’s frequency response changes in accordance with the level of the impinging signal. For example, suppose that you were to make a good recording of a live dance band playing at an average level (at the microphones) of 70dB. If you were to subsequently play back the recording at a 50dB level in your home, the bass frequencies would automatically (as per Fig. 2) suffer a 13dB loss relative to the mid frequencies. Obviously, not only would the bass line be attenuated, but the entire sound of the band would be thinned out.

OTHER PROBLEMS

The ear has other loudness-dependent peculiarities. As a transducer, it is both asymmetrical and nonlinear, and therefore regularly creates (and hears) frequencies that were not in the original material. These are known as aural harmonics and combination tones, which correspond to harmonic and IM distortion products in non-biological audio equipment.
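To see how a nonlinear transducer invents new frequencies, the sketch below feeds two pure tones through a hypothetical polynomial transfer curve and measures the output at the expected product frequencies. The curve `toy_nonlinearity` and its coefficients are assumptions chosen for illustration, not a model of the real ear: the squared term stands in for asymmetry (even-order products such as F2 − F1), the cubed term for symmetric nonlinearity (odd-order products such as 2F1 − F2).

```python
import math

FS = 48_000  # sample rate in Hz (assumed)
N = 4_800    # 0.1 s of signal, so every multiple of 10 Hz lands exactly on a DFT bin

def toy_nonlinearity(x, a2=0.1, a3=0.05):
    """Hypothetical transfer curve: the x**2 term is asymmetric (even-order
    products), the x**3 term symmetric (odd-order products)."""
    return x + a2 * x ** 2 + a3 * x ** 3

def amplitude_at(signal, freq):
    """Amplitude of the sinusoidal component at `freq`, from a single DFT bin."""
    re = sum(s * math.cos(2 * math.pi * freq * n / FS) for n, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq * n / FS) for n, s in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)

F1, F2 = 1000, 1200  # two pure input tones in Hz
tones = [math.sin(2 * math.pi * F1 * n / FS) + math.sin(2 * math.pi * F2 * n / FS)
         for n in range(N)]
heard = [toy_nonlinearity(s) for s in tones]

products = {
    "aural harmonic 2*F1": 2 * F1,
    "difference tone F2-F1": F2 - F1,
    "cubic difference tone 2*F1-F2": 2 * F1 - F2,
    "summation tone F1+F2": F1 + F2,
}
for name, f in products.items():
    # Input has (essentially) nothing at these frequencies; the output does.
    print(f"{name:>30} ({f} Hz): in {amplitude_at(tones, f):.4f}, "
          f"out {amplitude_at(heard, f):.4f}")
```

None of the four product frequencies exists in the input, yet all appear at the output. Raising the input level makes the squared and cubed terms grow faster than the linear one, which is one way to picture why the ear’s own products depend on playback level.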

Because the amounts of these acoustic artifacts generated by the ear depend on signal level, any level differences between the recording and playback are going to cause different reactions in the listener’s ears. To complicate matters further, low-frequency sounds appear to decrease in pitch when intensity is raised, while highs subjectively increase in pitch. Psychoacousticians chart perceived pitch, as distinct from physical frequency, on what they call the mel scale.
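The mel scale itself maps frequency to perceived pitch at a fixed listening level; it does not by itself model the level-dependent pitch shifts just described. As a rough sketch, the code below uses one widely used empirical curve fit (the 2595/700 form often attributed to O’Shaughnessy), which is an approximation and not the original experimental data:

```python
import math

def hz_to_mel(f_hz):
    """Common empirical fit to the mel scale; 1000 Hz maps to about 1000 mels."""
    return 2595 * math.log10(1 + f_hz / 700)

def mel_to_hz(mels):
    """Inverse of hz_to_mel."""
    return 700 * (10 ** (mels / 2595) - 1)

for f in (100, 500, 1000, 2000, 4000):
    print(f, round(hz_to_mel(f)))
```

Above roughly 1000Hz, doubling the frequency yields much less than a doubling in mels, another instance of the ear’s nonlinear mapping between the physical and the perceived.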

These and other reasons help explain why music sounds correct only when played at the level (the original level, that is) that properly relates to the ear’s peculiar internal processing. I doubt that it’s possible to design a loudness control that really works, so for the present, at least, you just need to do the best you can, loudness-wise, neighbors and spouses permitting.

FIGURE 2: Robinson-Dadson equal-loudness contours for binaural free-field listening conditions. The numbers on the left side of the chart and on the lines in the middle are the sound pressure level in decibels. Sounds below the level of the dashed line are not audible to humans; e.g., a 40Hz bass tone would not be audible unless it exceeded 50dB in sound pressure level.
