1. Introduction

The distances involved in transmission vary from that of a short cable between adjacent units to communication anywhere on earth via data networks or radio communication. This section must consider a correspondingly wide range of possibilities. The importance of direct digital interconnection between audio devices was realized early, and numerous incompatible (and now obsolete) methods were developed by various manufacturers until standardization was reached in the shape of the AES/EBU digital audio interface for professional equipment and the SPDIF interface for consumer equipment. These standards were extended to produce the MADI standard for multi-channel interconnects. All of these work on uncompressed PCM audio.

As digital audio and computers continue to converge, computer networks are also being used for audio purposes. Audio may be transmitted on networks such as Ethernet, ISDN, ATM and the Internet. Here compression may or may not be used, and non-real-time transmission may also be found according to economic pressures.

Digital audio is now being broadcast in its own right as DAB, alongside traditional analog television as NICAM digital audio and as MPEG or AC-3 coded signals in digital television broadcasts. Many of the systems described here rely upon coding principles described in Sections 6 and 7.

Whatever the transmission medium, one universal requirement is a reliable synchronization system. In PCM systems, synchronization of the sampling rate between sources is necessary for mixing. In packet-based networks, synchronization allows the original sampling rate to be established at the receiver despite the intermittent transfer of data in real packet systems. In digital television systems, synchronization between vision and sound is a further requirement.

2. Introduction to AES/EBU interface

The AES/EBU digital audio interface, originally published in 1985, [1] was proposed to embrace all the functions of existing formats in one standard.

The goal was to ensure interconnection of professional digital audio equipment irrespective of origin. The EBU ratified the AES proposal with the proviso that the optional transformer coupling was made mandatory, which led to the term AES/EBU interface, also called EBU/AES in some European countries. The contribution of the BBC to the development of the interface must be mentioned here. Alongside the professional format, Sony and Philips developed a similar format now known as SPDIF (Sony Philips Digital Interface) intended for consumer use. This offers different facilities to suit the application, yet retains sufficient compatibility with the professional interface so that, for many purposes, consumer and professional machines can be connected together. [2,3]

The AES concerns itself with professional audio and accordingly has had little to do with the consumer interface. Thus the recommendations to standards bodies such as the IEC (International Electrotechnical Commission) regarding the professional interface came primarily through the AES, whereas the consumer interface input was primarily from industry, although based on AES professional proposals. The IEC and various national standards bodies naturally tended to combine the two into one standard such as IEC 958 [4], which refers to both the professional interface and the consumer interface. This process has been charted by Finger. [5] Understandably, with so many standards relating to the same subject, differences in interpretation arise, leading to confusion about what should or should not be implemented, and indeed what the interface should be called. This section will refer generically to the professional interface as the AES/EBU interface and the consumer interface as SPDIF.

Getting the best results out of the AES/EBU interface, or indeed any digital interface, requires some care. Section 13.9 treats this subject in some detail.

3. The electrical interface

During the standardization process it was considered desirable to be able to use existing analog audio cabling for digital transmission. Existing professional analog signals use nominally 600 ohm impedance balanced line screened signaling, with one cable per audio channel, or in some cases one twisted pair per channel with a common screen. The 600 ohm standard came from telephony where long distances are involved in comparison with electrical audio wavelengths. The distances likely to be found within a studio complex are short compared to audio electrical wavelengths and as a result at audio frequency the impedance of cable is high and the 600 ohm figure is that of the source and termination. Such a cable has a different impedance at the frequencies used for digital audio, around 110-ohm.

If a single serial channel is to be used, the interconnect has to be self clocking and self-synchronizing, i.e. the single signal must carry enough information to allow the boundaries between individual bits, words and blocks to be detected reliably. To fulfill these requirements, the AES/EBU and SPDIF interfaces use FM channel code (see Section 6) which is DC free, strongly self-clocking and capable of working with a changing sampling rate. Synchronization of deserialization is achieved by violating the usual encoding rules.
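The FM rule described above can be sketched in a few lines. This is an illustrative model only, assuming each data bit is represented as two half-cells of a logic level; the function names are hypothetical and the sync-pattern violations are not modeled.

```python
def fm_encode(bits, start_level=0):
    """FM (biphase-mark) encode a list of data bits into half-cell levels.

    Every bit cell begins with a transition; a data one has an extra
    transition in the middle of the cell, a data zero does not.
    """
    level = start_level
    cells = []
    for bit in bits:
        level ^= 1              # transition at every bit-cell boundary
        cells.append(level)
        if bit:
            level ^= 1          # extra mid-cell transition for a one
        cells.append(level)
    return cells


def fm_decode(cells):
    """Recover data bits: a one is a cell whose two halves differ."""
    return [int(cells[i] != cells[i + 1]) for i in range(0, len(cells), 2)]


data = [1, 0, 1, 1, 0, 0]
line = fm_encode(data)
inverted = [c ^ 1 for c in line]   # e.g. a swapped twisted pair
print(fm_decode(line) == data, fm_decode(inverted) == data)
```

Because the decoder looks only at whether the two halves of a cell differ, an inverted signal decodes identically, which is why the polarity of the connection does not matter.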

The use of FM means that the channel frequency is the same as the bit rate when sending data ones. Tests showed that in typical analog audio cabling installations, sufficient bandwidth was available to convey two digital audio channels in one twisted pair. The standard driver and receiver chips for RS-422A [6] data communication (or the equivalent CCITT V.11) are employed for professional use, but work by the BBC [7] suggested that equalization and transformer coupling were desirable for longer cable runs, particularly if several twisted pairs occupy a common shield.

Successful transmission up to 350m has been achieved with these techniques.[8]

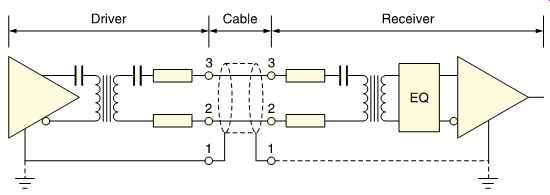

FIG. 1 Recommended electrical circuit for use with the standard two-channel interface.

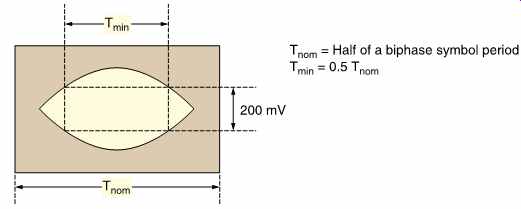

FIG. 2 The minimum eye pattern acceptable for correct decoding of standard two-channel data.

FIG. 1 shows the standard configuration. The output impedance of the drivers will be about 110 ohms, and the impedance of the cable used should be similar at the frequencies of interest. The driver was specified in AES-3-1985 to produce between 3 and 10V peak-to-peak into such an impedance but this was changed to between 2 and 7 volts in AES 3-1992 to better reflect the characteristics of actual RS-422 driver chips.

The original receiver impedance was set at a high 250 ohm, with the intention that up to four receivers could be driven from one source. This was found to be inadvisable because of reflections caused by impedance mismatches, and AES-3-1992 is now a point-to-point interface with source, cable and load impedance all set at 110 ohm. Whilst analog audio cabling was adequate for digital signaling, cable manufacturers have subsequently developed cables which are more appropriate for new digital installations, having lower loss factors allowing greater transmission distances.

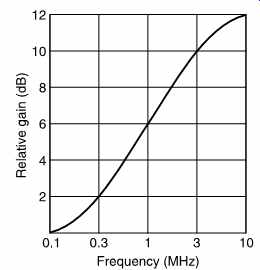

In FIG. 2, the specification of the receiver is shown in terms of the minimum eye pattern (see Section 6) which can be detected without error. It will be noted that the voltage of 200mV specifies the height of the eye opening at a width of half a channel bit period. The actual signal amplitude will need to be larger than this, and even larger if the signal contains noise. FIG. 3 shows the recommended equalization characteristic which can be applied to signals received over long lines.

As an adequate connector in the shape of the XLR is already in wide service, the connector made to IEC 268 Part 12 has been adopted for digital audio use. Effectively, existing analog audio cables having XLR connectors can be used without alteration for digital connections. The AES/EBU standard does, however, require that suitable labeling should be used so that it is clear that the connections on a particular unit are digital. Whilst the XLR connector was never designed to have constant impedance in the megahertz range, it is capable of towing an outside broadcast vehicle without unlatching.

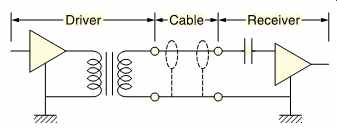

The need to drive long cables does not generally arise in the domestic environment, and so a low-impedance balanced signal was not considered necessary. The electrical interface of the consumer format uses a 0.5V peak single-ended signal, which can be conveyed down conventional audio grade coaxial cable connected with RCA 'phono' plugs. FIG. 4 shows the resulting consumer interface as specified by IEC 958.

FIG. 3 EQ characteristic recommended by the AES to improve reception in the case of long lines.

FIG. 4 The consumer electrical interface.

There is the additional possibility [9] of a professional interface using coaxial cable and BNC connectors for distances of around 1000m. This is simply the AES/EBU protocol but with a 75 ohm coaxial cable carrying a one-volt signal so that it can be handled by analog video distribution amplifiers. Impedance converting transformers are commercially available, allowing balanced 110 ohm to unbalanced 75 ohm matching.

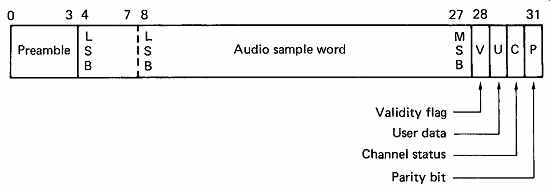

4. Frame structure

In FIG. 5 the basic structure of the professional and consumer formats can be seen. One subframe consists of 32 bit-cells, of which four will be used by a synchronizing pattern. Subframes from the two audio channels, A and B, alternate on a time-division basis. Up to 24-bit sample wordlength can be used, which should cater for all conceivable future developments, but normally 20-bit maximum length samples will be available with four auxiliary data bits, which can be used for a voice grade channel in a professional application. In a consumer DAT machine, subcode can be transmitted in bits 4-11, and the sixteen-bit audio in bits 12-27.

FIG. 5 The basic subframe structure of the AES/EBU format. Sample can be 20 bits with four auxiliary bits, or 24 bits. LSB is transmitted first.

Preceding formats sent the most significant bit first. Since this was the order in which bits were available in successive approximation convertors it has become a de-facto standard for inter-chip transmission inside equipment. In contrast, this format sends the least significant bit first. One advantage of this approach is that simple serial arithmetic is then possible on the samples because the carries produced by the operation on a given bit can be delayed by one bit period and then included in the operation on the next higher-order bit. There is additional complication, however, if it is proposed to build adaptors from one of the manufacturers' formats to the new format because of the word reversal. This problem is a temporary issue, as new machines are designed from the outset to have the standard connections.

The format specifies that audio data must be in two's complement coding. Whilst pure binary could accept various alignments of different wordlengths with only a level change, this is not true of two's complement. If different wordlengths are used, the MSBs must always be in the same bit position otherwise the polarity will be misinterpreted.

Thus the MSB has to be in bit 27 irrespective of wordlength. Shorter words are leading zero filled up to the 20-bit capacity. From AES-3-1992 onwards, the channel status data included signaling of the actual audio wordlength used, so that receiving devices could adjust the digital dithering level needed to shorten a received word which is too long, or pack samples onto a disk more efficiently.

Four status bits accompany each subframe. The validity flag will be reset if the associated sample is reliable. Whilst there have been many aspirations regarding what the V bit could be used for, in practice a single bit cannot specify much, and if combined with other V bits to make a word, the time resolution is lost. AES-3-1992 described the V bit as indicating that the information in the associated subframe is 'suitable for conversion to an analog signal'. Thus it might be set if the interface was being used for non-audio data as is done, for example, in CD-I players.

The parity bit produces even parity over the subframe, such that the total number of ones in the subframe is even. This allows for simple detection of an odd number of bits in error, but its main purpose is that it makes successive sync patterns have the same polarity, which can be used to improve the probability of detection of sync. The user and channel-status bits are discussed later.
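The subframe layout and the parity rule can be illustrated with a short sketch. It assumes a sample of up to 20 bits with four auxiliary bits, models only slots 4-31 (the preamble in slots 0-3 violates the channel code and is not data), and assumes the four status bits are sent in the order V, U, C, P, which the text above does not spell out. The function name is hypothetical.

```python
def pack_subframe(sample, wordlength=20, aux=0, v=0, u=0, c=0):
    """Return slots 4-31 of one subframe as a list of 28 bits.

    List index 0 corresponds to slot 4. The audio word is two's
    complement, sent LSB first, with its MSB always in slot 27.
    """
    if not 1 <= wordlength <= 20:
        raise ValueError("this sketch handles 1..20-bit samples only")
    word = sample & ((1 << wordlength) - 1)      # two's complement wrap
    field = word << (20 - wordlength)            # align MSB to slot 27
    bits = [(aux >> i) & 1 for i in range(4)]    # slots 4-7: auxiliary
    bits += [(field >> i) & 1 for i in range(20)]  # slots 8-27, LSB first
    bits += [v, u, c]                            # slots 28-30 (assumed order)
    bits.append(sum(bits) & 1)                   # slot 31: makes parity even
    return bits


sub = pack_subframe(-1, wordlength=16)   # 16-bit sample of all ones
print(sub[4:8], sub[8:24], sum(sub) % 2)
```

For the 16-bit example the four lowest audio slots are zero-filled and the sixteen sample bits occupy slots 12-27, so the MSB lands in slot 27 regardless of wordlength.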

Two of the subframes described above make one frame, which repeats at the sampling rate in use. The first subframe will contain the sample from channel A, or from the left channel in stereo working. The second subframe will contain the sample from channel B, or the right channel in stereo. At 48 kHz, the bit rate will be 3.072 Mbit/s, but as the sampling rate can vary, the clock rate will vary in proportion.

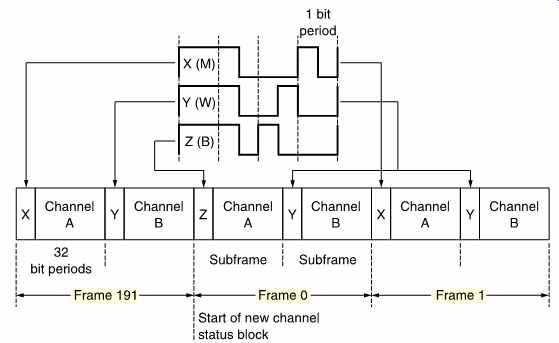

FIG. 6 Three different preambles (X, Y and Z) are used to synchronize a receiver at the starts of subframes.

In order to separate the audio channels on receipt the synchronizing patterns for the two subframes are different as FIG. 6 shows. These sync patterns begin with a run length of 1.5 bits which violates the FM channel coding rules and so cannot occur due to any data combination.

The type of sync pattern is denoted by the position of the second transition which can be 0.5, 1.0 or 1.5 bits away from the first. The third transition is designed to make the sync patterns DC-free.

The channel status and user bits in each subframe form serial data streams with one bit of each per audio channel per frame. The channel status bits are given a block structure and synchronized every 192 frames, which at 48 kHz gives a block rate of 250Hz, corresponding to a period of four milliseconds. In order to synchronize the channel status blocks, the channel A sync pattern is replaced for one frame only by a third sync pattern which is also shown in FIG. 6. The AES standard refers to these as X,Y and Z whereas IEC 958 calls them M,W and B. As stated, there is a parity bit in each subframe, which means that the binary level at the end of a subframe will always be the same as at the beginning. Since the sync patterns have the same characteristic, the effect is that sync patterns always have the same polarity and the receiver can use that information to reject noise. The polarity of transmission is not specified, and indeed an accidental inversion in a twisted pair is of no consequence, since it is only the transition that is of importance, not the direction.
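The rates quoted for 48 kHz follow directly from the frame structure, and the same arithmetic applies at any other sampling rate. The following is a small sketch of that calculation; the function and key names are illustrative.

```python
SUBFRAME_BITS = 32              # bit cells per subframe
SUBFRAMES_PER_FRAME = 2         # channels A and B
FRAMES_PER_STATUS_BLOCK = 192   # channel status block length in frames


def interface_rates(fs_hz):
    """Derive the serial bit rate and channel-status block timing."""
    bit_rate = fs_hz * SUBFRAMES_PER_FRAME * SUBFRAME_BITS
    block_rate_hz = fs_hz / FRAMES_PER_STATUS_BLOCK
    return {
        "bit_rate": bit_rate,
        "block_rate_hz": block_rate_hz,
        "block_period_ms": 1000 / block_rate_hz,
    }


for fs in (32000, 44100, 48000):
    print(fs, interface_rates(fs))
```

At 48 kHz this gives 3 072 000 bits per second, a block rate of 250 Hz and a block period of 4 ms, matching the figures in the text.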

5. Talkback in auxiliary data

When 24-bit resolution is not required, which is most of the time, the four auxiliary bits can be used to provide talkback.

This was proposed by broadcasters [10] to allow voice coordination between studios as well as program exchange on the same cables.

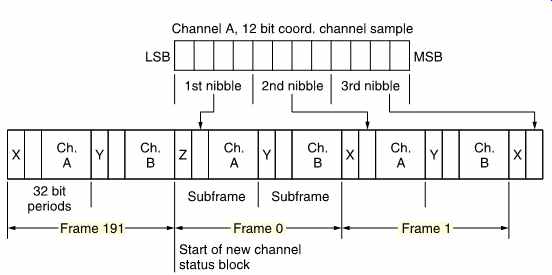

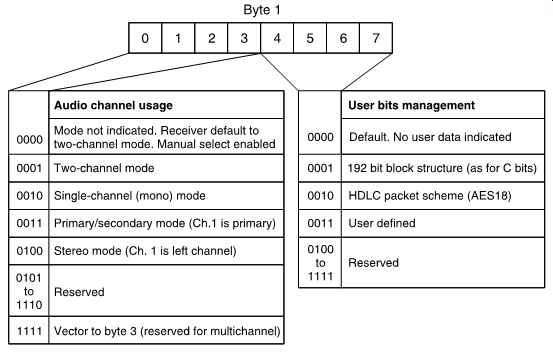

Twelve-bit samples of the talkback signal are taken at one third the main sampling rate. Each twelve-bit sample is then split into three nibbles (half a byte, for gastronomers) which can be sent in the auxiliary data slot of three successive samples in the same audio channel. As there are 192 nibbles per channel status block period, there will be exactly 64 talkback samples in that period. The reassembly of the nibbles can be synchronized by the channel status sync pattern as shown in FIG. 7. Channel status byte 2 reflects the use of auxiliary data in this way.
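The nibble splitting can be sketched as below. The order in which the three nibbles are sent (least significant first here) is an assumption made for illustration; the text only states that three successive auxiliary slots carry one twelve-bit sample.

```python
def split_talkback(sample12):
    """Split a 12-bit talkback sample into three 4-bit nibbles.

    Nibble order (least significant first) is an assumption.
    """
    return [(sample12 >> shift) & 0xF for shift in (0, 4, 8)]


def join_talkback(nibbles):
    """Reassemble three nibbles, in the same assumed order."""
    low, mid, high = nibbles
    return low | (mid << 4) | (high << 8)


NIBBLES_PER_BLOCK = 192                   # one auxiliary nibble per frame
print(NIBBLES_PER_BLOCK // 3)             # talkback samples per status block
print(join_talkback(split_talkback(0xABC)) == 0xABC)
```

Since 192 divides exactly by three, the grouping of nibbles never drifts relative to the channel status block, which is what allows the block sync pattern to align the reassembly.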

FIG. 7 The coordination signal is of a lower bit rate than the main audio and thus may be inserted in the auxiliary nibble of the interface subframe, taking three subframes per coordination sample.

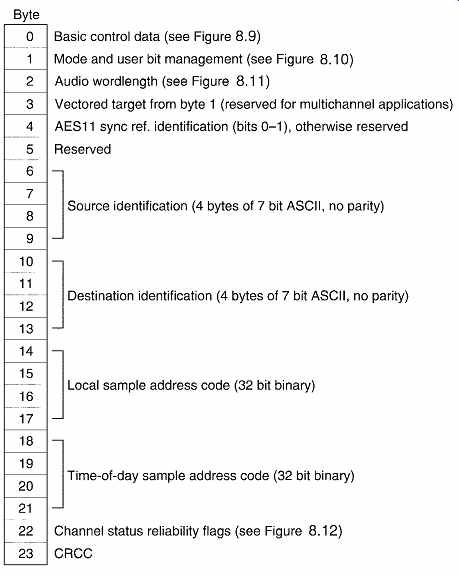

6. Professional channel status

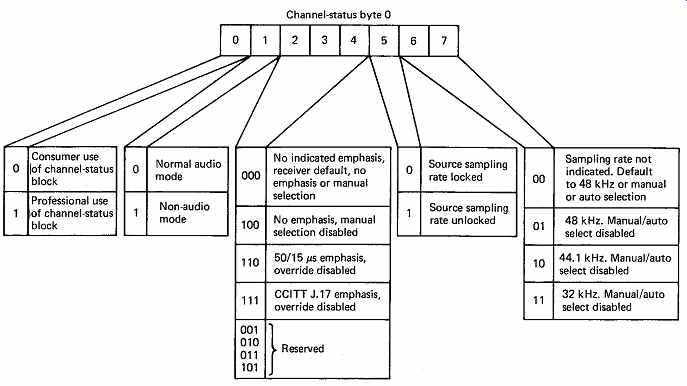

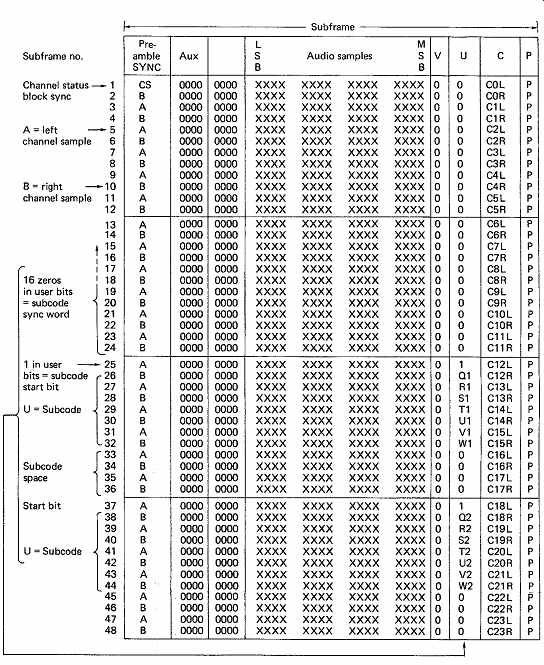

In both the professional and consumer formats, the sequence of channel-status bits over 192 subframes builds up a 24-byte channel-status block. However, the contents of the channel status data are completely different between the two applications. The professional channel status structure is shown in FIG. 8. Byte 0 determines the use of emphasis and the sampling rate, with details in FIG. 9. Byte 1 determines the channel usage mode, i.e. whether the data transmitted are a stereo pair, two unrelated mono signals or a single mono signal, and details the user bit handling. FIG. 10 gives details. Byte 2 determines wordlength as in FIG. 11. This was made more comprehensive in AES-3-1992. Byte 3 is applicable only to multichannel applications. Byte 4 indicates the suitability of the signal as a sampling rate reference and will be discussed in more detail later in this section.

FIG. 8 Overall format of the professional channel status block.

FIG. 9 The first byte of the channel-status information in the AES/EBU standard deals primarily with emphasis and sampling-rate control.

FIG. 10 Format of byte 1 of professional channel status.

FIG. 11 Format of byte 2 of professional channel status.

There are two slots of four bytes each which are used for alphanumeric source and destination codes. These can be used for routing. The bytes contain seven-bit ASCII characters (printable characters only) sent LSB first with the eighth bit set to zero according to AES-3-1992. The destination code can be used to operate an automatic router, and the source code will allow the origin of the audio and other remarks to be displayed at the destination.

Bytes 14-17 convey a 32-bit sample address which increments every channel status frame. It effectively numbers the samples in a relative manner from an arbitrary starting point. Bytes 18-21 convey a similar number, but this is a time-of-day count, which starts from zero at midnight. As many digital audio devices do not have real-time clocks built in, this cannot be relied upon.

AES-3-1992 specified that the time-of-day bytes should convey the real time at which a recording was made, making it rather like timecode.

There are enough combinations in 32 bits to allow a sample count over 24 hours at 48 kHz. The sample count has the advantage that it is universal and independent of local supply frequency. In theory if the sampling rate is known, conventional hours, minutes, seconds, frames timecode can be calculated from the sample count, but in practice it is a lengthy computation and users have proposed alternative formats in which the data from EBU or SMPTE timecode are transmitted directly in these bytes. Some of these proposals are in service as de-facto standards.
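The capacity claim and the conversion to conventional timecode can be checked numerically. The sketch below assumes an integer frame rate such as the 25 frames per second of EBU timecode; drop-frame schemes are not handled, and the function names are illustrative.

```python
def fits_in_32_bits(fs_hz, hours=24):
    """True if a sample count covering `hours` fits in a 32-bit field."""
    return fs_hz * 3600 * hours <= 2 ** 32


def sample_count_to_timecode(count, fs_hz=48000, fps=25):
    """Convert a sample count to (hours, minutes, seconds, frames).

    Assumes an integer frame rate; fps=25 corresponds to EBU timecode.
    """
    total_seconds, remainder = divmod(count, fs_hz)
    frames = remainder * fps // fs_hz
    hours, rest = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    return hours, minutes, seconds, frames


print(fits_in_32_bits(48000))                          # 24 h at 48 kHz
print(sample_count_to_timecode(48000 * 3661 + 1920))   # 1 h 1 min 1 s + 1 frame
```

A day at 48 kHz is 4 147 200 000 samples, just under the 4 294 967 296 values available in 32 bits, so the margin is small and would not stretch to a higher sampling rate over the same period.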

The penultimate byte contains four flags which indicate that certain sections of the channel-status information are unreliable (see FIG. 12).

This allows the transmission of an incomplete channel-status block where the entire structure is not needed or where the information is not available. For example, setting bit 5 to a logical one would mean that no origin or destination data would be interpreted by the receiver, and so it need not be sent.

The final byte in the message is a CRCC which converts the entire channel-status block into a codeword (see Section 7). The channel status message takes 4ms at 48 kHz and in this time a router could have switched to another signal source. This would damage the transmission, but will also result in a CRCC failure so the corrupt block is not used.

Error correction is not necessary, as the channel status data are either stationary, i.e. they stay the same, or change at a predictable rate, e.g. timecode. Stationary data will only change at the receiver if a good CRCC is obtained.
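The accept-or-discard behavior described above can be sketched with a generic eight-bit CRC. The polynomial x^8 + x^4 + x^3 + x^2 + 1 with an all-ones starting value is used here as an assumption, and the bit ordering of this sketch (most significant bit first within each byte) is not claimed to match the interface, so the check values it produces should not be expected to agree with real equipment. The function names are hypothetical.

```python
def crc8(data, poly=0x1D, init=0xFF):
    """Generic bitwise CRC-8, MSB first (parameters are assumptions)."""
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def make_block(first_23_bytes):
    """Append the check byte to form a 24-byte channel-status block."""
    return bytes(first_23_bytes) + bytes([crc8(first_23_bytes)])


def accept_block(block24, previous):
    """Use the new block only if its check byte is good, else keep the old."""
    if crc8(block24[:23]) == block24[23]:
        return block24
    return previous


good = make_block(bytes(23))
damaged = bytes([good[0] ^ 0x04]) + good[1:]   # one bit corrupted in transit
print(accept_block(damaged, good) == good)
```

Because stationary data are simply held until a block with a good check byte arrives, a block spanning a router switch is ignored rather than repaired.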

7. Consumer channel status

For consumer use, a different version of the channel-status specification is used. As new products come along, the consumer subcode expands its scope.

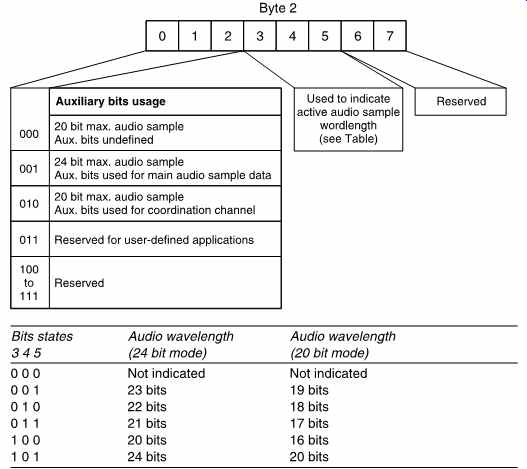

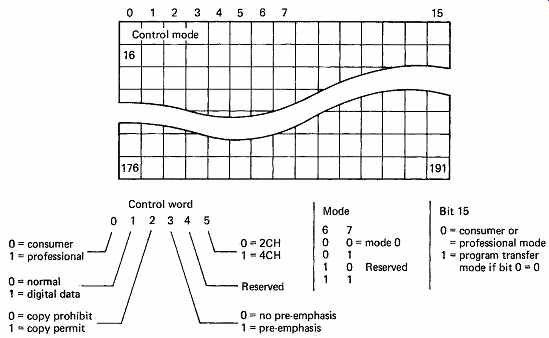

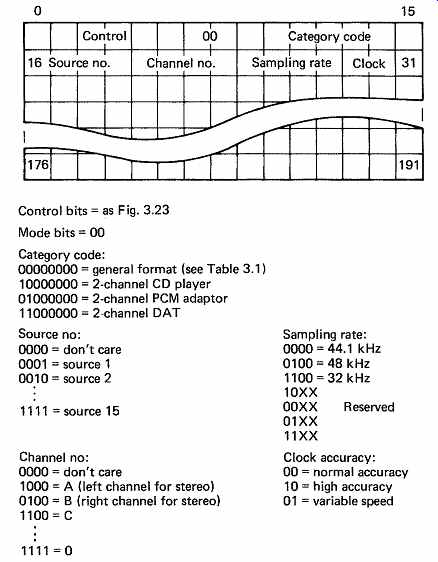

FIG. 13 shows that the serial data bits are assembled into twelve words of sixteen bits each. In the general format, the first six bits of the first word form a control code, and the next two bits permit a mode select for future expansion. At the moment only mode zero is standardized, and the three remaining codes are reserved.

FIG. 13 The general format of the consumer version of channel status. Bit 0 has the same meaning as in the professional format for compatibility. Bits 6-7 determine the consumer format mode, and presently only mode 0 is defined (see FIG. 14).

FIG. 14 shows the bit allocations for mode zero. In addition to the control bits, there are a category code, a simplified version of the AES/EBU source field, a field which specifies the audio channel number for multichannel working, a sampling-rate field, and a sampling-rate tolerance field.

Originally the consumer format was incompatible with the professional format, since bit zero of channel status would be set to a one by a four-channel consumer machine, and this would confuse a professional receiver because bit zero specifies professional format. The EBU proposed to the IEC that the four-channel bit be moved to bit 5 of the consumer format, so that bit zero would always then be zero. This proposal is incorporated into the bit definitions of FIG. 13 and FIG. 14.

The category code specifies the type of equipment which is transmitting, and its characteristics. In fact each category of device can output one of two category codes, depending on whether bit 15 is or is not set. Bit 15 is the 'L-bit' and indicates whether the signal is from an original recording (0) or from a first-generation copy (1) as part of the SCMS (Serial Copying Management System) first implemented to resolve the stalemate over the sale of consumer DAT machines. In conjunction with the copyright flag, a receiving device can determine whether to allow or disallow recording. There were originally four categories: general purpose, two-channel CD player, two-channel PCM adaptor and two-channel digital tape recorder (DAT), but the list has now extended as FIG. 14 shows.

FIG. 14 In consumer mode 0, the significance of the first two sixteen-bit channel-status words is shown here. The category codes are expanded in Tables 1 and 2.

========

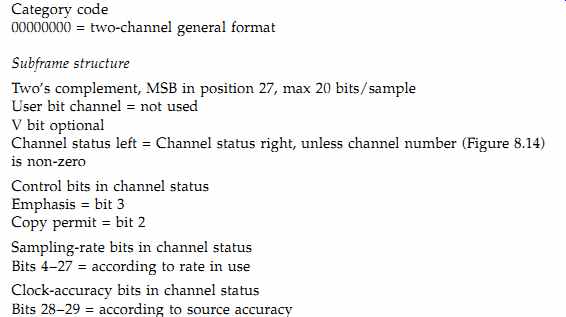

Table 1 The general category code causes the subframe structure of the transmission to be interpreted as below (see FIG. 5) and the stated channel-status bits are valid.

Category code: 00000000 = two-channel general format

Subframe structure: two's complement, MSB in position 27, max 20 bits/sample

User bit channel: not used

V bit: optional

Channel status: left = right, unless channel number (FIG. 14) is non-zero

Control bits in channel status: emphasis = bit 3; copy permit = bit 2

Sampling-rate bits in channel status: bits 24-27 = according to rate in use

Clock-accuracy bits in channel status: bits 28-29 = according to source accuracy

Table 2 In the CD category, the meaning below is placed on the transmission. The main difference from the general category is the use of user bits for subcode as specified in FIG. 15.

Category code: 10000000 = two-channel CD player

Subframe structure: two's complement, MSB in position 27, 16 bits/sample

User bit channel: CD subcode (see FIG. 15)

V bit: optional

Control bits in channel status: derived from Q subcode control bits (see Section 12)

Sampling-rate bits in channel status: bits 24-27 = 0000 = 44.1 kHz

Clock-accuracy bits in channel status: bits 28-29 = according to source accuracy and use of variable speed

=========

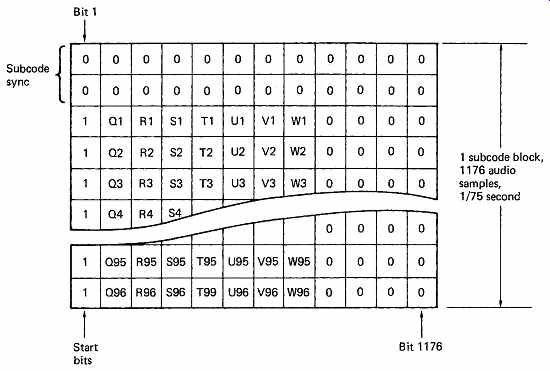

Table 1 illustrates the format of the subframes in the general purpose category. When used with CD players, Table 2 applies. In this application, the extensive subcode data of a CD recording (see Section 12) can be conveyed down the interface. In every CD sync block, there are twelve audio samples, and eight bits of subcode, P-W. The P flag is not transmitted, since it is solely positioning information for the player; thus only Q-W are sent. Since the interface can carry one user bit for every sample, there is surplus capacity in the user-bit channel for subcode. A CD subcode block is built up over 98 sync blocks, and has a repetition rate of 75Hz. The start of the subcode data in the user bitstream will be seen in FIG. 15 to be denoted by a minimum of sixteen zeros, followed by a start bit which is always set to one.

Immediately after the start bit, the receiver expects to see seven subcode bits, Q-W. Following these, another start bit and another seven bits may follow immediately, or a space of up to eight zeros may be left before the next start bit. This sequence repeats 98 times, when another sync pattern will be expected. The ability to leave zeros between the subcode symbols simplifies the handling of the disparity between user bit capacity and subcode bit rate. FIG. 16 shows a representative example of a transmission from a CD player.
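The packing of subcode into the user bitstream, and the spare capacity that the optional zeros absorb, can be sketched as follows. The order of Q to W within each seven-bit symbol (Q sent first, taken from the most significant bit of the symbol value) is an assumption for illustration, and a uniform gap is used although the text allows the gap to vary from symbol to symbol. The function name is hypothetical.

```python
SYNC_BLOCKS = 98
SAMPLES_PER_SYNC_BLOCK = 12
USER_BITS_PER_SUBCODE_BLOCK = SYNC_BLOCKS * SAMPLES_PER_SYNC_BLOCK  # 1176


def pack_subcode(symbols, gap=0):
    """Build the user bitstream for one subcode block.

    symbols: seven-bit values holding Q-W for each of the 98 sync blocks.
    gap: number of zeros left after each symbol (0..8 per the text).
    """
    if not 0 <= gap <= 8:
        raise ValueError("gap must be between 0 and 8 zeros")
    bits = [0] * 16                        # sync: at least sixteen zeros
    for symbol in symbols:
        bits.append(1)                     # start bit, always one
        bits += [(symbol >> i) & 1 for i in range(6, -1, -1)]  # Q..W (assumed order)
        bits += [0] * gap                  # optional zero packing
    return bits


stream = pack_subcode([0x7F] * SYNC_BLOCKS)
print(len(stream), USER_BITS_PER_SUBCODE_BLOCK - len(stream))
```

With no gaps the block needs 800 user bits out of the 1176 available, which leaves 376 bits to be taken up by zeros between symbols or by a longer run of zeros before the next block.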

In a PCM adaptor, there is no subcode, and the only ancillary information available from the recording consists of copy-protect and emphasis bits. In other respects, the format is the same as the general purpose format.

FIG. 15 In CD, one subcode block is built up over 98 sync blocks. In this period there will be 1176 audio samples, and so there are 1176 user bits available to carry the subcode. There is insufficient subcode information to fill this capacity, and zero packing is used.

FIG. 16 Compact Disc subcode transmitted in user bits of serial interface.

When a DAT player is used with the interface, the user bits carry several items of information. [11] Once per drum revolution, the user bit in one subframe is raised when that subframe contains the first sample of the interleave block (see Section 9). This can be used to synchronize several DAT machines together for editing purposes. Immediately following the sync bit, start ID will be transmitted when the player has found the code on the tape. This must be asserted for 300 ± 30 drum revolutions, or about 10 seconds. In the third bit position the skip ID is transmitted when the player detects a skip command on the tape. This indicates that the player will go into fast forward until it detects the next start ID. The skip ID must be transmitted for 33 ± 3 drum rotations. Finally DAT supports an end-of-skip command which terminates a skip when it is detected. This allows jump editing (see Section 11) to omit short sections of the recording. DAT can also transmit the track number (TNO) of the track being played down the user bitstream.

8. User bits

The user channel consists of one bit per audio channel per sample period. Unlike channel status, which only has a 192-bit frame structure, the user channel can have a flexible frame length. FIG. 10 showed how byte 1 of the channel status frame describes the state of the user channel. Many professional devices do not use the user channel at all and would set the all-zeros code. If the user channel frame has the same length as the channel status frame then code 0001 can be set. One user channel format which is standardized is the data packet scheme of AES18-1992. [12,13]

This was developed from proposals to employ the user channel for labeling in an asynchronous format. [14]

A computer industry standard protocol known as HDLC (High-level Data Link Control) [15] is employed in order to take advantage of readily available integrated circuits.

The frame length of the user channel can be conveniently made equal to the frame period of an associated device. For example, it may be locked to Film, TV or DAT frames. The frame length may vary in NTSC as there is not an integer number of samples in a frame.
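The NTSC case can be made concrete with exact arithmetic. The sketch below takes the NTSC frame rate as 30000/1001 frames per second and uses 48 kHz as an example sampling rate; the function name is illustrative.

```python
from fractions import Fraction

NTSC_FRAME_RATE = Fraction(30000, 1001)   # approximately 29.97 frames/s


def samples_per_frame(fs_hz, frame_rate):
    """Exact number of audio samples in one video or film frame."""
    return Fraction(fs_hz) / Fraction(frame_rate)


ntsc = samples_per_frame(48000, NTSC_FRAME_RATE)
pal = samples_per_frame(48000, 25)
print(ntsc, float(ntsc))   # a non-integer number of samples per frame
print(pal)                 # an integer number at 25 frames/s
```

At 48 kHz an NTSC frame spans 8008/5, or 1601.6, samples, so a frame-locked user channel would have to vary its length, for example over a repeating sequence of five frames containing 8008 samples in total.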

9. MADI - Multi-channel audio digital interface

Whilst the AES/EBU digital interface excels for the interconnection of stereo equipment, it is at a disadvantage when a large number of channels is required. MADI [16] was designed specifically to address the requirement for digital connection between multitrack recorders and mixing consoles by a working group set up jointly by Sony, Mitsubishi, Neve and SSL.

The standard provides for 56 simultaneous digital audio channels which are conveyed point-to-point on a single 75 ohm coaxial cable fitted with BNC connectors (as used for analog video) along with a separate synchronization signal. A distance of at least 50m can be achieved.

Essentially MADI takes the subframe structure of the AES/EBU interface and multiplexes 56 of these into one sample period rather than the original two. Clearly this will result in a considerable bit rate, and the FM channel code of the AES/EBU standard would require excessive bandwidth. A more efficient code is used for MADI. In the AES/EBU interface the data rate is proportional to the sampling rate in use. Losses will be greater at the higher bit rate of MADI, and the use of a variable bit rate in the channel would make the task of achieving optimum equalization difficult. Instead the data bit rate is made a constant 100 megabits per second, irrespective of sampling rate. At lower sampling rates, the audio data are padded out to maintain the channel rate.

The MADI standard is effectively a superset of the AES/EBU interface in that the subframe data content is identical. This means that a number of separate AES/EBU signals can be fed into a MADI channel and recovered in their entirety on reception. The only caution required with such an application is that all channels must have the same synchronized sampling rate. The primary application of MADI is to multitrack recorders, and in these machines the sampling rates of all tracks are intrinsically synchronous. When the replay speed of such machines is varied, the sampling rate of all channels will change by the same amount, so they will remain synchronous.

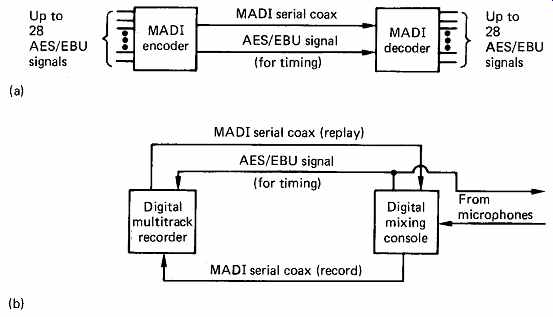

FIG. 17 Some typical MADI applications. In (a) a large number of two-channel digital signals are multiplexed into the MADI cable to achieve economy of cabling. Note the separate timing signal. In (b) a pair of MADI links is necessary to connect a recorder to a mixing console. A third MADI link could be used to feed microphones into the desk from remote convertors.

At one extreme, MADI will accept a 32 kHz recorder playing 12 1/2 percent slow, and at the other extreme a 48 kHz recorder playing 12 1/2 percent fast. This is almost a factor of 2:1. FIG. 17 shows some typical MADI configurations.
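The speed range and the resulting demand on the fixed 100 megabit per second data rate can be worked through numerically. This is a sketch using only the figures in the text (56 channels of 32 bits each); the function names are illustrative.

```python
CHANNELS = 56
BITS_PER_CHANNEL = 32
DATA_RATE = 100_000_000          # constant data bit rate of the link


def madi_payload_rate(fs_hz):
    """Audio data bits per second needed at a given sampling rate."""
    return CHANNELS * BITS_PER_CHANNEL * fs_hz


def padding_fraction(fs_hz):
    """Share of the data rate that must be filled with padding."""
    return 1 - madi_payload_rate(fs_hz) / DATA_RATE


slowest = 32000 * 7 // 8         # 32 kHz played 12 1/2 percent slow
fastest = 48000 * 9 // 8         # 48 kHz played 12 1/2 percent fast
print(slowest, fastest, fastest / slowest)
print(madi_payload_rate(fastest), padding_fraction(fastest))
```

The extremes are 28 kHz and 54 kHz, a ratio of about 1.93, and even at 54 kHz the 56 channels need 96.768 Mbit/s, which fits within the 100 Mbit/s data rate with a little padding to spare.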

10. MADI data transmission

The data transmission of MADI is made using a group code, where groups of four data bits are represented by groups of five channel bits. Four bits have sixteen combinations, whereas five bits have 32 combinations.

Clearly only 16 out of these 32 are necessary to convey all possible data. It is then possible to use some of the remaining patterns when it is required to pad out the data rate. The padding symbols will not correspond to a valid data symbol and so they can be recognized and thrown away on reception.

A further use of this coding technique is that the 16 patterns of 5 bits which represent real data are chosen to be those which will have the best balance between high and low states, so that DC offsets at the receiver can be minimized. Section 6 discussed the coding rules of 4/5 in MADI. The 4/5 code adopted is the same one used for a computer transmission format known as FDDI, so that existing hardware can be used.
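A group code of this kind can be sketched with a lookup table. The table below is the set of 4B/5B data symbols as commonly published for FDDI; the two padding symbols used in the example are taken to be the FDDI J and K symbols, which is an assumption for illustration rather than something stated in the text. The function names are hypothetical.

```python
# 4-bit data value -> 5-bit channel symbol (FDDI 4B/5B data symbols)
ENCODE = {
    0x0: "11110", 0x1: "01001", 0x2: "10100", 0x3: "10101",
    0x4: "01010", 0x5: "01011", 0x6: "01110", 0x7: "01111",
    0x8: "10010", 0x9: "10011", 0xA: "10110", 0xB: "10111",
    0xC: "11010", 0xD: "11011", 0xE: "11100", 0xF: "11101",
}
DECODE = {symbol: value for value, symbol in ENCODE.items()}

PADDING = ["11000", "10001"]     # assumed padding symbols (FDDI J and K)


def encode_nibbles(nibbles):
    """Map each 4-bit value to its 5-bit channel symbol."""
    return [ENCODE[n] for n in nibbles]


def decode_symbols(symbols):
    """Recover data, discarding any symbol that is not a data symbol."""
    return [DECODE[s] for s in symbols if s in DECODE]


sent = encode_nibbles([0x1, 0x2]) + PADDING + encode_nibbles([0xF])
print(decode_symbols(sent))
```

Because the padding patterns are outside the sixteen data symbols, the receiver can drop them without any side information, leaving only the original data values.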

11. MADI frame structure

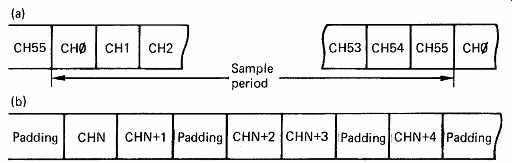

FIG. 18 In (a) all 56 channels are sent in numerical order serially during the sample period. For simplicity no padding symbols are shown here. In (b) the use of padding symbols is illustrated. These are necessary to maintain the channel bit rate at 125 Mbit/s irrespective of the sample rate in use. Padding can be inserted flexibly, but it must only be placed between the channels.

FIG. 18(a) shows the frame structure of MADI. In one sample period, 56 time slots are available, and these each contain eight 4/5 symbols, corresponding to 32 data bits or 40 channel bits. Depending on the sampling rate in use, more or fewer padding symbols will need to be inserted in the frame to maintain a constant channel bit rate. Since the receiver does not interpret the padding symbols as data, it is effectively blind to them, and so there is considerable latitude in the allowable positions of the padding. FIG. 18(b) shows some possibilities. The padding must not be inserted within a channel, only between channels, but the channels need not necessarily be separated by padding. At one extreme, all channels can be butted together, followed by a large padding area, or the channels can be evenly spaced throughout the frame.

Although this sounds rather vague, it is intended to allow freedom in the design of associated hardware. Multitrack recorders generally have some form of internal multiplexed data bus, and these have various architectures and protocols. The timing flexibility allows an existing bus timing structure to be connected to a MADI link with the minimum of hardware. Since the channels can be inserted at a variety of places within the frame, the necessity of a separate synchronizing link between transmitter and receiver becomes clear.

12. MADI audio channel format

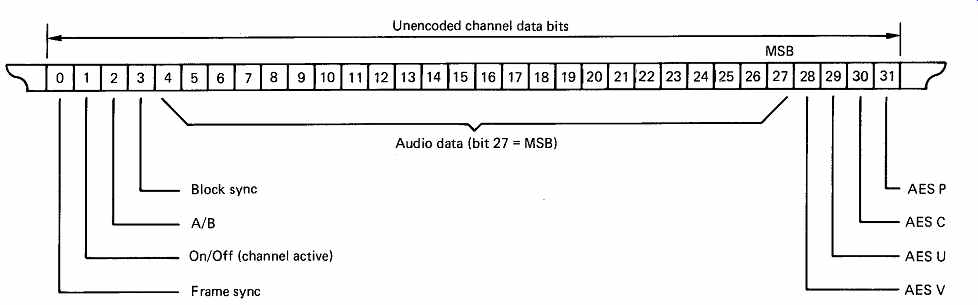

FIG. 19 The MADI channel data are shown here. The last 28 bits are identical in every way to the AES/EBU interface, but the synchronizing in the first four bits differs. There is a frame sync bit to identify channel 0, and a channel active bit. The A/B leg of a possible AES/EBU input to MADI is conveyed, as is the channel status block sync.

FIG. 19 shows the MADI channel format, which should be compared with the AES/EBU subframe shown in FIG. 5. The last 28 bits are identical, and differences are only apparent in the synchronizing area. In order to remain transparent to an AES/EBU signal, which can contain two audio channels, MADI must tell the receiver whether a particular channel contains the A leg or B leg, and when the AES/EBU channel status block sync occurs. Bits 2 and 3 perform these functions. As the 56 channels of MADI follow one another in numerical order, it is necessary to identify channel zero so that the channels are not mixed up. This is the function of bit 0, which is set in channel zero and reset in all other channels. Finally bit 1 is set to indicate an active channel, for the case when fewer than 56 channels are being fed down the link. Active channels have bit 1 set, and must be consecutive starting at channel zero. Inactive channels have all bits set to zero, and must follow the active channels.
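The rules for the four synchronizing bits can be expressed as a short sketch. Bits 0 and 1 follow the description above. The assignment of the A/B leg to bit 2 and the block sync to bit 3, and the choice that a set bit 2 means the B leg, are assumptions made for illustration, since the text only says that bits 2 and 3 share these functions. The function names are hypothetical.

```python
FRAME_SYNC = 1 << 0  # bit 0: set in channel zero only
ACTIVE = 1 << 1      # bit 1: set in every active channel
B_LEG = 1 << 2       # assumption: bit 2 set marks the B leg of an AES/EBU pair
BLOCK_SYNC = 1 << 3  # assumption: bit 3 marks channel status block sync

TOTAL_CHANNELS = 56


def sync_bits(channel, active, b_leg=False, block_sync=False):
    """Build the four synchronizing bits for one MADI channel."""
    if not active:
        return 0  # inactive channels have all bits set to zero
    bits = ACTIVE
    if channel == 0:
        bits |= FRAME_SYNC
    if b_leg:
        bits |= B_LEG
    if block_sync:
        bits |= BLOCK_SYNC
    return bits


def active_layout_valid(headers):
    """True if the active channels are consecutive from channel zero."""
    flags = [bool(h & ACTIVE) for h in headers]
    count = sum(flags)
    return flags == [True] * count + [False] * (len(flags) - count)


# Example: 24 active channels fed from 12 AES/EBU pairs, the rest inactive.
headers = [sync_bits(ch, active=ch < 24, b_leg=ch % 2 == 1)
           for ch in range(TOTAL_CHANNELS)]
print(active_layout_valid(headers))
```

A receiver could use a check like `active_layout_valid` to reject a frame in which an active channel appears after an inactive one.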

13. Fiber-optic interfacing

Whereas a parallel bus is ideal for a distributed multichannel system, for a point-to-point connection, the use of fiber optics is feasible, particularly as distance increases. An optical fiber is simply a glass filament which is encased in such a way that light is constrained to travel along it.

Transmission is achieved by modulating the power of an LED or small laser coupled to the fiber. A phototransistor converts the received light back to an electrical signal.

Optical fibers have numerous advantages over electrical cabling. The bandwidth available is staggering. Optical fibers neither generate, nor are prone to, electromagnetic interference and, as they are insulators, ground loops cannot occur. [17] The disadvantage of optical fibers is that the terminations of the fiber where transmitters and receivers are attached suffer optical losses, and while these can be compensated in point-to-point links, the use of a bus structure is not really feasible. Fiber-optic links are already in service in digital audio mixing consoles. [18]

The fiber implementation developed by Toshiba, known as TOSLink, is popular in consumer products, and its protocol is identical to the consumer electrical format.

14. Synchronizing

When digital audio signals are to be assembled from a variety of sources, either for mixing down or for transmission through a TDM (time-division multiplexing) system, the samples from each source must be synchronized to one another in both frequency and phase. The source of samples must be fed with a reference sampling rate from some central generator, and will return samples at that rate. The same will be true if digital audio is being used in conjunction with VTRs. As the scanner speed and hence the audio block rate is locked to video, it follows that the audio sampling rate must be locked to video. Such a technique has been used since the earliest days of television in order to allow vision mixing, but now that audio is conveyed in discrete samples, these too must be genlocked to a reference for most production purposes.

AES11-1991 [19] documents standards for digital audio synchronization and requires professional equipment to be able to genlock either to a separate reference input or to the sampling rate of an AES/EBU input.

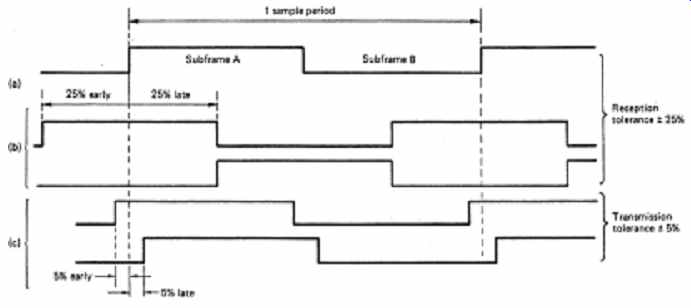

FIG. 20 The timing accuracy required in AES/EBU signals with respect to a reference (a). Inputs over the range shown at (b) must be accepted, whereas outputs must be closer in timing to the reference as shown at (c).

As the interface uses serial transmission, a shift register is required in order to return the samples to parallel format within equipment. The shift register is generally buffered with a parallel loading latch which allows some freedom in the exact time at which the latch is read with respect to the serial input timing. Accordingly the standard defines synchronism as an identical sampling rate, but with no requirement for a precise phase relationship. FIG. 20 shows the timing tolerances allowed. The beginning of a frame (the frame edge) is defined as the leading edge of the X preamble. A device which is genlocked must correctly decode an input whose frame edges are within ± 25 percent of the sample period.

This is quite a generous margin, and corresponds to the timing shift due to putting about a kilometer of cable in series with a signal. In order to prevent tolerance build-up when passing through several devices in series, the output timing must be held within ± 5 percent of the sample period.
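The figure of about a kilometer can be checked with a little arithmetic. The sketch below assumes a 48 kHz sampling rate and a cable propagation velocity of roughly two-thirds the speed of light; neither value comes from the text, and `timing_window` is a hypothetical helper.

```python
def timing_window(sample_rate_hz, fraction):
    """Timing tolerance in seconds for a given fraction of the sample period."""
    return fraction / sample_rate_hz


SAMPLE_RATE_HZ = 48_000        # assumption for illustration
PROPAGATION_M_PER_S = 2.0e8    # assumption: about two-thirds the speed of light

input_tolerance = timing_window(SAMPLE_RATE_HZ, 0.25)   # receivers: +/- 25 percent
output_tolerance = timing_window(SAMPLE_RATE_HZ, 0.05)  # transmitters: +/- 5 percent
cable_m = input_tolerance * PROPAGATION_M_PER_S

print(f"input tolerance  +/- {input_tolerance * 1e6:.2f} microseconds")
print(f"output tolerance +/- {output_tolerance * 1e6:.2f} microseconds")
print(f"equivalent cable length about {cable_m:.0f} m")
```

Under these assumptions the input tolerance is a little over 5 microseconds, which corresponds to roughly a kilometer of cable, in line with the text.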

The reference signal may be an AES/EBU signal carrying program material, or it may carry muted audio samples, the so-called digital audio silence signal. Alternatively it may just contain the sync patterns. The accuracy of the reference is specified in bits 0 and 1 of byte 4 of channel status (see FIG. 8). Two zeros indicate that the signal is not reference grade (but some equipment may still be able to lock to it). 01 indicates a Grade 1 reference signal which is ±1 ppm accurate, whereas 10 indicates a Grade 2 reference signal which is ±10 ppm accurate. Clearly devices which are intended to lock to one of these references must have an appropriate phase-locked-loop capture range.
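A small lookup makes the grades concrete. The patterns are kept as two-character strings exactly as they are written above, so the sketch makes no claim about how they map onto the physical bit order within byte 4. The 48 kHz rate and the function names are assumptions for illustration.

```python
# Pattern as written in the text -> (description, accuracy in ppm or None).
REFERENCE_GRADES = {
    "00": ("not reference grade", None),
    "01": ("Grade 1", 1.0),
    "10": ("Grade 2", 10.0),
}


def reference_grade(pattern):
    """Look up the grade for a two-bit pattern; '11' is not described in the text."""
    return REFERENCE_GRADES.get(pattern, ("not described in the text", None))


def max_frequency_error_hz(pattern, sample_rate_hz):
    """Largest deviation from the nominal sampling rate, or None if unspecified."""
    _, ppm = reference_grade(pattern)
    return None if ppm is None else sample_rate_hz * ppm * 1e-6


for pattern in ("00", "01", "10"):
    name, _ = reference_grade(pattern)
    print(pattern, name, max_frequency_error_hz(pattern, 48_000))
```

At an assumed 48 kHz, Grade 1 corresponds to an error of at most about 0.048 Hz and Grade 2 to about 0.48 Hz, which gives a feel for the capture range a locking device needs.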

In addition to the AES/EBU synchronization approach, some older equipment carries a word clock input which accepts a TTL level square wave at the sampling frequency. This is the reference clock of the old Sony SDIF-2 interface.

Modern digital audio devices may also have a video input for synchronizing purposes. Video syncs (with or without picture) may be input, and a phase-locked loop will multiply the video frequency by an appropriate factor to produce a synchronous audio sampling clock.
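The multiplication factor is simply the ratio of the sampling rate to the video rate. The sketch below computes it against the frame rate using exact fractions. The frame rates of 25 Hz and 30000/1001 Hz and the 48 kHz sampling rate are assumptions chosen for illustration, and a real phase-locked loop may well lock to a different video frequency, such as the line rate.

```python
from fractions import Fraction


def samples_per_frame(sample_rate_hz, frame_rate_hz):
    """Exact number of audio samples in one video frame."""
    return Fraction(sample_rate_hz) / Fraction(frame_rate_hz)


def frames_per_whole_sample_count(ratio):
    """Smallest number of frames that contains a whole number of samples."""
    return ratio.denominator


# Assumed video frame rates, for illustration only.
rate_25 = samples_per_frame(48_000, 25)
rate_2997 = samples_per_frame(48_000, Fraction(30_000, 1001))

print(rate_25, frames_per_whole_sample_count(rate_25))
print(rate_2997, frames_per_whole_sample_count(rate_2997))
```

With a 25 Hz frame rate the factor is a whole number, whereas with 30000/1001 Hz it is not, so the samples only align with the frame boundary once every several frames.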

15. Asynchronous operation

In practical situations, genlocking is not always possible. In a satellite transmission, it is not really practicable to genlock a studio complex half way around the world to another. Outside broadcasts may be required to generate their own master timing for the same reason. When genlock is not achieved, there will be a slow slippage of sample phase between source and destination due to such factors as drift in timing generators.

This phase slippage will be corrected by a synchronizer, which is intended to work with frequencies that are nominally the same. It should be contrasted with the sampling-rate convertor, which can work with arbitrary, and generally much larger, differences in frequency. Although a sampling-rate convertor can act as a synchronizer, it is a very expensive way of doing the job. A synchronizer can be thought of as a lower-cost version of a sampling-rate convertor which is constrained in the rate difference it can accept.

In one implementation of a digital audio synchronizer, [20] memory is used as a timebase corrector. Samples are written into the memory with the frequency and phase of the source and, when the memory is half-full, samples are read out with the frequency and phase of the destination.

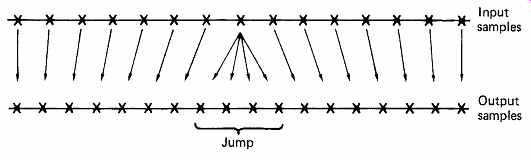

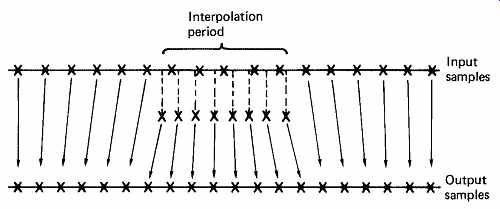

Clearly if there is a net rate difference, the memory will either fill up or empty over a period of time, and in order to re-center the address relationship, it will be necessary to jump the read address. This will cause samples to be omitted or repeated, depending on the relationship of source rate to destination rate, and would be audible on program material. The solution is to detect pauses or low-level passages and permit jumping only at such times. The process is illustrated in FIG. 21. An alternative to address jumping is to undertake sampling-rate conversion for a short period (FIG. 22) in order to slip the input/output relationship by one sample. [21]

FIG. 21 In jump synchronizing, input samples are subjected to a varying delay to align them with output timing. Eventually the sample relationship is forced to jump to prevent delay building up. As shown here, this results in several samples being repeated, and can only be undertaken during program pauses, or at very low audio levels. If the input rate exceeds the output rate, some samples will be lost.

FIG. 22 An alternative synchronizing process is to use a short period of interpolation in order to regulate the delay in the synchronizer.

If this is done when the signal level is low, short wordlength logic can be used. However, now that sampling rate convertors are available as a low-cost single chip, these solutions are found less often in hardware, although they may be used in software-controlled processes.
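As an illustration of the jump synchronizer of FIG. 21 in a software-controlled form, the following is a minimal sketch and not the implementation of reference [20]. The memory size, the safety margin, the quiet threshold and all names are assumptions. Reading starts when the memory is half full, and the read address is re-centered only when the memory is close to a limit and the sample about to be read is quiet. A jump is forced only when no headroom remains.

```python
class JumpSynchronizer:
    """Memory used as a timebase corrector with read-address jumping."""

    def __init__(self, size=32, margin=4, quiet_level=2):
        self.size = size                # memory length in samples (assumed)
        self.margin = margin            # distance from a limit that permits a jump
        self.quiet_level = quiet_level  # largest magnitude treated as a pause
        self.memory = [0] * size
        self.written = 0                # total samples written so far
        self.read_count = 0             # total samples read, including jumps
        self.started = False
        self.jumps = 0

    def fill(self):
        """Number of samples written but not yet read."""
        return self.written - self.read_count

    def write(self, sample):
        """Store a sample with the timing of the source."""
        self.memory[self.written % self.size] = sample
        self.written += 1

    def read(self):
        """Return a sample with the timing of the destination."""
        if not self.started:
            if self.written < self.size // 2:
                return 0  # output silence until the memory is half full
            self.started = True
            self.read_count = self.written - self.size // 2
        fill = self.fill()
        near_limit = fill <= self.margin or fill >= self.size - self.margin
        at_limit = fill <= 0 or fill >= self.size
        quiet = abs(self.memory[self.read_count % self.size]) <= self.quiet_level
        if at_limit or (near_limit and quiet):
            # Re-center: samples are omitted if the source is fast, repeated if slow.
            self.read_count = self.written - self.size // 2
            self.jumps += 1
        sample = self.memory[self.read_count % self.size]
        self.read_count += 1
        return sample


def run(sync, samples, skip_every=10):
    """Write every sample but skip one read in `skip_every`: a fast source."""
    out = []
    for n, sample in enumerate(samples):
        sync.write(sample)
        if n % skip_every != skip_every - 1:
            out.append(sync.read())
    return out


quiet = JumpSynchronizer()
run(quiet, [n % 2 for n in range(150)])  # low-level signal
loud = JumpSynchronizer()
run(loud, [1000] * 150)                  # high-level signal
print(quiet.jumps, loud.jumps)
```

In this short run the low-level signal is allowed to re-center as soon as the memory approaches its limit, whereas the loud signal is left alone because headroom still remains. The rate difference is exaggerated here so that the effect shows within a few samples.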

The difficulty of synchronizing unlocked sources is eased when the frequency difference is small. This is one reason behind the clock accuracy standards for AES/EBU timing generators. [22]
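The benefit of tight clock accuracy can be quantified. The sketch below estimates how often two unlocked sources slip by one sample in the worst case, where their errors have opposite signs. The ±1 ppm and ±10 ppm figures are the reference grades quoted earlier. The 48 kHz sampling rate and the function name are assumptions.

```python
def seconds_per_sample_slip(sample_rate_hz, source_ppm, destination_ppm):
    """Worst-case time for the sample phase to slip by one whole sample."""
    offset_hz = (source_ppm + destination_ppm) * 1e-6 * sample_rate_hz
    return 1.0 / offset_hz


SAMPLE_RATE_HZ = 48_000  # assumption for illustration

grade2 = seconds_per_sample_slip(SAMPLE_RATE_HZ, 10, 10)  # two Grade 2 generators
grade1 = seconds_per_sample_slip(SAMPLE_RATE_HZ, 1, 1)    # two Grade 1 generators

print(f"Grade 2 pair: one sample slip in about {grade2:.2f} s")
print(f"Grade 1 pair: one sample slip in about {grade1:.2f} s")
```

The more accurate the generators, the longer a synchronizer can wait for a suitable pause before it has to correct.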

16. Routing

Routing is the process of directing signals between a large number of devices so that any one can be connected to any other. The principle of a router is not dissimilar to that of a telephone exchange. In analog routers, there is the potential for quality loss due to the switching element. Digital routers are attractive because they need introduce no loss whatsoever. In addition, the switching is performed on a binary signal and therefore the cost can be lower. Routers can be either cross-point or time-division multiplexed.

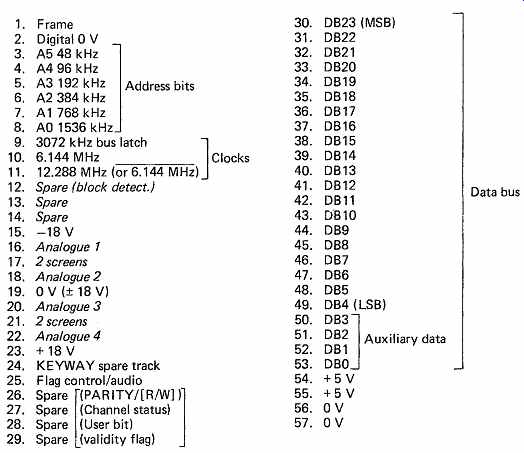

FIG. 23 Time-division multiplexed 64 channel audio bus proposed by BBC.

In a BBC proposal [23] the 28 data-bit structure of the AES/EBU subframe has been turned sideways, with one conductor allocated to each bit. Since the maximum transition rate of the AES/EBU interface is 64 times the sampling rate it follows that, in the parallel implementation, 64 channels could be time-multiplexed into one sample period within the same bandwidth. The necessary signals are illustrated in FIG. 23. In order to separate the channels on reception, there are six address lines which convey a binary pattern corresponding to the audio channel number of the sample in that time slot. The receiver simply routes the samples according to the attached address. Such a point-to-point system does, however, neglect the potential of the system for more complex use. The bus cable can loop through several different items of equipment, each of which is programmed so that it transmits samples during a different set of time slots from the others. Since all transmissions are available at all receivers, it is only necessary to detect a given address to latch samples from any channel. If two devices decode the same address, the same audio channel will be available at two destinations.

In such a system, channel reassignment is easy. If the audio channels are transmitted in address sequence, it is only necessary to change the addresses which the receiving channels recognize, and a given input channel will emerge from a different output channel. Since the address recognition circuitry is already present in a TDM system, the functionality of a 64 × 64 point channel-assignment patchboard has been achieved with no extra hardware. The only constraint in the use of TDM systems is that all channels must have synchronized sampling rates. In multitrack recorders this occurs naturally because all the channels are locked by the tape format. With analog inputs it is a simple matter to drive all convertors from a common clock.
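Channel reassignment by address recognition can be modelled in a few lines. This is a sketch of the principle only. The function names are hypothetical, and one sample period is represented as a list of (address, sample) pairs rather than as signals on parallel conductors.

```python
ADDRESS_LINES = 6
SLOTS = 2 ** ADDRESS_LINES  # 64 time slots per sample period


def transmit(samples):
    """Place one sample per channel on the bus in address sequence."""
    return [(address, sample) for address, sample in enumerate(samples)]


def receive(bus_frame, recognized):
    """Each output latches the sample whose address it is set to recognize."""
    by_address = dict(bus_frame)
    return [by_address[address] for address in recognized]


samples = [100 + channel for channel in range(SLOTS)]
frame = transmit(samples)

straight = receive(frame, list(range(SLOTS)))           # channel n to output n
swapped = receive(frame, [1, 0] + list(range(2, SLOTS)))  # outputs 0 and 1 exchanged
shared = receive(frame, [5, 5])                         # two outputs, same address

print(straight[:3], swapped[:3], shared)
```

Changing only the list of recognized addresses reroutes the audio, and two outputs given the same address both receive the same channel, as described above.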

Given that the MADI interface uses TDM, it is also possible to perform routing functions using MADI-based hardware.

For asynchronous systems, or where several sampling rates are found simultaneously, a cross-point type of channel-assignment matrix will be necessary, using AES/EBU signals. In such a device, the switching can be performed by logic gates at low cost, and in the digital domain there is, of course, no quality degradation.

===