OUT OF BOUNCE

Let's try a conceptual experiment. Imagine that you've just left Audio's New York offices. You pause on the busy Manhattan sidewalk to blindfold yourself, then walk out into the middle of the street. Your life, of course, would almost immediately be snuffed out by a taxi. But in the brief moment before that, you would enjoy the miracle of aural spatiality. Your ears would hear the sounds of traffic and people all around, jet planes overhead, subway trains below. Along with all the other information received, your brain would be able to localize the direction and relative distance of all of those sounds, thanks to your ear/brain's psychoacoustical skills.

As I discussed last month, your ear/brain is fine-tuned to ambient information; if that information is lacking, you immediately judge the sound to be less "real," or less interesting.

That's where DSP (digital signal processing) is playing an increasingly important role in audio. The computational clout of digital circuits and software permits unprecedented manipulation of sound. Among other audio applications, DSP number-crunching is being used to synthesize psychoacoustic cues in order to achieve more convincing re-created sound.

Let's try another conceptual experiment, this time in the relative safety of a rock 'n' roll concert. The lead guitarist plays a big power chord; let's carefully follow the sequence of events in time.

When he plays, sound emanates in all directions; you'll eventually hear all that sound, but at different times, because it will follow paths of different lengths.

The first sound you hear is the direct sound from the P.A. system, coming from the front. Next come first-order reflections: sound of the chord that has bounced off the stage floor or proscenium, arriving about 10 ms after the direct sound, followed by sound from the side walls and ceiling, arriving about 20 ms after it. From behind you comes sound returning after bouncing off the back wall, as well as second-order reflections, that is, sound that has undergone two reflections.
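The arrival times above come down to simple arithmetic: extra path length divided by the speed of sound. The minimal Python sketch below assumes a speed of sound of 343 m/s (air at roughly room temperature) and uses the image-source idea (mirror the source in the reflecting surface, then measure a straight line) for a single floor bounce. The function names and the example geometry are hypothetical illustrations, not figures from the article.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees C


def delay_ms_for_extra_path(extra_path_m: float) -> float:
    """How much later (in ms) a reflection arrives than the direct sound."""
    return extra_path_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def extra_path_for_delay_m(delay_ms: float) -> float:
    """Inverse: extra distance (in m) a reflection travels to arrive delay_ms late."""
    return delay_ms / 1000.0 * SPEED_OF_SOUND_M_PER_S


def floor_bounce_path_m(source_h: float, listener_h: float, distance: float) -> float:
    """Path length of one floor reflection via the image-source method:
    mirror the source below the floor (height -source_h) and take the
    straight-line distance from that image to the listener."""
    return math.hypot(distance, listener_h + source_h)


# A 10-ms reflection has travelled about 3.4 m farther than the direct sound;
# a 20-ms reflection, about 6.9 m farther.
print(extra_path_for_delay_m(10.0), extra_path_for_delay_m(20.0))
```

Working backward from delay to distance is often the more useful direction: it tells you roughly how far away a surface must be to produce a given early reflection.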

At this point, only careful analysis could pick out the sound of the original chord, as you are bombarded by a thicket of higher-order reflections arriving closely together from all directions. As the sound bounces around, it loses energy, and its amplitude gradually dies out.
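To make "it loses energy" concrete, here is a minimal sketch that counts only losses at each bounce. It assumes a single, frequency-independent amplitude reflection coefficient per surface hit and ignores spreading loss and air absorption, so it is a toy model rather than a room-acoustics calculation. The -60 dB target is the conventional threshold used to define reverberation time (RT60); the function names are hypothetical.

```python
import math


def level_after_reflections_db(reflection_coefficient: float, n_reflections: int) -> float:
    """Level relative to the direct sound after n bounces, counting only wall losses.
    Each bounce multiplies amplitude by reflection_coefficient."""
    return 20.0 * n_reflections * math.log10(reflection_coefficient)


def reflections_to_reach(reflection_coefficient: float, target_db: float = -60.0) -> int:
    """Smallest number of bounces that brings the level down to target_db."""
    if not 0.0 < reflection_coefficient < 1.0:
        raise ValueError("reflection coefficient must be between 0 and 1")
    per_bounce_db = 20.0 * math.log10(reflection_coefficient)  # negative dB per bounce
    return math.ceil(target_db / per_bounce_db)


# With fairly reflective surfaces (amplitude coefficient 0.9, about -0.9 dB per bounce),
# the sound must bounce dozens of times before it has decayed by 60 dB.
print(reflections_to_reach(0.9))
```

Because each bounce costs only a fraction of a decibel with hard surfaces, the decay is slow, which is why a live hall's reverberation lingers.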

In real time, you've heard the chord and the hall ambience, including early reflections and reverberation. Your ear/brain has received detailed information on the amplitude, relative time delay, direction, and frequency response of thousands of variations of the original chord's sound. From that data, you have rapidly constructed a model of the hall, including its size, shape, and equalization, and your relative position within it.

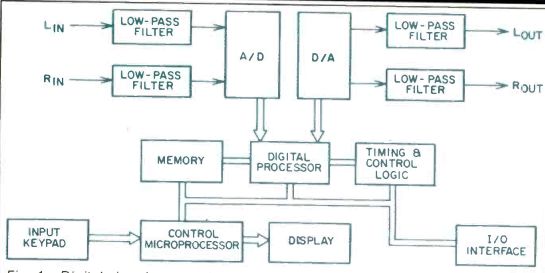

While a good hall supplies all this free of charge, it is a different matter in the recording studio, where the engineer must create most ambient information from scratch. Using DSP, he can build a signal-processing chain that precisely models the desired acoustical space. Consider the block diagram shown in Fig. 1. DSP provides all the building blocks, mainly software programs, needed to compute the numerical equivalents of room acoustics.

The engineer merely selects the desired parameters, then relaxes while the program takes over. Would you like to put Mr. Mister in a large, live auditorium with a slightly boomy low end? No problem.

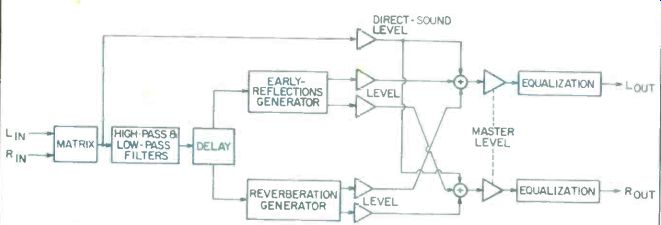

The audio computer performs computations on the data according to the program, contained in RAM or ROM, that has been selected. For concert-hall simulation, the program might be structured according to the signal flow chart in Fig. 2. Using the stereo channels as input, the computer performs equalization, delay and reverberation computations. The sonic output is the re-creation of an existing sonic environment, or the creation of an entirely new (and perhaps physically impossible) space.
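The article does not spell out what sits inside each block of Fig. 2, so the following is a hypothetical, minimal mono sketch of an equalization, delay, and reverberation chain. For the EQ it assumes a one-pole low-pass filter; for the reverberator it swaps in parallel feedback comb filters of the kind popularized by Schroeder. All names and parameter values are illustrative assumptions, and a practical hall simulator would add all-pass diffusion, stereo decorrelation, and much longer delays.

```python
def one_pole_lowpass(x, coeff):
    """Equalization stage: y[n] = (1 - coeff) * x[n] + coeff * y[n-1].
    coeff = 0 passes the signal unchanged; values nearer 1 roll off more treble."""
    out, y = [], 0.0
    for s in x:
        y = (1.0 - coeff) * s + coeff * y
        out.append(y)
    return out


def delay(x, n):
    """Delay stage: shift the signal n samples later, keeping its length."""
    return ([0.0] * n + list(x))[:len(x)]


def feedback_comb(x, n, g):
    """Reverberation building block: y[k] = x[k] + g * y[k - n] (needs |g| < 1)."""
    y = []
    for k, s in enumerate(x):
        y.append(s + (g * y[k - n] if k >= n else 0.0))
    return y


def hall_chain(x, pre_delay, comb_delays, g, lp_coeff, wet):
    """Hypothetical EQ -> pre-delay -> comb-filter reverb chain, mixed with the dry input."""
    eq = one_pole_lowpass(x, lp_coeff)
    pre = delay(eq, pre_delay)
    combs = [feedback_comb(pre, d, g) for d in comb_delays]
    reverb = [sum(v) / len(comb_delays) for v in zip(*combs)]
    return [(1.0 - wet) * d + wet * r for d, r in zip(x, reverb)]


# Feed in a single impulse and look at the first few echoes of the "hall".
impulse = [1.0] + [0.0] * 99
response = hall_chain(impulse, pre_delay=5, comb_delays=[7, 11], g=0.5, lp_coeff=0.0, wet=1.0)
print([(k, v) for k, v in enumerate(response) if v][:5])
```

Choosing mutually prime comb delays (7 and 11 samples here) keeps their echoes from piling up at the same instants, which is the usual reason for that choice.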

Virtually all popular recordings benefit from DSP, and more than a few classical recordings undergo an acoustical facelift. But there's a catch.

While the acoustical parameters of a space have been defined, the reproduced model is incomplete. If you listen to a recording at home and conclude that you are in a concert hall, it would be more than your ear/brain that was confused.

The problem, of course, is that two loudspeakers cannot re-create the acoustical ambience surrounding you in a concert hall. For starters, the ambient information supplied by your listening room is incorrect; moreover, the ambient information coming from the speakers, while correct, is mostly coming from the wrong direction. So your localization cues are all screwed up.

However, digital signal processing could be used to create aural cues that sound like a concert hall. In theory, just as your ear/brain creates a phantom image where there is no loudspeaker, it could also create a concert hall where there is none.

There are two approaches to the problem. One school of thought believes that heavy-duty DSP in the studio could be used to encode the signal so that ambience, if not surround ambience, could be reproduced through two loudspeakers and conventional playback electronics.

Through analysis of the acoustical information at each ear and the relationship between the signals each ear receives, a program could be written to duplicate the sound-pressure ratio of the signals reaching each ear and the sound pressures common to both. Because the ear/brain responds only to the input at the ears, a correctly simulated input would produce the same response. Thus, a surround environment could be created from a conventional playback system.
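In today's terms this idea is usually called crosstalk cancellation (our label, not the article's). At any single frequency, each ear hears a complex-weighted sum of both loudspeakers, a 2x2 linear system; inverting it gives speaker feeds that deliver chosen pressures to each ear. The sketch below is a minimal single-frequency illustration. The matrix values are made-up assumptions, whereas a real system would use measured speaker-to-ear responses at every frequency.

```python
def ear_pressures(h, feeds):
    """Forward model at one frequency: pressure at each ear from two speaker feeds.
    h[ear][speaker] is the complex response from that speaker to that ear."""
    return tuple(h[i][0] * feeds[0] + h[i][1] * feeds[1] for i in range(2))


def solve_speaker_feeds(h, desired):
    """Invert the 2x2 model so the ears receive the desired (left, right) pressures."""
    (a, b), (c, d) = h
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("speaker-to-ear matrix is (nearly) singular at this frequency")
    e_left, e_right = desired
    return ((d * e_left - b * e_right) / det,
            (-c * e_left + a * e_right) / det)


# Hypothetical symmetric setup: each ear hears its own speaker at unit level and
# the opposite speaker at 0.5 (the crosstalk path). Goal: sound in the left ear only.
h = [[1.0, 0.5], [0.5, 1.0]]
feeds = solve_speaker_feeds(h, (1.0, 0.0))
print(feeds, ear_pressures(h, feeds))
```

The right speaker ends up carrying an inverted copy that cancels the left speaker's leakage at the right ear, which is exactly the "ratio of signals reaching each ear" the paragraph describes.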

Another school would use DSP to synthesize ambient information directly upon playback. Parameters could be set through analysis of the ambient information in the input signal, or according to the user's taste. The processor would supply a signal delayed, reverberated, and equalized precisely according to an internally consistent acoustic model. The ambient information could be mixed into the main loudspeakers or routed to additional ambience loudspeakers, as in many movie theaters.
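As one simple illustration of playback-side synthesis, the sketch below derives an ambience feed from the stereo difference signal (L minus R), the same basic idea passive matrix surround decoders use for their rear channel, then delays and scales it. This is a swapped-in technique chosen for brevity, not the analysis method the paragraph envisions; the function name and parameters are hypothetical.

```python
def ambience_feed(left, right, delay_samples, gain):
    """Hypothetical playback-side ambience feed for the rear speakers.

    1. Analysis: take L - R. Anything panned dead center (often the direct
       sound) cancels; whatever differs between channels survives, which in
       many stereo recordings is largely reverberant ambience, although
       hard-panned instruments leak through too.
    2. Delay it so it arrives after the front speakers' sound.
    3. Scale it to taste.
    """
    diff = [l - r for l, r in zip(left, right)]
    delayed = ([0.0] * delay_samples + diff)[:len(diff)]
    return [gain * s for s in delayed]


# A center-panned signal produces no ambience; a left-only click emerges delayed and softened.
print(ambience_feed([1.0, 0.5, -0.25], [1.0, 0.5, -0.25], 0, 1.0))
print(ambience_feed([1.0, 0.0, 0.0, 0.0], [0.0] * 4, 2, 0.5))
```

A real processor would also equalize and reverberate this feed, as the paragraph notes; the delay step is what keeps the ambience from pulling the stereo image toward the rear.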

Fig. 1. Digital signal-processing hardware. Single lines represent analog signals, double lines indicate digital data; digital links are two-way unless otherwise indicated. The I/O interface is for direct connection to other digital devices.

Fig. 2. Software model of digital signal processing.

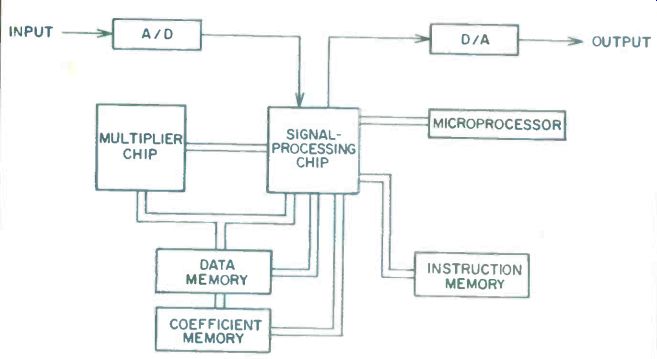

Fig. 3. A multiple-chip digital signal processing system. All digital data paths shown (double lines) are two-way.

Until recently, both ideas were impractical because the required processing hardware was too expensive.

But that is changing as DSP chips become available. Fig. 3 shows a complete DSP system on a few chips. The audio signal is input to the processing chip through an A/D converter and output from the chip through a D/A converter (these would be omitted in an all-digital system). The processor manipulates the data with the help of peripheral chips.

The processing chip includes an ALU (arithmetic logic unit) and a microprogram sequencer. The other principal chip is a parallel multiplier. This miniature DSP could be clocked at 8 MHz, executing 166 instructions within every 48-kHz sampling period.
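The 166-instruction figure follows from dividing the clock rate by the sample rate, assuming one instruction per clock cycle (the article implies this but does not state it). The minimal sketch below reproduces that arithmetic; the 44.1-kHz comparison is our addition, and the function names are hypothetical.

```python
def instructions_per_sample(clock_hz: int, sample_rate_hz: int) -> int:
    """Whole instruction cycles available per sample, assuming one instruction per clock."""
    return clock_hz // sample_rate_hz


def sample_period_us(sample_rate_hz: int) -> float:
    """Time available to process each sample, in microseconds."""
    return 1e6 / sample_rate_hz


print(instructions_per_sample(8_000_000, 48_000))  # the article's 48-kHz case
print(instructions_per_sample(8_000_000, 44_100))  # same chip at the CD rate
print(sample_period_us(48_000))                    # about 20.8 microseconds per sample
```

Every filter tap, delay read, and multiply for every channel has to fit inside that budget, which is why a dedicated parallel multiplier matters so much.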

Because of the cost reduction afforded by such integration, consumer products using DSP are appearing.

Eventually, equalization, mixing, reverberation, compression, expansion, time delay, sampling-frequency conversion, and acoustical measurement and analysis could all be accomplished with home equipment incorporating inexpensive DSP.

An early example is the Sony SDP-505ES surround-sound processor. It accepts a stereo analog signal, performs DSP on 16-bit PCM data sampled at 44.1 kHz, and outputs a stereo analog signal. Although other applications are possible, the synthesized information is typically directed to two ambience loudspeakers. They might be placed in corners, facing a reflective surface to provide widely dispersed sound.

The SDP-505ES is programmed to add delay to an audio signal, in 0.1-ms increments, from 0 to 90 ms. For video (theatrical) software, the unit provides Dolby Surround Sound decoding with equalization and delay. Other modes provide preset ambience synthesis for concert-hall and other surround-sound environments.
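At 44.1 kHz, a 0.1-ms step is 4.41 samples, so the delay settings do not all land on whole samples. The sketch below converts a setting to samples by rounding (an assumption; the article does not say whether the unit rounds or interpolates) and estimates the RAM the maximum 90-ms delay would need if both 16-bit channels were stored. Names are hypothetical.

```python
SAMPLE_RATE_HZ = 44_100  # the SDP-505ES's stated PCM sampling rate
BYTES_PER_SAMPLE = 2     # 16-bit words
CHANNELS = 2             # assumption: both stereo channels are delayed


def delay_tenths_ms_to_samples(tenths_of_ms: int) -> int:
    """Convert a delay setting given in 0.1-ms steps to whole samples (round half up).
    samples = (tenths_of_ms / 10) ms * 44.1 samples/ms = tenths_of_ms * 44100 / 10000."""
    return (tenths_of_ms * SAMPLE_RATE_HZ + 5_000) // 10_000


def ram_bytes_for_delay(samples: int) -> int:
    """Memory needed to hold `samples` sample periods of every channel."""
    return samples * CHANNELS * BYTES_PER_SAMPLE


max_samples = delay_tenths_ms_to_samples(900)  # the 90-ms maximum
print(max_samples, ram_bytes_for_delay(max_samples))
```

Under these assumptions the full 90-ms range needs under 16 kbytes, a modest amount of RAM even by mid-1980s standards.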

The heart of the SDP-505ES is a DSP chip, the CXD-1079, which contains a multiplier, an adder, and interface circuits. Delay is accomplished by writing the signal to RAM, then reading it back after the prescribed time has elapsed. Equalization and Dolby decoding follow other simple algorithms, a piece of cake for a digital processor.
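"Write to RAM, read back later" is usually implemented as a circular buffer: one index writes each new sample, and the read index trails it by the delay length, wrapping around the end of memory. The CXD-1079's internals are not described in the article, so this is a generic, hypothetical sketch of the technique rather than Sony's design.

```python
class DelayLine:
    """Generic circular-buffer delay: write each sample to RAM, read one from the past."""

    def __init__(self, max_delay_samples: int):
        self.buf = [0.0] * (max_delay_samples + 1)  # the "RAM"
        self.write_index = 0

    def process(self, sample: float, delay_samples: int) -> float:
        """Store `sample`, then return the sample written `delay_samples` periods ago."""
        if not 0 <= delay_samples < len(self.buf):
            raise ValueError("delay exceeds buffer length")
        self.buf[self.write_index] = sample
        read_index = (self.write_index - delay_samples) % len(self.buf)
        out = self.buf[read_index]
        self.write_index = (self.write_index + 1) % len(self.buf)  # wrap around
        return out


line = DelayLine(max_delay_samples=3)
print([line.process(x, 2) for x in [1.0, 2.0, 3.0, 4.0, 5.0]])  # input emerges 2 samples late
```

Nothing is ever moved in memory; only the two indices advance, so each sample costs one write and one read no matter how long the delay is.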

The power of psychoacoustics is thus placed at your fingertips. Perhaps in the future, with the availability of consumer DSP products, music will be recorded in totally anechoic rooms and played back with synthetic room acoustics added in real time, according to your taste. The enjoyment of recorded music would no longer be constrained by the acoustics of the recording or listening room. Whether this opportunity would help or harm the realism of re-created music is a matter of conjecture at this point.

"Digital audio" has thus far referred mainly to a method of audio storage, but in the near future the term will have much wider applications. Digital signal processing will pervade the recording and reproduction chain, providing unprecedented clout in the manipulation of audio signals. Only when analog systems (with digital storage) are replaced by systems that are wholly digital will the true benefit of "digital audio" become apparent.

(adapted from Audio magazine, Oct. 1986)
