by THOMAS H. SMITH, MICHAEL R. PETERSON, and PETER O. JACKSON

-------

ABOUT THE AUTHORS: Professionally, Thomas H. Smith (Ph.D., mechanical engineering) is a nuclear waste management specialist; Michael R. Peterson (Ph.D., chemical oceanography) is an environmental chemist; and Peter O. Jackson (B.S., chemistry) is a radio-analytical chemist. Each has published numerous technical papers and reports in his field of expertise. All are long-time audio enthusiasts.

--------

HOW MANY AMPLIFIER test reports in major audio magazines conclude that the unit reviewed "has no characteristic sound of its own"? The measured distortion is so low, the frequency response so flat, that the unit is essentially "a straight wire with gain." Yet these same "perfect" amplifiers are evaluated subjectively in "underground" publications or high-end audio salons. Inevitably, the unique sound of each amplifier is described in detail. (Unfortunately, the subjective reviewers often disagree as to which amplifiers sound "airy" or "sweet," and which sound "hoody", "gray", or "gritty".) Many audiophiles and equipment salesmen subscribe to the "golden ears" approach of the subjective reviewers. They maintain experienced listeners can easily hear differences between the sounds of audio components, even top-rated power amplifiers.

Despite these opposing claims, neither group seems to support its position with scientific data. So, which group is right? Are differences between power amplifiers, for example, really audible? Being research scientists as well as amateur audiophiles, we were intrigued by this controversy. We searched for data supporting one position or the other. In popular audio magazines we found much discussion of the topic but virtually no directly applicable data. Contacts with audio industry people turned up few data. We found several pertinent articles in the technical audio literature; however, tests reported there involved specialized equipment or non-musical program material such as sine waves.

After pondering this dilemma, we conceived a promising approach to clarify it. We then launched our own tests, following principles of scientific inquiry used in our employment. (See below for this discussion.) Our results show that the prospective buyer of audio components need not be confused by conflicting opinions nor browbeaten by golden-eared people claiming to know which components sound best. Described here are refined A-B listening tests for comparing audio components. The tests put into perspective the conflicting opinions of salesmen and of publications that evaluate components subjectively.

This refined approach could apply in general to any type of audio component. We used the approach to address this question:

Can listeners consistently distinguish between the sounds of top-rated power amplifiers? The unexpected, perhaps controversial, results may shed new light on power amplifier performance levels and on the use of A-B testing to compare components.

Our excitement mounted when, after months of theorizing about scientific A-B tests, the components arrived for our study.

Generous manufacturers lent Accuphase P300, Dynaco Stereo 400, and Phase Linear 400 power amplifiers and a Quintessence Model I preamplifier. Our speakers (Larger Advent, Magnepan MGII, and Fulton FMI 80) were donated by our 17-member listening crew, which included 11 ordinary listeners and six who profess to have golden ears. Among the latter are two professional musicians and one high-end audio equipment salesman. All golden ears own high-end audio equipment.

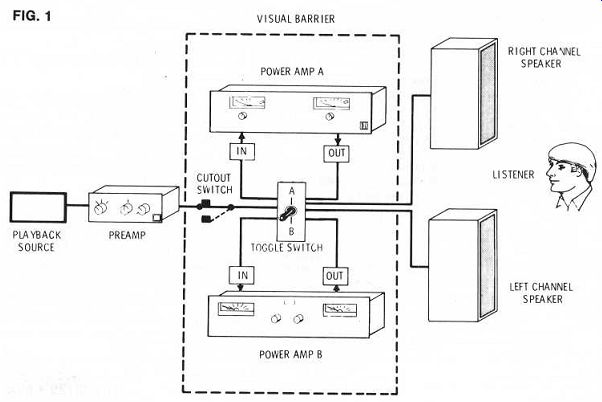

Fig. 1 shows the equipment setup for the A-B comparisons of power amplifiers. The heavy-duty, multipole toggle switch allows simultaneous switching of amplifier inputs and outputs. The driven amplifier thereby "sees" only the speaker load, not the other amplifier's output. Because the toggle switch has no center "off" position, we coupled it with a normally-closed momentary (cutout) switch.

The last preparation was careful matching of the two power amplifiers' outputs. We first fed 1kHz sine waves into the preamplifier and read voltages across the speaker terminals. We matched the levels within 0.1dB, the resolution limit of our vacuum tube voltmeter. We checked this preliminary match using broadband FM interstation noise and then 1kHz square waves. In all cases, the levels remained matched within 0.1dB. Finally we checked the levels with both sine waves and square waves at 100Hz and 10kHz. Power amplifiers matched on 1kHz sine waves were within 0.2dB to 0.5dB of each other on sine waves and square waves at the other frequencies checked.
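To make the matching arithmetic concrete, the following minimal Python sketch converts two voltmeter readings taken across the speaker terminals into a level difference in decibels and checks it against a tolerance. The function names and the sample voltages are hypothetical, chosen only for illustration; they are not readings from the tests.

```python
import math


def level_difference_db(v_a: float, v_b: float) -> float:
    """Level of amplifier A relative to amplifier B in dB, from two voltage readings."""
    if v_a <= 0 or v_b <= 0:
        raise ValueError("voltages must be positive")
    return 20.0 * math.log10(v_a / v_b)


def db_to_voltage_ratio(db: float) -> float:
    """Voltage ratio corresponding to a level difference in dB."""
    return 10.0 ** (db / 20.0)


def is_matched(v_a: float, v_b: float, tolerance_db: float = 0.1) -> bool:
    """True if the two readings agree within the stated tolerance."""
    return abs(level_difference_db(v_a, v_b)) <= tolerance_db


if __name__ == "__main__":
    # Hypothetical readings in volts (assumed values, not measured data).
    reading_a, reading_b = 2.83, 2.80
    print(f"difference: {level_difference_db(reading_a, reading_b):.3f} dB")
    print(f"matched within 0.1 dB: {is_matched(reading_a, reading_b)}")
    print(f"0.1 dB as a voltage ratio: {db_to_voltage_ratio(0.1):.4f}")
```

A 0.1dB tolerance corresponds to a voltage difference of only about 1 percent, which is why the match has to be made with an instrument.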

We made no effort to equalize the amplifiers' frequency response; this would have contradicted the stated purpose of the tests. In all tests but one the output levels were matched by using gain controls on the power amplifiers. In one test we could not achieve the match this way: we inserted a pair of cermet potentiometers in series with the input. Later comparisons of listening results showed no effects arising from the different methods used to match levels.

The next six weeks saw listeners participate in 14 different tests. For every test, each listener was presented with a set (usually 18, sometimes 12 or eight) of A-B comparisons. In half the comparisons the music was heard first through one power amplifier, then through the other. We termed these comparisons A-B or B-A, depending on which amplifier was heard first. In the remaining comparisons we played both music segments through the same amplifier: during the music we switched from amplifier A back to itself instead of across to amplifier B. We called these comparisons A-A (or B-B, if we were using amplifier B). The listener did not know the switching order of A-B, B-A, A-A and B-B comparisons.

We presented all four combinations with equal frequency, but in a scrambled order chosen at random beforehand. The listener decided whether he thought the sound character changed due to the switching. He marked one of two possible answers on a scorecard: either he thought a different amplifier had been switched in or he thought the same amplifier had been retained. We checked his scorecard against the operator's switching log to determine his score.
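The presentation and scoring scheme described above can be sketched in a few lines of Python. This is an illustrative reconstruction under stated assumptions, not the procedure actually used: the helper names are hypothetical, and because a set of 18 cannot contain all four comparison types in equal numbers, the sketch assumes half the comparisons are "different," half are "same," and any odd comparison is assigned at random.

```python
import random


def make_schedule(n_trials: int, rng: random.Random) -> list[str]:
    """Build a shuffled set of comparisons: half 'different', half 'same'."""
    if n_trials <= 0 or n_trials % 2:
        raise ValueError("n_trials must be a positive even number")
    half = n_trials // 2
    different = ["A-B", "B-A"] * (half // 2)
    same = ["A-A", "B-B"] * (half // 2)
    if half % 2:
        # Odd half (e.g. 9 of 18): assign the leftover comparison at random.
        different.append(rng.choice(["A-B", "B-A"]))
        same.append(rng.choice(["A-A", "B-B"]))
    schedule = different + same
    rng.shuffle(schedule)  # scrambled order, fixed before the session starts
    return schedule


def correct_answer(comparison: str) -> str:
    """The answer the operator's switching log implies for one comparison."""
    first, second = comparison.split("-")
    return "different" if first != second else "same"


def score(schedule: list[str], answers: list[str]) -> int:
    """Count the scorecard entries that agree with the switching log."""
    if len(schedule) != len(answers):
        raise ValueError("scorecard and switching log differ in length")
    return sum(correct_answer(c) == a for c, a in zip(schedule, answers))


if __name__ == "__main__":
    rng = random.Random(1979)  # arbitrary seed so the example is repeatable
    log = make_schedule(18, rng)
    guesses = [rng.choice(["same", "different"]) for _ in log]
    print(log)
    print(f"{score(log, guesses)} correct of {len(log)}")
```

Because "same" and "different" occur equally often, a listener who guesses, or who always gives the same answer, is expected to score 50 percent.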

For the first eight tests we used Switching Method 1: the operator switched once for each listener decision. Switching took place in the middle of each half-minute passage of music and took about 1/5 second. To indicate to the listener that switching had occurred, the operator depressed the cutout switch while flipping the toggle switch. (This technique also eliminated giving the listener any clue as to whether a different amplifier had been switched in.) The tests' repetitive nature did not suit the use of phono discs as signal sources. In all tests but two we used a tape consisting of a live organ recording and excerpts from top quality discs, which we prepared using a Braun TG-1000 deck, Mark Levinson JC-2 preamplifier, Denon 103 phono cartridge, Decca International tonearm, and Technics SP 10 turntable. We played the tape on a Tandberg 3000X deck.

The first test, a baseline case comparing two power amplifiers through the Advent speakers, provided the initial clue to the results of the study: the listeners scored only 46 percent correct in 180 decisions. This approximates the score of 50 percent correct expected from outright guessing. (There were equal numbers of "same" and "different" switching actions and only these two answers.) Here it was! A controlled, scientific test showed that the listeners who participated, ordinary and golden-eared alike, could not consistently distinguish between the power amplifiers tested.

We had recognized from the beginning that variables other than the choice of power amplifiers could conceivably affect the audibility of amplifier differences. The remaining 13 of the 14 tests were attempts to identify the possible effects of such variables, taken one at a time, on listeners' scores.

We first studied the effect of the time allowed subjects to listen and decide "same" or "different" for each decision. Doubling the time improved the scores from 46 percent to 53 percent, or 48 correct decisions out of 90; however, this change is not statistically significant. Most listeners said their decisions were based on comparing the sound just before and after the switching. The extra listening time helped their scores little if at all, perhaps because the memory retains subtle impressions only briefly. (Sekuler and Bauer (1965) found similar results in psychophysical studies.) We returned to this subject later in the study.
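One way to see why 48 correct decisions out of 90 is unremarkable is to ask how often pure guessing would do at least that well. The sketch below computes the exact binomial tail probability for a 50 percent guessing rate. It is offered as an illustration only and is not the analysis the statisticians performed; the function name is hypothetical.

```python
from math import comb


def guessing_tail_probability(correct: int, total: int) -> float:
    """P(at least `correct` right out of `total`) when every answer is a coin flip."""
    if not 0 <= correct <= total:
        raise ValueError("correct must lie between 0 and total")
    # Exact integer arithmetic avoids overflow for large numbers of decisions.
    favorable = sum(comb(total, k) for k in range(correct, total + 1))
    return favorable / 2 ** total


if __name__ == "__main__":
    p = guessing_tail_probability(48, 90)
    print(f"P(48 or more correct of 90 by guessing) = {p:.3f}")
```

A probability this far above the conventional 0.05 level means the score is entirely consistent with guessing.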

The next variable studied was sound pressure level (SPL). Nearly all the tests were conducted at an average SPL of 80-85dB. Some tests were repeated at 70-75dB and at 90-95dB. The results showed that the SPL selected was not limiting the perception of differences. The improvements resulting from changes both upward and downward in the SPL were statistically insignificant.

The next variable examined was the music's complexity. Nearly all the tests involved music performed by small groups or accompanied soloists. Complex orchestral material lowered scores by a statistically insignificant amount: 47 correct decisions in 108, or 44 percent. Thus music complexity was not the controlling variable under our test conditions.

We next studied whether the speakers were masking the differences between power amplifiers. Some speakers could conceivably outpoint others in revealing subtle differences between amplifiers. We changed from the Advents to the Magnepans, which some people consider near state-of-the-art.

Again, there was no statistically significant effect (54 percent correct in 162 decisions).

Next we studied the effect of potentially greater sonic differences in amplifiers. In an attempt to force up the scores, we compared a top-quality power amplifier with the amplifier section of a relatively inexpensive receiver (Allied Model 395). The sonic differences were still inaudible to the listeners: 49 percent correct of 252 decisions.

After all these tests produced no consistently audible differences between power amplifiers, we rechecked our equipment setup. One listener even questioned whether the toggle switch was actually switching, but a peek at the movements of the amplifier output meters restored his faith.

Next, in another attempt to force up scores, we deliberately set the output level of one amplifier higher by 1dB than that of the other. We used program material of typical musical content, in which the flow of musical notes seldom remains within a 1dB range.

With Switching Method 1 the imbalance was inaudible: the listeners scored only 44 percent correct on 72 decisions.

We devised Switching Method 2 to make it easier to identify differences. We wanted to allow many back-and-forth switching actions, instead of only one, for each decision and to eliminate the brief dead time of switching between amplifiers.

In Switching Method 2 the listener made the switches. However, he did not know whether the "A" and "B" positions on the toggle switch were connected to different amplifiers or to the same amplifier. (Between decisions the listener was sent to another room, while the operator manipulated signal cables to produce the desired A-B configuration. The listener was then given control of the switch.) He could throw the switch as often as he desired within a three-minute period before deciding whether he heard a difference. The momentary switch was not needed, so switching was instantaneous in Method 2.

Using Switching Method 2 we repeated the preceding test, involving 1 dB output level offset. Every listener decision was now correct! We had finally produced some audible differences.

The switching method seemed to be the controlling variable, so we repeated some of our original tests, using the new method. We rebalanced the amplifier levels and returned to using two top-quality amplifiers. Results: no audible differences (45 percent correct of 96 decisions). Changing to the Fulton FMI 80 speakers also produced no audible differences between power amplifiers (50 percent correct of 36 decisions).

We next wondered if the tape deck or the recording process were masking amplifier differences. Using top-quality discs on a Technics SL 1300 automatic turntable with ADC XLM MK II cartridge did not improve the scores. Nor did listeners do better with a live tape recording than with taped discs.

To conclude the study, we returned to the intriguing topic of mismatched levels. With Switching Method 2 but with the imbalance in amplifier levels reduced to 1/2 dB, the listeners could not detect the difference on music from either tape or disc program sources (46 percent correct of 24 decisions).

The tape again included both a live recording and recorded discs. With the same imbalance of 1/2 dB, we changed the program material to a 1kHz sine wave from a Heathkit Sine-Square Audio Generator Model IG-18. All listeners now scored 100 percent correct.

Let's summarize these results. For the ranges of variables and types of equipment studied, none of the following factors was suppressing the audibility of differences between power amplifiers of matched levels: average SPL, listening time, music complexity, recording medium, loudspeaker brand, power amplifier brand, or A-B switching method. An imbalance of 1dB in output levels was audible on musical program material, but only with listener-controlled, repeated, instantaneous switching (Method 2). An imbalance of 1/2 dB was audible on a single-frequency test tone, but not on music even with Switching Method 2. These results for imbalanced levels are consistent with those discussed by Hirsch (1975) and with the classical results of Fletcher (1953).

The overall score for all tests with balanced levels was 49.1 percent correct of 1104 decisions by 17 listeners. This percentage matches very closely the 50 percent score expected for simple guesswork. Individual listeners' overall scores ranged from 35 to 57 percent. Golden ears, even those who "knew" they had previously heard power amplifier differences, scored no better than ordinary listeners. No person tested could consistently hear differences between power amplifiers under the home listening conditions we attempted to simulate. The units tested were of sufficiently high performance that differences among them, under our test conditions, were below the threshold of audibility.
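How closely the pooled score agrees with guessing can be gauged with a normal approximation. The sketch below is illustrative only and makes two assumptions: the number of correct decisions is inferred as 542 by rounding 49.1 percent of 1104, since the raw count is not stated, and all decisions are treated as independent. It is not the chi-square, Kolmogorov-Smirnov, or rank sum analysis reported in the supplementary material.

```python
import math


def chance_z_score(correct: int, total: int, p_chance: float = 0.5) -> float:
    """Standard deviations separating the observed score from the guessing rate."""
    standard_error = math.sqrt(p_chance * (1.0 - p_chance) / total)
    return (correct / total - p_chance) / standard_error


def approx_95_interval(correct: int, total: int) -> tuple[float, float]:
    """Approximate 95 percent confidence interval for the true fraction correct."""
    p = correct / total
    half_width = 1.96 * math.sqrt(p * (1.0 - p) / total)
    return p - half_width, p + half_width


if __name__ == "__main__":
    total = 1104
    correct = round(0.491 * total)  # inferred count (assumption), not a reported figure
    low, high = approx_95_interval(correct, total)
    print(f"z = {chance_z_score(correct, total):.2f}")
    print(f"95% interval: {low:.3f} to {high:.3f}")
```

Under these assumptions the interval spans the 50 percent guessing rate and is only about three percentage points wide on either side, so a large consistent ability to hear differences would have been unlikely to go unnoticed.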

These results make one wonder how many power amplifier differences "heard" in A-B comparisons are really imaginary. As we've demonstrated, imagined differences could stem from imprecisely matched output levels. Other possible causes are prejudice and peer pressure. Bindra et al. (1965) found listeners tend to imagine nonexistent differences much more frequently than they fail to detect differences that are present. Our results also furnish a possible explanation for disagreements among subjective reviewers of top-quality power amplifiers: different people may imagine things differently.

Some readers may question these results.

We ask first that they review our philosophy and methods in the box on p. 36 and in this text. The data's validity rests upon the validity of the experimental methods.

Second, we encourage skeptical readers to conduct controlled, scientific tests for themselves, to see if our results are reproducible and representative. They should make their procedures and data for such tests available for others to verify.

We caution, however, against generalizing our conclusions from our test conditions to different conditions. Conditions may exist under which some listeners can rigorously prove they do hear differences between power amplifiers. We expect such demonstrations to be the exception rather than the rule. Of course, we couldn't test all listeners using all models of power amplifiers and all program material.

However, we believe our tests were conducted under representative conditions. We resisted the use of one-of-a-kind associated components, professional master tapes, etc.

Results of tests conducted under such ideal conditions might not be representative of most home listening.

We certainly don't view our work as the last word on A-B comparisons. On the contrary, we hope it is a useful step toward placing subjective evaluations of components on a more scientific basis. The effects of other variables on the audibility of power amplifier differences need to be studied. We also wonder if differences in preamplifiers, phono cartridges, and other components are as difficult to hear as those between power amplifiers. All these questions can be studied with the methods described here. (Comparison tests of loudspeakers may require special methods to account for directional differences in radiation patterns.)

One closing note. Audiophiles are avidly seeking components of ultimate performance levels, just as the forty-niners sought that big gold strike. But both groups of searchers have demonstrated by their actions that, as James Bonner said, "the ability of the human mind to self-delude is infinite."

==============

VALID COMPARISONS OF AUDIO COMPONENT PERFORMANCE

THE TEST PROCEDURES we used reflect our philosophy of scientifically valid comparisons of audio components in a listening environment. We summarize this philosophy below.

We believe the ultimate criterion for rating component performance is the accurate reproduction of music for human hearing under normal listening conditions. Electronic measurements of performance are helpful, especially to designers, but do not necessarily correlate with audible effects. Electronic measurements may include some parameters having no audible effect, yet omit others which affect sound quality (Davis, 1978). Even relevant, measurable parameters have threshold values for human hearing below which differences are inaudible (e.g., Fletcher, 1953).

Thus we believe one must ultimately depend on listening comparisons of components. "A-B" listening (switching back and forth between two units) is one widely used (and abused) approach for conducting such listening comparisons. There are many pitfalls in A-B listening. For example, one must match the output levels of the components compared very closely (Hirsch, 1975), using electronic instruments. (Our results suggest the required precision of the matching process. More detailed tests have been conducted by Lipshitz et al. [1979]. Matching the levels "by ear" is not sufficiently accurate.) One must carefully consider the possible interactions of the units under test with other components in the signal chain, from microphone to human auditory system (Hodges, 1974), and also residual distortion and other defects in these connecting components (Moncrieff, 1978; Moir, 1978).

Even careful A-B tests satisfying the above requirements are generally unreliable because scientific methods are not followed. For the results of A-B testing to constitute scientific evidence, the tests should meet at least these seven requirements:

(1) The procedures should be based on "difference testing," an objective method used for studying subjective phenomena. (See, for example, Bindra et al., 1965; Bryan and Parbrook, 1960; Gabrielsson and Sjogren, 1972; and Bose, 1973.) In difference testing, the listener states no preference between the sounds of the components being compared. He merely indicates whether he hears a difference between the two. The element of taste, or personal preference, is thereby excluded from the tests. (With a setup of live vs. recorded music, difference testing can even show indirectly which of two units sounds closer to live music.)

(2) The listening should be done "blind": that is, the listener should be unaware of which unit the signal is passing through at any given time. The results of non-blind listening can be distorted by the listener's prejudices.

(3) Each listener should be "isolated," i.e., free of influence from other people (Hope, 1978; Colloms, 1978). Group listening results can measure social and intellectual influences within the group as well as component performance.

(4) The test conditions and the associated equipment should be stated. The results may depend on these factors.

(5) Control tests should be included. These tests evaluate possible influences of factors other than the main variable of interest. Control tests should include limiting conditions or bounding studies, intended to cause component differences to appear or disappear. For example, if differences are being heard, a listener might be asked to compare two amplifier units of the same model or even to A-B test an amplifier against itself. If differences cannot be made to appear and, under different conditions, to disappear, then an undiscovered systematic factor in the test conditions may be consistently biasing the results.

(6) The results should be treated statistically, so that the possible effects of lucky guesses can be identified. A statistically significant number of data, or listening decisions, should be obtained (Moir, 1978). (Several hundred, or even thousand, data are generally required. Our tests generated 1211 listening decisions. Colloms reported 143 decisions; Moir, 576; Bindra et al., 2048; Gabrielsson and Sjogren, tens of thousands.) Conclusions should include statements of statistical confidence levels.

(7) The data should be reported and should be reproducible, within statistical variations, by the investigator himself and by independent investigators. If other investigators perform the same tests and do not obtain essentially the same results, then the results cannot be accepted as valid until the discrepancies are resolved.
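Requirement (6) can be made concrete by asking how many correct answers a single listener would need before lucky guessing becomes an implausible explanation. The sketch below, an illustration with hypothetical function names and a conventional 5 percent threshold assumed, finds the smallest number of correct decisions in a set whose probability under pure guessing is 5 percent or less.

```python
from math import comb
from typing import Optional


def min_correct_for_significance(total: int, alpha: float = 0.05) -> Optional[int]:
    """Smallest score k with P(at least k correct by guessing) <= alpha, or None."""
    if total <= 0:
        raise ValueError("total must be positive")
    outcomes = 2 ** total
    tail = 0      # number of answer patterns with at least k correct
    best = None
    for k in range(total, -1, -1):
        tail += comb(total, k)
        if tail / outcomes > alpha:
            break
        best = k
    return best


if __name__ == "__main__":
    # Set sizes taken from the article: eight, 12, and 18 comparisons.
    for size in (8, 12, 18):
        needed = min_correct_for_significance(size)
        print(f"set of {size}: at least {needed} correct needed")
```

With small sets the bar is a high fraction of the total, which is one reason several hundred decisions or more are needed before modest departures from guessing can be confirmed or ruled out.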

Our experience is that nearly all A-B tests, whether conducted in showrooms, in homes, or for subjective reviews of components, fail most, if not all, of the seven requirements. Three recent exceptions we are aware of (Chadwick, 1978; Moir, 1978; and Colloms, 1978) are discussed below. By contrast, the A-B test methods used and published by professional researchers of psychoacoustic phenomena, such as audibility thresholds, generally satisfy all seven requirements; see, for example, Bindra et al., Bryan and Parbrook, and Gabrielsson and Sjogren. Their results are thus widely accepted in technical circles as scientific evidence. Unfortunately, the sine-wave program material and specialized equipment which they generally use do not represent typical home listening conditions.

In our tests we followed our seven requirements, adapting the methods of the psychoacoustic researchers to study home audio components. (1) Our tests were "difference tests." (2) The listening was "blind." (3) The listeners were "isolated." (4) Test conditions, associated equipment, and data are described briefly in this article and in more detail in supplementary material we are making available. (5) Our study included control tests. (6) Two statisticians performed analyses on our data (chi-square test, Kolmogorov-Smirnov test, and rank sum test). The results are in the supplementary material. Statistical confidence levels are presented there. (7) We could reproduce our results.

While the results of our two-year study were being compiled, Moir, Chadwick, and Colloms each reported tests in England. Their methods satisfy most of our seven criteria. As you may see, our results generally agree with these recent studies. Moir gives references to other recent studies which have obtained similar results. A manuscript is in preparation comparing in detail the methods used in these studies and the results obtained.

==============

REFERENCES

Bindra, D., et al. (1965): "Judgments of Sameness and Difference: Experiments on Decision Time." Science, Vol. 150: 1625-1627.

Bose, A. G. (1973): "Sound Recording and Reproduction; Part One: Devices, Measurements, and Perception." Technology Review (MIT), June: 19-25.

Bryan, M. E., and Parbrook, H. D. (1960): "Just Audible Thresholds for Harmonic Distortion." Acustica, Vol. 10: 87-91.

Chadwick, J. G. (1978): "A Subjective Amplifier Test." Hi-Fi News and Record Review, July, pp. 49-51.

Colloms, M. (1978): "Amplifier Tests on Test: 2. The Panel Game." Hi-Fi News and Record Review, November, pp. 114-117.

Davis, M. F. (1978): "What's Really Important in Loudspeaker Performance?" High Fidelity Magazine, Vol. 28, No. 6: 53-58.

Fletcher, H. (1953): Speech and Hearing in Communication. D. Van Nostrand Company, Inc., New York, p. 146.

Gabrielsson, A., and Sjogren, H. (1972): "Detection of Amplitude Distortion in Flute and Clarinet Spectra." J. Acoust. Soc. Am., Vol. 52, No. 2 (Part 1): 471-483.

Hirsch, J. D. (1975): "The ABC's of A-B Testing." Stereo Review, Vol. 35, No. 5: 26-27.

Hodges, R. (1974): "Reflections on a Session at AES." Popular Electronics, Vol. 6, No. 6: 22-25.

Hope, A. (1978): "Amplifier Tests on Test: 1. Without Prejudice." Hi-Fi News and Record Review, November, pp. 110-114.

Lipshitz, S. P., et al. (1979): "PAT-5 Listening Tests-An Alternative View." The Audio Amateur, Vol. X, No. 2: 53-54.

Moir, J. (1978): "Valves versus Transistors: The Results of a Comparison Among Three Different Amplifiers." Wireless World, July: 55-58.

Moncrieff, P. (1978): "The M Rule." International Audio Review, No. 33: 36-43.

Sekuler, R. W., and Bauer, J. A., Jr. (1965): "Discrimination of Hefted Weights: Effect of Stimulus Duration." Psychon. Sci., Vol. 3: 255-256.

UNCITED REFERENCES

Carver, R. (1973): "Results of an Informal Test Project on the Audibility of Amplifier Distortion." Stereo Review, Vol. 30, No. 5: 72-75.

Feldman, L. (1979): "Quad (Acoustical Mfg. Co., Ltd.) Model 405 Power Amplifier," in "Equipment Profiles." (See Editor's Note). Audio, Vol. 63, No. 4: 80.

Gould, G. (1975): "The Grass is Always Greener in the Outtakes." High Fidelity Magazine, Vol. 25, No. 8: 54-59.

Greenhill, L. L. (1979): "Issues of Reliability and Validity in Subjective Audio Equipment Criticism." The Audio Amateur, Vol. X, No. 1: 17-20.

Hirsch, J. D. (1979): "Distortion-How Small Must It Be?" Stereo Review, Vol. 42, No. 3: 42-43.

Long, R., et al. (1978): "A Muscle Amp in Formal Dress," in "New Equipment Reports." High Fidelity Magazine, Vol. 28, No. 11: 66-67.

Pontis, G. D. (1978): "Audio General Model 511A Preamplifier," in "Equipment Profiles." Audio, Vol. 62, No. 6: 86-94.

Shanefield, D. (1976): "Audio Equalizers." Stereo Review, Vol. 36, No. 5: 68.
