by DAVID LANDER

On Lexington Common, not far from the site of Raymond Kurzweil's Waltham, Massachusetts office, a small band of armed citizens faced down a British detail one April morning in 1775. The redcoats had been marching all night toward a patriot munitions dump in Concord, and there must have been considerable flint in the voice of their commander when he ordered his antagonists to disperse. The minutemen held their ground.

Kurzweil is a direct spiritual descendant of those rebellious and determined Americans.

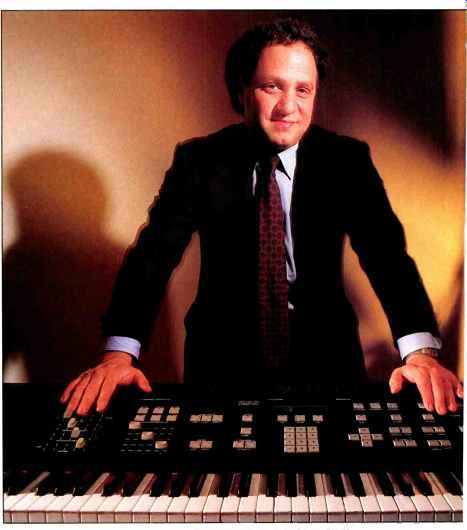

Boyish, indeed almost cherubic, dressed in a sleek Italian suit and sporting a Mickey Mouse watch, he hardly fits the image of a revolutionary. Nonetheless, this 40-year-old M.I.T. graduate has been compared to Edison and Marconi, and is a veritable prophet of change.

Raymond Kurzweil, who dreamed the future and is now helping to create it, has announced that the second industrial revolution is at hand.

The key difference between this new industrial revolution and the one that preceded it, Kurzweil explains, is that "the first involved machines which multiplied our physical capabilities and extended the reach of our muscles. The new age is multiplying our mental capabilities."

Kurzweil is an artificial-intelligence specialist who invents machines that think: "Power tools for the mind," he's fond of calling them. In the early '70s, he developed a device that may be the most important innovation for the blind since Braille. Using a technique known as pattern recognition, which allows a computer to identify the most basic characteristics of sounds or images--in this case, the letters of the alphabet--his machine identifies the words on a printed page, regardless of typeface, and reads them aloud.

More recently, Kurzweil produced what might be seen as the converse of his reading machine, a voice-activated word processor.

As you speak to it, your words appear on a computer screen, and, if you wish, they can be printed out in hard copy. The device can recognize 20,000 words.

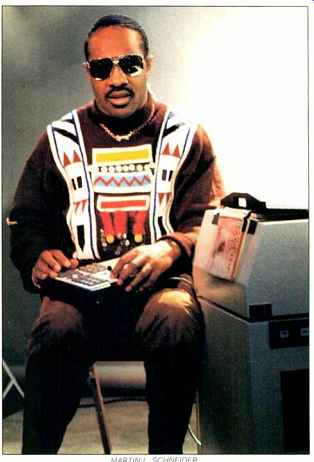

Between these two inventions, Kurzweil took a detour through the world of computerized musical instruments. Prompted by singer Stevie Wonder, an appreciative user of his reading machine, he developed a synthesizer known as the Kurzweil 250. Through digital sampling techniques, it not only reproduces the sound of a grand piano but can be programmed to sound like any instrument played into its memory.

Moreover, a single person can use the device to imitate dozens of instruments played simultaneously. In one instance, the 250 was programmed to simulate a 61-piece orchestra and was then used by an opera company in two choral works. Not surprisingly, this capability has led to controversy.

When Broadway producers negotiated with the musicians' union in 1987, the technology this synthesizer embodies was a focal point in their bargaining sessions.

Not long after Local 802 of the American Federation of Musicians hammered out its pact with the barons of Broadway, Kurzweil addressed a convocation of students entering Boston's Berklee College of Music. After reminding his audience that their art has always employed "the most advanced technologies available, from the cabinet-making crafts of the 18th century ... to the digital electronics and artificial intelligence of the 1980s and 1990s," the inventor noted that "the advent of this most recent wave is making historic changes in the way music is created." It was to ask about these historic changes, to gain insight into their implications, both for music and for those who create it, that I went to Waltham for a talk with Raymond Kurzweil.

-D.L.

Music has been more or less a lifelong interest of yours.

It has. I come from a musical family. My father was a concert pianist, a conductor with the Bell Symphony, the Queens Concert Orchestra, and opera companies in Pittsburgh and in Mobile, Alabama.

He was founder and chairman of the Queensborough College Music Department.

I learned the piano from him starting at age 6, and I was constantly going to concerts where he and his colleagues were performing.

And your interest in computers goes almost as far back.

I've been interested in computers since around the age of 12 and quickly became interested in pattern recognition. In fact, I did a project in high school applying pattern recognition to the structure of melodies, trying to determine what makes a certain sequence of notes and rhythms a pleasing melody and what makes other sequences displeasing. I particularly wanted to find those patterns that characterize different styles of music.

---- Stevie Wonder using the Kurzweil Reading Machine, an innovative device developed to assist the blind.

You were involved in pioneering work in the field of artificial intelligence when Stevie Wonder got you back into music. Tell us how that came about.

I had known Steve for a number of years, since he was a fan of the Kurzweil reading machine and one of its earliest users. We had a number of occasions to talk about technology in general and, in particular, technology applied to the creation of musical sounds. He's quite knowledgeable about electronics, computers, synthesizers, and other elements of music technology, and at one point he was lamenting the fact that there were two worlds of musical instruments without any viable bridge between them. There was the world of acoustic instruments, which at that time--and this is still the case today--provided the musical sounds of choice, sounds which are complex, rich, and musically relevant. But it did not provide very effective means for controlling those sounds. Most instruments, such as the saxophone or violin, cannot even be played polyphonically.

You can only play one note at a time; there are limited means for sound modification.

You can do vibrato on a violin and not much else. In fact, most musicians can't even play most instruments, since most don't have a wide variety of playing techniques.

You've contrasted this with the world of electronic musical instruments.

The electronic world was the total converse of this. All the things you could not do in the acoustic world, you could do in the electronic world. All sounds could be played polyphonically, and you could layer any sound with any other sound. You could apply virtually any sound modification technique to any sound source. However, at that time, the sounds that you could apply all these techniques to were very thin. These sounds had found their place in popular and classical music, but they were of limited complexity and could not capture the complexity and musical depth of acoustic sounds. So basically, Steve's challenge was, "Wouldn't it be neat if we could apply all of these control and sound modification techniques that the electronic world provides to acoustic sounds." You could create a whole new class of sounds which would have the complexity of acoustic sounds, because they would start acoustically but would be so modified that they would not be recognizable as the original instruments. There would be a whole new class of sounds that would have equal relevance to music but would be sounds no acoustic instrument could produce.

This occurred around 1982, when you were headed in a different direction.

What impelled you to actually go to work on a synthesizer that could do this?

Shortly before my father died, he began to get excited about the application of computers to music, which at that time was not a very popular concept. He felt that someday I would combine these two interests. And I've always had in the back of my mind that there was some linkage between computers and music. I became excited about what Steve was talking about and understood the potential it would have. I began to explore the concept and began to believe that it was feasible. With that realization, I examined the market potential, which seemed explosive. The whole marketplace seemed poised for an historic transformation from what, at that time, was almost entirely acoustic technology to digital electronic technology. And indeed that is now taking place.

What advantages do digital electronics have over analog in the creation of musical sounds?

You have very precise control over sounds--you can create sounds of arbitrary complexity. You can very precisely apply several dozen sound modification techniques, and you can use techniques that are theoretically well grounded. Analog electronics is a bit of a black art. It's not always predictable what the techniques will do, and there's no way of taking a sound, analyzing it, and then re-synthesizing it--it's a bit of hit and miss. With digital techniques, we can really understand how the sounds are constructed, analyze them in the frequency domain (which is the way in which our brains understand them), make any modifications we wish, and reconstruct them. In the digital domain, you can emulate all of the techniques that analog synthesis provides, but you also can do a lot more, and you can deal with much more complex phenomena. Using analog techniques, it's really not possible to accurately re-create, for example, a grand piano. But it is possible in the digital domain.
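The analyze, modify, and reconstruct cycle described in this answer can be sketched with a plain discrete Fourier transform. This is only an illustration under stated assumptions: the 64-sample test tone, the bin numbers, and the function names are hypothetical and are not taken from the Kurzweil 250.

```python
import cmath
import math

def dft(x):
    """Analyze: convert time-domain samples to complex frequency bins."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(bins):
    """Reconstruct: convert frequency bins back to real time-domain samples."""
    n = len(bins)
    return [(sum(bins[k] * cmath.exp(2j * math.pi * k * t / n)
                 for k in range(n)) / n).real
            for t in range(n)]

N = 64  # hypothetical analysis window length

# Hypothetical test tone: a fundamental in bin 4 plus a weaker overtone in bin 8.
signal = [math.sin(2 * math.pi * 4 * t / N) + 0.5 * math.sin(2 * math.pi * 8 * t / N)
          for t in range(N)]

spectrum = dft(signal)

# Modify: silence the overtone (bin 8 and its mirror image, bin N - 8).
modified = list(spectrum)
modified[8] = 0
modified[N - 8] = 0

resynthesized = idft(modified)

# The result should match the bare fundamental almost exactly.
fundamental_only = [math.sin(2 * math.pi * 4 * t / N) for t in range(N)]
max_error = max(abs(a - b) for a, b in zip(resynthesized, fundamental_only))
```

Because every step is arithmetic on numbers, the edit is exact and repeatable, which is the contrast the answer draws with the "hit and miss" of analog methods.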

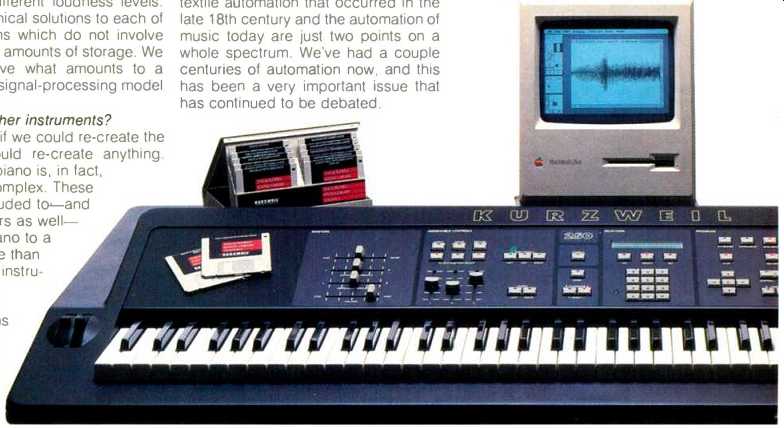

-------- The Kurzweil 250 (in loft at left) is dwarfed by world's largest organ, in a Philadelphia department store.

Wasn't the re-creation of a grand piano a key goal when you were working on the Model 250?

That was the quintessential goal. We recognized that the piano was the most difficult instrument to re-create.

Why is that?

The reason is that you can't do it using conventional sampling techniques alone. Let me throw out a few of the technical problems. The overtones in a piano are not perfect multiples of the fundamental; there are what are called inharmonic partials. A popular technique to save memory in sampling is to just capture the transients in the attack portion of a note and then, once the attack is over, to loop the last one or two waveforms--in other words, to let the note decay while just repeating the last couple of waveforms. When you do this, all of the overtones become perfect multiples of the fundamental and you lose the inharmonicity of the partials. Yet if you don't loop, if you just record the entire 15- or 20-second evolution of each piano note, that chews up too much memory, because the spectrum actually changes for 15 or 20 seconds. That's one problem. Another is that the sound decays, and fixed-point samplers have a fixed noise floor.

As the note decays, the signal decays into the noise. The perceptual phenomenon that occurs is, it sounds like the hiss in the background is actually growing as the note decays. That's quite objectionable and obviously doesn't happen on a real piano. Furthermore, the time-varying sound a particular note makes actually changes at different loudness levels.

When you hit middle C hard, a completely different time-varying timbre is produced than when you hit it softly.

And the timbres also change quite significantly at different pitches as well. If you were to just use brute force techniques to capture all of these phenomena you would need hundreds, perhaps thousands, of times more storage than is really feasible in a practical digital synthesizer.
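The first problem in this answer, overtones that are not exact multiples of the fundamental, can be made concrete with the textbook stiff-string approximation, in which partial n sits at n * f1 * sqrt(1 + B * n^2). The sketch below is illustrative only: the coefficient B is a hypothetical value, not a measurement of any piano, and the comparison assumes that a repeated single-cycle loop is exactly periodic, so that only integer multiples of the loop frequency survive.

```python
import math

F1 = 261.63   # approximate frequency of middle C in Hz
B = 0.0004    # hypothetical inharmonicity coefficient, chosen for illustration

def stiff_string_partial(n, f1=F1, b=B):
    """Partial n of a stiff string: slightly sharper than n * f1."""
    return n * f1 * math.sqrt(1 + b * n * n)

def looped_partial(n, f1=F1):
    """Partial n of a looped single cycle: forced to an exact multiple."""
    return n * f1

def cents(f_a, f_b):
    """Interval from f_b up to f_a in cents (100 cents = one semitone)."""
    return 1200 * math.log2(f_a / f_b)

# How far each partial is pulled when the decay is replaced by a loop.
deviations = {n: cents(stiff_string_partial(n), looped_partial(n))
              for n in (1, 2, 4, 8, 16)}
```

With these assumed numbers the deviation grows steadily with partial number, reaching a large fraction of a semitone by the sixteenth partial, which is why looping audibly changes the character of the tone.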

What was your solution to all this?

A proprietary technology which is based on sampling but has specific techniques to overcome each of these problems. Our piano notes, for example, retain the inharmonicity of the partials throughout the entire duration of each sound. We don't have a fixed noise floor. Our noise floor drops as the signal drops, so our signal-to-noise ratio remains very high even as the notes decay. The time-varying timbre changes at different loudness levels.

We have technical solutions to each of these problems which do not involve using massive amounts of storage. We essentially have what amounts to a very complex signal-processing model of the piano.
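The noise-floor point can be illustrated with a small simulation. The interview does not say how the 250 achieves a floor that falls with the signal, so the sketch below is not Kurzweil's method. It simply compares a fixed 12-bit quantizer against a generic gain-scaled scheme (normalize, quantize, then restore the level); the bit depth, the test sine, and all function names are assumptions.

```python
import math

def quantize(x, bits):
    """Round x (nominally in [-1, 1]) onto a uniform grid of 2**bits steps."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step

def unit_sine(n=4096, cycles=37):
    """One full-scale test sine; 37 cycles avoids repeating sample phases."""
    return [math.sin(2 * math.pi * cycles * t / n) for t in range(n)]

def snr_db(clean, stored):
    """Signal-to-noise ratio in dB between clean samples and stored ones."""
    p_signal = sum(c * c for c in clean)
    p_noise = sum((s - c) ** 2 for c, s in zip(clean, stored))
    return 10 * math.log10(p_signal / p_noise)

def fixed_floor_snr(amplitude, bits=12):
    """Quantize the signal as-is: the error size ignores the signal level."""
    clean = [amplitude * u for u in unit_sine()]
    stored = [quantize(c, bits) for c in clean]
    return snr_db(clean, stored)

def scaled_snr(amplitude, bits=12):
    """Quantize at full scale, then apply the gain: the error shrinks too."""
    unit = unit_sine()
    clean = [amplitude * u for u in unit]
    stored = [amplitude * quantize(u, bits) for u in unit]
    return snr_db(clean, stored)

loud, quiet = 1.0, 0.01   # the quiet note is 40 dB below the loud one

fixed_loss = fixed_floor_snr(loud) - fixed_floor_snr(quiet)
scaled_loss = scaled_snr(loud) - scaled_snr(quiet)
```

In the fixed case the quiet note gives up roughly as many decibels of signal-to-noise ratio as it has decayed, which is the rising hiss described above; in the scaled case the ratio stays essentially constant.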

What about other instruments?

We knew that if we could re-create the piano, we could re-create anything, because the piano is, in fact, much more complex. These problems I alluded to--and there are others as well--exist in the piano to a greater degree than in some other instruments. If you could solve these problems with a piano, you could do a very good job of re-creating any instrument.

In fact, can't the 250 be used to create any sound at all?

Yes, but the point I want to make is it's not just re-creating sounds. It's re-creating instruments and their entire response to such things as changes in pitch and loudness and to other means of sound modification. An instrument is not just creating a sound; it is creating a myriad of sounds. To simply capture all that by recording it would exhaust computer memories, even at today's low prices.
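A rough calculation shows why recording everything is impractical. Only the 20-second note length comes from the interview; the sample rate, sample width, and number of loudness levels below are assumptions picked for illustration and are not specifications of the 250.

```python
KEYS = 88                 # keys on a standard piano
LOUDNESS_LEVELS = 8       # hypothetical number of separately recorded dynamics
SECONDS_PER_NOTE = 20     # upper figure quoted in the interview
SAMPLE_RATE_HZ = 44_100   # assumed sample rate
BYTES_PER_SAMPLE = 2      # assumed 16-bit mono samples

def recording_bytes(seconds_per_note):
    """Bytes needed to store every key at every loudness level."""
    samples_per_note = seconds_per_note * SAMPLE_RATE_HZ
    return int(KEYS * LOUDNESS_LEVELS * samples_per_note * BYTES_PER_SAMPLE)

# Brute force: keep the full 20-second evolution of every note.
brute_force = recording_bytes(SECONDS_PER_NOTE)

# Attack-only: keep a hypothetical half second per note and loop the rest.
attack_only = recording_bytes(0.5)

ratio = brute_force / attack_only
```

Under these assumptions the brute-force piano alone needs about 1.24 billion bytes, 40 times the attack-only figure, before any other instrument is stored.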

I'd like to explore some of the implications of this technology. Some people maintain that it's going to put a lot of musicians out of work.

This is the same debate they had in the English textile industry when the flying shuttle and some of the other automated textile machines were introduced in the late 18th century. There emerged a movement--called the Luddite movement--led by Ned Ludd, who made the same sort of points. It was patently obvious that employment was negatively affected, and in fact ultimately would be devastated by these new machines, because one machine with one operator could do the work of 10 or 20 persons. You could point to all these people who had previously spun wool thread and woven cloth who now were thrown out of work because of these new machines. Yet, paradoxically, employment in England at that time rose dramatically, and there was an era of prosperity, which in fact was the main reason the Luddite movement died out. I think that's very analogous to what's happening now. The English textile automation that occurred in the late 18th century and the automation of music today are just two points on a whole spectrum. We've had a couple of centuries of automation now, and this has been a very important issue that has continued to be debated.

-------- The Kurzweil 250 synthesizer is a complex signal-processing model of the piano.

So you think this issue should be put in historical perspective.

Let me examine it first in the broad scale of automation, and then let me specifically address the impact on music today. The bulk of automation that has occurred in history has occurred over the last hundred years. That's when we've seen all the dramatic automation of machines that could replace our muscles as well as machines that now begin to amplify our mental processes. And the impact on employment over the last hundred years has been the same as in England when the textile industry was automated. In 1870, we had 10 million jobs comprising 30% of the population. Today, we have 120 million jobs comprising nearly 50% of the population. Moreover, the average wage of these jobs today--in constant dollars--is six times what it was a hundred years ago. There is much more wealth, and this has led to expansion both in the private and public sectors. We have huge programs, from Social Security to Medicare, that didn't even exist a hundred years ago.

We couldn't have afforded them then, but we can afford them today because of the increases in economic power and efficiency that automation has brought along with it. And interestingly, even though machines seem to put people out of work, there are many more people working.

Albeit at different jobs.

At more interesting jobs. Jobs that require more education. A lot of the new jobs, in fact, have been in education, to provide the higher level of skill that today's jobs require. Today, we have 5 to 6 million college students. We had only 1% of that number a hundred years ago. Let's look at this phenomenon in terms of music, where the same thing is going on. People can create music more effectively and with greater productivity. If you want to weave cloth, as it were, the way it was done 10 years ago, you may find yourself out of work. On the other hand, any musician I know who has bothered to learn the new methodologies of creating music has been besieged with opportunities and is very much in demand. I'll give you just a few examples of new opportunities that didn't exist 10 years ago. There's nearly $10 billion a year spent in this country on making industrial, government, and training films.

Ten years ago, all these films used public domain recordings for their background music. Today, they actually hire someone to create an original soundtrack on a synthesizer, and that provides a musician with a job which didn't exist before. There's a whole cadre of musicians who do nothing but create new musical sounds, whereas 10 years ago, people pretty much limited themselves to the handful of timbres that were available from conventional instruments. Today there's a whole industry of creating new timbres, and there's hardly a pop song that comes along that doesn't have some entirely new sounds. Pop groups I know that acquire these types of synthesizers don't immediately halve their group's size. They keep their size the same, but they put out a more interesting, more dramatic sound. They feel that's good for their business--and indeed it is. Music is becoming more exciting because of this technology, and thus the overall demand and market for music has expanded, and revenues in the music and recording industries have gone up. Overall, the employment opportunities for musicians are greater now than they were 10 years ago--and are more diverse.

Just how complicated is learning these new methodologies likely to be for people trained on traditional instruments?

A strong understanding of the fundamentals of music--of music theory, playing techniques, music traditions--continues to be the best possible preparation for creating music, regardless of the instrument used. Musicians who have a solid grounding generally have little trouble adapting to the new technology. Computer-based keyboards, for example, have been engineered specifically to support the playing techniques that have come before them.

We'd have a hard time selling our instruments if piano keyboard technique were not so widely known.

Sampling makes it possible for an artist to exactly reproduce someone else's sounds. Frank Zappa, in fact, has taken to printing a specific prohibition against this on his recordings. What are the legal implications here?

The copyright protection that's available is implied, in any event, whether Frank Zappa stamps a copyright notice on there or not. The copyright laws are fairly clear, although their interpretation is less so. If somebody produces some original work that shows ingenuity and creativity, he or she owns that.

And if somebody copies a substantial enough portion of a musical work, then that would be an infringement. In an extreme case, if you sample 30 seconds of a recording, I don't think anybody would disagree that that's infringement. Exactly at what point copying becomes infringement is less clear: you can't copy 30 seconds, but can you copy 1 second? Is a tenth of a second too much? These are questions the courts will have to sort out. The principle is, if you're copying enough to be really borrowing some unique expression of creativity from another artist, you're probably infringing. The opportunity to do that certainly existed before samplers.

With the increasing availability of sampling, will infringement become more widespread?

I personally don't feel it's going to be a major problem. Musical expression is not something you can easily copy just by lifting a second or two of someone else's performance. Musical expression is a whole vocabulary, a whole language, and a great musical artist evidences that by the way he or she responds to a broad diversity of musical situations. I don't think you can capture the essence of a musical artist in 1 or 2 seconds.

As the inventor and manufacturer of the 250, have you ever been or do you see yourself becoming party to a copyright suit?

No more than the manufacturer of a photocopier would be liable for someone copying printed material.

In a sense, music performers have historically been forced into a role similar to that of bodybuilders or other athletes; they've had to spend an inordinate amount of time perfecting technique. It would seem the interaction of computers and electronic instruments can free them from this, at least in part.

This is one of the key changes that the new musical technologies bring forth.

There are many dramatic changes in the way music is and can be created with the new tools we have now. The barrier of fine motor coordination is certainly being overcome. A great deal of music required nearly superhuman feats of coordination and could not be produced otherwise. Today, with sequencers, we have the ability to play a line of music at a slow speed and then to speed it up without changing the pitch or other characteristics. We have the ability to edit out mistakes, to make modifications after the fact, to massage a piece of music the same way a writer would massage a piece of prose. This is dramatically changing the way music is created and is allowing people who do not have highly developed technical skills to create music. There are many people who may not have highly developed motor coordination but who nonetheless can express ideas in a musical form. There are other changes in the way music is being created. For centuries, there has been a strong link between playing technique and the sounds created. If you wanted flute sounds, you were stuck with flute-playing technique; if you wanted violin sounds, you had to master violin technique. Now, it's really possible to use any technique and create any sounds. You could have the technique of a wind instrument and [with a device known as a wind controller] create the sounds of virtually any acoustic or electronic instrument.

It's obvious you can do that from a keyboard. There are music controllers that are emulating the playing technique of many acoustic instruments.

We're really breaking that link between playing technique and the sounds created, because we're no longer restricted by the physics of creating the sounds acoustically.
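The sequencer point made earlier in this answer, playing a line slowly and then speeding it up without changing the pitch, follows from storing notes as events rather than as recorded sound. The sketch below assumes a hypothetical event format (start and length in beats, pitch as a note number with 60 standing for middle C); it is not the data format of any particular sequencer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NoteEvent:
    start_beats: float    # when the note begins, measured in beats
    length_beats: float   # how long it is held, measured in beats
    pitch: int            # hypothetical note number, 60 = middle C

def schedule(events, tempo_bpm):
    """Convert beat positions to seconds; pitch passes through untouched."""
    seconds_per_beat = 60.0 / tempo_bpm
    return [(e.start_beats * seconds_per_beat,
             e.length_beats * seconds_per_beat,
             e.pitch)
            for e in events]

# A short line entered at a comfortable practice tempo.
line = [NoteEvent(0, 1, 60), NoteEvent(1, 1, 64), NoteEvent(2, 2, 67)]

slow = schedule(line, tempo_bpm=60)    # as played in
fast = schedule(line, tempo_bpm=180)   # three times faster on playback
```

Only the timing columns change between `slow` and `fast`. A tape sped up by the same factor would also raise every pitch, which is the limitation the event representation avoids.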

You've demonstrated a genuine interest in helping handicapped people. Do you envision these new music technologies helping someone who has developed arthritis of the hands?

There's no question that somebody who has lost--or never had--the coordination or physical skills necessary to play an instrument now has the potential to create music.

Computers have wrought enormous changes in the process of musical composition as well as performance, haven't they?

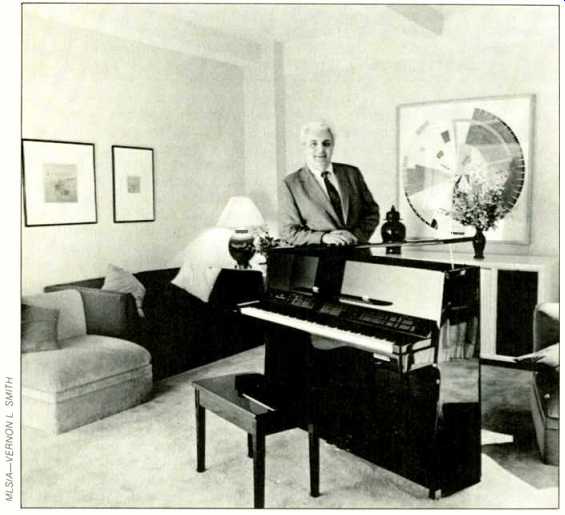

-------- Robert Moog, of synthesizer fame and V.P. of Kurzweil Music, and the Kurzweil Ensemble Grande.

Right. Composing is now an interactive process. You can hear the work as it's being composed, alter it, try out experiments, and hear them in real time.

You can go through dozens of experiments in a matter of an hour or two in the comfort of your own bedroom. My father was a composer, and the process was incredibly difficult. He had to write a piece of music and imagine the whole thing in his head. He'd write out the notation, which was a very elaborate process, get it reproduced, gather a group of musicians--there'd be arguments about funding--and then finally he would get to hear his work and realize he wanted the bassoons to come in four measures earlier. So he'd dismiss all the musicians, gather up the notation, write the whole thing out again--and you literally had to write the whole thing over because there were no music notation processors that would just modify it--make the changes he wanted, have more arguments about funding, and get the musicians back again. Finally he'd hear the bassoons coming in earlier, but he'd have some other idea. Today, he could make that change at the touch of a few buttons and hear it immediately.

How does artificial intelligence fit into all this?

Well, we are developing "musicians' assistants" and "composers' assistants" which can perform some of the chores of creating music through systems that are programmed with knowledge of music theory and other elements of music. For example, there are very advanced notation packages coming out now that actually have a knowledge of music and therefore can intelligently take a computer's music sequence and properly notate it. There are systems that can take a melody and generate a walking bass line, an appropriate rhythm pattern, or harmonic progressions which may only be suggestions to the human composer.

The human composer can take these ideas and modify them or add his or her own creativity. But these systems essentially improve the productivity of the composition process by doing some of the rote work automatically.
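As a toy illustration of the rote work such an assistant might take over, the sketch below builds a four-beat walking bass bar for each chord in a progression. It simplifies the task described above by starting from chord symbols rather than a melody, and its rule (root, third, fifth, then a note one semitone below the next root) is one common textbook pattern, not the logic of any actual product. The chord table, note numbers (36 standing for a low C), and function name are all hypothetical.

```python
# Intervals in semitones above the root for a few chord qualities.
CHORD_TONES = {
    "maj": (0, 4, 7),
    "min": (0, 3, 7),
    "dom7": (0, 4, 7, 10),
}

def walking_bass(progression, low_c=36):
    """Return one bar of four bass notes per chord in the progression.

    Each chord is (root_pitch_class, quality), with the root counted in
    semitones above C. The last chord approaches the first one again.
    """
    bars = []
    for i, (root_pc, quality) in enumerate(progression):
        root = low_c + root_pc
        tones = CHORD_TONES[quality]
        third = root + tones[1]
        fifth = root + tones[2]
        next_root = low_c + progression[(i + 1) % len(progression)][0]
        approach = next_root - 1   # chromatic step leading into the next bar
        bars.append([root, third, fifth, approach])
    return bars

# A ii-V-I progression in C: D minor, G dominant seventh, C major.
bass_line = walking_bass([(2, "min"), (7, "dom7"), (0, "maj")])
```

The output is only a suggestion in the sense described above: a composer could accept a bar, change the approach note, or discard the line entirely.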

Computers have given birth to something known as algorithmic composition. Precisely what is that?

In algorithmic composition, the composer doesn't specify explicitly all the notes to be played but lays out rules or procedures called algorithms that will, in turn, generate the music. This allows the music to perhaps be different every time it's heard. It's a different way of expressing musical ideas, of expressing them at a different level and using the computer essentially as a collaborator.
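A minimal sketch of the idea, assuming a deliberately simple rule set: begin and end on the tonic, stay inside one octave of C major, and move by at most two scale degrees at a time. The rules, the note numbers (60 standing for middle C), and the function name are hypothetical and stand in for the far richer procedures a real composer would write.

```python
import random

# One octave of C major as note numbers, tonic to tonic.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]

def compose(length=8, seed=None):
    """Generate a melody from rules instead of an explicit list of notes."""
    rng = random.Random(seed)   # seed=None gives a different result each run
    degree = 0                  # rule 1: begin on the tonic
    melody = [C_MAJOR[degree]]
    for _ in range(length - 2):
        # Rule 2: move up or down by one or two scale degrees.
        step = rng.choice([-2, -1, 1, 2])
        # Rule 3: stay within the octave, so a note may repeat at the edges.
        degree = min(max(degree + step, 0), len(C_MAJOR) - 1)
        melody.append(C_MAJOR[degree])
    melody.append(C_MAJOR[0])   # rule 4: end on the tonic
    return melody

first_hearing = compose()          # unseeded: may differ on every call
fixed_version = compose(seed=1)    # seeded: the same melody every time
```

The composer's contribution here is the set of rules; the individual notes are the computer's, which is the sense in which the machine acts as a collaborator.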

Does the emergence of a technique like this indicate that we're heading toward music that's more mathematical than emotional, more expressive of rules than of human feeling?

Let's get back to fundamentals. Music is, and will remain, a special form of communication between the artist and his or her audience, using elements of sound, melody, rhythm, harmony, and timbres. The new technology which computers are providing is greatly expanding the expressive possibilities that musicians have at their disposal.

Musical instruments which are computer based provide greater modalities of expressiveness than acoustic instruments. In fact, acoustic instruments are very limited in this respect. Each acoustic instrument provides a rather limited repertoire of ways for controlling the sounds and modifying them and translating human expressivity into sound, whereas computer-based instruments provide more freedom for control over musical expression. Twenty years ago, the number of different timbres that a musician had to use for orchestration was measured in dozens, whereas today there are many thousands of timbres that we actually hear in music. And there are means readily available for creating a virtually unlimited number. In fact, musicians can now craft their own sounds, which was never possible before. Musicians can use a wide range of music controllers which are very expressive. We now have keyboards that not only measure the velocity of the flight of each key, but actually measure the amount of pressure each finger places on it.

This is a very powerful form of expressiveness that an ordinary piano keyboard doesn't have. A piano keyboard only measures velocity. Even the fairly superficial use of a modern synthesizer provides levels of expressivity and choices that go beyond what a conventional piano offers.

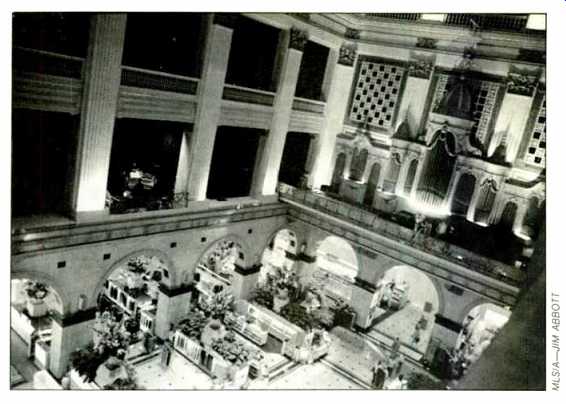

------- Olga Espinola, one of the first blind professionals to use the Xerox/Kurzweil Personal Reader, which can read printed material aloud.

I attended a symposium recently where several leading recording engineers addressed the issue of new technology. One of them said that there's still no substitute for a good keyboard player. Do you agree?

Absolutely. The technology is not in any way replacing the musician. The musician remains an irreplaceable part of the process. In fact, he or she is the communicator of human ideas and emotions. The reason that a musical group of, let's say, five people, after they acquire this type of technology and are able to put out a much richer sound, do not reduce the size of their group is because they need five people's worth of musical ideas.

Do you see live performances, as we know them, becoming obsolete?

Performing music live and in real time will continue to be a special form of expression.

And acoustic instruments? What future do you see for them?

There's no question that acoustic sounds will be around for a long time. I see variations of those sounds also in common use. A lot of sounds we call synthetic will actually have an acoustic base to them. Somebody will have started with an acoustic piano or some other sound and modified it, so it sounds totally original but nonetheless gains its complexity and musical relevance from the fact that it started as an acoustic sound. However, I think we're rapidly moving toward a time when electronic instruments will be able to provide all the functionality and tone quality of acoustic instruments, along with many layers of capability that they do not provide. I don't think we're that many years from the point where 90% of what has been an acoustic market will be a digital market in which acoustic instruments will have the role that harpsichords do now. They'll still be made in small quantities, but they won't be the primary way that music is created.

As time passes, what are some of the things we can expect to see in electronic musical devices?

The quality of sound is certainly improving. There's a great deal of work being done on the human factors of these instruments, because creating music is a very personal activity and optimizing the interaction of musician and instrument is vital. Optimizing expressiveness is a major issue now. Another major challenge is to make these instruments use music terminology and techniques, and not have them appear to be computers--to make them appear less technical and more intuitive. A lot of work is going into that aspect of these instruments.

It's tempting to compare you to Bartolommeo di Francesco Cristofori, the inventor of the piano. How do you see your historic role in the creation of musical instruments?

Well, our goal was to re-create the functionality of acoustic instruments in a digital, computer-based instrument.

We feel we've achieved that objective.

Our second goal has been to cost-reduce that technology so that a very large number of people can actually gain access to it. Our objective is to build up a major company with a worldwide scope and to be a leader of what we feel is an historic transformation of musical instruments.

(Source: Audio magazine, Jan 1989)
