Introduction

The CD system is one rare example of international co-operation leading to a standard that has been adopted worldwide, something that was notably lacking in the first generation of videocassette recorders. The CD system did not spring from work that was mainly directed to audio recording but rather from the effort to create video discs that would be an acceptable alternative to videocassettes. At the time when competing video disc systems first became available, there were two systems that needed mechanical contact between the disc and a pickup, and one that did not. The advantages of the Philips system, which used a laser beam to avoid mechanical contact, were very obvious, though none of the video disc systems was a conspicuous commercial success despite their superior picture and sound quality compared with videocassette recorders. The work on video discs was carried out competitively, but by the time the effort started to focus on the potentially more marketable idea of compact audio discs, the case for uniformity had become compelling. Audio systems have never known total incompatibility in the sense that we have experienced with video or computer systems. If you buy an audio record or a cassette anywhere in the world, you do not expect to have to specify the type of system that you will play it on. Manufacturers realized that the audio market was very different from the video market.

Whereas videocassette recorders, when they appeared, did something that had not been possible before, audio compact discs would be competing with existing methods of sound reproduction, and it would have been ridiculous to offer several competing standards.

In 1978, a convention dealing with digital disc recording was organized by some 35 major Japanese manufacturers, who recommended that development work on digital discs should be channeled into twelve directions, one of which was the type proposed by Philips. The outstanding points of the Philips proposal were that the disc should use constant linear velocity recording, eight-to-fourteen modulation, and a new system of error correction called Cross-Interleave Reed-Solomon Code (CIRC). The use of constant linear velocity recording means that, no matter whether the inner or the outer tracks are being scanned, the rate of digital information should be the same, and Philips proposed to do this by using a steady rate of recording or replaying data and varying the speed of the disc at different distances from the centre. You can see the effect of this for yourself if you play a track from the start of a CD (the inside tracks) and then switch to a track at the end (outside). The reduction in rotation speed when you make the change is very noticeable, and this use of a constant digital rate makes for much simpler processing.

By 1980, Sony and Philips had decided to pool their respective expertise, using the disc modulation methods developed by Philips along with signal-processing systems developed by Sony. The use of a new error-correcting system along with higher packing density made this system so superior to all other proposals that companies flocked to take out licenses for the system. All opposing schemes died off, and the CD system emerged as a rare example of what can be achieved by co-operation in what is normally an intensely competitive market.

The optical system

The CD recording method makes use of optical recording, using a beam of light from a miniature semiconductor laser. Such a beam is of low power, a matter of milliwatts, but the focus of the beam can be to a very small point so that low-melting point materials like plastics can be vaporized by a focused beam. Turning the recording beam on to a place on a plastic disc for a fraction of a microsecond will therefore vaporize the material to leave a tiny crater or pit, about 0.6µm in diameter -- for comparison, a human hair is around 50 µm in diameter. The depth of the pits is also very small, of the order of 0.1 µm. If no beam strikes the disc, then no pit is formed, so that we have here a system that can digitally code pulses into the form of pit or no-pit. These pits on the master disc

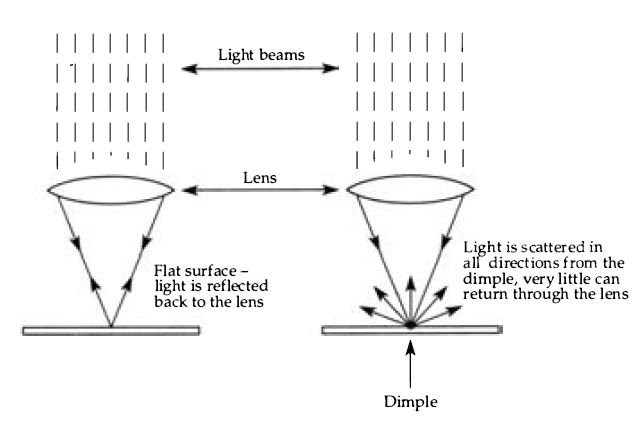

Figure 6.1 How light is reflected back from a flat surface through a lens, but scattered from a dimple. Very little of the scattered light will reach the lens. Flat surface - light is reflected back to the lens the dimple, very little can return through the lens are converted to dimples of the same scale on the copies. The pits/ dimples are of such a small size that the tracks of the CD can be much closer - about 60 CD tracks take up the same width as one LP track.

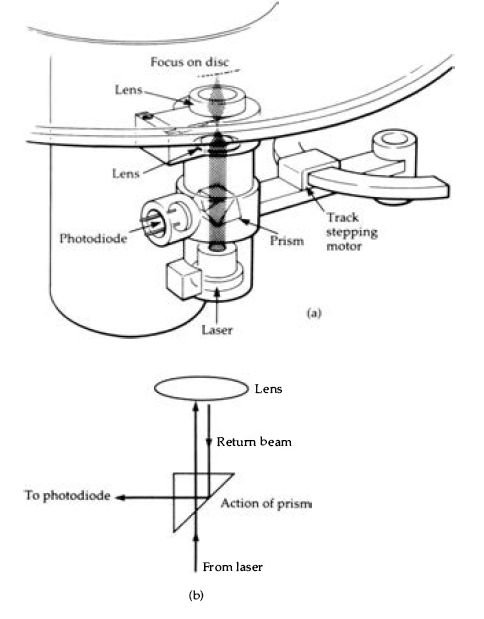

Reading a set of dimples on a disc also makes use of a semiconductor laser, but of much lower power since it need not vaporize material. The reading beam will be reflected from the disc where no dimple exists, but scattered where there is a dimple (Figure 6.1). By using an optical system that allows the light to travel in both directions to and from the disc surface (Figure 6.2), it is possible to focus a reflected beam on to a photodiode, and pick up a signal when the beam is reflected from the disc, with no signal when the beam falls on to a pit. The output from this diode is the digital signal that will be amplified and then processed into an audio signal. Only light from a laser source can fulfil the requirements of being perfectly monochromatic (one single frequency) and coherent (no breaks in the wave-train) so as to permit focusing to such a fine spot.
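The decision the photodiode circuit has to make can be sketched in a few lines. This is a minimal illustration, assuming one photodiode reading per recorded bit position and a fixed decision threshold of 0.5 (both hypothetical simplifications; a real player recovers its timing from the signal itself). A high reading means the beam was reflected from the flat surface, a low one that it was scattered by a dimple, and the positions where the level changes mark the edges of the pits.

```python
def read_levels(samples, threshold=0.5):
    """Turn photodiode readings into a two-level signal.

    True  = strong reflection (flat surface, no dimple)
    False = weak reflection (light scattered by a dimple)
    The fixed threshold is an illustrative assumption.
    """
    return [s >= threshold for s in samples]


def level_changes(levels):
    """Return the sample positions at which the two-level signal changes."""
    return [i for i in range(1, len(levels)) if levels[i] != levels[i - 1]]


# Hypothetical, slightly noisy photodiode readings (arbitrary units).
readings = [0.92, 0.88, 0.90, 0.21, 0.18, 0.24, 0.19, 0.87, 0.91, 0.89]
levels = read_levels(readings)
print(levels)
print(level_changes(levels))
```

Here the beam meets a dimple at the fourth reading and leaves it at the eighth, so the changes are reported at positions 3 and 7.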

The CD system uses a beam that is focused at quite a large angle, with a transparent coating over the disc surface which also focuses the beam as well as protecting the recorded pits (Figure 6.3). Though the diameter of the beam at the pit is around 0.5 µm, its diameter at the surface of the disc, the transparent coating, is about 1 mm. This means that dust particles and hairs on the surface of the disc have very little effect on the beam, which passes on each side of them, unless your dust particles are a millimeter across! This is one of the two ways in which the CD system establishes its very considerable immunity to dust and scratching on the disc surface, the other being its remarkable error detection and correction system.

Figure 6.2 (a) The optical path of light beams in the CD player. The optical components are underneath the disc, and the purpose of the prism, shown more clearly in (b), is to split the returning light so that some of it is reflected to the photocell.

Given, then, that the basic record and replay system consists of using a finely focused beam of laser light, how are the pits arranged on the disc, and how does the very small reading spot keep track of them? To start with, the pits are arranged in a spiral track, like the groove of a conventional record. This track, however, starts at the inside of the disc and spirals its way to the outside, with a distance between adjacent tracks of only 1.6 µm.

Figure 6.3 The lens arrangement, illustrating how the light beam is focused by both the lens and the transparent coating of the disc. The effect of this is to allow the beam to have a comparatively large diameter at the surface of the disc, reducing the effect of dirt or scratches.

Since there is no mechanical contact of any kind, the tracking must be carried out by a servo-motor system. The principle is that the returned light will be displaced if there is mistracking, and the detector unit contains two other photodiodes to detect signals that arise from mistracking in either direction. These signals are obtained by splitting the main laser beam to form two side-beams, neither of which should ever be deflected by the dimples on the disc when the tracking is correct. These side beams are directed to the photodiodes, and the signals from these diodes control the servo-motors in a feedback loop, so that corrections are made continually while the disc is being played. In addition, the innermost track carries the positions of the various music tracks in the usual digitally coded form. Moving the scanner system radially across the tracks will produce signals from the photodiode unit, and with these signals directed to a counter unit, the number of tracks from the inner starting track can be counted. This allows the scanner unit to be placed on any one of the roughly 20,600 turns of the spiral (the 33 mm between the innermost and outermost track radii divided by the 1.6 µm track spacing).
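The tracking loop described above can be illustrated with a toy model. This is a sketch only: the linear response of the side-beam photodiodes, the `slope` and `gain` values, and the purely proportional correction are all hypothetical simplifications (a real servo also filters the error signal). The point it shows is that the difference between the two side-beam signals is zero on track and changes sign with the direction of the error, so feeding it back steadily pulls the spot on to the track.

```python
def side_beam_signals(offset_um, slope=1.0):
    """Hypothetical linear model of the two side-beam photodiodes.

    With the reading spot centred on the track (offset 0) both side beams
    return the same amount of light; a sideways offset brightens one and
    dims the other. The linear slope is an illustrative assumption.
    """
    e = 0.5 + slope * offset_um
    f = 0.5 - slope * offset_um
    return e, f


def track(offset_um, gain=0.3, steps=20):
    """Proportional feedback loop: nudge the pickup against the error."""
    history = [offset_um]
    for _ in range(steps):
        e, f = side_beam_signals(offset_um)
        tracking_error = e - f          # zero on track; sign gives direction
        offset_um -= gain * tracking_error
        history.append(offset_um)
    return history


positions = track(0.4)                  # start 0.4 µm off the track centre
print([round(p, 4) for p in positions[:5]])
```

With these made-up values each correction removes 60% of the remaining offset, so the spot settles on the track within a few steps.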

Positioning on to a track is a comparatively slow operation because part of each track will be read on the way before the count number is increased. In addition, the motor that spins the disc is also servo-controlled so that the speed of reading the dimples is constant. Since the inner track has a diameter of 50 mm and the maximum allowable outer track can have a diameter of 116 mm, the number of dimples per track will also vary in this same proportion, about 2.32. The disc rotation at the outer edge is therefore slower by this same factor when compared to the rotational speed at the inner track. The rotational speeds range from about 500 rpm on the inner track to about 200 rpm on the outer track; the actual values depend on the servo control settings and do not have to be absolute in the way that the old 33 1/3 rpm speed had to be.

The only criterion of reading speed on a CD is that the pits are being read at a correct rate, and that rate is determined by a master clock frequency. This corresponds to a reading speed of about 1.2 to 1.4 meters of track per second.
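The figures in the last two paragraphs can be tied together with a short calculation. This sketch assumes the recorded band runs from the 50 mm to the 116 mm diameter quoted above and treats the spiral as a set of concentric circles 1.6 µm apart; it ignores lead-in and lead-out details. It gives the rotational speed needed at each radius for a constant scanning speed, the number of turns of the spiral, and the playing time that the spiral length allows.

```python
import math

TRACK_PITCH_M = 1.6e-6        # spacing between adjacent turns (from the text)
INNER_RADIUS_M = 0.050 / 2    # 50 mm diameter
OUTER_RADIUS_M = 0.116 / 2    # 116 mm diameter


def rpm_at_radius(radius_m, linear_speed_m_s):
    """Rotational speed that keeps the track passing at a constant linear speed."""
    return linear_speed_m_s / (2 * math.pi * radius_m) * 60


def spiral_turns():
    """Number of turns of the spiral across the recorded band."""
    return (OUTER_RADIUS_M - INNER_RADIUS_M) / TRACK_PITCH_M


def playing_time_minutes(linear_speed_m_s):
    """Approximate playing time: spiral length divided by scanning speed.

    The spiral length is taken as the area of the recorded band divided by
    the track pitch.
    """
    length_m = math.pi * (OUTER_RADIUS_M**2 - INNER_RADIUS_M**2) / TRACK_PITCH_M
    return length_m / linear_speed_m_s / 60


for v in (1.2, 1.4):
    print(f"{v} m/s: inner {rpm_at_radius(INNER_RADIUS_M, v):.0f} rpm, "
          f"outer {rpm_at_radius(OUTER_RADIUS_M, v):.0f} rpm, "
          f"about {playing_time_minutes(v):.0f} min")
print(f"turns of the spiral: {spiral_turns():.0f}")
```

At 1.2 m/s this gives roughly 458 rpm at the inner track and 198 rpm at the outer one (the 2.32 ratio again), about 20,600 turns, and a little under 75 minutes of playing time; at 1.4 m/s the playing time drops to about 64 minutes.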

The next problem is that of recording and replaying two channels, because the reading and writing system that has been described does not lend itself to twin-channel use with two tracks read by two independent scanners. This mechanical and optical impossibility is avoided by recording the two-channel information on one track, with samples from the two channels recorded alternately. This is made easier by the storage of data while it is being converted into frame form for recording: if you have one memory unit containing L-channel data and one containing R-channel data, they can be read alternately into a third memory ready for making up a complete frame. There is no need to take the samples in a phased way so as to preserve a time interval between the samples on different channels. As the computer jargon puts it, the alternation does not need to take place in real time.
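The alternation from two channel memories into a frame memory can be sketched as follows. The plain L, R, L, R ordering and the splitting of each 16-bit sample into a high byte followed by a low byte are assumptions made for this illustration; the real frame layout is defined in the licensed specification. Because both channel memories are already filled, the order of reading out is free, which is the sense in which the alternation is not done in real time.

```python
def split_word(word):
    """Split a signed 16-bit sample into (high byte, low byte)."""
    unsigned = word & 0xFFFF                # two's-complement bit pattern
    return unsigned >> 8, unsigned & 0xFF


def assemble_frame(left, right):
    """Alternate six left and six right samples into 24 bytes.

    The simple L, R, L, R ... order is an illustrative assumption, not
    the arrangement used on a real CD.
    """
    if len(left) != 6 or len(right) != 6:
        raise ValueError("a frame carries six samples per channel")
    frame = []
    for l_sample, r_sample in zip(left, right):
        for sample in (l_sample, r_sample):
            frame.extend(split_word(sample))
    return frame


left_memory = [100, 200, 300, 400, 500, 600]     # hypothetical samples
right_memory = [-1, -2, -3, -4, -5, -6]
frame = assemble_frame(left_memory, right_memory)
print(len(frame), frame[:4])
```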

We now have to deal with the most difficult parts of the whole CD system. These are the modulation method, the error-detection and correction, and the way that the signals are assembled into frames for recording and replay. The exact details of each of these processes are released only to licensees who buy into the system.

This is done on a flat-rate basis - the money is shared out between Philips and Sony, and the licensee receives a manual called the 'Red Book' which precisely defines the standards and the methods of maintaining them. Part of the agreement, as you might expect, is that the confidentiality of the information is maintained, so that what follows is an outline only of methods. This is all that is needed, however, unless you intend to design and manufacture CD equipment, because for servicing work you do not need to know the circuitry of an IC or the content of a memory in order to check that it is working correctly.

The error-detection and correction system is called CIRC -- Cross-Interleave Reed-Solomon Code. This code is particularly suitable for the correction of what are termed 'long burst' errors, meaning the type of error in which a considerable amount of the signal has been corrupted. This is the type of error that can be caused by scratches, and the code used for CDs can handle errors of up to 4000 bits, corresponding to a 2.5 mm fault on the disc lying along the track length. At the same time, a coding that required too many redundant bits to be transmitted would cramp the expansion possibilities of the system, so CIRC adds only one redundant bit for every three bits of data, which is described as an efficiency of 75%. Small errors can be detected and remedied, and large errors are dealt with by synthesizing the probable waveform on the basis of what is available in the region of the error.
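One simple way of "synthesizing the probable waveform" is linear interpolation between the nearest good samples on each side of the damaged run. The sketch below assumes that the error decoder hands over a set of flagged sample positions; the fallbacks (hold the good value when only one side is available, mute when nothing is) are illustrative choices, not the method prescribed for CD players.

```python
def conceal(samples, bad):
    """Replace flagged samples by linear interpolation between good neighbours.

    samples : list of ints (one channel)
    bad     : set of indices flagged by the decoder as uncorrectable
    Fallbacks (illustrative): hold the good value if only one side is good,
    output zeros (mute) if there is no good sample at all.
    """
    out = list(samples)
    n = len(samples)
    i = 0
    while i < n:
        if i not in bad:
            i += 1
            continue
        start = i
        while i < n and i in bad:
            i += 1
        before = start - 1          # last good index before the run (or -1)
        after = i                   # first good index after the run (or n)
        for k in range(start, i):
            if before >= 0 and after < n:
                frac = (k - before) / (after - before)
                out[k] = round(samples[before] + frac * (samples[after] - samples[before]))
            elif before >= 0:
                out[k] = samples[before]
            elif after < n:
                out[k] = samples[after]
            else:
                out[k] = 0
    return out


damaged = [0, 10, 999, 999, 40, 50]        # 999 marks corrupted values
print(conceal(damaged, {2, 3}))
```

Longer gaps make any such guess less reliable, which is why the specification quoted later distinguishes a correctable burst length from a longer interpolable one.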

The whole system depends heavily on a frame structure which, though not of the same form as a TV signal frame, allows the signal to carry its own synchronization pattern. This makes the recording self-clocking, so that all the information needed to read the signal correctly is contained on the disc; there is no need, for example, for each player to maintain a crystal-controlled clock pulse generator working at a fixed frequency. In addition, the presence of a frame structure allows the signal to be recorded and replayed by a rotating-head type of tape recorder.

Figure 6.4 (a) The data grouping before EFM. (b) The data grouping after EFM, with synchronization and redundancy bits added to a total of 588 bits per frame. The frame is the unit for error checking, synchronization, and control/display.

(a) Before EFM:
- Data: 12 x 16 bits (6 sets per channel) = 24 x 8 bits
- Error correction: 4 x 16 bits = 8 x 8 bits
- Control/display: 1 x 8 bits
- Total: 33 x 8 bits = 264 bits per frame

(b) After EFM:
- Total data as above: 33 x 14 bits = 462 bits
- Synchronization: 24 bits
- Redundant bits for data and sync: 34 x 3 bits = 102 bits
- Total: 588 recorded bits per frame
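The bit budget of Figure 6.4 can be checked with a few lines of arithmetic. The only inputs are figures from the text (24-bit sync, 14-bit EFM groups, 3 redundant bits per group, six samples per channel per frame at 44.1 kHz); the derived frame rate and recorded bit rate are simply the consequences of those figures.

```python
SAMPLE_RATE_HZ = 44_100
SAMPLES_PER_CHANNEL_PER_FRAME = 6

# Before EFM: everything counted in 8-bit units (Figure 6.4a)
data_bytes = 12 * 16 // 8          # 12 sixteen-bit words -> 24 bytes
parity_bytes = 4 * 16 // 8         # 4 sixteen-bit correction words -> 8 bytes
control_bytes = 1                  # control/display
symbols = data_bytes + parity_bytes + control_bytes

# After EFM: each 8-bit unit becomes 14 bits, and every 14-bit group and
# the sync pattern are followed by 3 redundant (merging) bits (Figure 6.4b)
SYNC_BITS = 24
MERGING_BITS = 3
efm_bits = symbols * 14
merging_bits = (symbols + 1) * MERGING_BITS
frame_bits = efm_bits + SYNC_BITS + merging_bits

frames_per_second = SAMPLE_RATE_HZ / SAMPLES_PER_CHANNEL_PER_FRAME
recorded_bit_rate = frame_bits * frames_per_second
frame_duration_us = 1e6 / frames_per_second

print(symbols, symbols * 8, frame_bits)
print(frames_per_second, recorded_bit_rate, round(frame_duration_us, 1))
```

The result is 33 units (264 bits) before modulation and 588 bits after it, 7350 frames per second, about 4.32 million recorded bits per second, and roughly 136 µs of sound per frame.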

Each frame of data contains a synchronization pattern of 24 bits, along with 12 units of data (using 16-bit 'words' of data), 4 error-correcting words (each 16 bits) and 8 bits of control and display code. Of the 12 data words, six will be left-channel and six right-channel, and the use of a set of 12 in the frame allows expansion to four channels (3 words each) if needed later. Though the actual data words are of 16 bits each, these are split into 8-bit units for the purposes of assembly into a frame. The content of the frames, before modulation and excluding synchronization, is therefore as shown in Figure 6.4(a).

EFM modulation system

The word 'modulation' is used in several senses as far as digital recording systems are concerned, but the meaning here is the method that is used to code the 1's and 0's of a digital number into 1's and 0's for recording purposes. The principle, as mentioned earlier, is to use a modulation system that will prevent long runs of either 1's or 0's from appearing in the final set of bits that will burn in the pits on the disc, or be read from a recorded disc, and this calls for each digital number to be encoded in a way that is quite unlike binary code. The system that has been chosen is EFM, meaning eight-to-fourteen modulation, in which each set of eight bits is coded on to a set of 14 bits for the purposes of recording.

The number carried by the eight bits is coded as a pattern on the 14 bits instead, and there is no straightforward mathematical relationship between the 14-bit coded version and the original 8-bit number.

The purpose of EFM is to ensure that each set of eight bits can be written and read with minimum possibility of error. The code is arranged, for example, so that changes of signal never take place closer than 3 recorded bits apart. This cannot be ensured if the unchanged 8- or 16-bit signals are used, and the purpose is to minimize errors caused by the size of the beam, which might overlap two dimples and read them as one. The 3-bit minimum greatly eases the requirements for perfect tracking and focus. At the same time, the code allows no more than 11 bits between changes, so avoiding the problems of having long runs of 1's or 0's. In addition, three redundant bits are added to each fourteen, and these can be made 0 or 1 so as to break up long strings of 0's or 1's that might exist when two blocks of 14 bits were placed together.
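The two limits can be checked mechanically. In the sketch below a '1' marks a change of signal, so "changes at least 3 bits apart" means at least two 0's between 1's, and "no more than 11 bits between changes" means no more than ten 0's in a row. Counting the 14-bit patterns that obey both limits shows why 14 bits are needed: 267 patterns qualify, enough for the 256 values of an 8-bit unit. The actual 256-entry table is in the Red Book and is not reproduced here. The `choose_merging_bits` function is a simplified, hypothetical illustration of picking the three redundant bits: it takes the first acceptable candidate, whereas a real encoder also chooses among acceptable candidates to keep the low-frequency content of the recorded signal small.

```python
D_MIN = 2    # at least 2 zeros between 1s -> changes at least 3 bits apart
K_MAX = 10   # at most 10 zeros in a row -> changes at most 11 bits apart


def obeys_run_length(bits):
    """Check a string of '0'/'1' recorded bits against the EFM limits.

    A '1' marks a change of signal, so the limits on the spacing of
    changes become limits on the runs of '0's.
    """
    ones = [i for i, b in enumerate(bits) if b == "1"]
    if any(b - a - 1 < D_MIN for a, b in zip(ones, ones[1:])):
        return False
    return "0" * (K_MAX + 1) not in bits


valid_words = [w for w in range(2**14) if obeys_run_length(format(w, "014b"))]
print(len(valid_words))   # -> 267


def choose_merging_bits(prev_word, next_word):
    """Pick 3 merging bits so that the joined bit stream still obeys the limits.

    Simplification: returns the first acceptable candidate (or None).
    """
    for merge in ("000", "001", "010", "100"):
        if obeys_run_length(prev_word + merge + next_word):
            return merge
    return None


print(choose_merging_bits("00100100100000", "00000100100100"))
```

In the usage line the first word ends with five 0's and the second starts with five, so "000" would create a run of thirteen 0's; "001" breaks the run and is chosen.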

The idea of using a code which is better adapted for reading and writing is not new. The Gray code has for a long time been used as an alternative to the 8-4-2-1 type of binary code, because in a Gray code a change of one in the number causes a change in only one digit of the code. The change from 7 to 8, for example, which in four-bit binary is the change from 0111 to 1000, would in Gray code be a change from 0100 to 1100; note that only one digit changes. EFM uses the same principle of substituting a better-adapted code, and the conversion can be carried out by a small piece of fixed memory (ROM) in which using an eight-bit number as an address will give data output on 14 lines. The receiver will use this in reverse, feeding 14 lines of address into a ROM to get eight bits of number out. If you want to find the exact nature of the code, buy the Red Book! The use of EFM makes the frame considerably larger than you would expect from its content of 33 8-bit units. For each of these units we now have to substitute 14 bits of EFM signal, so that we have 33 x 14 bits of signal. In addition, there will be 3 additional bits for each 14-bit set, and we also have to add the 24 bits of synchronization and another three redundant bits to break up any pattern of excessive 1's or 0's here. The result is that a complete frame as recorded needs 588 bits, as detailed in Figure 6.4. All of this, remember, is the coded version of 12 words, six per channel, corresponding to 6 samples taken at 44.1 kHz, and so representing about 136 µs of signal in each channel.

Error correction

The EFM system is by itself a considerable safeguard against error, but the CD system needs more than this to allow, as we have seen earlier, for scratches on the disc surface that cause long error sequences. The main error correction is therefore done by the CIRC coding and decoding, and one strand of this system is the principle of interleaving.
Before we try to unravel what goes on in the CIRC system, then, we need to look at interleaving and why it is carried out.

The errors in a digital recording and replay system can be of two main types, random errors and burst (or block) errors. Random errors, as the name suggests, are errors in a few bits scattered around the disc at random and, because they are random, they can be dealt with by relatively simple methods. The randomness also implies that in a frame of 588 bits, a bit that was in error might not be a data bit, and the error could in any case be corrected reasonably easily. Even if it were not, the use of EFM means that an error in one bit does not have a serious effect on the data.

A block error is quite a different beast: it is an error that involves a large number of consecutive bits. Such an error could be caused by a bad scratch on a disc or a major dropout on tape, and its correction is very much more difficult than the correction of a random error. Now if the bits that make up a set were not actually placed in sequence on a disc, then block errors would have much less effect. If, for example, a set of 24 data units (bytes) of 8 bits each that belonged together were actually recorded so as to be spread over eight different frames, then all but very large block errors would have the effect of a random error, affecting only one or two of the byte units. This type of shifting is called interleaving, and it is the most effective way of dealing with large block errors. The error-detection and correction stages are placed between the channel alternation stage and the step at which control and display signals are added prior to EFM encoding.
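The principle of interleaving can be shown with a simple block interleaver: the bytes of eight frames are recorded column by column, so consecutive recorded bytes belong to different frames. This is an illustration of the idea only; CIRC itself spreads the bytes with delays of different lengths rather than this simple block arrangement, and the 8 x 24 size is chosen for the example. A burst that wipes out eight consecutive recorded bytes ends up as a single bad byte in each frame, which is the kind of scattered error the parity codes can cope with.

```python
def interleave(frames):
    """Record byte 0 of every frame, then byte 1 of every frame, and so on."""
    depth, width = len(frames), len(frames[0])
    return [frames[r][c] for c in range(width) for r in range(depth)]


def deinterleave(stream, depth):
    """Undo interleave(): put every recorded byte back into its own frame."""
    width = len(stream) // depth
    frames = [[None] * width for _ in range(depth)]
    for i, value in enumerate(stream):
        column, row = divmod(i, depth)
        frames[row][column] = value
    return frames


DEPTH, WIDTH = 8, 24                      # 8 frames of 24 bytes (illustrative)
frames = [[(f, b) for b in range(WIDTH)] for f in range(DEPTH)]
recorded = interleave(frames)

# A scratch wipes out 8 consecutive recorded bytes.
damaged = list(recorded)
for i in range(40, 48):
    damaged[i] = "ERR"

recovered = deinterleave(damaged, DEPTH)
errors_per_frame = [row.count("ERR") for row in recovered]
print(errors_per_frame)
```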

The CIRC method uses the Reed-Solomon system of parity coding along with interleaving to make the recorded code of a rather different form and sequence compared to the original code. Two Reed-Solomon coders are used, each of which adds four parity 8-bit units to a group of 8-bit units. The parity system that is used is a very complicated one, unlike the simple single-bit parity that was considered earlier, and it allows an error to be located and signaled. The first of the two Reed-Solomon stages deals with 24 bytes (8-bit units) and the second with 28 bytes (the data bytes plus the parity bytes from the first), so that one frame is processed at a time. In addition, by placing time delays in the form of serial registers between the coders, the interleaving of bytes from one frame to another can be achieved. The Reed-Solomon coding leaves the signal consisting of blocks made up of correction code (1), data (1), data (2) and correction code (2), and these four parts are interleaved. For example, a recorded 32-bit signal might consist of the first correction code from one block, the first data byte of the adjacent block, the second data byte of the fourth block, and the second correction code from the eighth data block. These are assembled together, and a cyclic redundancy check number can be added.
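The two-stage Reed-Solomon coding can be sketched in ordinary Python. This is an illustration under stated assumptions, not the CIRC specification: the field is GF(2^8) built on the polynomial x^8 + x^4 + x^3 + x^2 + 1 (0x11D), the generator polynomial has roots a^0 to a^3, parity bytes are simply appended, and the delays between the coders are left out. The first coder turns 24 data bytes into 28, the second turns 28 into 32, giving the 75% efficiency. The check values (syndromes) are all zero for an undamaged block; for a single bad byte the first two of them reveal both its position and its value. A code with four parity bytes can in fact correct up to two bad bytes, but only the single-byte case is shown.

```python
# GF(2^8) arithmetic; the field polynomial 0x11D is an assumption of this sketch.
PRIM = 0x11D
EXP = [0] * 512
LOG = [0] * 256
value = 1
for power in range(255):
    EXP[power] = value
    LOG[value] = power
    value <<= 1
    if value & 0x100:
        value ^= PRIM
for power in range(255, 512):
    EXP[power] = EXP[power - 255]


def gf_mul(a, b):
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def poly_mul(p, q):
    """Multiply polynomials whose coefficients are listed highest power first."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] ^= gf_mul(a, b)
    return out


def generator(nsym):
    """(x + a^0)(x + a^1)...(x + a^(nsym-1)); plus equals minus in GF(2^8)."""
    g = [1]
    for i in range(nsym):
        g = poly_mul(g, [1, EXP[i]])
    return g


def rs_encode(message, nsym=4):
    """Append nsym parity bytes: the remainder of message * x^nsym / g(x)."""
    gen = generator(nsym)
    buf = list(message) + [0] * nsym
    for i in range(len(message)):
        coef = buf[i]
        if coef:
            for j in range(1, len(gen)):
                buf[i + j] ^= gf_mul(gen[j], coef)
    return list(message) + buf[len(message):]


def syndromes(codeword, nsym=4):
    """Evaluate the received block at each root of g(x); all zero if error-free."""
    result = []
    for i in range(nsym):
        y = 0
        for c in codeword:
            y = gf_mul(y, EXP[i]) ^ c
        result.append(y)
    return result


def correct_single_error(codeword, nsym=4):
    """Locate and repair one bad byte; None if it is not a single-byte error."""
    s = syndromes(codeword, nsym)
    if not any(s):
        return list(codeword)                  # nothing to correct
    if s[0] == 0 or s[1] == 0:
        return None
    j = (LOG[s[1]] - LOG[s[0]]) % 255          # S1 / S0 = a^j locates the byte
    if j >= len(codeword):
        return None
    fixed = list(codeword)
    fixed[len(codeword) - 1 - j] ^= s[0]       # S0 is the error value itself
    return fixed


data = list(range(1, 25))             # 24 hypothetical data bytes
outer = rs_encode(data)               # first coder: 24 -> 28 bytes
inner = rs_encode(outer)              # second coder: 28 -> 32 bytes
print(len(outer), len(inner), len(data) / len(inner))

damaged = list(inner)
damaged[10] ^= 0x5A                   # corrupt one byte
print(syndromes(damaged))
print(correct_single_error(damaged) == inner)
```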

At the decoder, the whole sequence is performed in reverse.

This time, however, there may be errors present. We can detect early on whether the recorded 'scrambled' blocks contain errors, and these can be corrected as far as possible. When the correct parts of a block (error code, data, data, error code) have been put together, each word can be checked for errors, and these corrected as far as possible. Finally, the data is stripped of all added codes, and will either be error-free or will have caused the activation of some method (like interpolation) of correcting or concealing gross errors.

Production methods

A compact disc starts as a glass plate which is ground and polished to 'optical' flatness -- meaning that the surface contains no deformities that can be detected by a light beam. If a beam of laser light is used to examine a plate like this, the light reflected from the glass will add to or subtract from the incident light, forming an interference pattern of bright and dark rings. If these rings are perfectly circular, the glass plate is perfectly flat, so this can be the basis of an inspection system that can be automated.

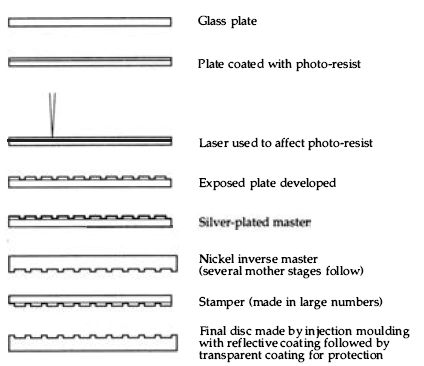

The glass plate is then coated with photoresist, using the types of photoresist that have been developed for the production of ICs.

These are capable of being 'printed' with very much finer detail than is possible using the type of photoresist which is used for PCB construction, and the thickness and uniformity of composition of the resist must both be very carefully controlled.

The image is then produced by treating the glass plate as a compact disc and writing the digital information on to the photoresist with a laser beam. Once the photoresist has been processed, the pattern of pits will develop, and this comprises the glass master. The surface of this disc is then silvered so that the pits are protected, and a thicker layer of nickel is then plated over the surface. This layer can be peeled off the glass and is the first metal master. The metal master is used to make ten mother plates, each of which will be used to prepare the stamper plates.

Once the stamper plates have been prepared, mass production of the CDs can start. The familiar plastic discs are made by injection moulding (the word 'stamper' is taken from the corresponding stage in the production of black vinyl discs) and the recorded surface is coated with aluminum, using vacuum vaporization.

Figure 6.5 Steps in the manufacture of a CD. The stampers are used in the injection moulding process, and the plastic discs have to be aluminised to reflect light, and then treated with their transparent coating which protects the dimples and helps to focus the laser light. The steps shown are:

1. Glass plate
2. Plate coated with photoresist
3. Laser used to expose the photoresist
4. Exposed plate developed
5. Silver-plated master
6. Nickel inverse master (several mother stages follow)
7. Stamper (made in large numbers)
8. Final disc made by injection moulding, with a reflective coating followed by a transparent coating for protection

Following this, the aluminum is protected by a transparent plastic which forms part of the optical path for the reader and which can support the disc label. The system is illustrated in outline in Figure 6.5.

Figure 6.6 Block diagrams for CD recording (a) and replay (b).

The end result

All of this encoding and decoding is possible mainly because we can work with stored signals, and such intensive manipulation is tolerable only because the signals are in the form of digital code.

Certainly any efforts to correct analog signals by such elaborate methods would not be welcome and would hardly add to the fidelity of reproduction. Because we are dealing with signals that consist only of 1's and 0's, however, and which do not provide an analog signal until they are converted, the amount of work that is done on the signals is irrelevant. This is the hardest point to accept for anyone who has been brought up in the school of thought that the less that is done to an audio signal the better it is. The whole point about digital signals is that they can be manipulated as we please, provided that the underlying number codes are not altered in some irreversible way.

The specifications for the error-correcting system are:

- Maximum correctable burst error length: 4000 bits (2.5 mm length)
- Maximum interpolable burst error length: 12,300 bits (7.7 mm length)

Other factors depend on the bit error rate (BER), the ratio of the number of bits received in error to the total number of bits. The system aims to cope with bit error rates between 10⁻³ and 10⁻⁴:

- For BER = 10⁻³, the interpolation rate is 1000 samples per minute, and there is less than one undetected error in 750 hours.
- For BER = 10⁻⁴, the interpolation rate is 1 sample in 10 hours, and undetected errors are negligible.

Control bytes

For each block of data one control byte (8 bits) is added. This allows eight channels of additional information to be added, known as sub-codes P to W, with one bit for each channel in each byte. To date, only the channels P and Q have been used.

The P channel carries a selector bit which is 0 during music and lead-in, but is set to 1 at the start of a piece, allowing a simple but slow form of selection to be used. This bit is also used to indicate the end of the disc, when it is switched between 0 and 1 at a rate of 2 Hz in the lead-out track.

The Q channel contains more information, including track number and timing. A channel word consists of a total of 98 bits, and since there is one bit in each control byte for a particular channel, a complete channel word is read once every 98 blocks. This word includes codes which can distinguish between four different uses of the audio signals:

- 2 audio channels, no pre-emphasis
- 4 audio channels, no pre-emphasis
- 2 audio channels, pre-emphasis at 50 µs and 15 µs
- 4 audio channels, pre-emphasis at 50 µs and 15 µs

Another four bits are used to indicate the mode number for the Q channel. Three such modes are defined, but only mode 1 is of interest to users of CDs. When mode 1 is in use, the data for the disc is contained in 72 bits which carry information in nine 8-bit sections. These are:

1. TNO - the track number byte, which holds two digits in BCD code. The lead-in track is 00 and the others are numbered in sequence.

2. TOC (Table of Contents): information on track numbers and times, three bytes.

3. A frame number and running time within a track, three more bytes.

4. A zero byte.
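Reading a sub-code channel means collecting one bit from each of a run of control bytes, and the track number then has to be decoded from BCD. The sketch below assumes that P is the most significant bit of the control byte and Q the next one, and it ignores the synchronization positions at the start of each 98-byte group; the control bytes used are hypothetical. It also shows how often a complete channel word arrives: 7350 frames per second divided by 98 gives 75 channel words per second.

```python
SUBCODE_CHANNELS = "PQRSTUVW"     # assumed bit order: P = most significant bit
FRAMES_PER_SUBCODE_BLOCK = 98
FRAMES_PER_SECOND = 44_100 / 6    # six samples per channel in each frame


def channel_bits(control_bytes, channel):
    """Collect one sub-code channel: one bit from each control byte."""
    shift = 7 - SUBCODE_CHANNELS.index(channel)
    return [(byte >> shift) & 1 for byte in control_bytes]


def bits_to_bytes(bits):
    """Pack a list of bits (most significant first) into bytes."""
    return [int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits) - 7, 8)]


def bcd_to_int(byte):
    """Decode a byte holding two BCD digits, e.g. 0x12 -> 12."""
    return (byte >> 4) * 10 + (byte & 0x0F)


# Hypothetical control bytes whose Q bits spell 0x12 (binary 00010010),
# with the P bit set in every byte.
q_pattern = [0, 0, 0, 1, 0, 0, 1, 0]
control = [0x80 | (bit << 6) for bit in q_pattern]

q_bits = channel_bits(control, "Q")
print(q_bits, bcd_to_int(bits_to_bytes(q_bits)[0]))
print(FRAMES_PER_SECOND / FRAMES_PER_SUBCODE_BLOCK)
```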

The allocation of these control channels allows considerable flexibility in the development of the CD format, so that players in the future could make use of features which have to date not been thought of. Some of the additional channels are used on the CDs which are employed as large-capacity storage for computers.

Summary

The proof of the efficacy of the whole system lies in the audio performance. The bare facts are impressive enough: a frequency range of 20 Hz to 20 kHz (within 0.3 dB), more than 90 dB for dynamic range, signal-to-noise ratio and channel separation, and total harmonic distortion (including noise) of less than 0.005%. A specification of that order with analog recording equipment would be difficult, to say the least, and one of the problems of digital recording is to ensure that the analog equipment which is used in the signal processing is good enough to match up to the digital portion.

Added to the audio performance, however, we have the convenience of being able to treat the digital signals as we treat any other digital signals. The inclusion of control and display data means that the number of items on a recording can be displayed, and we can select the order in which they are played, repeating items if we wish. Even more impressive (and very useful for music teachers!) is the ability to move from track to track, allowing a few notes to be repeated or skipped as required. Though some users treat a CD recording as they used to treat the old black-plastic disc, playing it from beginning to end, many have come to appreciate the usefulness of being able to select items, and this may be something that recording companies have to take notice of in the future. The old concert-hall principle that you can make people hear modern music by placing it between two Mozart works will not apply to CD, and the current bonanza of re-recording old master tapes (often with very high noise levels) on to CD cannot last much longer.

Every real improvement in audio reproduction has brought with it an increase in the level of acceptability. We now find the reproduced sound quality of early LPs almost as poor, compared to our newly raised standards, as the reproduction from 78s appeared in the early days of LPs. The quality that can be obtained from a CD is not always reflected in samples I have heard, where the noise from the original master tapes and the results of editing are only too obvious. Music lovers are usually prepared to sacrifice audio quality for musical quality - we will listen to Caruso or McCormack with a total suspension of criticism of the reproduction -- but only to a point. Nothing less than full digital mastering coupled with excellent microphone technique and very much less 'sound engineering' used on the master tapes is now called for, and this will mean a hectic future for orchestras as the concert classics are recorded anew with the equipment of the new generation.

In case it all sounds too perfect, there can still be problems in production. I have a CD of archive recordings of Maria Callas in which many tracks are very poor because of either overmodulation or bad tracking caused by poor CD production techniques. The symptom is a loud clicking at about the rate of revolution of the disc, or strange metallic noises on parts of the music. Discs of this quality should never have left the factory (in Germany), and if you do come across a faulty disc you should return it at the first possible opportunity so that the factory can be aware of the problems -- I shall keep mine as a dreadful warning that there is no such thing as perfection.

Bitstream tailpiece

The use of bitstream technology was mentioned in section 4. Its advantages as far as CD reproduction is concerned are fourfold:

1. The conversion from digital to analog is now carried out in a digital way, with no need to generate 16 very precise values of current.

2. The linearity of small signals is greatly improved because of the elimination of cross-over distortion caused by current inaccuracies.

3. The glitches that were caused in conventional D-A converters when bit values changed at slightly different times are eliminated.

4. The zero level (1/2), known as digital silence, is very much more stable because it no longer depends on accuracy of current division, only on the generation of a 101010... pattern from the bitstream converter.

The Philips system uses an oversampling of 256 times to generate a signal stream at 11.2896 MHz. A dither signal at 176 kHz is added to reduce the formation of patterns in the noise shaper, and this addition of dither is equivalent to adding another bit to the 16-bit signal. In the conversion process, the quantization noise which is generated when a 16-bit word is reduced to a stream of single bits is moved almost entirely into the higher frequency band, where it is removed by a simple low-pass filter.

The simple system described in section 4 shows the action of a first-order noise-shaper, with one stage of integration only and acting on one input number only. The Philips system uses a second-order noise shaper, working with two delays and adding to two waiting input numbers. This provides an even higher standard of performance, typically with distortion at 1 kHz 92 dB down, small-signal distortion 94 dB down and a signal-to-noise ratio of 96 dB, with linearity of ±0.5 dB.
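First- and second-order noise shapers can be compared in a short simulation. These are textbook sigma-delta loops producing a one-bit output of +1 or -1, written as a sketch; they are not the Philips circuit, and dither, the oversampling filter and scaling are left out. The first-order version has one integrator; the second-order version has two in cascade, which pushes the quantization noise further out of the audio band. Two properties from the text show up directly: a zero input (digital silence) gives an alternating 1, 0, 1, 0 pattern, and the average of the bitstream follows the input value, which is what the final low-pass filter recovers.

```python
def first_order(samples):
    """One integrator: the running error between input and output is fed back."""
    acc, y, out = 0.0, 0.0, []
    for x in samples:
        acc += x - y
        y = 1.0 if acc >= 0 else -1.0
        out.append(y)
    return out


def second_order(samples):
    """Two integrators in cascade, giving noise shaping of (1 - z^-1)^2."""
    i1 = i2 = 0.0
    y, out = 0.0, []
    for x in samples:
        i1 += x - y
        i2 += i1 - y
        y = 1.0 if i2 >= 0 else -1.0
        out.append(y)
    return out


silence = first_order([0.0] * 8)
print(silence)                       # digital silence: alternating +1, -1

n = 20_000
for shaper in (first_order, second_order):
    bits = shaper([0.25] * n)
    print(shaper.__name__, sum(bits) / n)   # average of the bitstream ~ input
```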

The IC which is used for bitstream conversion can be used in a differential mode, with inverted data being fed to another unit and the output combined with inversion. This has the effect of adding the in-phase signals but subtracting noise signals, leading to a further improvement in signal-to-noise ratio.

The MASH system

The principle of using two-level outputs has been taken up by several other manufacturers, and Technics refer to their system as MASH, an abbreviation of 'multi-stage noise shaping', first developed by NTT and further developed by Matsushita Electric. The MASH system uses a third-order noise-shaping circuit, a type previously thought to be susceptible to oscillation, but designed so as to ensure stability with very precise digital-to-analog conversion in the audio band. A crystal oscillator is used to generate the delay time, so ensuring very high accuracy along with excellent temperature and time stability.

The sampling is effectively at 768 times the CD sampling frequency, so that the output from the three noise shapers is at 33.869 MHz. The output signal is 3-bit (7 levels) rather than 1-bit, and the seven levels of this 3-bit code are converted into pulse-width variations. This allows the final conversion to analog to make use of simple low-pass filters. Another system, PEM (Pulse Edge Modulation), is being used by JVC, with sampling at 384 times the normal rate and four noise shapers to provide a bitstream at 16.934 MHz.

A rather different approach has been taken by the Denon DAT players, using the name of LAMBDA SLC, Ladder-form Multiple Bias Digital to Analog Super Linear Conversion. This method uses a 20-bit D-A conversion in duplicate, with the outputs combined so as to reduce errors.