WAV and BWF

The Waveform Audio (WAV) file format (.wav extension) was introduced in Windows 3.1 as the format for multimedia sound. The WAV file interchange format is described in the Microsoft/IBM Multimedia Programming Interface and Data Specifications document. The WAV format is the most common type of file adhering to the Resource Interchange File Format (RIFF) specification; it is sometimes called RIFF WAV. WAV is widely used for uncompressed 8-, 12-, and 16-bit audio files, both monaural and multichannel, at a variety of sampling frequencies. RIFF files organize blocks of data into sections called chunks. The RIFF chunk at the beginning of a file identifies the file as a WAV file and describes its length. The Format chunk describes sampling frequency, word length, and other parameters. The Data chunk contains the amplitude sample values. Applications might use information contained in chunks, or ignore it entirely. PCM or non-PCM audio formats can be stored in a WAV file. For example, a format-specific field can hold parameters used to specify a data-compressed file such as Dolby Digital or MPEG data. The Format chunk is extended to include the additional content, and a "cbSize" descriptor is included along with data describing the extended format. Eight-bit samples are represented in unsigned integer format and 16-bit samples are represented in signed two's complement format.
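The chunk organization described above can be illustrated with a short Python sketch. It is a minimal example and not a complete WAV reader: the helper names (`iter_riff_chunks`, `build_demo_wav`) and the `xtra` chunk are hypothetical, and the test file is built in memory. The sketch assumes the usual RIFF layout of a four-character chunk identifier followed by a 32-bit little-endian length, with chunk payloads padded to an even number of bytes.

```python
import struct


def iter_riff_chunks(data: bytes):
    """Yield (chunk_id, payload) for each sub-chunk of a RIFF/WAVE byte string."""
    riff_id, riff_size, form_type = struct.unpack_from("<4sI4s", data, 0)
    if riff_id != b"RIFF" or form_type != b"WAVE":
        raise ValueError("not a RIFF WAVE file")
    pos = 12                                  # first sub-chunk follows the 12-byte RIFF header
    end = min(len(data), 8 + riff_size)       # riff_size excludes the first eight bytes
    while pos + 8 <= end:
        chunk_id, size = struct.unpack_from("<4sI", data, pos)
        yield chunk_id, data[pos + 8 : pos + 8 + size]
        pos += 8 + size + (size & 1)          # assumed: odd-length payloads carry one pad byte


def build_demo_wav() -> bytes:
    """Build a tiny mono 16-bit PCM file in memory (hypothetical test data)."""
    # format tag 1 (PCM), 1 channel, 44,100 Hz, 88,200 bytes/s, block align 2, 16 bits
    fmt = struct.pack("<HHIIHH", 1, 1, 44100, 88200, 2, 16)
    samples = struct.pack("<4h", 1000, -1000, 1000, -1000)
    extra = b"abc"                            # an unknown chunk a reader may ignore
    body = (b"WAVE"
            + b"fmt " + struct.pack("<I", len(fmt)) + fmt
            + b"xtra" + struct.pack("<I", len(extra)) + extra + b"\x00"
            + b"data" + struct.pack("<I", len(samples)) + samples)
    return b"RIFF" + struct.pack("<I", len(body)) + body


chunks = dict(iter_riff_chunks(build_demo_wav()))
fmt_fields = struct.unpack("<HHIIHH", chunks[b"fmt "])
pcm = struct.unpack("<4h", chunks[b"data"])   # 16-bit samples are signed two's complement
print(fmt_fields[2], pcm)
```

A reader that only needs audio can look up `fmt ` and `data` and skip everything else, which is how a generic WAV player can pass over chunks it does not recognize.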

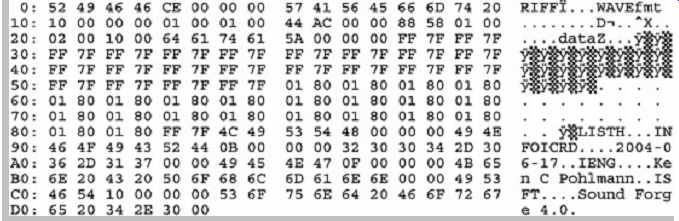

FIG. 5 shows one cycle of a monaural 1-kHz square wave recorded at 44.1 kHz and 16 bits and stored as a WAV file. The left-hand field represents the file in hexadecimal form and the right-hand field in ASCII form.

The first four bytes (each pair of hex digits is a byte) (52 49 46 46) represent the ASCII characters for RIFF, the file type. The next four bytes (CE 00 00 00) represent the number of bytes of data in the remainder of the file (excluding the first eight header bytes). This field is expressed in "little-endian" form (the term is taken from Gulliver's Travels and the question of which end of a soft-boiled egg should be cracked first). In this case, the least significant bytes are listed first. (Big-endian represents most significant bytes first.) The last four bytes of this chunk (57 41 56 45) identify this RIFF file as a WAV file. The next 24 bytes are the Format chunk, holding information describing the file. For example, 44 AC 00 00 identifies the sampling frequency as 44,100 Hz. The Data chunk (following the ASCII word "data") describes the chunk length, and contains the square-wave data itself, stored in little-endian format. This file concludes with an Info chunk with various text information.
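The byte values quoted above can be decoded directly. The following Python sketch uses only the hex values given in the text; the variable names are illustrative.

```python
import struct

riff_tag = bytes.fromhex("52494646")      # 52 49 46 46
size_field = bytes.fromhex("CE000000")    # CE 00 00 00
form_tag = bytes.fromhex("57415645")      # 57 41 56 45
rate_field = bytes.fromhex("44AC0000")    # 44 AC 00 00

# "<I" reads an unsigned 32-bit integer with the least significant byte first.
(remaining_bytes,) = struct.unpack("<I", size_field)
(sample_rate,) = struct.unpack("<I", rate_field)

# ">I" reads the same four bytes most significant byte first, for comparison.
(rate_if_big_endian,) = struct.unpack(">I", rate_field)

total_file_bytes = remaining_bytes + 8    # add back the eight header bytes

print(riff_tag.decode("ascii"), form_tag.decode("ascii"))
print(remaining_bytes, total_file_bytes, sample_rate)
```

Read as little-endian, the size field gives 206 bytes (a 214-byte file) and the sampling-frequency field gives 44,100. Reading the same bytes as big-endian produces a value that is not a plausible sampling frequency, which is why byte order must be known before a header can be interpreted.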

FIG. 5 One cycle of a 1-kHz square wave recorded at 44.1 kHz and saved as a WAV file. The hexadecimal (left-hand field) and ASCII (right-hand field) representations are shown.

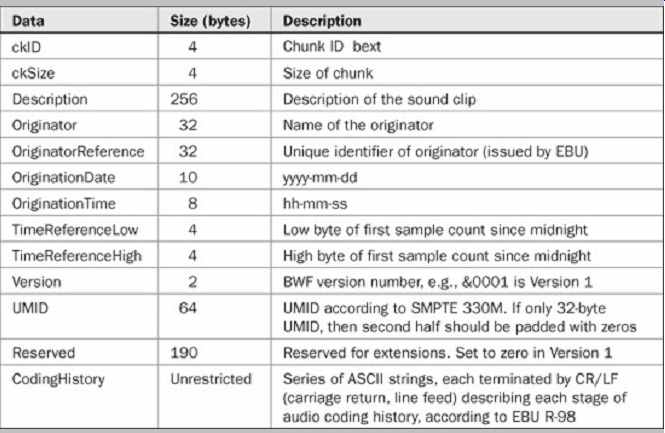

The Broadcast Wave Format (BWF) audio file format is an open-source format based on the WAV format. It was developed by the European Broadcasting Union (EBU) and is described in the EBU Tech. 3285 document. BWF files may be considered as WAV files with additional restrictions and additions. BWF uses an additional "broadcast audio extension" header chunk to define the audio data's format, and contains information on the sound sequence, originator/producer, creation date, a timecode reference, and other data, as shown in Table 1. Each file is time-stamped using a 64-bit value. Thus, one advantage of BWF over WAV is that BWF files can be time-stamped with sample accuracy. Applications can read the time stamp and place files in a specific order or at a specific location, without a need for an edit decision list.
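As a rough illustration of sample-accurate time stamping, the Python sketch below converts a 64-bit time stamp into a time of day. It assumes the value is stored as two 32-bit halves (low word and high word) and counts samples elapsed since midnight; the function name and the file names in the example are hypothetical.

```python
def time_reference_to_time(time_ref_low: int, time_ref_high: int, sample_rate: int):
    """Return (hours, minutes, seconds, leftover_samples) for a 64-bit sample count."""
    samples = (time_ref_high << 32) | time_ref_low     # rebuild the 64-bit value
    total_seconds, leftover = divmod(samples, sample_rate)
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    return hours, minutes, seconds, leftover


# One hour plus one sample at 48 kHz: 3600 * 48000 + 1 samples.
stamp = time_reference_to_time(172_800_001, 0, 48_000)

# Hypothetical files carrying (name, 64-bit sample count); sorting by the
# count places them on a timeline without an edit decision list.
files = [("take_b.wav", 9_600_000), ("take_a.wav", 4_800_000)]
ordered = [name for name, _ in sorted(files, key=lambda item: item[1])]
print(stamp, ordered)
```

Because the stamp counts samples and not video frames or milliseconds, two files can be aligned to within a single sample period.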

The BWF specification calls for a 48-kHz sampling frequency and at least a 16-bit PCM word length.

Multichannel MPEG-2 data is supported. It defines parameters such as surround format, downmix coefficients, and channel ordering; multichannel data is written as multiple monaural channels, and not interleaved. BWF files use the same .wav extension as WAV files, and BWF files can be played by any system capable of playing a WAV file (they ignore the additional chunks). However, a BWF file can contain either PCM or MPEG-2 data. Some players will not play MPEG files with a .wav extension. The AES46 standard describes a radio traffic audio delivery extension to the BWF file format. It defines a CART chunk that describes cartridge labels for automated playback systems; information such as timing, cuing, and level reference can be placed in the label.

TABLE 1 BWF extension chunk format.

MP3, AIFF, QuickTime, and Other File Formats

As noted, MPEG audio data can be placed in AIFF-C, WAV, and BWF file formats using appropriate headers.

However, in many cases, MP3 files are used in raw form with the .mp3 extension. In this case, data is represented as a sequence of MPEG audio frames; each frame is preceded by a 32-bit header starting with a unique synchronization pattern of eleven "1s." These files can be deciphered and playback can initiate from any point in the file by locating the start of the next frame.
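Locating the next frame can be sketched in a few lines of Python. This is a simplified illustration with a hypothetical function name: it only looks for eleven consecutive set bits on a byte boundary (a byte of 0xFF followed by a byte whose top three bits are set). The same bit pattern can occur by chance inside audio data, so a practical decoder would also validate the remaining header fields before trusting a match.

```python
def find_frame_sync(data: bytes, start: int = 0) -> int:
    """Return the index of the next candidate frame header at or after start, or -1."""
    for i in range(start, len(data) - 1):
        # 0xFF supplies eight set bits; 0xE0 masks the next three.
        if data[i] == 0xFF and (data[i + 1] & 0xE0) == 0xE0:
            return i
    return -1


# Two junk bytes followed by a byte pair that begins with eleven set bits.
stream = bytes([0x00, 0x12, 0xFF, 0xFB, 0x90, 0x00])
first = find_frame_sync(stream)
after = find_frame_sync(stream, first + 1)
print(first, after)
```

Starting the search from an arbitrary offset is what allows playback to begin from any point in the file.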

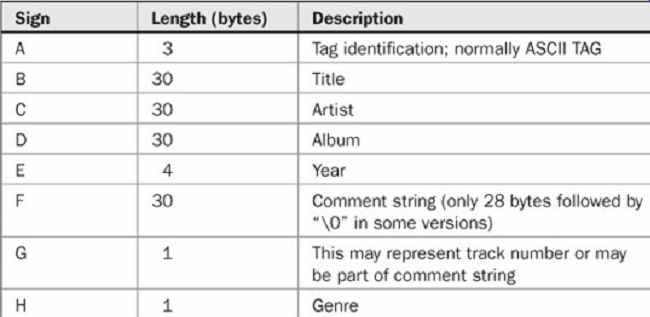

The ID3 tag is present in many MP3 files; it can include data such as title, artist, and genre information. This tag is usually located in the last 128 bytes of the file. Since it does not begin with an audio-frame synchronization pattern, audio decoders do not play this non-audio data. An example of ID3 tag data is shown in Table 2. The ID3v2.2 tag is a more complex tag structure.
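A minimal Python sketch of reading such a trailing tag follows. It assumes the commonly used ID3v1 layout: a 128-byte block beginning with the characters "TAG", followed by fixed-width title (30 bytes), artist (30), album (30), year (4), and comment (30) fields and a one-byte genre index. The function names and the sample field values are hypothetical.

```python
import struct

ID3V1_SIZE = 128


def _text(raw: bytes) -> str:
    """Cut a fixed-width field at its first NUL and decode it."""
    return raw.split(b"\x00", 1)[0].decode("latin-1").strip()


def parse_id3v1(data: bytes):
    """Return a dict of tag fields, or None if no trailing tag is present."""
    if len(data) < ID3V1_SIZE:
        return None
    tag = data[-ID3V1_SIZE:]
    if tag[:3] != b"TAG":
        return None
    title, artist, album, year, comment, genre = struct.unpack(
        "<30s30s30s4s30sB", tag[3:])
    return {"title": _text(title), "artist": _text(artist), "album": _text(album),
            "year": _text(year), "comment": _text(comment), "genre_index": genre}


def build_id3v1(title, artist, album, year, comment, genre_index) -> bytes:
    """Build a tag block for testing (hypothetical helper)."""
    def pad(s, n):
        return s.encode("latin-1")[:n].ljust(n, b"\x00")
    return (b"TAG" + pad(title, 30) + pad(artist, 30) + pad(album, 30)
            + pad(year, 4) + pad(comment, 30) + bytes([genre_index]))


fake_file = b"\xff\xfb" + bytes(100) + build_id3v1(
    "Example Title", "Example Artist", "Example Album", "1999", "", 17)
info = parse_id3v1(fake_file)
print(info)
```

Because the tag sits at a fixed distance from the end of the file, it can be read without scanning any audio frames.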

The AU (.au) file format was developed for the Unix platform, but is also used on other systems. It supports a variety of linear audio types as well as compressed files with ADPCM or µ law.

TABLE 2 Example of data found in an ID3 tag structure.

The Voice VOC (.voc) file format is used in some sound cards. VOC defines eight block types that can vary in length; sampling frequency and word length are specified.

Sound quality up to 16-bit stereo is supported, along with compressed formats. VOC files can contain markers for looping, synchronization markers for multimedia applications, and silence markers.

The AIFF (.aiff or .aif) Audio Interchange File Format is native to Macintosh computers, and is also used in PC and other computer types. AIFF is based on the EA IFF 85 standard. AIFF supports many types of uncompressed data with a variety of channels, sampling frequencies, and word lengths. The format contains information on the number of interleaved channels, sample size, and sampling frequency, as well as the raw audio data. As in WAV files, AIFF files store data in chunks. The FORM chunk identifies the file format, COMM contains the format parameter information, and SSND contains audio data. A big-endian format is used. Markers can be placed anywhere in a file, for example, to mark edit points. However, markers can be used for any purpose, as defined by the application software.

The AIFF format is used for some Macintosh sound files and is recognized by numerous software editing systems.

Because sound files are stored together with other parameters, it is difficult to add data, for example, as in a multitrack overdub, without writing a new file. The AIFF-C (compressed) (or AIFC) file format is an AIFF version that allows for compressed audio data. Several types of compression are used in Macintosh applications including Macintosh Audio Compression/Expansion (MACE), IMA/ADPCM, and µ law. AIFF is defined in the context of the EA IFF 85 standard.

The Sound Designer II (SDII or SD2) file format (.sd2) was developed by Digidesign. It is the successor to the Sound Designer I file format, originally developed for their Macintosh-based recording and editing systems. Audio data is stored separately from file parameters. SDII stores monaural or stereo data. For the latter, tracks are interleaved as left/right samples; samples are stored as signed values. In some applications, left and right channels are stored in separate files; .L and .R suffixes are used.

The format contains information on the file's sampling frequency, word length, and data sizes. Parameters are stored in three STR fields. SDII is used to store and transfer files used in editing applications, and to move data between Macintosh and PC platforms.

QuickTime is a file format (.mov) and multimedia extension to the Macintosh operating system. It is cross platform and can be used to play videos on most computers. More generally, time-based files, including audio, animation, and MIDI can be stored, synchronized and controlled, and replayed. Because of the timebase inherent in a video program, the video itself can be used to control preset actions. QuickTime movies can have multiple audio tracks. For example, different language soundtracks can accompany a video. In addition, audio-only QuickTime movies may be authored. Videos can be played at 15 fps or 30 fps. However, frame rate, along with picture size and resolution, may be limited by hard-disk data transfer rates.

QuickTime itself does not define a video compression method; for example, a Sorenson Video codec might be used. Audio codecs can include those in MPEG-1, MPEG-2 AAC, or Apple Lossless Encoder. Using the QuickTime file format, MPEG-4 audio and video codecs can be combined with other QuickTime-compatible technologies such as Macromedia Flash. Applications that use the Export component of QuickTime can create MPEG-4 compatible files. Any .mp4 file containing compliant MPEG-4 video and AAC audio should be compatible with QuickTime.

Hardware and software tools allow users to record video clips to a hard disk, trim extraneous material, compress video, edit video, add audio tracks, then play the result as a QuickTime movie. In some cases, the software can be used as an off-line video editor, and used to create an edit decision list with timecode, or the finished product can be output directly. Audio files with 16-bit, 44.1-kHz quality can be inserted in QuickTime movies. QuickTime also accepts MIDI data for playback. QuickTime can also be used to stream media files or live events in real time. QuickTime also supports iTunes and other applications. The 3GPP and 3GPP2 standards are used to create, deliver, and play back bandwidth-intensive multimedia over 3G wireless mobile cellular networks such as GSM. The standards are based on the QuickTime file format and contain MPEG-4 and H.263 video, AAC and AMR audio, and 3G text; 3GPP2 can also use QCELP audio. QuickTime software, or other software that uses QuickTime exporters, can be used to create and play back 3GPP and 3GPP2 content.

As noted, QuickTime is also available for Windows so that presentations developed on a Macintosh can be played on a PC. The Audio Video Interleaved (AVI) format is similar to QuickTime, but is only used on Windows computers.

The RealAudio (.ra or .ram) file format is designed to play music in real time over the Internet. It was introduced by RealNetworks in 1995 (then known as Progressive Networks). Both 8- and 16-bit audio is supported at a variety of bit rates; the compression algorithm is optimized for different modem speeds. RA files can be created from WAV, AU, or other files or generated in real time.

Streaming technology is described in Section 15.

The JPEG (Joint Photographic Experts Group) lossy image compression format is used primarily to reduce the size of still image files. Compression ratios of 20:1 to 30:1 can be achieved with little loss of quality, and much higher ratios are possible. Motion JPEG (MJPEG) can be used to store a series of data-reduced frames comprising motion video. This is often used in video editors where individual frame quality is needed. Many proprietary JPEG formats are in use. The MPEG (Moving Picture Experts Group) lossy video compression methods are used primarily for motion video with accompanying audio. Some frames are stored with great resolution, then intervening frames are stored as differences between frames; video compression ratios of 200:1 are possible. MPEG also defines a number of compressed audio formats such as MP3, MPEG AAC, and others; MPEG audio is discussed in more detail in Section 11. MPEG video is discussed in Section 16.

Open Media Framework Interchange (OMFI)

In a perfect world, audio and video projects produced on workstations from different manufacturers could be interchanged between platforms with complete compatibility. That kind of common cross-platform interchange language was the goal of the Open Media Framework Interchange (OMFI). OMFI is a set of file format standards for audio, text, still graphics, images, animation, and video files. In addition, it defines editing, mixing, and processing notation so that both content and description of edited audio and video programs can be interchanged.

The format also contains information identifying the sources of the media as well as sampling and timecode information, and accommodates both compressed and uncompressed files. Files can be created in one format, interchanged to another platform for editing and signal processing, and then returned to the original format without loss of information. In other words, an OMFI file contains all the information needed to create, edit, and play digital media presentations. In most cases, files in a native format are converted to the OMFI format, interchanged via direct transmission or removable physical media, and then converted to the new native format. However, to help streamline operation, OMFI is structured to facilitate playback directly from an interchanged file when the playback platform has similar characteristics as the source platform. To operate efficiently with large files, OMFI is able to identify and extract specific objects of information such as media source information, without reading the entire file.

In addition, a file can be incrementally changed without requiring a complete recalculation and rewriting of the entire file.

OMFI uses two basic types of information. "Compositions" are descriptions of all the data required to play or edit a presentation. Compositions do not contain media data, but point to them and provide coordinated operation using methods such as timecode-based edit decision lists, source/destination labels, and crossfade times. "Physical sources" contain the actual media data such as audio and video, as well as identification of the sources used in the composition. Data structures called media objects (or mobs) are used to identify compositions and sources. An OMFI file contains objects, which hold information that other data can reference. For example, a composition mob is an object that contains information describing the composition; an object's data is called its values. An applications programming interface (API) is used to access object values and translate proprietary file formats into OMFI-compatible files.

OMFI allows file transfer such as via disc or transmission on a network. Common file formats included in the OMFI format are TIFF (including RGB, JPEG, and YCC) for video and graphics, and AIFC and WAV for audio. Clearly, a common transmission method must be used to link stations; for example, Ethernet, FDDI, ATM, or TCP/IP could be used. OMFI can also be used in a client-server system that allows multi-user real-time access from the server to the client.

OMFI was migrated to Microsoft's Structured Storage container format to form the core of the Advanced Authoring Format (AAF). AAF also employs Microsoft's Component Object Model (COM), an inter-application communication protocol supported by most popular programming languages including C++ and Java. The Bento format, developed by Apple, links elements of a project within an overall container; it is used with OMFI.

Both OMFI Version 1 and OMFI Version 2 are used. They differ to the extent that they can be incompatible. OMFI was developed by Avid Corporation.

Advanced Authoring Format (AAF)

The Advanced Authoring Format (AAF) is a successor to the OMFI specification. Introduced in 1998, AAF is an open-source interchange protocol for professional multimedia post-production and authoring applications (not delivery). Essence and metadata can be exchanged between multiple users and facilities, diverse platforms, systems, and applications. It defines the relationship between content elements, maps elements to a timeline, synchronizes content streams, describes processing, tracks the history of the file, and can reference external essence not in the file. For example, it can convey complex project structures of audio, video, graphics, and animation that enable sample-accurate editing from multiple sources, along with the compositional information needed to render the materials into finished content. For example, an AAF file might contain 60 minutes of video, 100 still images, 20 minutes of audio, and references to external content, along with instructions to process this essence in a certain way and combine them into a 5-minute presentation. AAF also uses variable key length encoding; chunks can be appended to a file and be read only by those with the proper key. Those without the key skip the chunk and read the rest of the file. In this way, different manufacturers can tailor the file for specific needs while still adhering to the format, and new metadata can be added to the standard as well.

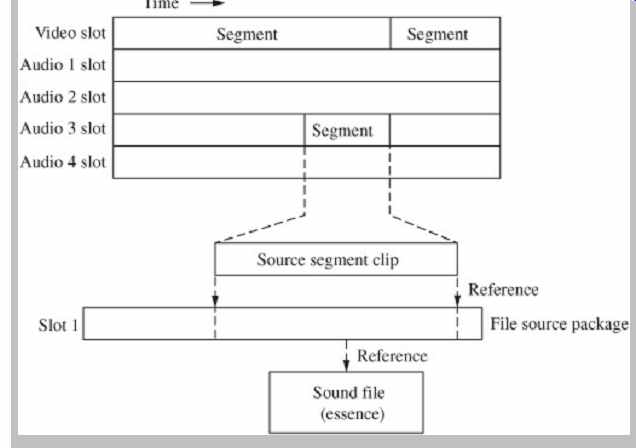

FIG. 6 An example of how audio post-production metadata might appear in an AAF file.

Considerable flexibility is possible. For example, a composition package has slots that may be considered as independent tracks, as shown in FIG. 6. Each slot can describe one kind of essence. Slots can be time independent, can use a timecode reference, or be event based. Within a slot, a segment might hold a source clip.

That clip might refer to a section of another slot in another essence package, which in turn refers to an external sound file.
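The chain of references described above (a clip in one slot pointing to a slot in another package, which finally points to an external file) can be modeled with a few small data classes. The Python sketch below is only a conceptual illustration: the class names, field names, and file name are hypothetical and are not taken from the AAF object model or any AAF software library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SourceClip:
    package: str      # name of the package this clip points at
    slot_id: int      # slot inside that package
    start: int        # offset into the referenced slot, in samples
    length: int       # clip duration, in samples


@dataclass
class Slot:
    essence_kind: str                               # one kind of essence per slot
    segments: List[SourceClip] = field(default_factory=list)


@dataclass
class Package:
    name: str
    slots: Dict[int, Slot] = field(default_factory=dict)
    external_file: Optional[str] = None             # set only on file-backed packages


def resolve_file(packages: Dict[str, Package], clip: SourceClip) -> str:
    """Follow a clip through referenced packages until an external file is found."""
    seen = set()
    while True:
        if clip.package in seen:
            raise ValueError("circular reference")
        seen.add(clip.package)
        target = packages[clip.package]
        if target.external_file is not None:
            return target.external_file
        clip = target.slots[clip.slot_id].segments[0]


packages = {
    "essence": Package("essence", {1: Slot("sound")}, external_file="dialog_take3.wav"),
    "master": Package("master", {1: Slot("sound", [SourceClip("essence", 1, 0, 480_000)])}),
}
composition_clip = SourceClip("master", 1, start=48_000, length=96_000)
resolved = resolve_file(packages, composition_clip)
print(resolved)
```

Keeping the composition as a set of references, and not as copied audio, is what lets an edit be changed without rewriting the underlying sound file.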

AAF uses a Structured Storage format implemented in a C++ environment. As an AAF file is edited, its version control feature can keep a record of the revisions. The AAF Association was founded by Avid and Microsoft.

Material eXchange Format (MXF)

The Material eXchange Format (MXF) is a media format for the exchange of program material. It is used in professional post-production and authoring applications. It provides a simplified standard container format for essence and metadata, and is platform-independent. It is closely related to AAF. Files comprise a sequence of frames where each frame holds audio, video, and data content along with frame-based metadata. MXF files can contain any particular media format such as MPEG, WAV, AVI, or DPX; and MXF files may be associated with AAF projects. MXF and AAF use the same internal data structure, and both use the SMPTE Metadata Dictionary to define data and workflow information. However, MXF promotes a simpler metadata that is compatible with AAF.

MXF files can be read by an AAF workstation, for example, and be integrated into a project. An AAF file might comprise only metadata, and simply reference the essence in MXF files.

MXF's data structure offers a partial retrieve feature so that users can retrieve only sections of a file that are pertinent to them without copying the entire file. Because essence is placed in a temporal streaming format, data can be delivered in real time, or conveyed with conventional file-transfer operations. Because data is sequentially arranged, MXF is often used for finished projects and for writing to media. MXF was developed by the Pro-MPEG Forum, EBU, and SMPTE.

AES31

The AES31 specification is a group of non-proprietary specifications used to interchange audio data and project information between devices such as workstations and recorders. It allows simple exchange of one audio file or exchanges of complex files with editing information from different devices. There are four independent stages with interchange options. AES31-1 defines the physical data transport describing how files can be moved via removable media or high-speed network. It is compatible with the Microsoft FAT32 disk filing structure. AES31-2 defines an audio file format, describing how BWF data chunks should be placed on a storage medium or packaged for network transfer. AES31-3 defines a simple project structure using a sample-accurate audio decision list (ADL) that can contain information such as levels and anti-click and creative crossfades, and allows files to be played back in synchronization; files use the .adl extension. It uses a URL to identify a file source, whether residing locally or on a network. It also provides interchange between NTSC and PAL formats. For user convenience, edit lists are conveyed with human-readable ASCII characters; the Edit Decision Markup Language (EDML) is used. AES31-4 defines an object-oriented project structure capable of describing a variety of characteristics. In AES31, a universal resource locator can access files on different media or platforms; the locator specifies the file, host, disk volume, directory, subdirectories, and the file name with a .wav extension.

Digital Audio Extraction

In digital audio extraction (DAE), music data is copied from a CD with direct digital means, without conversion to an analog signal, using a CD- or DVD-ROM drive to create a file (such as a WAV file) on the host computer. The copy is ostensibly an exact bit-for-bit copy of the original data, that is, a clone. Performing DAE is not as easy as copying a file from a ROM disc. Red Book data is stored as tracks, whereas Yellow Book and DVD data is stored as files. CD-ROM (and other computer data) is formatted in sectors with unique addresses that inherently provide incremental reading and copying. Red Book does not provide this. Its track format supposes that the data spiral will be mainly read continuously, as when normally listening to music, thus there is no addressing provision. With DAE, the system reads this spiral discontinuously, and must piece together a continuous file. Moreover, when writing a CD-R or CD-RW disc, the data sent to the recorder must be absolutely continuous. If there is an interruption at the writing laser, the recording is unusable. One application of DAE is the illegal copying of music. DAE is often known as "ripping," which also generally refers to the copying of music into files.

CD-ROM (and DVD-ROM) drives are capable of digitally transferring information from a CD-ROM disc to a host computer. The transfer is made via an adapter or interface connected to the drive. CD-ROM drives can also play back CD-Audio discs by reading the data through audio converters and analog output circuits, as in regular CD-Audio players. Users may listen to the audio CD through the computer sound system or a CD-ROM headphone jack. With DAE software, a CD-ROM drive may also deliver this digital audio data to a computer. In this case, the computer requests audio data from the CD-ROM drive as it would request computer data. Because of the differences between the CD-ROM and CD-Audio file formats, DAE requires special logic, either in hardware, firmware (a programmable chip in the data path), or a software program on the host. This logic is needed to correct synchronization errors that would result when a CD-Audio disc is read like a CD-ROM disc.

As described in Section 7, CD-Audio data is organized in frames, each with 24 bytes of audio information. There is no unique identifier (address) for individual frames. Each frame also contains eight subcode bits. These are collected over 98 frames to form eight channels, each 98 bits in length. Although Red Book CD players do not do this, computers can collect audio bytes over 98 frames, yielding a block of 2352 audio bytes. In real playing time, this 98-frame block comprises 1/75th of a second. In computer parlance, this block is sometimes called a sector or raw sector. For CD-Audio, there is no distinction between a sector and a raw sector because all 2352 bytes of data are "actual" audio data. The subcode contains the time in minutes:seconds:frames (called MSF time) of the subcode block. This MSF time is displayed by CD-Audio players as track time.
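The block arithmetic above can be checked with a short Python sketch. The function names are illustrative, and the conversion ignores any fixed offset that a real drive may apply between MSF time and its own sector numbering. Note that the "frames" field of an MSF time counts 1/75-second blocks, not the 24-byte frames of the disc format.

```python
FRAME_AUDIO_BYTES = 24        # audio bytes per CD frame
FRAMES_PER_BLOCK = 98         # CD frames collected into one block
BLOCKS_PER_SECOND = 75        # each block spans 1/75th of a second

BLOCK_BYTES = FRAME_AUDIO_BYTES * FRAMES_PER_BLOCK      # 2352
BYTES_PER_SECOND = BLOCK_BYTES * BLOCKS_PER_SECOND      # 176,400


def block_to_msf(block: int):
    """Convert a block (sector) count into (minutes, seconds, frames)."""
    minutes, rem = divmod(block, 60 * BLOCKS_PER_SECOND)
    seconds, frames = divmod(rem, BLOCKS_PER_SECOND)
    return minutes, seconds, frames


def msf_to_block(minutes: int, seconds: int, frames: int) -> int:
    """Convert (minutes, seconds, frames) back into a block (sector) count."""
    return (minutes * 60 + seconds) * BLOCKS_PER_SECOND + frames


print(BLOCK_BYTES, BYTES_PER_SECOND, block_to_msf(1234))
```

The byte rate agrees with the audio parameters: 44,100 samples per second, two channels, and two bytes per sample also give 176,400 bytes per second.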

In the CD-Audio player, audio data and subcode are separated and follow different processing paths. When a computer requests and receives audio data, it is not accompanied by any time or address data. Moreover, if the computer were to separately request subcode data, it would not be known which group of 98 frames the time/address refers to, and in any case the time/address cannot be placed in the 2352-byte sector, which is occupied by audio data. In contrast, CD-ROM data is organized in blocks, each with 2352 bytes; 2048 of these bytes contain the user data (CD-ROM Mode 1). The remaining bytes are used for error correction and to uniquely identify that block of data with an address; this is called a sector number. A 2352-byte block is called a raw sector. The 2048 bytes of data in the raw sector are called a sector. Importantly, the block's data and its address are intrinsically combined. When a computer requests and receives a block of CD-ROM data, both the data and its disc address are present and can be placed in a 2352-byte sector. In this way, the computer can identify the contents and position of data read from a CD-ROM disc.
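To show how data and address travel together in a CD-ROM block, the Python sketch below splits a 2352-byte raw sector into its address and its 2048 bytes of user data. The byte layout is an assumption based on the usual description of CD-ROM Mode 1 (a 12-byte synchronization field, a 4-byte header holding minutes, seconds, and frame in binary-coded decimal plus a mode byte, then user data followed by error detection and correction bytes); the function names and test sector are hypothetical.

```python
RAW_SECTOR_BYTES = 2352
USER_DATA_BYTES = 2048
SYNC = b"\x00" + b"\xff" * 10 + b"\x00"      # assumed 12-byte sync pattern


def bcd_to_int(value: int) -> int:
    """Decode one binary-coded-decimal byte, e.g. 0x34 -> 34."""
    return (value >> 4) * 10 + (value & 0x0F)


def split_mode1_raw_sector(raw: bytes):
    """Return ((minutes, seconds, frames), user_data) from a raw Mode 1 sector."""
    if len(raw) != RAW_SECTOR_BYTES:
        raise ValueError("a raw sector must be 2352 bytes")
    if raw[:12] != SYNC:
        raise ValueError("sync pattern not found")
    minute, second, frame, mode = raw[12:16]
    if mode != 1:
        raise ValueError("not a Mode 1 sector")
    address = (bcd_to_int(minute), bcd_to_int(second), bcd_to_int(frame))
    return address, raw[16:16 + USER_DATA_BYTES]


# Build a test sector addressed 00:16:34 with recognizable user data.
user = bytes(range(256)) * 8                                  # 2048 bytes
raw = SYNC + bytes([0x00, 0x16, 0x34, 0x01]) + user + bytes(288)
address, data = split_mode1_raw_sector(raw)
print(address, len(data))
```

A block of CD-Audio data has no equivalent of the header bytes: all 2352 bytes are audio, so nothing in the block itself says where on the disc it came from.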

In the CD-ROM format the unique identifier or address is carried as part of the block itself. The CD-ROM drive must be able to locate specific blocks of data because of the way operating systems implement their File I/O Services. (File I/O Services are also used to store data on hard disks.) As files are written and deleted, the disk becomes fragmented. The File I/O Services may distribute one large file across the disk to fill in these gaps. The file format used in CD-ROM discs was designed to mimic the way hard-disk drives store data: in sectors. The storage medium must therefore provide a way to randomly access any sector. By including a unique address in each block, CD-ROM drives can provide this random access capability.

CD-Audio discs do not need addresses because the format was designed to deliver continuous streams of music data. A 4-minute song comprises about 42 million bytes of data. Attaching unique addresses to every 24-byte frame would needlessly waste space. On the other hand, some type of random access is required, so the user can skip to a different song or fast-forward through a song.
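The figure of about 42 million bytes follows from the CD-Audio parameters (44.1-kHz sampling, two channels, 16-bit samples). A short Python check, with illustrative names:

```python
SAMPLE_RATE = 44_100        # samples per second per channel
CHANNELS = 2
BYTES_PER_SAMPLE = 2        # 16-bit samples


def cd_audio_bytes(seconds: int) -> int:
    """Number of audio bytes in a CD-Audio recording of the given duration."""
    return seconds * SAMPLE_RATE * CHANNELS * BYTES_PER_SAMPLE


song_bytes = cd_audio_bytes(4 * 60)
frames = song_bytes // 24          # 24-byte frames in the song
blocks = song_bytes // 2352        # 98-frame blocks in the song
print(song_bytes, frames, blocks)
```

A 4-minute song therefore contains 42,336,000 audio bytes, or 1,764,000 frames grouped into 18,000 blocks, which gives a sense of how many addresses a per-frame scheme would require.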

However, these accesses do not need to be completely precise. This is where the MSF subcode time is used. A CD-Audio player finds a song, or a given time on the disc, by reading the subcode and placing the laser pickup close to the accompanying block that contains the target MSF in its subcode. However, because each MSF time is only accurate to 1/75th of a second, the resolution is only 98 frames. A CD-Audio player can only locate data within a block 1/75th of a second prior to the target MSF time.

Because CD-ROM drives evolved from CD-Audio players, they incur the same problem. If asked to read a certain sector on a CD-ROM disc, they can only place the pickup just before the target sector, not right on it. However, since the CD-ROM blocks are addressable (uniquely identifiable) the CD-ROM drive can read through the partial sector where it starts, and wait until the correct sector appears. When the correct sector starts, it will begin streaming data into its buffer. This method is reliable because each CD-ROM block is intrinsically addressed; the address of each data block is known when the block is received.

CD-ROM drives can also mimic sector reading from a CD-Audio disc. CD-ROM drives respond to two types of read commands: Read Sector and Read Raw Sector.

Read Sector commands will transfer only the 2048 actual data bytes from each raw sector read. In normal CD-ROM operations, the Read Sector command is typically used. If needed, an application may issue a Read Raw Sector command, which will transfer all 2352 bytes from each raw sector read. The application might be concerned with the CD-ROM addresses or error correction.

By reading 98 CD-Audio frames to form a 2352-byte block, the computer can issue a Read Raw Sector command to a CD-Audio disc in a CD-ROM drive. This works, but not perfectly. The flow, as documented by Will Pirkle, works like this:

1. The host program sends a Read Raw Sector command to read a sector (e.g., #1234) of an audio disc in a CD-ROM drive.

2. The CD-ROM drive converts the sector number into an approximate MSF time.

3. The CD-ROM drive searches the subcode until it locates the target MSF, and it moves the pickup to within 1/75th of a second before the correct accompanying block (sector) and somewhere inside the previous block (sector #1233).

4. The CD-ROM drive begins reading data. It loads the data into its buffer for output to the host computer. It does not know the address of each received block, so it cannot locate the next sector properly. The CD-ROM drive reads out exactly 2352 bytes and then stops, somewhere inside sector #1234.

In this example, the program read one block's worth of data (2352 bytes); however, it did not get the correct (or one complete) block. It read part of the previous block and part of the correct block. This is the crux of the DAE problem.
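The misaligned read can be simulated in a few lines of Python. This is a toy model with hypothetical names, not drive firmware: the "disc" is a byte string in which every byte of a sector carries that sector's number, and `landing_error` stands for the unknown number of bytes by which the pickup lands early.

```python
SECTOR_BYTES = 2352


def make_disc(sector_count: int) -> bytes:
    """Build a fake audio disc; every byte in sector n has the value n."""
    return b"".join(bytes([n]) * SECTOR_BYTES for n in range(sector_count))


def inexact_read_raw(disc: bytes, sector: int, landing_error: int) -> bytes:
    """Start landing_error bytes early and return exactly one sector's worth."""
    start = max(0, sector * SECTOR_BYTES - landing_error)
    return disc[start:start + SECTOR_BYTES]


disc = make_disc(4)
block = inexact_read_raw(disc, sector=2, landing_error=500)

from_previous = block.count(bytes([1]))   # bytes that belong to sector 1
from_target = block.count(bytes([2]))     # bytes that belong to sector 2
print(len(block), from_previous, from_target)
```

The host receives the 2352 bytes it asked for, but 500 of them come from the previous sector and the last 500 bytes of the requested sector are missing. With real audio there are no marker values, so the host cannot tell where the boundary fell.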

In DAE, large data files are moved from the ROM drive to either a CD/DVD recorder or a hard disk. Large-scale data movement cannot happen continuously in the PC environment. The flow of data is intermittent and intermediate buffers (memory storage locations) are filled and emptied to simulate continuous transfer. Thus, the ROM drive will read sectors in short bursts, with gaps of time between the data transfers. This exacerbates the central DAE problem because the ROM drive must start and stop its reading mechanism thousands of times during the transfer of one 4-minute song. The DAE software must sort the partial blocks, which will occur at the beginning and end of each burst of data, and piece the data together, not repeating redundant data, or skipping data. Any device performing DAE, be it the ROM drive, the adapter, the driver, or host software, faces the same problem of dealing with the overlapping of incorrect data.

The erroneous data read from the beginning and end of the data buffers is a result of synchronization error. If left uncorrected, these synchronization errors manifest themselves as periodic clicks, pops, or noise bursts in the extracted music. Clearly, DAE errors must be corrected by matching known good data to questionable data. This matching procedure is essential in any DAE system. ROM drives may also utilize high-accuracy motors to try to place the laser pickup as close as possible to a target CD-Audio sector. Even highly accurate motors cannot guarantee perfect sector reads. Some type of overlap and compare method must be employed.
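One way to picture an overlap-and-compare method is sketched below in Python. It is a simplified illustration under stated assumptions, not the algorithm of any particular drive or ripping program: each new burst is deliberately requested so that it starts before the end of the data already accepted, the tail of the accepted data is searched for in the new burst, and only the bytes after the match are appended. The function name, the 64-byte comparison window, and the random test data are all hypothetical.

```python
import random


def stitch(verified: bytes, burst: bytes, window: int = 64) -> bytes:
    """Append burst to verified data, using the last `window` bytes to align them."""
    key = verified[-window:]
    index = burst.find(key)
    if index < 0:
        raise ValueError("no overlap found; the burst must be read again")
    return verified + burst[index + window:]


# Fake audio: pseudo-random bytes from a fixed seed so the run is repeatable.
rng = random.Random(0)
source = bytes(rng.randrange(256) for _ in range(4000))

first_burst = source[0:1000]
# The second read was meant to overlap by 300 bytes, but the pickup landed
# another 37 bytes early, so the exact start position is unknown to the host.
second_burst = source[663:1663]

combined = stitch(first_burst, second_burst)
print(len(combined), combined == source[:1663])
```

Passages such as digital silence contain long runs of identical bytes, so a simple search like this can match at the wrong position; a practical implementation would need longer windows or additional checks.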

This overlap technique has no other function except to perform DAE. A ROM drive, adapter, or host software that implements DAE must apply several engineering methods to properly correct the synchronization errors that occur when a CD-Audio disc is accessed like a CD-ROM disc.

Only then may these accesses transfer correct digital audio data from a given CD-Audio source. The ATAPI specification defines drive interfacing. The command set in newer revisions of the specification provides better head positioning and supports error-correction and subcode functions and thus simplifies software requirements and expedites extraction.

Flash Memory

Portable flash memory offers a small and robust way to store data to nonvolatile solid-state memory (an electrically erasable programmable read-only memory or EEPROM), via an onboard controller. A variety of flash memory formats have been developed, each with a different form factor.

These formats include Compact Flash, Memory Stick, SD, and others. Flash memories on cards are designed to directly plug into a receiving socket. Other flash memory devices interface via USB or other means. Flash memory technology allows high storage capacity of many gigabytes using semiconductor traces of 130-nm thickness, or as thin as 80 nm. Data transfer rates of 20 Mbps and higher are available. Some cards also incorporate WiFi or Bluetooth for wireless data exchange and communications. Flash memory operates with low power consumption and generates little heat.

The Compact Flash format uses Type I and Type II form factors. Type II is larger than Type I and requires a wider slot; Type I cards can use Type II slots. The SD format contains a unique, encrypted key, using the Content Protection for Recordable Media (CPRM) standard. The SDIO (SD Input/Output) specification uses the standard SD socket to also allow portable devices to connect to peripherals and accessories. The MultiMediaCard format is a similar precursor to SD, and can be read in many SD devices. Memory Stick media is available in unencrypted Standard and encrypted MagicGate forms. Memory Stick PRO provides encryption and data loss protection features.

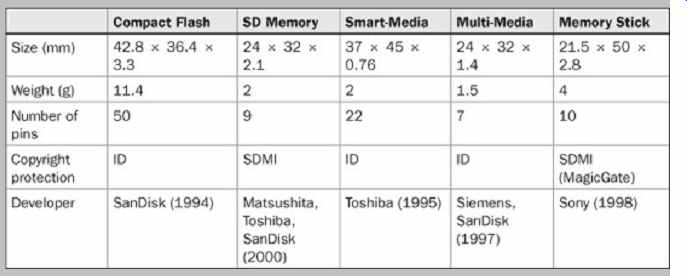

Specifications for some flash memory formats are given in Table 3.

TABLE 3 Specifications for removable flash memory formats.

Hard-Disk Drives

Both personal computers and dedicated audio workstations are widely used for audio production. From a hardware standpoint, the key to both systems is the ability to store audio data on magnetic hard-disk drives. Hard disk drives offer fast and random access, fast data transfer, high storage capacity, and low cost. A hard-disk-based audio system consolidates storage and editing features and allows an efficient approach to production needs.

Optical disc storage has many advantages, particularly for static data storage. But magnetic hard-disk drives are superior for the dynamic workflow of audio production.

Magnetic Recording

Magnetic media are composed of a substrate coated with a thin layer of magnetic material, such as gamma ferric oxide (Fe2O3). This material is composed of particles that are acicular (cigar-shaped). Each particle exhibits a permanent magnetic pole structure that produces a constant magnetic field. The orientation of the magnetic field can be switched back and forth. When a medium is unrecorded, the magnetic fields of the particles have no net orientation. To record information, an external magnetic field orients the particles' magnetic fields according to the alignment of the applied field. The coercivity of the particles describes the strength of the external field that is needed to affect their orientation.

Further, the coercivity of the particles exhibits a Gaussian distribution in which a few particles are oriented by a weak applied field, and the number increases as the field is increased, until the medium saturates and an increase in the external field will no longer change net magnetization.
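The saturation behavior can be illustrated with a small numerical sketch. It assumes coercivity follows a normal distribution; the mean of 300 and spread of 50 (in arbitrary field units) are hypothetical values chosen only to show the shape of the curve.

```python
import math


def fraction_oriented(applied_field, mean_coercivity, spread):
    """Fraction of particles whose coercivity is at or below the applied field,
    assuming coercivity is normally distributed (mean, standard deviation)."""
    z = (applied_field - mean_coercivity) / (spread * math.sqrt(2))
    return 0.5 * (1.0 + math.erf(z))


# Hypothetical medium: mean coercivity 300, standard deviation 50.
for field in (200, 300, 400, 500):
    print(field, round(fraction_oriented(field, 300.0, 50.0), 3))
```

The fraction rises slowly at first, steeply near the mean coercivity, and then flattens toward 1; beyond that point a stronger field orients essentially no additional particles.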

Saturation magnetic recording is used when storing binary data. The force of the external field is increased so that the magnetic fields in virtually all the particles are oriented. When a bipolar waveform is applied, a saturated medium thus has two states of equal magnitude but opposite polarity. The write signal is a current that changes polarity at the transitions in the channel bitstream. Signals from the write head cause entire regions of particles to be oriented either positively or negatively. These transitions in magnetic polarity can represent transitions between 0 and 1 binary values. During playback, the magnetic medium with its different pole-oriented regions passes before a read head, which detects the changes in orientation. Each transition in recorded polarity causes the flux field in the read head to reverse, generating an output signal that reconstructs the write waveform. The strength of the net magnetic changes recorded on the medium determines the medium's robustness. A strongly recorded signal is desired because it can be read with less chance of error. Saturation recording ensures the greatest possible net variation in orientation of domains; hence, it is robust.
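A toy model of this write and read process is sketched below. It is a simplification under stated assumptions: each channel bit maps directly to one of the two saturated polarities, the read head reports only where polarity reverses, and the first bit is assumed known. The function names are illustrative, not taken from any drive firmware.

```python
def write_polarity(channel_bits):
    """Map channel bits to the two saturated states: 1 -> +1, 0 -> -1."""
    return [1 if bit else -1 for bit in channel_bits]


def read_transitions(polarity):
    """Positions where recorded polarity reverses, as a read head would sense."""
    return [i for i in range(1, len(polarity)) if polarity[i] != polarity[i - 1]]


def recover_bits(transitions, length, first_bit):
    """Rebuild the bitstream from transition positions and the initial bit."""
    flips = set(transitions)
    bits, current = [], first_bit
    for i in range(length):
        if i in flips:
            current ^= 1  # each sensed reversal toggles the recovered value
        bits.append(current)
    return bits


bits = [0, 1, 1, 0, 0, 0, 1, 0]
recorded = write_polarity(bits)
sensed = read_transitions(recorded)
print(recorded)
print(sensed)
print(recover_bits(sensed, len(bits), bits[0]))
```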

Hard-Disk Design

Hard-disk drives offer reliable storage, fast access time, fast data transfer, large storage capacity, and random access. Many gigabytes of data can be stored in a sealed environment at an extremely low cost and in a relatively small size.

In most systems, the hard-disk media are nonremovable; this lowers manufacturing cost, simplifies the medium's design, and allows increased capacity. The media typically comprise a series of disks, usually made of rigid aluminum alloy, stacked on a common spindle. The disks are coated on the top and bottom with a magnetic material such as ferric oxide, with an aluminum-oxide undercoat.

Alternatively, metallic disks can be electroplated with a magnetic recording layer. These magnetic thin-film disks allow closer spacing of data tracks, providing greater data density and faster track access. Thin-film disks are more durable than conventional oxide disks because the data surface is harder; this helps them to resist head crashes.

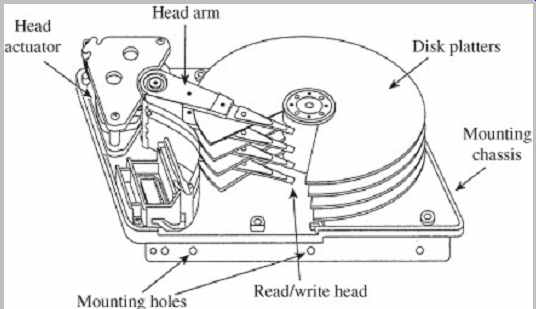

Construction of a hard-disk drive is shown in FIG. 7.

Hard disks rotate whenever the unit is powered. This is because the mass of the system might require several seconds to reach proper rotational speed. A series of read/write heads, one for each magnetic surface, are mounted on an arm called a head actuator. The actuator moves the heads across the disk surfaces in unison to seek data. In most designs, only one head is used at a time (some drives used for digital video are an exception); thus, read/write circuitry can be shared among all the heads.

Hard-disk heads float over the magnetic surfaces on a thin cushion of air, typically 20 µm or less. The head must be aerodynamically designed to provide proper flying height, yet negotiate disk surface warping that could cause azimuth errors, and also fly above disk contaminants.

However, the flying height limits data density due to spacing loss. A special, lubricated portion of the disk is used as a parking strip. In the event of a head crash, the head touches the surface, causing it to burn (literally, crash and burn). This usually catastrophically damages both the head and disks, necessitating, at best, a data-recovery procedure and drive replacement.

FIG. 7 Construction of a hard-disk drive showing disk platters, head actuator, head arm, and read/write heads.

Some disk drives use ferrite heads with metal-in-gap and double metal-in-gap technology. The former uses metal sputtered in the trailing edge of the recording gap to provide a well-defined record pulse and higher density; the latter adds additional magnetic material to further improve head response. Some heads use thin-film technology to achieve very small gap areas, which allows higher track density. Some drives use magneto-resistive heads (MRH) that use a nano-sized magnetic material in the read gap with a resistance that varies with magnetic flux. Typically, only one head is used at a time. The same head is used for both reading and writing; precompensation equalization is used during writing. Erasing is performed by overwriting.

Several types of head actuator designs are used; for example, a moving coil assembly can be used. The moving coil acts against a spring to position the head actuator on the disk surface. Alternatively, an electric motor and carriage arrangement could be used in the actuator. Small PCMCIA drives use a head-to-media contact recording architecture, thin-film heads, and vertical recording for high data density.

To maintain correct head-to-track tolerances, some drives calibrate their mechanical systems according to changes in temperature. With automatic thermal recalibration, the drive interrupts data flow to perform this function; this is not a hardship with most data applications, but can interrupt an audio or video signal. Some drives use smart controllers that do not perform thermal recalibration when in use; these drives (sometimes called AV drives) are recommended for critical audio and video applications.

Data on the disk surface is configured in concentric data tracks. Each track comprises one disk circumference for a given head position. The total tracks provided by all the heads at a given radius position is known as a cylinder, a strictly imaginary construction. Most drives segment data tracks into arcs known as sectors. A particular physical address within a sector, known as a block, is identified by a cylinder (positioner address), head (surface address), and sector (rotational angle address). Modified frequency modulation (MFM) coding as well as other forms of coding such as 2/3 and 2/7 run-length limited codes are used for high storage density.
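As a sketch of how a cylinder, head, and sector address identifies one block, the conventional mapping to a single linear block number is shown below. The geometry used (16 heads, 63 sectors per track) is a hypothetical example, and the convention that sectors are numbered from 1 while cylinders and heads are numbered from 0 is an assumption of this sketch.

```python
def chs_to_block(cylinder, head, sector, heads_per_cylinder, sectors_per_track):
    """Linear block number for a cylinder/head/sector address.

    Cylinders and heads count from 0; sectors count from 1.
    """
    return (cylinder * heads_per_cylinder + head) * sectors_per_track + (sector - 1)


def block_to_chs(block, heads_per_cylinder, sectors_per_track):
    """Inverse mapping: recover (cylinder, head, sector) from a block number."""
    cylinder, remainder = divmod(block, heads_per_cylinder * sectors_per_track)
    head, sector_index = divmod(remainder, sectors_per_track)
    return cylinder, head, sector_index + 1


HEADS, SECTORS = 16, 63  # hypothetical drive geometry
block = chs_to_block(2, 5, 10, HEADS, SECTORS)
print(block, block_to_chs(block, HEADS, SECTORS))
```

Consecutive block numbers first step through the sectors of one track, then through the heads of one cylinder, so a long transfer exhausts a cylinder before the actuator has to move.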

Hard-disk drives were developed for computer applications where any error is considered fatal. Drives are assembled in clean rooms. The atmosphere inside the drive housing is evacuated, and the unit is hermetically sealed to protect the media from contamination. Media errors are greatly reduced by the sealed disk environment.

However, an error correction encoding scheme is still needed in most applications. Manufactured disk defects, resulting in bad data blocks, are logged at the factory, and their locations are mapped in directory firmware so the drive controller will never write data to those defective addresses. Some hard-disk drives use heat sinks to prevent thermal buildup from the internal motors. In some cases, the enclosure is charged with helium to facilitate heat dissipation and reduce disk drag.

Data can be output in either serial or parallel; the latter provides faster data transfer rates. For faster access times, disk-based systems can be designed to write data in a logically organized fashion. A method known as spiraling can be used to minimize interruptions in data transfer by reducing sector seek times at a track boundary. Overall, hard disks should provide a sustained transfer rate of 5 to 20 Mbyte/s and access time (accounting for seek time, rotational latency, and command overhead) of 5 ms. Some disk drives specify burst data transfer rates; a sustained rate is a more useful specification. A rotational speed of 7,200 rpm to 15,000 rpm is recommended for most audio applications. In any case, RAM buffers are used to provide a continuous flow of output data.
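The rotational-latency component of access time follows directly from spindle speed: on average the wanted sector is half a revolution away. The sketch below works this out; the seek and overhead figures passed to `access_time_ms` are hypothetical placeholders, not specifications of any drive.

```python
def average_rotational_latency_ms(rpm):
    """Average latency is the time for half a revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def access_time_ms(seek_ms, rpm, overhead_ms):
    """Access time as the sum of seek time, rotational latency, and overhead."""
    return seek_ms + average_rotational_latency_ms(rpm) + overhead_ms


for rpm in (7200, 15000):
    print(rpm, round(average_rotational_latency_ms(rpm), 2))

# Hypothetical drive: 3.5 ms seek, 15,000 rpm, 0.2 ms command overhead.
print(round(access_time_ms(3.5, 15000, 0.2), 2))
```

Latency falls from roughly 4.2 ms at 7,200 rpm to 2 ms at 15,000 rpm, which is one reason faster spindles are favored when many tracks must be fetched from scattered locations.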

Hard-disk drives are connected to the host computer via SCSI, IDE (ATA), FireWire, USB, EIDE (ATA-2), and Ultra ATA (ATA-3 or Ultra DMA) connections. ATA drives offer satisfactory performance at a low price. SCSI-2 and SCSI-3 drives offer faster and more robust performance compared to EIDE; up to 15 drives can be placed on a wide SCSI bus. In practice, the transfer rate of a hard disk is faster than that required for a digital audio channel.
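A rough calculation shows how much headroom a drive has over a single audio channel. This sketch assumes linear PCM, takes 1 Mbyte as 1,000,000 bytes, and ignores seek time and file-system overhead, so the channel counts are upper bounds rather than achievable figures.

```python
def channel_data_rate(sampling_frequency, word_length_bits):
    """Bytes per second needed by one PCM channel."""
    return sampling_frequency * word_length_bits // 8


def max_channels(sustained_bytes_per_second, sampling_frequency, word_length_bits):
    """Upper bound on simultaneous channels for a given sustained transfer rate."""
    return sustained_bytes_per_second // channel_data_rate(
        sampling_frequency, word_length_bits
    )


print(channel_data_rate(44_100, 16))
for rate in (5_000_000, 20_000_000):
    print(rate, max_channels(rate, 44_100, 16))
```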

During playback, the drive delivers bursts of data to the output buffer, which in turn steadily delivers output data. The drive is free to access data randomly distributed on different platters. Similarly, given sufficient drive transfer rate, it is possible to record and play back multiple channels of audio. High-performance systems use a Redundant Array of Independent Disks (RAID) controller; RAID level 0 is often used in audio or video applications. A level 0 configuration uses disk striping, writing blocks of each file to multiple drives, to achieve fast throughput.
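Writing the blocks of a file across multiple drives is commonly done round-robin, an arrangement usually called striping. The sketch below models drives as simple lists; it is an illustration of the block layout only, not of any particular RAID controller.

```python
def stripe_blocks(blocks, drive_count):
    """Distribute consecutive blocks round-robin across the drives."""
    drives = [[] for _ in range(drive_count)]
    for index, block in enumerate(blocks):
        drives[index % drive_count].append(block)
    return drives


def read_striped(drives):
    """Reassemble the original block order from the striped drives."""
    count = len(drives)
    total = sum(len(drive) for drive in drives)
    return [drives[i % count][i // count] for i in range(total)]


striped = stripe_blocks(list("ABCDEFG"), 3)
print(striped)
print(read_striped(striped))
```

Because consecutive blocks sit on different drives, they can be transferred in parallel; the layout adds no redundancy, so the loss of one drive loses the file.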

It is sometimes helpful to periodically defragment or optimize a drive; a bundled or separate utility program places data into continuous sections for faster access.

Most disk editing is done through an edit decision list in which in/out and other edit points are saved as data addresses. Music plays from one address, and as the edit point approaches, the system accesses the next music address from another disk location, joining them in real time through a crossfade. This allows nondestructive editing; the original data files are not altered. Original data can be backed up to another medium, or a finished recording can be output using the edit list.

Highly miniaturized hard-disk drives are packaged to form removable cards that can be connected via USB or plugged directly into a receiving slot. This drive technology is sometimes known as "IBM Microdrive." The magnetic platter is nominally "1 inch" in diameter; the card package measures 42.8 × 36.4 × 5 mm overall. Other devices use platters with 17-mm or 18-mm diameter; outer card dimensions are 36.4 × 21.4 × 5 mm. Both flash memory and Microdrive storage are used for portable audio and video applications. Microdrives offer low cost per megabyte stored.

Digital Audio Workstations

Digital audio workstations are computer-based systems that provide extensive audio recording, storage, editing, and interfacing capabilities. Workstations can perform many of the functions of a traditional recording studio and are widely used for music production. The low cost of personal computers and audio software applications has encouraged their wide use by professional music engineers, musicians, and home recordists. The increases in productivity and creative possibilities are immense.

Digital audio workstations provide random access storage, and multitrack recording and playback. System functions may include nondestructive editing; digital signal processing for mixing, equalization, compression, and reverberation; subframe synchronization to timecode and other timebase references; data backup; networking; media removability; external machine control; sound-cue assembly; edit decision list I/O; and analog and digital data I/O. In most cases, this is accomplished with a personal computer, and dedicated audio electronics that are interfaced to the computer, or software plug-in programs that add specific functionality. Workstations provide multitrack operation. Time-division multiplexing is used to overcome limitations of a hardwired bus structure. In this way, the number of tracks does not equal the number of audio outputs. In theory, a system could have any number of virtual tracks, flexibly directed to inputs and outputs. In practice, as data is distributed over a disk surface, access time limits the number of channels that can be output from a drive. Additional disk drives can increase the number of virtual tracks available; however, physical input/output connections ultimately impose a constraint. For example, a system might feature 256 virtual tracks with 24 I/O channels.

Digital audio workstations use a graphical interface, with most human action taking place with a mouse and keyboard. Some systems provide a dedicated hardware controller. Although software packages differ, most systems provide standard "tape recorder" transport controls along with the means to name autolocation points, and punch-in and punch-out indicators. Time-scale indicators permit material to be measured in minutes, seconds, bars and beats, SMPTE timecode, or feet and frames. Grabber tools allow regions and tracks to be moved; moves can be precisely aligned and synchronized to events. Zoomer tools allow audio waveforms to be viewed at any resolution, down to the individual sample, for precise editing. Other features include fading, crossfading, gain change, normalization, tempo change, pitch change, time stretch and shrink, and morphing.

Digital audio workstations can provide mixing console emulation including virtual mixing capabilities. An image of a console surface appears on the display, with both audio and MIDI tracks. Audio tracks can be manipulated with faders, pan pots, and equalization modules, and MIDI tracks addressed with volume and pan messages. Other console controls include mute, record, solo, output channel assignment, and automation. Volume Unit (VU) meters indicate signal level and clipping status. Nondestructive bouncing allows tracks to be combined during production, prior to mixdown. Digital signal processing can be used to perform editing, mixing, filtering, equalization, reverberation, and other functions. Digital filters may provide a number of filter types, and can smooth the transition between previous and current filter settings.

Although the majority of workstations use off-the-shelf Apple or PC computers, some are dedicated, stand-alone systems. For example, a rack-mount unit can contain multiple hard-disk drives, providing recording capability for perhaps 96 channels. A remote-control unit provides a control surface with faders, keyboard, track ball, and other controllers.

Audio Software Applications

Audio workstation software packages permit audio recording, editing, processing, and analysis. Software packages permit operation in different time modes including number of samples, absolute frames, measures and beats, and different SMPTE timecodes. They can perform audio file conversion, supporting AVI, WAV, AIFF, RealAudio, and other file types. For example, AVI files allow editing of audio tracks in a video program. In most cases, software can create samples and interface with MIDI instruments.

The user can highlight portions of a file, ranging from a single sample to the entire piece, and perform signal processing. For example, reverberation could be added to a dry recording, using presets emulating different acoustical spaces and effects. Time compression and expansion allow manipulation of the timebase. For example, a 70-second piece could be converted to 60 seconds, without changing the pitch. Alternatively, music could be shifted up or down a standard musical interval, yielding a key change. A flat vocal part can be raised in pitch. A late entrance can be moved forward by trimming the preceding rest. A note that is held too long can be trimmed back. A part that is rushed can be slowed down while maintaining its pitch. Moreover, all those tools can be wielded against musical phrases, individual notes, or parts of notes.
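The two manipulations described above reduce to simple ratios, sketched here. The pitch calculation assumes equal temperament, in which each semitone is a frequency ratio of the twelfth root of 2; the function names are illustrative.

```python
def time_scale_ratio(original_seconds, target_seconds):
    """Duration ratio to apply when fitting a piece to a new length."""
    return target_seconds / original_seconds


def pitch_ratio(semitones):
    """Equal-tempered frequency ratio for a shift of `semitones` (may be negative)."""
    return 2.0 ** (semitones / 12.0)


# Fit a 70-second piece into 60 seconds without changing pitch.
print(round(time_scale_ratio(70, 60), 4))

# Frequency ratios for a shift up a whole tone and down a perfect fifth.
print(round(pitch_ratio(2), 4), round(pitch_ratio(-7), 4))
```

A time-scale ratio below 1 shortens the material and a pitch ratio above 1 raises it; a workstation applies each independently, which is what distinguishes these tools from simply changing playback speed.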

In many cases, software provides analysis functions. A spectrum analysis plug-in can analyze the properties of sound files. This software performs a fast Fourier transform (FFT), so a time-based signal can be examined in the frequency domain. A spectrum graph could be displayed either along one frequency axis, or along multiple frequency axes over time, in a "waterfall" display. Alternatively, signals could be plotted as a spectrogram, showing spectral and amplitude variations over time. Advanced users can select the FFT sample size and overlap, and apply different smoothing windows.
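A minimal sketch of the analysis step follows: one frame is multiplied by a smoothing window, transformed, and searched for its strongest bin. It uses a textbook recursive radix-2 FFT and a Hann window written with the standard library only; the 8-kHz sampling frequency, 64-sample frame, and 1-kHz test tone are hypothetical, and frame overlap is omitted for brevity.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def hann(n):
    """Hann smoothing window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def magnitude_spectrum(frame):
    """Magnitudes of the bins from 0 Hz up to just below half the sampling frequency."""
    windowed = [s * w for s, w in zip(frame, hann(len(frame)))]
    return [abs(c) for c in fft(windowed)[: len(frame) // 2]]


def peak_frequency(frame, sampling_frequency):
    """Frequency of the strongest bin in the frame."""
    mags = magnitude_spectrum(frame)
    peak_bin = max(range(len(mags)), key=mags.__getitem__)
    return peak_bin * sampling_frequency / len(frame)


fs = 8000
frame = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(64)]
print(peak_frequency(frame, fs))
```

The FFT sample size sets the frequency resolution (here 8000 / 64 = 125 Hz per bin); a waterfall or spectrogram display repeats this analysis on successive, usually overlapping, frames.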

Noise reduction plug-in software can analyze and remove noise such as tape hiss, electrical hum, and machinery rumble from sound files by distinguishing the noise from the desired signal. It analyzes a part of the recording where there is noise, but no signal, and then creates a noiseprint by performing an FFT on the noise.

Using the noiseprint as a guide, the algorithm can remove noise with minimal impact on the desired signal. For the best results, the user manually adjusts the amount of noise attenuation, attack and release of attenuation, and perhaps changes the frequency envelope of the noiseprint. Plug-ins can also remove clicks and pops from a vinyl recording.

Clicks can be removed with an automatic feature that detects and removes all clicks, or individual defects can be removed manually. The algorithm allows a number of approaches. For example, a click could be replaced by a signal surrounding the click, with a signal from the opposite channel, or a pencil tool could be used to draw a replacement waveform. Conversely, other plug-ins add noises to a new recording, to make it sound vintage.
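The simplest form of replacing a click with signal derived from its surroundings is straight-line interpolation between the good samples on either side, sketched below. Commercial plug-ins use more sophisticated prediction; the function name and sample values here are hypothetical.

```python
def repair_click(samples, start, end):
    """Replace samples[start:end] with a straight line between the neighbors.

    Requires 1 <= start < end < len(samples) so that both neighbors exist.
    """
    left, right = samples[start - 1], samples[end]
    span = end - start + 1
    repaired = list(samples)  # leave the original data untouched
    for i in range(start, end):
        fraction = (i - start + 1) / span
        repaired[i] = left + (right - left) * fraction
    return repaired


damaged = [0, 1, 2, 90, -80, 5, 6]  # two-sample click at indices 3 and 4
print(repair_click(damaged, 3, 5))
```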

One of the most basic and important functions of a workstation is its use as an audio editor. With random access storage, instantaneous auditioning, level adjustment, marking, and crossfading, nondestructive edit operations are efficiently performed. Many editing errors can be corrected with an "undo" command.

Using an edit cursor, clipboard, cut and paste, and other tools, sample-accurate cutting, copying, pasting, and splicing are easily accomplished. Edit points are located in ways analogous to analog tape recorders; sound is "scrubbed" back and forth until the edit point is found. In some cases, an edit point is assigned by entering a timecode number. Crossfade times can be selected automatically or manually. Edit splices often contain four parameters: duration, mark point, crossfade contour, and level. Duration sets the time of the fade. The mark point identifies the edit position, and can be set to various points within the fade. Crossfade contour sets the gain-versus-time relationship across the edit. Although a linear contour sets the midpoint gain of each segment at -6 dB, other midpoint gains might be more suitable. The level sets the gain of any segments edited together to help match them.
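The -6 dB midpoint of a linear contour can be checked numerically. For comparison, the sketch also evaluates an equal-power (sine/cosine) contour, a common alternative that is an assumption of this example rather than something specified in the text; it yields a midpoint gain of about -3 dB.

```python
import math


def linear_gains(position):
    """Gains (outgoing, incoming) for a linear contour; position runs 0 to 1."""
    return 1.0 - position, position


def equal_power_gains(position):
    """Gains (outgoing, incoming) for a sine/cosine equal-power contour."""
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


def to_db(gain):
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)


for name, contour in (("linear", linear_gains), ("equal power", equal_power_gains)):
    outgoing, incoming = contour(0.5)
    print(name, round(to_db(outgoing), 2), round(to_db(incoming), 2))
```

A linear contour suits material that is correlated across the edit (for example, two takes of the same passage), whereas a higher midpoint gain helps avoid a level dip when the segments are unrelated.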

Most editing tasks can be broken down into cut, copy, replace, align, and loop operations. Cut and copy functions are used for most editing tasks. A cut edit moves marked audio to a selected location, and removes it from the previous location. However, cut edits can be broken down into four types. A basic cut combines two different segments. Two editing points are identified, and the segments joined. A cut/insert operation moves a marked segment to a marked destination point. Three edit points are thus required. A delete/cut edit removes a segment marked by two edit points, shortening overall duration. A fourth cut operation, a wipe, is used to edit silence before or after a segment.

A copy edit places an identical duplicate of a marked audio section in another section. It thus leaves the original segment unchanged; the duration of the destination is changed, but not that of the source. A basic copy operation combines two segments. A copy/insert operation copies a marked segment to a destination marked in another segment. Three edit points are required.

A "replace" command exchanges a marked source section with a marked destination section, using four edit points. Three types of replace operations are performed.

An exact replace copies the source segment and inserts it in place of the marked destination segment. Both segment durations remain the same. Because the duration of the destination segment is not changed, any three of the four edit points define the operation. The fourth point could be automatically calculated. A relative replace edit operation permits the destination segment to be replaced with a source segment of a different duration; the location of one edit point is simply altered. A replace-with-silence operation writes silence over a segment. Both duration and timecode alignment are unchanged.
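Because an exact replace keeps source and destination durations equal, the missing fourth edit point follows from the other three. A sketch, using sample addresses as hypothetical edit points:

```python
def complete_exact_replace(source_in=None, source_out=None, dest_in=None, dest_out=None):
    """Fill in the single unspecified edit point of an exact replace.

    Source and destination segments must have the same duration.
    """
    points = [source_in, source_out, dest_in, dest_out]
    if points.count(None) != 1:
        raise ValueError("exactly one edit point must be left unspecified")
    if source_in is None:
        source_in = source_out - (dest_out - dest_in)
    elif source_out is None:
        source_out = source_in + (dest_out - dest_in)
    elif dest_in is None:
        dest_in = dest_out - (source_out - source_in)
    else:
        dest_out = dest_in + (source_out - source_in)
    return source_in, source_out, dest_in, dest_out


print(complete_exact_replace(source_in=1000, source_out=1500, dest_in=4000))
```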

An "align" edit command slips sections relative to timecode, slaving them to a reference, or specifying an offset. Several types of align edits are used. A synchronization align edit is used to slave a segment to a reference timecode address. One edit point defines the synchronization reference alignment point in the timecode, and the other marks the segment timecode address to be aligned. A trim alignment is used to slip a segment relative to timecode; care must be taken not to overlap consecutive segments. An offset alignment changes the alignment between an external timecode and an internal timecode when slaving the workstation.

A "loop" command creates the equivalent of a tape loop in which a segment is seamlessly repeated. In effect, the segment is sequentially copied. The loop section is marked with the duration and destination; the destination can be an unused track, or an existing segment.

When any edit is executed, the relevant parameters are stored in an edit list and recalled when that edit is performed. The audio source material is never altered.

Edits can be easily revised; moreover, memory is conserved. For example, when a sound is copied, there is no need to rewrite the data. An extensive editing session would result in a short database of edit decisions and their parameters, as well as system configuration, assembled into an edit list. Note that in the above descriptions of editing operations, the various moves and copies are virtual, not physical.
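The virtual nature of these edits can be sketched with a small edit list in which each entry merely points at a region of a source file. The `Segment` structure, the file name `take1`, and the helper functions are hypothetical illustrations, not the format of any actual workstation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    source: str  # name of the audio file on disk (hypothetical)
    start: int   # first sample address in the source
    end: int     # one past the last sample address


def delete_cut(edit_list, index):
    """Delete/cut: drop one segment, shortening overall duration."""
    return edit_list[:index] + edit_list[index + 1:]


def copy_insert(edit_list, index, destination):
    """Copy/insert: reference the same source region a second time."""
    return edit_list[:destination] + [edit_list[index]] + edit_list[destination:]


def duration(edit_list):
    """Total length of the edited program in samples."""
    return sum(segment.end - segment.start for segment in edit_list)


def render(edit_list, sources):
    """Play back by reading source samples in edit-list order."""
    output = []
    for segment in edit_list:
        output.extend(sources[segment.source][segment.start:segment.end])
    return output


sources = {"take1": list(range(10))}
original = [Segment("take1", 0, 4), Segment("take1", 4, 7), Segment("take1", 7, 10)]
edited = copy_insert(delete_cut(original, 1), 0, 2)
print(render(edited, sources), duration(edited))
```

The copy adds one small list entry rather than duplicating audio, and revising an edit means changing addresses in the list; the samples in `sources` are only ever read.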

Professional Applications

Much professional work is done on PC-based workstations, as well as dedicated workstations. A range of production and post-production applications are accommodated: music scoring, recording, video sweetening, sound design, sound effects, edit-to-picture, Foley, ADR (automatic dialogue replacement), and mixing.

To achieve this, a workstation can combine elements of a multitrack recorder, sequencer, drum machine, synthesizer, sampler, digital effects processor, and mixing board, with MIDI, SMPTE, and clock interfaces to audio and video equipment. Some workstations specialize in more specific areas of application.

Soundtrack production for film and video benefits from the inherent nature of synchronization and random access in a workstation. Instantaneous lockup and the ability to lock to vari-speed timecode facilitates production, as does the ability to slide individual tracks or cues back and forth, and the ability to insert or delete musical passages while maintaining lock. Similarly, a workstation can fit sound effects to a picture in slow motion while preserving synchronization.

In general, to achieve proper artistic balance, audio post-production work is divided into dialogue editing, music, effects, Foley, atmosphere, and mixing. In many cases, the audio elements in a feature film are largely re-created. A workstation is ideal for this application because of its ability to deal with disparate elements independently, locate, overlay, and manipulate sound quickly, synthesize and process sound, adjust timing and duration of sounds, and nondestructively audition and edit sounds. In addition, there is no loss of audio quality in transferring from one digital medium to another.

In Foley, footsteps and other natural sound effects are created to fit a picture's requirements. For example, a stage with sand, gravel, concrete, and water can be used to record footstep sounds in synchronization with the picture.

With a workstation, the sounds can be recorded to disk or memory, and easily edited and fitted. Alternatively, a library of sounds can be accessed in real time, eliminating the need for a Foley stage. Hundreds of footsteps or other sounds can be sequenced, providing a wide variety of effects. Similarly, film and video requires ambient sound, or atmosphere, such as traffic sounds or cocktail party chatter.

With a workstation, library sounds can be sequenced, then overlaid with other sounds and looped to create a complex atmosphere, to be triggered by an edit list, or crossfaded with other atmospheres. Disk recording also expedites dialogue replacement. Master takes can be assembled from multiple passes by setting cue points, and then fitted back to picture at locations logged from the original synchronization master. Room ambience can be taken from location tapes, then looped and overlaid on re-recorded dialogue.

A workstation can be used as a master MIDI controller and sequencer. The user can remap MIDI outputs, modify or remove messages such as aftertouch, and transmit patch changes and volume commands as well as song position pointers. It can be advantageous to transfer MIDI sequences to the workstation because of its superior timing resolution. For example, a delay problem could be solved by sliding tracks in fractions of milliseconds.

Workstations are designed to integrate with SMPTE timecode. SMPTE in- and out-points can be placed in a sequence to create a hit list. Offset information can be assigned flexibly for one or many events. Blank frame spaces can be inserted or deleted to shift events. Track times can be slid independently from times of other tracks, and sounds can be interchanged without otherwise altering the edit list.
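Offsetting an event amounts to converting its timecode address to a frame count, adding the offset, and converting back. The sketch below assumes non-drop-frame timecode at a whole-number frame rate (30 frames per second by default); drop-frame timecode needs additional handling that is not shown.

```python
def timecode_to_frames(timecode, frames_per_second=30):
    """Convert 'HH:MM:SS:FF' (non-drop-frame) to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * frames_per_second + frames


def frames_to_timecode(total_frames, frames_per_second=30):
    """Convert an absolute frame count back to 'HH:MM:SS:FF'."""
    seconds, frames = divmod(total_frames, frames_per_second)
    minutes, seconds = divmod(seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def apply_offset(timecode, offset_frames, frames_per_second=30):
    """Shift an event by a signed number of frames."""
    total = timecode_to_frames(timecode, frames_per_second) + offset_frames
    return frames_to_timecode(total, frames_per_second)


print(timecode_to_frames("01:00:10:15"))
print(apply_offset("01:00:10:15", 20))
```

Applying the same offset to every entry in a hit list shifts a whole group of events, while applying it to one entry slides a single cue.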

Commercial production can be expedited. For example, an announcer can be recorded to memory, assigning each line to its own track. In this way, tags and inserts can be accommodated by shifting individual lines backward or forward; transfers and back-timing are eliminated. Likewise, "doughnuts" can be produced by switching sounds, muting and soloing tracks, changing keys without changing tempos, cutting and pasting, and manipulating tracks with fade-ins and fade-outs. For broadcast, segments can be assigned to different tracks and triggered via timecode. In music production, for example, a vocal fix is easily accomplished by sampling the vocal to memory, bending the pitch, and then flying it back to the master, an easy job when the workstation records timecode while sampling.

Audio for Video Workstations

Some digital audio workstations provide digital video displays for video editing, and for synchronizing audio to picture. Using video capture tools, the movie can be displayed in a small insert window on the same screen as the audio tools; however, a full-screen display on a second monitor is preferable. Users can prepare audio tracks for QuickTime movies, with random access to many takes.

Using authoring tools, audio and video materials can be combined into a final presentation. Although the definitions have blurred, it is correct to classify video edit systems as linear, nonlinear, off-line, and on-line.

Nonlinear systems are disk-based, and linear systems are videotape-based. In many cases, an off-line system is used to edit audio and video programs, and the edit decision list (EDL) it generates is transferred to a higher-quality, more sophisticated on-line video system for final assembly. In other cases, and increasingly, on-line, nonlinear workstations provide all the tools, and production quality, for complete editing and post-production.

Audio for video workstations offer a selection of frame rates and sampling frequencies, as well as pre-roll, post-roll, and other video prerequisites. Some workstations also provide direct control over external videotape recorders.

Video signals are recorded using data reduction algorithms to reduce throughput and storage demands.

Depending on the compression ratio, requirements can vary from 8 Mbytes/min to over 50 Mbytes/min. In many cases, video data on a hard disk should be defragmented for more efficient reading and writing.
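These per-minute figures translate directly into disk space and sustained throughput, as the following arithmetic sketch shows. The 30-minute program length is a hypothetical example.

```python
def storage_mbytes(program_minutes, mbytes_per_minute):
    """Disk space needed for a program at a given compressed data rate."""
    return program_minutes * mbytes_per_minute


def throughput_mbytes_per_second(mbytes_per_minute):
    """Sustained transfer rate the drive must deliver for real-time playback."""
    return mbytes_per_minute / 60.0


for rate in (8, 50):
    print(rate, storage_mbytes(30, rate), round(throughput_mbytes_per_second(rate), 3))
```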

Depending on the application, either the audio or video program can be designated as the master. When a picture is slaved to audio, for example, the movie will respond to audio transport commands such as play, rewind, and fast forward. A picture can be scrubbed with frame accuracy, and audio dropped into place. Alternatively, a user can park the movie at one location while listening to audio at another location. In any case, there is no waiting while audio or videotapes shuttle while assembling the program.

Using machine control, the user can audition the audio program while watching the master videotape, then lay back the final synchronized audio program to a digital audio or video recorder.

During a video session, the user can capture video clips from an outside analog source. Similarly, audio clips can be captured from analog sources. Depending on the onboard audio, or sound card used, audio can be captured in monaural or stereo, at a variety of sampling rates, and word length resolutions. Similarly, source elements that are already digitized can be imported directly into the application. For example, sound files in the AIFF or WAV formats can be imported, or tracks can be imported from CD-Audio or CD-ROM.

Once clips are placed in the project window, authoring software is used to audition and edit clips, and combine them into a movie with linked tracks. For example, the waveforms comprising audio tracks can be displayed, and specific nuances or errors processed with software tools.

Audio and video can be linked and edited together, or unlinked and edited separately. In- and out-points and markers can be set, identifying timed transition events such as a fade. Editing is nondestructive; the recorded file is not manipulated, only the directions for replaying it; edit points can be revised ad infinitum. Edited video and audio clips can be placed relative to other clips using software tools, and audio levels can be varied. The finished project can be compiled into a QuickTime movie, for example, or with appropriate hardware can be recorded to an external videotape or optical disc recorder.