Musical Instrument Digital Interface (MIDI)

The Musical Instrument Digital Interface (MIDI) is widely used to interconnect electronic music instruments and other audio production equipment, as well as music notation devices. MIDI is not a digital audio interface because audio signals do not pass through it. Instead, MIDI conveys control information as well as timing and synchronization information to control musical events and system parameters of music devices. For example, striking a key on a MIDI keyboard generates a note-on message, containing information such as the note's pitch and the velocity with which the key was struck. MIDI allows one instrument to control others, eliminating the requirement that each instrument have a dedicated controller. Since a MIDI file notates musical events, MIDI files are very small compared to WAV files and can be streamed with low overhead or downloaded very quickly. However, at the client end, the user must have a MIDI synthesizer installed on the local machine to render the data into music. Many sound cards contain MIDI input and output ports within a 15-pin joystick connector; an optional adapter is required to provide the 5-pin DIN jack comprising a MIDI port. A MIDI port provides 16 channels of communication, allowing many connection options.

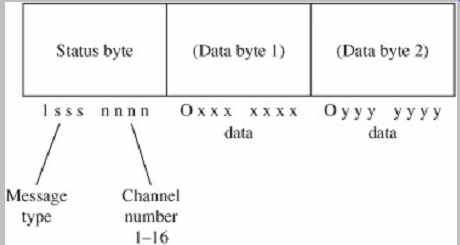

FIG. 10 The general MIDI message protocol conveys data in sequences of up to three words, comprising one status word and two data words.

MIDI is an asynchronous, unidirectional serial interface. It operates at 31,250 bits/second (31.25 kbaud). The data format uses 8-bit bytes; most messages are conveyed as a one-, two-, or three-word sequence, as shown in FIG. 10.

The first word in a sequence is a status word describing the type of message and channel number (up to 16). Status words begin with a 1 and may convey a message such as "note on" or "note off." Subsequent words (if any) are data words. Data words begin with a 0 and contain message particulars. For example, if the message is "note on," two data bytes describe which note and its playback level.
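The status/data word layout described above can be sketched in a few lines of Python. This is a minimal illustration, not a complete MIDI parser: the note-on status value (upper nibble 0x9) is taken from the MIDI 1.0 specification rather than from this text, and the function names are hypothetical.

```python
NOTE_ON = 0x9  # upper status nibble for "note on" (MIDI 1.0 assignment)


def build_note_on(channel, note, velocity):
    """Return the three-word note-on message for a channel numbered 1-16."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1..16")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("data words carry 7-bit values (0..127)")
    # 0x9 in the upper nibble already sets the leading bit to 1 (status word).
    status = (NOTE_ON << 4) | (channel - 1)
    # Data words are limited to 0..127, so their leading bit is always 0.
    return bytes([status, note, velocity])


def parse_message(msg):
    """Split a message into message type, channel (1-16), and data words."""
    status, data = msg[0], msg[1:]
    if not status & 0x80:
        raise ValueError("first word must be a status word (leading bit 1)")
    if any(b & 0x80 for b in data):
        raise ValueError("data words must have leading bit 0")
    return {"type": status >> 4, "channel": (status & 0x0F) + 1, "data": list(data)}
```

For instance, `parse_message(build_note_on(1, 60, 100))` recovers the message type, the channel, and the two data words (note number and velocity).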

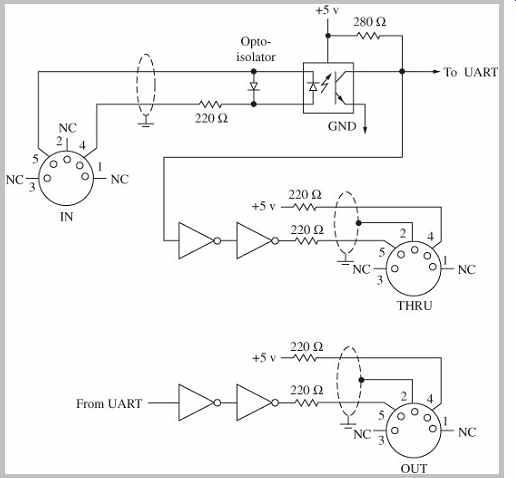

MIDI can also convey system exclusive messages with data relating to specific types of equipment. These sequences can contain any number of bytes. MIDI can also convey synchronization and timing information. Status bytes can convey MIDI beats, song pointers can convey specific locations from the start of a song, and MIDI Time Code (MTC) can convey SMPTE/EBU timecode. MIDI Show Control (MSC) is used to control multimedia and theatrical devices such as lights and effects. General MIDI (GM) standardizes many functions such as instrument definitions; it is used in many game and Internet applications where conformity is needed. MIDI connections typically use a 5-pin DIN connector, but XLR connectors can also be used. A twisted-pair cable is used over a maximum length of 50 feet. As shown in FIG. 11, many MIDI devices have three MIDI ports for MIDI In, MIDI Out, and MIDI Thru, to receive data, output data, and duplicate and pass along received data, respectively.

The original MIDI specification did not describe what instrument sounds (called patches) should be included on a synthesizer. Thus, a song recorded with piano, bass, and drum sounds on one synthesizer could be played back with different instruments on another. The General MIDI specification set a new standard governing the ordering and naming of sounds in a synthesizer's memory banks. This allows a song written on one manufacturer's instrument to play back with the correct sounds on another manufacturer's instrument. General MIDI provides 128 musical sounds and 47 percussive sounds.

FIG. 11 The MIDI electrical interface uses In, Thru, and Out ports, typically using 5-pin DIN connectors.

AES11 Digital Audio Reference Signal

The AES11-1997 standard specifies criteria for synchronization of digital audio equipment in studio operations. It is important for interconnected devices to share a common timing signal so that individual samples are processed simultaneously. Timing inaccuracies can lead to increased noise, and even clicks and pops in the audio signal. With a proper reference, transmitters, receivers, and D/A converters can all work in unison.

Devices must be synchronized in both frequency and phase, and be SMPTE time synchronous as well. It is relatively easy to achieve frequency synchronization between two sources; they must follow a common clock, and the signals' bit periods must be equal. However, to achieve phase-synchronization, the bit edges in the different signals must begin simultaneously.

When connecting one digital audio device to another, the devices must operate at a common sampling frequency. Equally important, bits in the transmitted and received signals must begin simultaneously. These synchronization requirements are relatively easy to achieve.

Most digital audio data streams are self-clocking; the receiving circuits read the incoming modulation code, and reference the signal to an internal clock to produce stable data. In some cases, an independent synchronization signal is transmitted. In either case, in simple applications, the receiver can lock to the bitstream's sampling frequency.

However, when numerous devices are connected, it is difficult to obtain frequency and phase synchronization.

Different types of devices use different timebases; hence, they exhibit noninteger relationships. For example, at 44.1 kHz, a digital audio bitstream will clock 1471.47 samples per NTSC video frame; sample edges align only once every 100 frames. Other data, such as the 192-sample channel status block, creates additional synchronization challenges; in this case, the audio sample clock, channel status, and video frame will align only once every 20 minutes.
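The noninteger relationship between audio samples and video frames can be checked with exact rational arithmetic. The sketch below assumes the NTSC frame rate is exactly 30000/1001 frames per second (a value not stated in the text); the function names are illustrative only.

```python
from fractions import Fraction

# Assumption: NTSC color frame rate of exactly 30000/1001 (about 29.97) frames/s.
NTSC_FRAME_RATE = Fraction(30000, 1001)


def samples_per_frame(sample_rate_hz, frame_rate):
    """Exact number of audio samples clocked during one video frame."""
    return Fraction(sample_rate_hz) / frame_rate


def frames_until_aligned(sample_rate_hz, frame_rate):
    """Smallest number of frames that spans a whole number of samples."""
    # The ratio is stored in lowest terms, so the denominator is the answer.
    return samples_per_frame(sample_rate_hz, frame_rate).denominator


if __name__ == "__main__":
    for fs in (44100, 48000):
        ratio = samples_per_frame(fs, NTSC_FRAME_RATE)
        print(fs, float(ratio), frames_until_aligned(fs, NTSC_FRAME_RATE))
```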

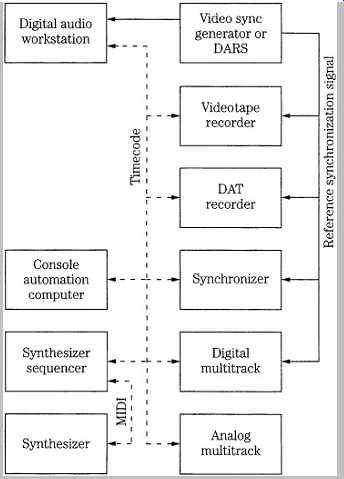

To achieve synchronization, a common clock with good frequency stability should be distributed through a studio. In addition, external synchronizers are needed to read SMPTE timecode, and provide time synchronization between devices. FIG. 12 shows an example of synchronization for an audio/video studio. Timecode is used to provide general time lock; a master oscillator (using AES11 or video sync) provides a stable clock to ensure frequency lock of primary devices (the analog multitrack recorder is locked via an external synchronizer and synthesizers are not locked). It is important that the timecode reference is different from the frequency lock reference. In addition, most timecode sources are not sufficiently accurate to provide frequency and phase-locked references through a studio.

FIG. 12 An example of synchronization in an audio/video studio showing a reference synchronization signal, timecode, and MIDI connections.

Although an AES3 line could be used to distribute a very stable clock reference, a dedicated word clock is preferred. Specifically, the AES11 Digital Audio Reference Signal (DARS) has been defined, providing a clocking signal with high frequency stability for jitter regulation. Using this reference signal, any sample can be time-aligned to any other sample, or with the addition of a timecode reference, aligned to a specific video frame edge. AES11 uses the same format, electrical configuration, and connectors as AES3; only the preamble is used as a reference clock. The AES11 signal is sometimes called "AES3 black" because when displayed it looks like an AES3 signal with no audio data present.

The AES11 standard defines Grade 1 long-term frequency accuracy (accurate to within ±1 ppm) and Grade 2 long-term accuracy (accurate to within ±10 ppm), where ppm is one part per million. Use of Grade 1 or 2 is identified in byte 4, bits 0 and 1 of the channel status block: Grade 1 (01), Grade 2 (10). With Grade 1, for example, a reference sampling clock of 44.1 kHz would require an accuracy of ±0.0441 Hz. A Grade 1 system would permit operation with 16- or 18-bit audio data; 20-bit resolution might require a more accurate reference clock, such as one derived from a video sync pulse generator (SPG).
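The conversion from a ppm grade to an absolute frequency tolerance is simple arithmetic, sketched below. The dictionary and function names are illustrative and are not part of the AES11 standard.

```python
# Long-term accuracy of each AES11 grade, in parts per million (from the text).
GRADE_PPM = {1: 1.0, 2: 10.0}


def tolerance_hz(sample_rate_hz, grade):
    """Allowed deviation (plus or minus, in Hz) of the reference sampling clock."""
    return sample_rate_hz * GRADE_PPM[grade] / 1_000_000


if __name__ == "__main__":
    for grade in (1, 2):
        print(grade, tolerance_hz(44_100, grade), tolerance_hz(48_000, grade))
```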

Time-code references lack sufficient stability. When synchronizing audio and video equipment, the DARS must be locked to the video synchronization reference.

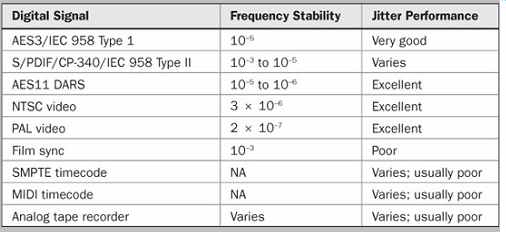

Frequency stability of the clocking in several audio and video interface signals is summarized in Table 1.

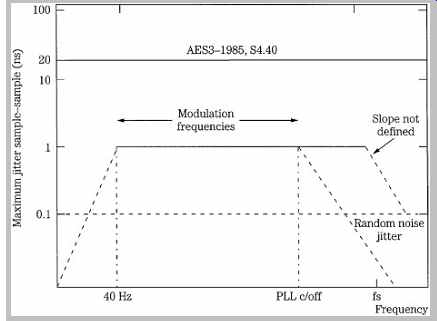

A separate word clock cable is run from the reference source to the reference clock input of each digital audio device in a star-type architecture, using a distribution amplifier as with a video sync pulse generator. Only a small buffer is needed to reclock data at the receiver. For example, a 5-ms buffer would accommodate 8 minutes of program that varies by ±10 ppm from the master reference. When A/D or D/A converters do not have internal clocks, and derive their clocks from the DARS, timing accuracy of the DARS must be increased; any timing errors are applied directly to the reconstructed audio signal. For 16-bit resolution at the converter, the AES11 standard recommends that peak sample clock modulation, sample to sample, be less than ±1 ns at all modulation frequencies above 40 Hz, and that random clock jitter, sample to sample, be less than ±0.1 ns per sample clock period, as shown in FIG. 13. Jitter is discussed in more detail in Section 4.
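The buffer figure quoted above follows from multiplying the program duration by the clock offset. The sketch below shows that arithmetic; it assumes a constant frequency offset for the whole program, and the function names are hypothetical.

```python
def accumulated_drift_s(duration_s, offset_ppm):
    """Timing error built up over a program at a constant clock offset."""
    return duration_s * offset_ppm / 1_000_000


def max_program_s(buffer_s, offset_ppm):
    """Longest program a reclocking buffer of the given depth can absorb."""
    return buffer_s * 1_000_000 / offset_ppm


if __name__ == "__main__":
    # 8 minutes at 10 ppm, compared against a 5-ms buffer.
    print(accumulated_drift_s(8 * 60, 10.0), max_program_s(0.005, 10.0))
```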

Table 1 Frequency stability of clocking in some audio and video interface signals.

FIG. 13 Timebase tolerances must be increased when DARS is used to clock sample converters. Recommended jitter limits for sample clocks in 16-bit A/D and D/A converters are depicted.

AES18 User Data Channels

The AES18-1996 standard describes a method for formatting the user data channels found within the AES3 interface. This format is derived from the packet-based High-Level Data Link Control (HDLC) communications protocol. It conveys text and other message data that might be related or unrelated to the audio data. The user data channel is a transparent carrier providing a constant data rate when the AES3 interface operates at an audio sampling frequency of 48 kHz ± 12.5%. A message is sent as one or more data packets, each with the address of its destination; a receiver reads only the messages addressed to it. Packets can be added or deleted as is appropriate as data is conveyed from one device to another. A packet comprises an address byte, control byte, address extension byte (optional), and an information field that is no more than 16 bytes. Multiple packets are placed in an HDLC frame; it contains a beginning flag field, packets, a CRCC field, and an ending flag. As described above, each AES3 subframe contains one user bit. User data is coded as an NRZ signal, LSB leading. Typical user bit applications can include messages such as scripts, subtitles, editing information, copyright, performer credits, switching instructions, and other annotations.

AES24 Control of Audio Devices

The AES24-1-1999 standard describes a method to control and monitor audio devices via digital data networks.

It is a peer-to-peer protocol, so that any device may initiate or accept control and monitoring commands. The standard specifies the formats, rules, and meanings of commands, but does not define the physical manner in which commands (or audio signals) are transmitted; thus, it is applicable to a variety of communication networks. Using AES24, devices from different manufacturers can be controlled and monitored with a unified command set within a standard format. Each AES24 device is uniquely addressable by the transport network software, such as through a port number. Devices may be signal processors, system controllers, or other components, with or without user interfaces. Devices contain hierarchical objects that may include functions or controls such as gain controls, power switches, or pilot lamps. Object-to-object communication is provided because every object has a unique address. Object addresses have two forms: an object path (a pre-assigned text string) and an object address (a 48-bit unsigned integer).

Messages pass from one object to another; all messages share a common format and a set of exchange rules. In normal message exchange, an object creates and sends a message to another object, specifying a target method. Upon receiving the message, the required action is performed by the target method; if the original message requested a reply when the action has been completed, the target returns a reply stating the outcome. Because the standard is an abstraction, the action may be completed by any means, such as voltage-controlled amplifiers or digital signal processing software. AES24 sub-networks can be connected to form AES24 internetworks. Complex networks with bridges, hubs, and repeaters are possible.

However, each device must be reachable by any other device. In one application, a PC-based controller may run a program with a graphical display of faders and meters (each one is an object). It communicates with external hardware processing devices (containing other objects) using the AES24 protocol. Other objects supervise initialization and configuration processes.

Sample Rate Converters

In a monolithic digital world, there would be one sampling frequency. In practice, the world is populated by many different sampling frequencies. Although 44.1 kHz and 48 kHz are the most common, 32 kHz is used in many broadcast applications. The AES5-1984 standard originally defined the use of these sampling frequencies.

Sound cards use frequencies of 44.1, 22.05, and 11.025 kHz (among others), and 44.056 kHz is often used with video equipment. The DVD-Audio format defines several sampling frequencies including 88.2, 96, 176.4, and 192 kHz. The Blu-ray format can use 48-, 96-, and 192-kHz sampling frequencies. In addition, in many applications, vari-speed is used to bend pitch, producing radically diverse sampling rates.

Devices generally cannot be connected when their sampling rates differ. Even when sources are recorded at a common sampling frequency, their data streams can be asynchronous and thus differ by a few hertz; they must be synchronized to an exact common frequency. In addition, a signal can be degraded by jitter, which changes the accuracy of the signal's sample rate. In some cases, sample rate can be changed with little effort. For example, a 44.1-kHz signal can be converted to 44.056 kHz by removing a sample approximately every 23 ms, or about every 1000 samples. More typically, dedicated converters are needed.
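The spacing of the removed samples in the 44.1-kHz to 44.056-kHz example can be worked out directly. The following is only a sketch of that arithmetic, with an illustrative function name; it does not perform any filtering.

```python
def drop_spacing(source_hz, target_hz):
    """Return (samples, seconds) between removed samples for a small rate cut."""
    surplus_per_second = source_hz - target_hz  # samples to discard each second
    if surplus_per_second <= 0:
        raise ValueError("target rate must be lower than source rate")
    return source_hz / surplus_per_second, 1.0 / surplus_per_second


if __name__ == "__main__":
    samples, seconds = drop_spacing(44_100, 44_056)
    print(round(samples), round(seconds * 1000, 1))
```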

A synchronous sample rate converter converts one rate to another using an integer ratio. The output rate is fixed in relation to the input rate; this limits applications. An asynchronous sample rate converter (ASRC) can accept a dynamically changing input sampling frequency, and output a constant and uninterrupted sampling frequency, at the same or different frequency. The input and output rates can have an irrational ratio relationship. In other words, the input and output rates are completely decoupled. In addition, the converter will follow any slow rate variations. This solves many interfacing problems.

Conceptually, sample rate conversion works like this: a digital signal is passed through a D/A converter, the analog signal is lowpass-filtered, and then passed through an A/D converter operating at a different sampling frequency. In practice, these functions can be performed digitally, through interpolation and decimation. The input sampling frequency is increased to a very high oversampling rate by inserting zeros in the bitstream, and then is digitally lowpass-filtered. This interpolated signal, with a very high data rate, is then decimated by deleting output samples and digitally lowpass-filtered to decrease output sampling frequency to a rate lower than the oversampling rate. The resolution of the conversion is determined by the number of samples available to the decimation filter. For example, for 16-bit accuracy, the difference between adjacent interpolated samples must be less than 1 LSB at the 16-bit level. This in turn determines the interpolation ratio; an oversampling ratio of 65,536 is required for 16-bit accuracy.

This ratio could be realized with a time-varying finite impulse response (FIR) filter of length 64, in which only nonzero data values are considered, but the required master clock signal of 3.27 GHz is impractical. Another approach uses polyphase filters. A lowpass filter highly oversampled by a factor N can be decomposed into N different filters, each filter using a different subset of the original set of coefficients. If the subfilter coefficients are relatively fixed in time, their outputs can be summed to yield the original filter. They act as a parallel filter bank differing in their linear-phase group delays. This can be used for sample rate conversion. If input samples are applied to the polyphase filter bank, samples can be generated at any point between the input samples by selecting the output of a particular polyphase filter. An output sample late in the input sampling period would require a short filter delay (a large offset in the coefficient set), but an early output sample would demand a long delay (a small offset). That is, the offset of the coefficient set is proportional to the timing of the input/output sample selection. As before, accurate conversion requires 65,536 (2^16) polyphase filters; in practice, reduction methods reduce the number of coefficients.
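The polyphase decomposition can be illustrated with a small interpolation example. The sketch below compares direct interpolation (zero insertion followed by one long FIR filter) with a bank of N subfilters, each holding every N-th coefficient. The coefficient values and function names are arbitrary illustrations, far shorter than a practical converter would use.

```python
def interpolate_direct(x, h, n):
    """Insert n-1 zeros after each input sample, then apply FIR filter h."""
    stuffed = []
    for sample in x:
        stuffed.append(sample)
        stuffed.extend([0.0] * (n - 1))
    out = []
    for i in range(len(stuffed)):
        acc = 0.0
        for k, coeff in enumerate(h):
            if i - k >= 0:
                acc += coeff * stuffed[i - k]
        out.append(acc)
    return out


def interpolate_polyphase(x, h, n):
    """Same output from n subfilters; subfilter p holds h[p], h[p+n], ..."""
    phases = [h[p::n] for p in range(n)]
    out = []
    for m in range(len(x)):
        for p in range(n):  # each subfilter yields one output time offset
            acc = 0.0
            for j, coeff in enumerate(phases[p]):
                if m - j >= 0:
                    acc += coeff * x[m - j]
            out.append(acc)
    return out


if __name__ == "__main__":
    x = [1.0, 2.0, -1.0, 0.5]
    h = [0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]  # linear interpolator, n = 4
    print(interpolate_direct(x, h, 4) == interpolate_polyphase(x, h, 4))
```

The polyphase form never multiplies by the inserted zeros, so each output sample costs only the length of one subfilter. Choosing which subfilter output to use corresponds to choosing the point between input samples at which an output is generated.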

To summarize, by adjusting the interpolation and decimation processes, arbitrary rate changes can be accommodated. These functions are effectively performed through polyphase filtering. Input data is applied to a highly oversampled digital lowpass filter. It has a passband of 0 Hz to 20 kHz, and many times the filter coefficients needed to provide this response (equivalent to thousands of polyphase filters). Depending on the instantaneous temporal relationship between input and output samples, a selected set of these coefficients processes input samples and computes the amplitude of output samples, at the proper output frequency.

The computation of the ratio between the input and output samples is itself digitally filtered. Effectively, when the frequency of the jitter is higher than the cutoff frequency of the polyphase selection process, the jitter is attenuated; this reduces the effect of any jitter on the input clock. Short periods of sample-rate conversion can thus be used to synchronize signals. An internal first-in first-out (FIFO) buffer is used to absorb data during dynamically changing input sample rates. Input audio samples enter the buffer at the input sampling rate, and are output at the output rate. For example, a timing error of one sample period can exist at the input, but the sampling rate converter can correct this by distributing the content of 99 to 101 input samples over 100 output samples. In this way, the ASRC isolates the jittered clock recovered from the incoming signal, and synchronizes the signal with an accurate low-jitter clock.

Devices such as this make sample rate conversion essentially transparent to the user, and overcome many interfacing problems such as jitter. Because rate converters mathematically alter data values, when sampling rate conversion is not specifically required, it is better to use dedicated reclocking devices to solve jitter problems.

Fiber-Optic Cable Interconnection

With electric cable, information is transmitted by means of electrons. With fiber optics, photons are the carrier. Signals are conveyed by sending pulses of light through an optically clear fiber. Bandwidth is fiber optic's forte; transmission rates of 1 Gbps are common. Either glass or plastic fiber can be used. Plastic fiber is limited to short distances (perhaps 150 feet) and is thicker in diameter than glass fiber; communications systems use glass fiber. The purity of a glass fiber is such that light can pass through 15 miles of it before the light's intensity is halved. In comparison, 1 inch of window glass will halve light intensity. Fibers are pure to within 1 part per billion, yielding absorption losses less than 0.2 dB/km. Fiber-optic communication is not affected by electromagnetic and radio-frequency interference, lightning strikes and other high voltage, and other conditions hostile to electric signals. For example, fiber-optic cable can be run in the same conduit as high power cables, or along the third rail of an electric railroad.

Moreover, because a fiber-optic cable does not generate a flux signal, it causes no interference of its own. Because fiber-optic cables are nonmetallic insulators, ground loops are prevented. A fiber is safe because it cannot cause electrical shock or spark. Fiber optics also provides low propagation delay, low bit-error rates, small size, light weight, and ruggedness.

Although bandwidth is very high, the physical dimensions of the fiber-optic cables are small. Fiber size is measured in micrometers, with a typical fiber diameter ranging from 10 µm to 200 µm. Moreover, many individual fibers might be housed in a thin cable. For example, there may be 6 independent fibers in a tube, with 144 tubes within one cable.

Any fiber-optic system, whether linking stereos, cities, or continents, consists of three parts: an optical source acting as an optical modulator to convert an electrical signal to a light pulse; a transmission medium to convey the light; and an optical receiver to detect and demodulate the signal.

The source can be a light-emitting diode (LED), laser diode, or other component. Fiber optics provides the transmission channel. Positive-intrinsic-negative (PIN) photodiodes or avalanche photodiodes (APD) can serve as receivers. As with any data transmission line, other components such as encoding and decoding circuits are required. In general, low-bandwidth systems use LEDs and PINs with TTL interfaces and multimode fiber. High bandwidth systems use lasers and APDs with emitter coupled logic (ECL) interfaces and single-mode fiber.

Laser sources are used for long-distance applications. Laser sources can be communication laser diodes, distributed feedback lasers, or lasers similar to those used in optical disc pickups. Although the low-power delivery available from LEDs limits their applications, they are easy to fabricate and useful for short-distance, low-bandwidth transmission, when coupled with PIN photodiodes. Over longer distances, LEDs can be used with single-mode fiber and avalanche photodiodes. Similarly, selection of the type of detector often depends on the application. Data rate, detectivity, crosstalk, wavelength, and available optical power are all factors.

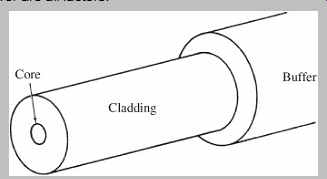

FIG. 14 A fiber-optic cable is constructed with fiber core and cladding, surrounded by a buffer.

Fiber-Optic Cable

Optical fiber operates as a light pipe that traps entering light. The glass or plastic rod, called the core, is surrounded by a reflective covering, called the cladding, that reflects light back toward the center of the fiber, and hence to the destination. A protective buffer sleeve surrounds the cladding. A fiber-optic cable is shown in FIG. 14. The cladding comprises a glass or plastic material with an index of refraction lower than that of the core. This boundary creates a highly efficient reflector. When light traveling through the core reaches the cladding, the light is either partly or wholly reflected back into the core. If the angle of the ray with the boundary is less than the critical angle (determined from the refractive indexes of the core and the cladding), the ray is partly refracted into the cladding, and partly reflected into the core.

If the ray is incident on the boundary at an angle greater than the critical angle, the ray is totally reflected back into the core. This is known as total internal reflection (TIR).

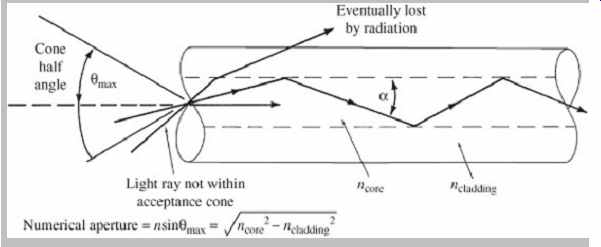

Thus, all rays at incident angles greater than the critical angle are guided by the core, affected only by absorption and connector losses. TIR is shown in FIG. 15. The principle of TIR is credited to British physicist John Tyndall. In 1870, using a candle and two beakers of water, he demonstrated that light could travel contained within a stream of flowing water.

FIG. 15 Total internal reflection (TIR) characterizes the propagation of light through the fiber. The numerical aperture of a stepped index optical fiber is a measure of its light acceptance angle and is determined by the refractive indexes of the core and the cladding.
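Both the critical angle and the numerical aperture follow from the two refractive indexes. The sketch below uses the standard relations sin(critical angle) = n_cladding / n_core, with the angle measured from the normal to the core/cladding boundary, and NA = sqrt(n_core^2 - n_cladding^2). The index values 1.48 and 1.46 are hypothetical and are not taken from the text.

```python
import math


def critical_angle_deg(n_core, n_clad):
    """Angle of incidence (from the boundary normal) above which TIR occurs."""
    return math.degrees(math.asin(n_clad / n_core))


def numerical_aperture(n_core, n_clad):
    """Numerical aperture of a stepped index fiber."""
    return math.sqrt(n_core ** 2 - n_clad ** 2)


def acceptance_half_angle_deg(n_core, n_clad, n_outside=1.0):
    """Largest entry angle (from the fiber axis) that is still guided."""
    return math.degrees(math.asin(numerical_aperture(n_core, n_clad) / n_outside))


if __name__ == "__main__":
    n_core, n_clad = 1.48, 1.46  # hypothetical glass indexes
    print(critical_angle_deg(n_core, n_clad))
    print(numerical_aperture(n_core, n_clad))
    print(acceptance_half_angle_deg(n_core, n_clad))
```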

In 1873, James Clerk Maxwell proved that the equations describing the behavior of electric waves apply equally to light. Moreover, he showed that light travels in modes (mathematically, eigenvalue solutions to his electromagnetic field equations that characterize waveguides). In the case of optical fiber, this represents one or more paths along the light waveguide. Multimode fiber-optic cable has a core diameter (perhaps 50 µm to 500 µm) that is large compared to the wavelength of the light source; this allows multiple propagation modes. The result is multiple path lengths for different modes of the optical signal; simply put, most rays of light are not parallel to the fiber axis.

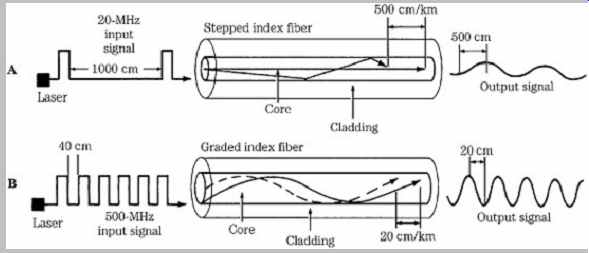

Multimode fiber is specified according to the reflective properties of the boundary: stepped index and graded index. In stepped index fiber, the boundary between the core and cladding is sharply defined, causing light to reflect angularly. Light with an angle of incidence less than the critical angle will pass into the cladding. With graded index fiber, the index of refraction decreases gradually from the central axis outward. This gradual interface results in smoother reflection characteristics. In either case, in a multimode fiber, most light travels within the core.

Performance of multimode fiber is degraded by pulse broadening caused by intermodal and intramodal dispersion, both of which decrease the bandwidth of the fiber. Stepped index fiber is inferior to graded index in this respect. With intermodal dispersion (also called modal dispersion), some light reaches the end of a multimode fiber earlier than other light due to path length differences in the internal reflective angles. This results in multiple modes.

In stepped index cable, there is delay between the lowest order modes, those modes that travel parallel to the fiber axis, and the highest-order modes, those propagating at the critical angle. In other words, reflections at steeper angles follow a longer path length, and leave the cable after light traveling at shallow angles. A stepped index fiber can exhibit a delay of 60 ns/km. This modal dispersion significantly reduces the fiber's available bandwidth per kilometer, and is a limiting factor. This dispersion is shown in FIG. 16A.
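The delay between the axial mode and the mode traveling at the critical angle can be estimated with a simple ray model. The sketch below assumes meridional rays only and uses hypothetical indexes (1.48 core, 1.46 cladding); with those values the result is of the same order as the 60 ns/km figure quoted above. The function name is illustrative.

```python
C_VACUUM_M_PER_S = 299_792_458.0


def modal_delay_s(length_m, n_core, n_clad):
    """Arrival-time spread between axial and critical-angle rays (ray model)."""
    axial_time = length_m * n_core / C_VACUUM_M_PER_S
    # A ray at the critical angle travels a path longer by the factor n_core/n_clad.
    steepest_time = axial_time * (n_core / n_clad)
    return steepest_time - axial_time


if __name__ == "__main__":
    delay = modal_delay_s(1000.0, 1.48, 1.46)  # hypothetical indexes, 1 km
    print(delay * 1e9, "ns per km")
```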

FIG. 16 Fiber-optic cables are available as stepped index or graded index fibers. A. Stepped index fiber suffers from modal dispersion. B. Graded index fiber provides a higher transmission bandwidth.

Multimode graded index fiber has reduced intermodal dispersion. This is achieved by compensating for high order mode delay to ensure that these modes travel through a lower refractive index material than the low-order modes, as shown in FIG. 16B. The high-order modes travel at a greater speed than the lower-order modes, compensating for their longer path lengths. Specifically, light travels faster near the cladding, away from the center axis where the index of refraction is higher. The velocity of higher mode light traveling farther from the core more nearly equals that of lower mode light in the optically dense center. Pulse-broadening is reduced, hence the data rate can be boosted. By selecting an optimal refractive index profile, this delay can be reduced to 0.5 ns/km.

Intramodal dispersion is caused by irregularities in the index of refraction of the core and cladding. These irregularities are wavelength-dependent, thus the delay varies according to the wavelength of the light source.

Fibers are thus manufactured to operate at preferred light wavelengths.

In stepped and graded index multimode fibers, the degree of spreading is a function of cable length; the usable bandwidth is inversely proportional to distance. For example, a fiber can be specified at 500 kbps for 1 km. It could thus achieve a 500-kbps rate over 1 km or, for example, a 5-Mbps rate over 100 meters. In a multimode system, using either stepped or graded index fiber, wide core fibers carry several light waves simultaneously, often emitted from an LED source. However, dispersion and attenuation limit applications. Multimode systems are thus most useful in applications with short to medium distances and lower data rates.
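The trade between rate and distance in the example above amounts to holding the rate-length product constant. A minimal sketch, assuming that simple scaling holds over the lengths of interest (the function name is hypothetical):

```python
def max_rate_bps(rate_length_product_bps_km, length_km):
    """Highest data rate supported over a given length of multimode fiber."""
    if length_km <= 0:
        raise ValueError("length must be positive")
    return rate_length_product_bps_km / length_km


if __name__ == "__main__":
    spec = 500e3 * 1.0  # 500 kbps over 1 km, as in the text
    for km in (1.0, 0.5, 0.1):
        print(km, max_rate_bps(spec, km))
```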

Single-mode fiber was developed to eliminate modal dispersion. In single-mode systems, the diameter of the stepped index fiber core is small (perhaps 2 µm to 10 µm) and approaches the wavelength of the light source. Thus only one mode, the fundamental mode, exists in the fiber; there is only one light path, so rays travel parallel to the fiber axis. For example, a wideband 9/125-µm single-mode fiber contains a 9-µm diameter light guide inside a 125-µm cladding. Because there is only one mode, modal dispersion is eliminated. In single-mode fibers, a significant portion of the light is carried in the cladding. Single-mode systems often use laser drivers; the narrow beam of light propagates with low dispersion and attenuation, providing higher data rates and longer transmission distances. For example, high-performance digital and radio frequency (RF) applications such as CATV (cable TV) would use single-mode fiber and laser sources.

The amount of optical power loss due to absorption and scattering is specified at a fixed wavelength over a length of cable, typically 1 km, and is expressed as decibels of optical power loss per km (dB/km). For example, a 50/125-µm multimode fiber has an attenuation of 2.5 dB/km at 1300 nm, and 4 dB/km at 850 nm. (Because light is measured as power, 3 dB represents a doubling or halving of power.) Generally, a premium glass cable can have an attenuation of 0.5 dB/km, and a plastic cable can exhibit 1000 dB/km.
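A dB/km specification converts to a remaining power fraction as sketched below. The code assumes attenuation is uniform along the cable and ignores connector and splice losses; the function names are illustrative.

```python
def total_loss_db(loss_db_per_km, length_km):
    """Attenuation of a cable run, in decibels of optical power."""
    return loss_db_per_km * length_km


def remaining_power_fraction(loss_db_per_km, length_km):
    """Fraction of launched optical power that reaches the far end."""
    return 10 ** (-total_loss_db(loss_db_per_km, length_km) / 10)


if __name__ == "__main__":
    # The 50/125-um multimode fiber from the text, over a 2-km run.
    for wavelength_nm, loss in ((1300, 2.5), (850, 4.0)):
        print(wavelength_nm, remaining_power_fraction(loss, 2.0))
```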

Most fibers are best suited for operation in visible and near infrared wavelength regions. Fibers are optimized for operation in certain wavelength regions called windows where loss is minimized. Three commonly used wavelengths are approximately 850, 1300, and 1550 nm (353,000, 230,000, and 194,000 GHz, respectively).
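The frequencies quoted for the three windows follow from f = c / wavelength. The sketch below uses the vacuum speed of light, so it gives the optical carrier frequency rather than anything specific to the fiber; the function name is illustrative, and the figures in the text are rounded.

```python
C_VACUUM_M_PER_S = 299_792_458.0


def wavelength_nm_to_ghz(wavelength_nm):
    """Optical carrier frequency in GHz for a vacuum wavelength in nm."""
    return C_VACUUM_M_PER_S / (wavelength_nm * 1e-9) / 1e9


if __name__ == "__main__":
    for nm in (850, 1300, 1550):
        print(nm, round(wavelength_nm_to_ghz(nm)))
```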

Generally, 1300 nm is used for long-distance communication; small fiber diameter (less than 10 µm) and a laser source must be used. Short distances, such as LANs (local-area networks), use 850 nm; LED sources can be used. The 1550-nm wavelength is often used with wavelength multiplexers so that a 1550-nm carrier can be piggybacked on a fiber operating at 850 nm or 1300 nm, running either in a reverse direction or as additional capacity.

Single-mode systems can operate in the 1310-nm and 1550-nm wavelength ranges. Multimode systems use fiber optimized in the 800-nm to 900-nm range. Generally, multimode plastic fibers operate optimally at 650 nm. In general, light with longer wavelengths passes through fiber with less attenuation. Most fibers exhibit medium losses (3 to 5 dB/km) in the 800-nm to 900-nm range, low loss (0.5 to 1.5 dB/km) in the 1150-nm to 1350-nm region, and very low loss (less than 0.5 dB/km) at 1550 nm.

Fiber optics lends itself to time-division multiplexing, in which multiple independent signals can be transmitted simultaneously. One digital bitstream operating, for example, at 45 MHz, can be interleaved with others to achieve an overall rate of 1 GHz. This signal is transmitted along a fiber at an operating wavelength. With wavelength division multiplexing (WDM), multiple optical signals can be simultaneously conveyed on a fiber at different wavelengths. For example, transmission windows at 840, 1310, and 1550 nm could be used simultaneously.

Independent laser sources are tuned to different wavelengths and multiplexed, and the optical signal consisting of the input wavelengths is transmitted over a single fiber. At the receiving end, the wavelengths are de-multiplexed and directed to separate receivers or other fibers.

Connection and Installation

Fiber-optic interconnection provides a number of interesting challenges. Although the electrons in an electrical wire can pass through any secure mechanical splice, the light in a fiber-optic cable is more fickle through a transition. Fiber ends must be clean, planar, smooth, and touching; this is not an inconsiderable task considering that the fibers can be 10 µm in diameter. (In some cases, rounded fiber ends yield a more satisfactory transition.) Thus, the interfacing of fiber to connectors requires special consideration and tools. Fundamentally, fiber and connectors must be aligned and mechanically held together.

Fibers can be joined with a variety of mechanical splices. Generally, fiber ends are ground and polished and butted together using various devices. A V-groove strip holds fiber ends together, where they are secured with an adhesive and a metal clip. Ribbon splice and chip array splicers are used to join multi-fiber multimode fibers. A rotary splicer is used for single-mode fibers; fibers are placed in ferrules that allow the fibers to be independently positioned until they are aligned and held by adhesive.

Many mechanical splices are considered temporary, and are later replaced by permanent fusion splices; fusion splicing is preferred because it reduces connector loss. With fusion splicing, fiber ends are heated to the fiber melting point and fused together to form a continuous fiber. Specialized equipment is needed to perform the splice. Losses as low as 0.01 dB can be achieved.

Some fiber maintenance is simple; for example, to test continuity, a worker can shine a flashlight on one cable end, while another worker watches for light on the other end.

Other measures are more sophisticated. An optical time-domain reflectometer (OTDR) is used to locate the position of poor connections or a break in a very long cable run. Light pulses are directed along a cable and the OTDR measures the delay and strength of the returning pulse. With short runs, a visual fault inspection can be best; a bright visible light source is used, and the cable and connectors are examined for light leaks. An optical power meter and a light source are used to measure cable attenuation and output levels. An inspection microscope is used to examine fiber ends for scratches and contamination. Although light disperses after leaving a fiber, workers should use eye protection near lasers and fiber ends. A fiber link does not require periodic maintenance. Perhaps the primary cause of failure is a "backhoe fade," which occurs when a cable is accidentally cut during digging.
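The delay measured by an OTDR can be converted to a fault distance, because the pulse travels to the reflection point and back at the speed of light in the fiber. The following is a minimal sketch of that calculation; the group index of 1.468 is an assumed typical value for silica fiber and is not taken from the text, and the function name and the 10-µs delay are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum
GROUP_INDEX_ASSUMED = 1.468             # assumed typical value for silica fiber


def fault_distance_m(round_trip_delay_s: float,
                     group_index: float = GROUP_INDEX_ASSUMED) -> float:
    """Distance to a reflection, given the round-trip delay of the pulse."""
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / group_index
    # The pulse covers the distance twice (out and back), so halve the path.
    return speed_in_fiber * round_trip_delay_s / 2


# Hypothetical reading: the reflected pulse returns after 10 microseconds.
distance = fault_distance_m(10e-6)
print(f"Reflection located roughly {distance:.0f} m from the instrument")
```

With these assumed values, a 10-µs round trip corresponds to a reflection roughly 1 km away.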

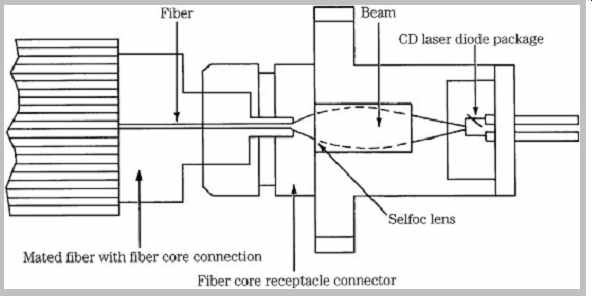

FIG. 17 Laser-diode packages consolidate a laser diode, lens, and receptacle connector.

Various connectors and couplers are used in fiber installations. The purpose of an optical connector is to mechanically align one fiber with another, or with a transmitting or receiving port. Simple connectors allow fibers to be connected and disconnected. They properly align fiber ends; however, connector loss is typically between 0.5 dB and 2.0 dB, using butt-type connectors.

Directional couplers connect three or more ports to combine or separate signals. Star couplers are used at central distribution points to distribute signals evenly to several outputs. Transmitters, such as the one shown in FIG. 17, and receivers integrate several elements into modules, simplifying installation. Subsystem integration provides data links in which the complete path from one buffer memory to another is integrated.

Design Example

Generally, the principal design criteria of a fiber-optic cable installation are not complex. Consider a system with a 300-foot fiber run from a microphone panel to a recording console. In addition, midway in the cable, a split runs to a house mixing console. Design begins by specifying the signal-to-noise (S/N) ratio of the fiber (as opposed to the S/N ratio of the audio signal) to determine the bit-error rate (BER). For example, to achieve a BER of $10^{-10}$ (1 error for every $10^{10}$ transmitted bits), an S/N of 22 dB would be needed. A multimode graded-index fiber would be appropriate in terms of bandwidth and attenuation. For example, the manufacturer's bandwidth-length product (band factor) might be 400 MHz-km. For a fiber length of 300 feet (0.09 km), the cable's optical bandwidth is the band factor divided by its length, yielding 4.4 GHz. Dividing this by 1.41 yields the cable's electrical bandwidth of 3.1 GHz.
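The cable bandwidth arithmetic in this paragraph can be sketched as follows. The function names are hypothetical; the band factor, run length, and the 1.41 divisor are the figures from the text. Because the text rounds intermediate values, the computed results differ slightly from the quoted 4.4 GHz and 3.1 GHz.

```python
def optical_bandwidth_mhz(band_factor_mhz_km: float, length_km: float) -> float:
    """Optical bandwidth of a run: band factor divided by fiber length."""
    return band_factor_mhz_km / length_km


def electrical_bandwidth_mhz(optical_bw_mhz: float) -> float:
    """Electrical bandwidth, using the 1.41 divisor given in the text."""
    return optical_bw_mhz / 1.41


band_factor = 400.0  # band factor from the example
length_km = 0.09     # 300 feet, as rounded in the text

bw_optical = optical_bandwidth_mhz(band_factor, length_km)
bw_electrical = electrical_bandwidth_mhz(bw_optical)

print(f"Optical bandwidth:    {bw_optical / 1000:.2f} GHz")
print(f"Electrical bandwidth: {bw_electrical / 1000:.2f} GHz")
```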

The total system bandwidth is determined by:

$$\frac{1}{BW_{total}^{2}} = \frac{1}{BW_{trans}^{2}} + \frac{1}{BW_{rec}^{2}} + \frac{1}{BW_{cable}^{2}}$$

where the transmitter, receiver, and cable bandwidths are all considered. If BWtrans is 40 MHz, BWrec is 44 MHz, and BWcable is 3.1 GHz, total bandwidth BWtotal is 30 MHz.
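A short sketch of this calculation, using the example's values, shows how the slowest elements dominate the result. The function name is hypothetical.

```python
import math


def total_bandwidth_mhz(*component_bw_mhz: float) -> float:
    """Combine component bandwidths: 1/BW_total^2 = sum of 1/BW_i^2."""
    inverse_square_sum = sum(1.0 / bw ** 2 for bw in component_bw_mhz)
    return 1.0 / math.sqrt(inverse_square_sum)


bw_trans = 40.0    # transmitter bandwidth, MHz
bw_rec = 44.0      # receiver bandwidth, MHz
bw_cable = 3100.0  # cable electrical bandwidth, MHz (3.1 GHz)

bw_total = total_bandwidth_mhz(bw_trans, bw_rec, bw_cable)
print(f"Total system bandwidth: {bw_total:.1f} MHz")
```

The result is about 29.6 MHz, which rounds to the 30 MHz stated above. The cable term contributes almost nothing; the transmitter and receiver set the limit.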

If the cable's attenuation is specified as 5.5 dB/km, this 0.09-km length would have 0.5 dB of attenuation. The splitter has a 3-dB loss. Total coupling loss at the connection points is 5.5 dB, and temperature and aging loss is another 6 dB. Total loss is 15 dB. The system's efficiency can be determined: The adjusted output power is the difference between the transmitter output power and the detector sensitivity. For example, selected parts might yield figures of -8 dB and -26 dB, respectively, yielding an adjusted output power of 18 dB. The received signal level would yield an acceptable S/N ratio of 56 dB. The power margin is the difference between the adjusted power output and the total loss. In this case, 18 dB - 15 dB = 3 dB, a sufficient power margin, indicating a good choice of cable, transmit and receive parts with respect to system loss.
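The loss budget above can be tallied in a few lines. This is only a sketch of the arithmetic; the variable names are hypothetical, and all values are taken from the example.

```python
# Loss contributions in dB, from the design example.
cable_loss = 5.5 * 0.09  # 5.5 dB/km over 0.09 km, about 0.5 dB
losses_db = {
    "cable": cable_loss,
    "splitter": 3.0,
    "coupling": 5.5,
    "temperature and aging": 6.0,
}
total_loss = sum(losses_db.values())

transmitter_output = -8.0     # transmitter output power figure from the text
detector_sensitivity = -26.0  # detector sensitivity figure from the text

# Adjusted output power: transmitter output minus detector sensitivity.
adjusted_output = transmitter_output - detector_sensitivity

# Power margin: adjusted output power minus total loss.
power_margin = adjusted_output - total_loss

print(f"Total loss:      {total_loss:.1f} dB")
print(f"Adjusted output: {adjusted_output:.1f} dB")
print(f"Power margin:    {power_margin:.1f} dB")
```

A positive margin indicates that the received level stays above the detector sensitivity even after all listed losses.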

As with any technology involving data protocol, standards are required. In the case of fiber optics, Fiber Distributed Data Interface (FDDI) and FDDI II are two standards governing fiber-optic data communications networks. They interconnect processors and can form the basis for a high-speed network, for example, linking workstations and a file server. FDDI uses LED drivers transmitting at a nominal wavelength of 1310 nm over a multimode fiber with a 62.5-µm core and 125-µm cladding, with a numerical aperture of 0.275. Connections are made with a dual-fiber cable (separate send and receive) using a polarized duplex connector. FDDI offers data rates of 100 Mbps with up to 500 station connections. The FDDI II standard adds greater flexibility to fiber-optic networking. For example, it allows a time-division multiplexed mode providing individual routes with a variety of data rates.

The Synchronous Optical NETwork (SONET) provides wideband optical transmission for commercial users. The lowest bandwidth optical SONET protocol is OC-1 with a bandwidth of 51.84 Mbps. A 45-Mbps T-3 signal can be converted to an STS-1 (synchronous transport signal level 1) signal for transmission over an OC-1 cable. Other protocols include OC-3 (155.52 Mbps) for uncompressed NTSC digital video, and OC-12 (622.08 Mbps) for high resolution television. SONET is defined in the ANSI T1.105-1991 standard. Telecommunications is discussed in more detail in Section 15.
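The OC rates quoted above are integer multiples of the OC-1 rate, which a brief sketch can confirm. The function name is hypothetical.

```python
OC1_RATE_MBPS = 51.84  # base SONET rate (OC-1)


def oc_rate_mbps(level: int) -> float:
    """Line rate of SONET level OC-n, taken as n times the OC-1 rate."""
    return level * OC1_RATE_MBPS


for level in (1, 3, 12):
    print(f"OC-{level}: {oc_rate_mbps(level):.2f} Mbps")
```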